Google needs to learn to read :-)…

John Lawler writes:

I recently had reason to ask the following question of Google

https://www.google.com/search?q=how+many+words+does+the+average+person+say+in+a+day

and the result turned up an old LL post, which is great, except the selection algorithm picked the wrong number as the answer, and even quoted the post you were complaining about as if it were true.

This should probably be brought to someone's attention, but it seems, what with the vast amounts of irony, hyperbole, bullshit, lying, and fact-checking on the net, this is not an isolated problem.

Saying the quiet part loud

Sam Dorman, "AOC says Trump 'relished' rally chant about Omar, doesn't want to be president anymore", Fox News 7/20/2019:

"Once you start telling American citizens to 'go back to your own countries,' this tells you that this President's policies are not about immigration, it's about ethnicity and racism," Ocasio-Cortez went on to applause from the town hall crowd. "And his biggest mistake is that he said the quiet part loud. That was his biggest mistake because we know that he's been thinking this the entire time."

Ambiguous initialisms

Menachem Wecker, "One NRA fights for guns. One for restaurants. Yes, D.C. has abbreviation overload.", WaPo 7/15/2019:

It was the malapropism heard around certain corners of social media. When Rep. Katie Porter (D-Calif.) asked Ben Carson recently about REOs — real estate owned properties — the housing and urban development secretary appeared to hear a reference to cookies, i.e., Oreos. While the incident quickly became a referendum on Carson's knowledge of housing policy — he would later dismiss the episode as gotcha politics, telling ABC News, "Give me a break," perhaps a subconscious Kit Kat allusion — it did point to a frequently overlooked hazard of life in Washington: Acronyms and other abbreviations, a second language for many wonks, can be confusing, problematic or simply embarrassing.

Chicken baby

Just to show you how up to date Language Log can be, in this post we'll be talking about a neologism that is only a few weeks old in China. The term is "jīwá 鸡娃", which literally means "chicken baby / child / doll".

The term surfaced abruptly and began circulating virally on social media, following a heated discussion over two articles on K-12 education (the links are here and here). The articles describe the fierce competition among parents in the Haidian and Shunyi districts of Beijing municipality, respectively. Haidian is a large district in the northwestern part of Beijing with many famous tourist attractions, outstanding universities, and top IT firms. Shunyi district is in the northeastern part of Beijing. Although it is not as large and powerful as Haidian, it is also considered a very desirable place to live because of its posh villas, easy access to the international airport, and China's largest international exhibition center, but above all, from a parent's point of view, because of some of the best private and international schools in the country.

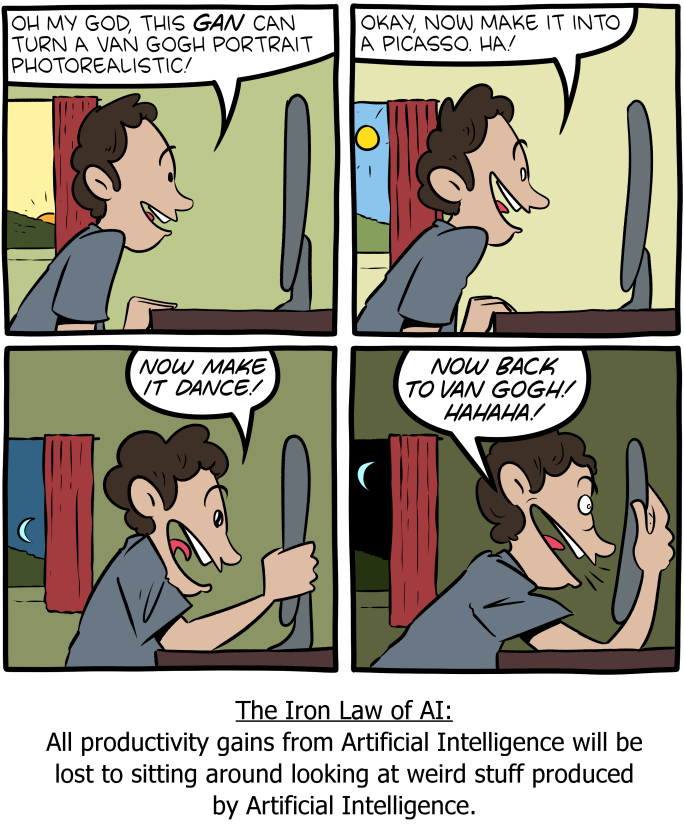

The Iron Law of AI

Today's SMBC:

Mouseover title: "The other day I was really freaked out that a computer could generate faces of people who DON'T REALLY EXIST, only to later realize painters have been doing this for several millenia."

Inside out

Brian Costa, "Rory McIlroy’s British Open Chances Collapse on the First Hole", WSJ 7/18/2019 [emphasis added]:

Rory McIlroy stepped into the first tee box at Royal Portrush on Thursday morning and waved to a roaring crowd. He knew it would be a once-in-a-lifetime experience: his opening tee shot at the first British Open held in his native country in more than half a century. […]

This is the same course where, as a 16-year-old amateur in 2005, he shot a 61, which remains the course record. This is a tournament in which McIlroy has not failed to finish outside the top five since 2013 (he missed it with an injury in 2015). This is a player who, as measured by strokes gained—which compares a player’s score to the field average—has been the best on the PGA Tour this season.

Ich bin ein Hongkonger

The genesis of this post lies in the following newspaper headline:

"Ich Bin Ein Hong Konger: How Hong Kong is turning into the West Berlin of the quasi-cold war between the West and China", by Melinda Liu, Foreign Policy (7/16/19)

Every historically literate person immediately recognizes the allusion to John F. Kennedy's famous speech in West Berlin on June 26, 1963:

Speaking from a platform erected on the steps of Rathaus Schöneberg for an audience of 450,000, Kennedy said,

Two thousand years ago, the proudest boast was civis romanus sum ["I am a Roman citizen"]. Today, in the world of freedom, the proudest boast is "Ich bin ein Berliner!"… All free men, wherever they may live, are citizens of Berlin, and therefore, as a free man, I take pride in the words "Ich bin ein Berliner!"

ICPhS 1938

For those interested in the history of concepts and techniques in phonetics, I've scanned the Proceedings of the Third International Congress of Phonetic Sciences (1938), all 550-odd pages of it. Warning: 23 MB .pdf file.

Another day, another misnegation

Thomas Friedman, "‘Trump’s Going to Get Re-elected, Isn’t He?’", NYT 7/16/2019 [emphasis added]:

I’m struck at how many people have come up to me recently and said, “Trump’s going to get re-elected, isn’t he?” And in each case, when I drilled down to ask why, I bumped into the Democratic presidential debates in June. I think a lot of Americans were shocked by some of the things they heard there. I was. […]

But I’m disturbed that so few of the Democratic candidates don’t also talk about growing the pie, let alone celebrating American entrepreneurs and risk-takers.

German salty pig hand

Jeff DeMarco writes:

"Saw this on Facebook. Google Translate gives 'German salty pig hand' which I presume refers to trotters. Not sure how they got sexual misconduct!"
