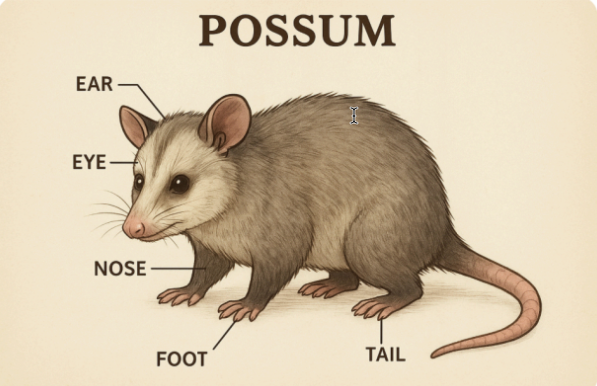

More of GPT-5's absurd image labelling

GPT-5 is impressively good at some things (see "No X is better than Y", 8/14/2025, or "GPT-5 can parse headlines!", 9/7/2025), but shockingly bad at others. And I'm not talking about "hallucinations", a term used for plausible but false facts or references. Such mistakes remain a problem, but not every answer is a hallucination. Adding labels to images that it creates, on the other hand, remains reliably and absurdly bad.
