GPT-5 can parse headlines!

At least sometimes…

Philip Taylor sent a link to this Guardian article "West Point cancels ceremony to honor Tom Hanks as ‘outstanding US citizen’", with the comment

It was only on reading the article that I realised that West Point was/were not cancelling the ceremony in order to honour Tom Hanks (as I had originally thought/believed) but were in fact cancelling a ceremony intended to honour Tom Hanks …

I've been meaning to test GPT-5's parsing ability ever since I discovered that it can, surprisingly, represent semantic scope ambiguities in correct predicate logic (see "No X is better than Y", 8/13/2025, and the details of its analyses).

So I simplified the Guardian's headline slightly, in order to make the analyses fit the page better, and tried it.

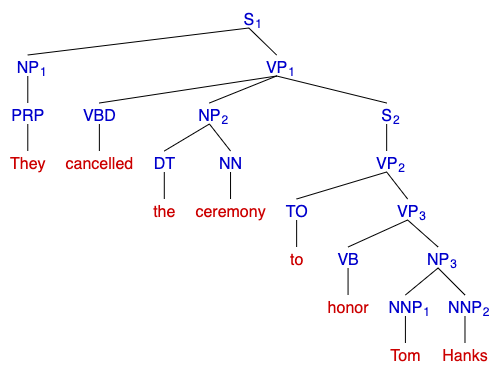

Me: Draw a parse tree for the sentence "They cancelled the ceremony to honor Tom Hanks"

GPT-5:

Thought for 25s Great sentence—there’s a classic attachment ambiguity.

Here are two clean parse trees (Penn-Treebank style with ASCII):

#1 NP-attachment (most natural: the ceremony’s purpose is to honor Tom Hanks)

    S
    ├── NP
    │   └── PRP They
    └── VP
        ├── VBD cancelled
        └── NP
            ├── DT the
            ├── NN ceremony
            └── S (non-finite modifier of N; “reduced relative/purpose”)
                └── VP
                    ├── TO to
                    └── VP
                        ├── VB honor
                        └── NP
                            ├── NNP Tom
                            └── NNP Hanks

Bracketed:

    (S
      (NP (PRP They))
      (VP (VBD cancelled)
        (NP (DT the) (NN ceremony)
          (S (VP (TO to) (VP (VB honor) (NP (NNP Tom) (NNP Hanks))))))))

#2 VP-attachment (less plausible meaning: they cancelled, in order to honor Tom Hanks)

    S
    ├── NP
    │   └── PRP They
    └── VP
        ├── VBD cancelled
        ├── NP
        │   ├── DT the
        │   └── NN ceremony
        └── S (purpose clause modifying the VP)
            └── VP
                ├── TO to
                └── VP
                    ├── VB honor
                    └── NP
                        ├── NNP Tom
                        └── NNP Hanks

Bracketed:

    (S
      (NP (PRP They))
      (VP (VBD cancelled)
        (NP (DT the) (NN ceremony))
        (S (VP (TO to) (VP (VB honor) (NP (NNP Tom) (NNP Hanks)))))))

Entirely correct! (or at least close enough…)
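For readers who want to poke at the two bracketings, here is a minimal Python sketch (ours, not part of GPT-5's answer) that reads Penn-Treebank-style brackets into nested tuples and reports which node directly dominates the infinitival "to honor Tom Hanks" clause. The helper names are hypothetical, and the rule for spotting that clause (an S whose first child is a VP beginning with TO) is an assumption that fits these two trees, not a general treatment of purpose clauses.

```python
import re

# The two bracketings from the post, joined onto single lines.
NP_ATTACH = ("(S (NP (PRP They)) (VP (VBD cancelled) (NP (DT the) (NN ceremony) "
             "(S (VP (TO to) (VP (VB honor) (NP (NNP Tom) (NNP Hanks))))))))")
VP_ATTACH = ("(S (NP (PRP They)) (VP (VBD cancelled) (NP (DT the) (NN ceremony)) "
             "(S (VP (TO to) (VP (VB honor) (NP (NNP Tom) (NNP Hanks)))))))")


def tokenize(text):
    """Split a bracketing into '(', ')' and label/word tokens."""
    return re.findall(r"\(|\)|[^\s()]+", text)


def parse(tokens, pos):
    """Parse the node opening at tokens[pos]; return ((label, children), next_pos).

    Leaf words are kept as plain strings. A missing ')' surfaces as IndexError.
    """
    if tokens[pos] != "(":
        raise ValueError(f"expected '(' at token {pos}")
    label, pos = tokens[pos + 1], pos + 2
    children = []
    while tokens[pos] != ")":
        if tokens[pos] == "(":
            child, pos = parse(tokens, pos)
        else:
            child, pos = tokens[pos], pos + 1
        children.append(child)
    return (label, children), pos + 1


def read_tree(text):
    """Read one complete bracketed tree; reject trailing tokens."""
    tokens = tokenize(text)
    tree, end = parse(tokens, 0)
    if end != len(tokens):
        raise ValueError("extra tokens after the closing bracket")
    return tree


def is_infinitival_s(node):
    """ASSUMPTION: an S whose first child is a VP whose first child is TO."""
    if isinstance(node, str) or node[0] != "S" or not node[1]:
        return False
    vp = node[1][0]
    return (not isinstance(vp, str) and vp[0] == "VP" and bool(vp[1])
            and not isinstance(vp[1][0], str) and vp[1][0][0] == "TO")


def attachment_site(node, parent_label=None):
    """Label of the node directly dominating the first infinitival S, or None."""
    if isinstance(node, str):
        return None
    if parent_label is not None and is_infinitival_s(node):
        return parent_label
    for child in node[1]:
        site = attachment_site(child, node[0])
        if site is not None:
            return site
    return None


def leaves(node):
    """The words of a tree, left to right."""
    if isinstance(node, str):
        return [node]
    return [word for child in node[1] for word in leaves(child)]


if __name__ == "__main__":
    for name, text in [("NP_ATTACH", NP_ATTACH), ("VP_ATTACH", VP_ATTACH)]:
        tree = read_tree(text)
        print(name, attachment_site(tree), " ".join(leaves(tree)))
```

Both trees yield the same word string; the only thing that changes is whether the infinitival S hangs under the object NP or under the VP. If NLTK is available, `nltk.Tree.fromstring` does the same reading step; the hand-rolled parser just keeps the sketch free of dependencies.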

See the full conversation, where I go on to ask for dependency-grammar versions, which GPT-5 gets formally correct but is not very good at drawing. I didn't try to get GPT-5 to draw pretty syntax trees in place of the ASCII and bracketed versions, so for those who might care, I drew them myself with jsSyntaxTree. The NP-attachment version:

And the VP-attachment version:

So it seems that GPT-5 can analyze (some instances of) syntactic as well as semantic ambiguity, and can use Penn Treebank and Universal Dependencies representations correctly to describe the syntactic alternatives. This deserves more investigation than I have time for this morning, including exploration of two obvious questions:

- Is GPT-5 recognizing when syntactic and logical analyses are needed, and turning the task over to special "experts"? If so, how do those subsystems work?

- Does GPT-5 use such analyses in its own training and question-answering? If so, that's quite different from just predicting the next token in a text sequence. (Though thinking for 25 seconds about the analysis of one sentence suggests that it's probably not yet doing this to much of its training text…)
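GPT-5's dependency output isn't reproduced here, but the Universal Dependencies side of the comparison can be sketched by hand. The Python below encodes the two readings as (head, relation) pairs, with heads as 1-based word indices and 0 for the root. The labels reflect our own reading of the UD v2 guidelines and are assumptions: "to" as `mark` on the verb, the name as `flat` headed by its first word, an infinitive modifying a noun as `acl`, and one modifying a verb as `advcl`. Individual English treebanks may annotate some of these differently.

```python
# Tokens of the simplified headline, numbered from 1 as in CoNLL-U.
WORDS = ["They", "cancelled", "the", "ceremony", "to", "honor", "Tom", "Hanks"]

# (head, relation) for each word, in order; head 0 marks the root.
# ASSUMPTION: UD v2-style labels as described in the lead-in.
NP_DEPS = [(2, "nsubj"), (0, "root"), (4, "det"), (2, "obj"),
           (6, "mark"), (4, "acl"), (6, "obj"), (7, "flat")]
VP_DEPS = [(2, "nsubj"), (0, "root"), (4, "det"), (2, "obj"),
           (6, "mark"), (2, "advcl"), (6, "obj"), (7, "flat")]


def is_tree(arcs):
    """True if there is exactly one root and every word's head chain reaches it."""
    if sum(1 for head, _ in arcs if head == 0) != 1:
        return False
    for start in range(1, len(arcs) + 1):
        seen, node = set(), start
        while node != 0:
            if node in seen:
                return False  # cycle: this chain never reaches the root
            seen.add(node)
            node = arcs[node - 1][0]
    return True


def differences(a, b):
    """Words whose (head, relation) differs between two analyses."""
    return [(WORDS[i], a[i], b[i]) for i in range(len(WORDS)) if a[i] != b[i]]


def conllu_rows(arcs):
    """ID, FORM, HEAD, DEPREL columns only: a CoNLL-U-like subset, not valid CoNLL-U."""
    return "\n".join(f"{i}\t{w}\t{h}\t{r}"
                     for i, (w, (h, r)) in enumerate(zip(WORDS, arcs), start=1))


if __name__ == "__main__":
    print(conllu_rows(NP_DEPS))
    print(differences(NP_DEPS, VP_DEPS))
```

In this representation the whole ambiguity reduces to a single arc: the head of "honor" is either "ceremony" (`acl`) or "cancelled" (`advcl`), while every other arc is shared.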

Update — for more on the history and the implications, see Gaspar Begus et al., "Large linguistic models: Investigating LLMs’ metalinguistic abilities", IEEE Transactions on Artificial Intelligence, 6/3/2025.

David Morris said,

September 7, 2025 @ 7:27 am

On my screen, the first line break is after 'to', but I can't decide whether that suggests one reading or the other.

West Point cancels ceremony to

honor Tom Hanks [more likely the 'intended to' reading?]

West Point cancels ceremony

to honor Tom Hanks [more likely the 'in order to' reading?]

Sometimes in legal editing, I insert 'in order' to disambiguate.

David Morris said,

September 7, 2025 @ 7:48 am

Maybe I should have written "I insert 'in order' in order to disambiguate" in order to disambiguate.

Nat J said,

September 7, 2025 @ 5:23 pm

Just out of curiosity, is the label "purpose clause modifying the VP" technically correct? I would have thought that "to honor Tom Hanks" can't be classified as a clause. Or am I misunderstanding the analysis?

Michael Vnuk said,

September 7, 2025 @ 6:43 pm

I know that everyone is different, but I'm a little surprised that Philip Taylor had to read the article to understand the heading. If the heading stopped after 'Hanks', then the ambiguity would be active, but it's the 'as "outstanding US citizen"' that resolves the ambiguity. At least, it does for me, although I don't think I even spotted the ambiguity on my first reading.

Philip Taylor said,

September 8, 2025 @ 2:07 am

Oddly enough, Michael, if the headline were to have omitted the as ‘outstanding US citizen’ part, then I am reasonably confident that I would not have misunderstood it in the first place. So, as you quite correctly observe, "everyone is different".

Maryellen MacDonald said,

September 10, 2025 @ 6:34 pm

There are a number of studies on variability in people's initial interpretation of attachment ambiguities. Interpretation varies with the language that someone speaks, what they've heard or read recently, the overall amount they read, and so on. I'm not providing a list of articles here, but asking ChatGPT provides an ok-ish list; it gets tripped up by the fact that there's some variation in the use of "attachment ambiguity" in the literature, and particularly in article titles.