More of GPT-5's absurd image labelling

GPT-5 is impressively good at some things (see "No X is better than Y", 8/14/2025, or "GPT-5 can parse headlines!", 9/7/2025), but shockingly bad at others. And I'm not talking about "hallucinations", which is a term used for plausible but false facts or references — such mistakes remain a problem, but every answer is not a hallucination. Adding labels to images that it creates, on the other hand, remains reliably and absurdly bad.

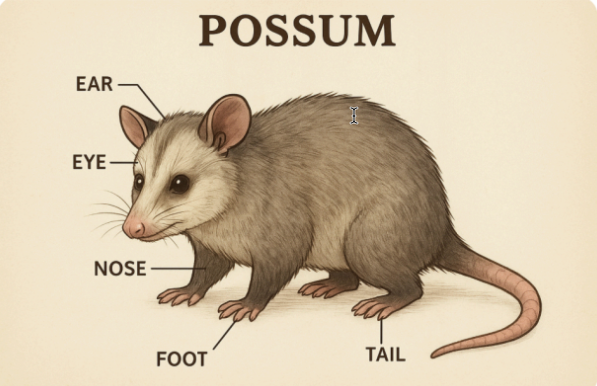

The picture above comes from an article by Gary Smith: "What Kind of a “PhD-level Expert” Is ChatGPT 5.0? I Tested It." The prompt was “Please draw me a picture of a possum with 5 body parts labeled.” Smith's evaluation:

GPT 5.0 generated a reasonable rendition of a possum but four of the five labeled body parts were incorrect. The ear and eye labels were at least in the vicinity but the nose label pointed to a leg and the tail label pointed to a foot. So much for PhD-level expertise.

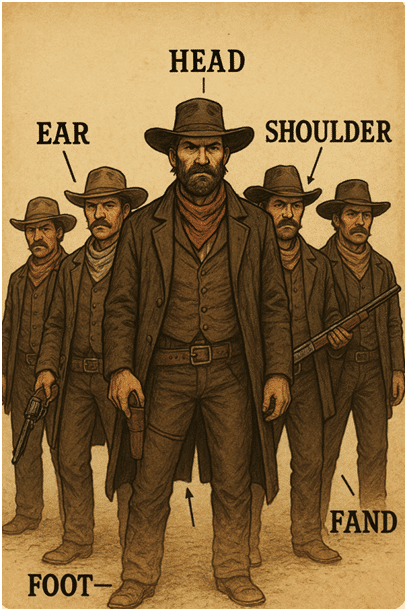

Smith attempted a possum-drawing replication in a later article, but typed "posse" by mistake instead, and got this:

His attempts to get GPT-5 to correct the drawing made things worse and worse.

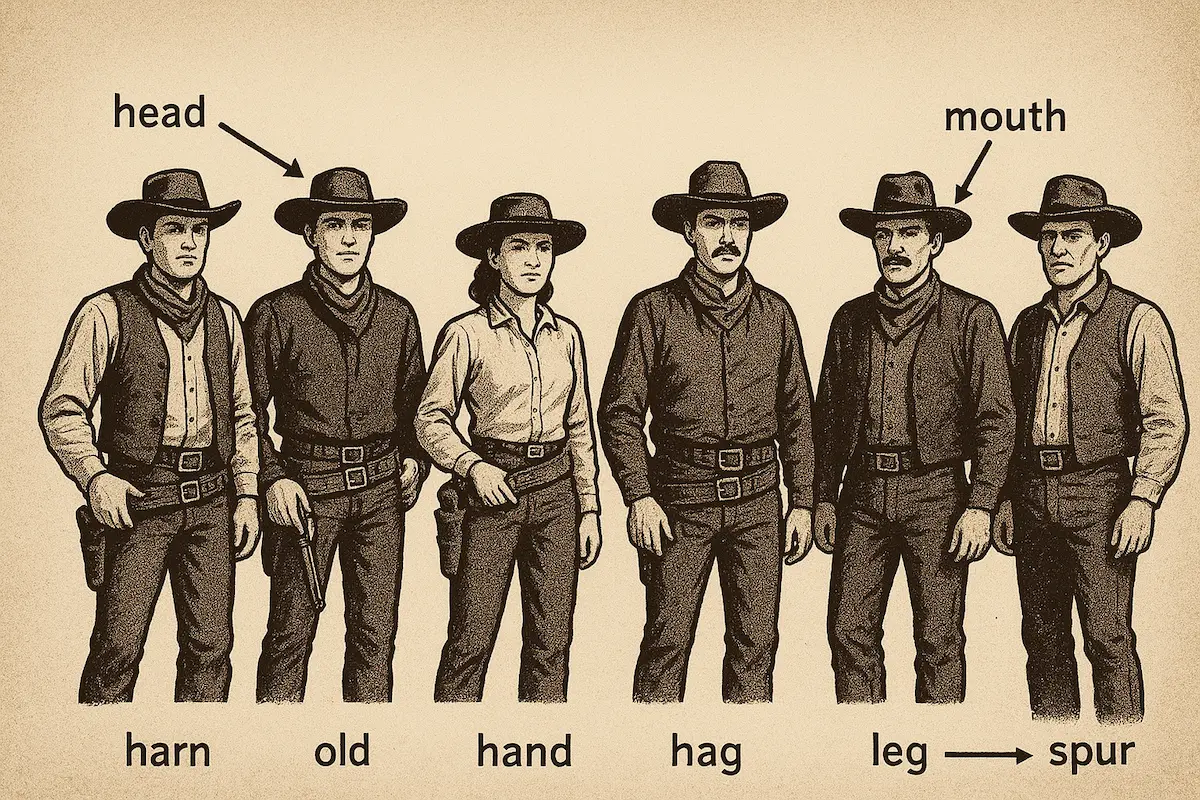

Noor Al-Sibai tried for a replication by asking GPT-5 to provide an image of "a posse with six body parts labeled", and got this:

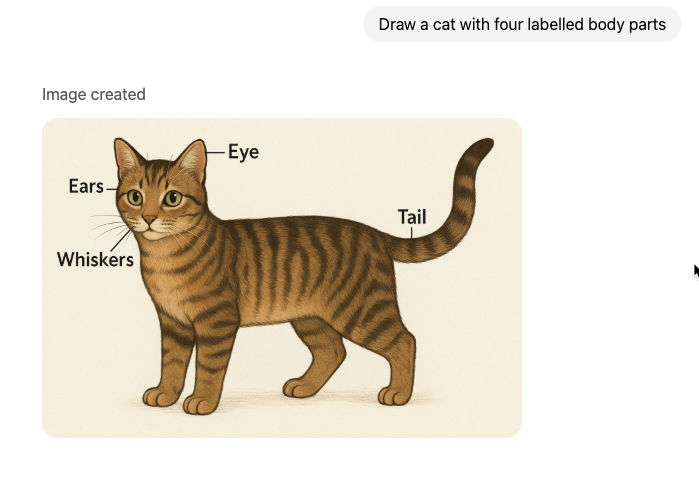

I asked GPT-5 to "Draw a cat with four labelled body parts":

And as a closer, to "Draw a human hand with the palm, thumb, wrist, and pointer finger labelled":

So the results are consistent: good-quality images with absurdly weird labelling.

Two obvious questions:

- Why does OpenAI allow GPT-5 to continue to embarrass itself (and them) this way? Why not just refuse, politely, to create labelled images?

- Does GPT-5 have similar failures when asked to label images that it doesn't create? Or maybe even worse failures? I expect so, but don't have time this morning to check.

Update — I was wrong about labelling an uploaded image. Just one example, but it did OK:

ToDo: What's the difference?

Jerry Packard said,

September 15, 2025 @ 6:18 am

With all due respect, if you asked your garden-variety psych undergrad to do a forced-choice drawing or picture of a posse with six body parts labeled, what would you get?

Mark Liberman said,

September 15, 2025 @ 7:34 am

@Jerry Packard "if you asked your garden-variety psych undergrad to do a forced-choice drawing or picture of a posse with six body parts labeled, what would you get?"

I'd expect a very bad drawing with correct body-part labelling — just the opposite of GPT-5's answers.

A better comparison would be to ask each subject to label an existing image.

bks said,

September 15, 2025 @ 7:50 am

Interesting that Smith asks for five body parts labelled and gets a five-member posse, while Noor Al-Sibai asks for six body parts and gets a six-member posse. Our host did not get four cats, though. (Maybe asking for a pride of lions with four body parts labelled?)

(Also, how many L's in labeled?)

stephen said,

September 15, 2025 @ 9:16 am

You did not tell it to label those parts *correctly*.

Robert Coren said,

September 15, 2025 @ 9:36 am

bks asks: "how many L's in labeled?"

Two or three, writer's choice. Officially, I believe, two is standard in the US, three in the Commonwealth.

David L said,

September 15, 2025 @ 9:43 am

The five-member posse appears to be a set of identical quintuplets.

And in the six-member posse, one person is (I think) female, but she's not the one labeled 'hag.'

Kenny Easwaran said,

September 15, 2025 @ 1:40 pm

Image labeling is one of the first tasks that neural nets learned to do well. If you want to count properly identifying handwritten digits, they've been doing it at least since the 1990s, but even for the more sophisticated task of identifying dog breeds and types of ships, among hundreds of other classes of objects, they've been doing it since 2012, and this success is what jump-started the entire modern outpouring of AI models.

Generating images is the task of a much newer type of system, that's really only had significant success in the past four or five years, and for much of that time, they were really bad even at naturalistic features like hands and fingers.

More recent systems have gotten good at generating arbitrary images that look like natural scenes, possibly with some sort of photographic or painterly style imposed on them. But they've never gotten good at anything very structured, like diagrams or schematics or text. Even just getting numerical and spatial relationships among elements of the image to match the relationships in the text, rather than just producing a plausible image that has the right elements, is difficult – I just tried three models on "a photograph of a cake eating a cat", and while ChatGPT and Gemini took some time and got it right, Midjourney showed the more natural image of a cat eating a cake.

GPT-5 is supposed to be better at tool selection than previous ChatGPT models (they've no longer given the user the choice of whether to turn on the "reasoning model" like o3 and o4, or the base model like 4o, and instead have an automated system deciding which to use) but it's still apparently not very good. It would do great at these "labeled images" requests if it generated an image, then generated a list of body parts, and then asked the image labeler to label those body parts in the image. And similarly, it would do great at mathematical requests if it always remembered to call the calculator, or write a little python script to do the calculation. But it often just tries to do these things "off the top of its head" and fails humorously.

Y said,

September 15, 2025 @ 1:50 pm

"I was wrong about labelling an uploaded image. Just one example, but it did OK"

It didn't do OK. In terms of sum-of-normalized-squares of distances from label to object, it did better than the previous ones, but it still labeled an empty spot as a hand. It still does not understand (i.e. have an internal representation of) body parts or labeling.

"Why not just refuse, politely, to create labelled images?"

I would guess that it cannot tell what it knows from what it guesses. The programmers can keep adding hand-coded special cases, but that would never end.
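As a rough illustration of the "sum-of-normalized-squares" measure mentioned in this comment, here is a minimal Python sketch. The comment does not say what the distances are normalized by, so dividing by the image diagonal is an assumption, as are the function name, the dictionary layout, and the idea that each label's position is the point where its leader line ends.

```python
import math


def label_placement_error(label_points, target_points, image_diagonal):
    """Sum of squared label-to-target distances, each divided by the image diagonal.

    label_points:  {part_name: (x, y)} where each label's pointer ends (assumed input)
    target_points: {part_name: (x, y)} where the body part actually is (assumed input)
    image_diagonal: normalizing length in the same units (an assumed choice)
    """
    total = 0.0
    for name, (lx, ly) in label_points.items():
        tx, ty = target_points[name]
        # Normalized Euclidean distance between pointer tip and true location
        d = math.hypot(lx - tx, ly - ty) / image_diagonal
        total += d * d
    return total
```

A score of 0 would mean every pointer lands exactly on its part; larger values mean worse placement. The measure says nothing about whether the pointer lands on any object at all, which is the failure described above for the hand label.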

David P said,

September 15, 2025 @ 3:56 pm

The other day, I tried on ChatGPT the "Draw a picture of a possum with five labeled body parts," and, like the original poster, either mistyped 'possum' or was autocorrected, and got a nice picture of a five-male posse with grossly misplaced labels (for hat, torso, belt, and boots). So I prompted, "I meant a possum, not a posse," and got a nice picture of a possum wearing a hat. There were four labels (hat, torso, face, and boots), all but one of them apparently inherited from the preceding response, and only one of them (the new one, face) correctly placed. The possum was wearing a hat, which was of the same style as the five identical hats worn by the posse. It was not, however, wearing boots.

David W said,

September 15, 2025 @ 8:36 pm

The possum labels are all in reasonably correct places, but the lines connecting the labels to some of the body parts are wrong. It looks like these lines in the possum, cat, and hand drawings are constrained to multiples of 45 degrees from vertical.
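The 45-degree observation in this comment could be checked mechanically if one had the endpoints of each leader line. The sketch below is only an illustration: the function names, the pixel-coordinate inputs, and the 2-degree tolerance are all assumptions, and extracting the endpoints from an image is not covered.

```python
import math


def angle_from_vertical(start, end):
    """Angle of the segment start->end in degrees from vertical, folded into [0, 180)."""
    dx = end[0] - start[0]
    dy = end[1] - start[1]
    # atan2(dx, dy) measures from the vertical axis; % 180 ignores line direction
    return math.degrees(math.atan2(dx, dy)) % 180.0


def is_multiple_of_45(start, end, tolerance_deg=2.0):
    """True if the segment lies within tolerance of 0, 45, 90, or 135 degrees from vertical."""
    angle = angle_from_vertical(start, end)
    nearest = round(angle / 45.0) * 45.0
    return abs(angle - nearest) <= tolerance_deg
```

If every leader line in a drawing passes this check, that would be consistent with the lines being snapped to a small set of directions rather than aimed at the labeled part.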

Richard Rubenstein said,

September 15, 2025 @ 9:17 pm

This is unrelated to the issues here, but I note that the posse shares a persistent issue I've encountered with previous versions of chat-GPT: it consistently draws human figures with legs that are too short and stubby. I can tell it to lengthen the legs till I'm blue in the face, and it just doesn't seem capable of it. I wonder whether it's somehow an artifact of image training data being full of pictures of people with their feet out of frame…

Robot Therapist said,

September 16, 2025 @ 2:47 am

"Why not just refuse, politely, to create labelled images?"

"I would guess that it cannot tell what it knows from what it guesses."

Yes. It always sounds so … confident.

John Busch said,

September 16, 2025 @ 3:51 am

Artificial Intelligence is neither artificial nor intelligent. It is not artificial because it is built on a database of actual human language. It is not intelligent because it has no consciousness. It has no judgement or ethics. It does not know right from wrong, truth from falsehood. It isn't good with images because it cannot see.

Philip Taylor said,

September 16, 2025 @ 5:27 am

Much as I agree with all of your analysis, John, ChatGPT did remarkably well with the following prompt : "draw a possum with an arrow indicating the exact centre of the possum's left eye". Results at https://chatgpt.com/s/m_68c93b794bb48191828e5230d4f94a99

Mark Liberman said,

September 16, 2025 @ 9:49 am

@Kenny Easwaran: "Image labeling is one of the first tasks that neural nets learned to do well. If you want to count properly identifying handwritten digits, they've been doing it at least since the 1990s, but even for the more sophisticated task of identifying dog breeds and types of ships, among hundreds of other classes of objects, they've been doing it since 2012, and this success is what jump-started the entire modern outpouring of AI models"

First, those tasks are not image (part) labelling, they're (whole) image classification (sometimes scornfully referred to as "cat detection"…) And second, those tasks involved classification of provided images, not labeling of parts of an image generated by the system. Which is apparently quite a bit harder, at least for GPT-5.

Kris said,

September 16, 2025 @ 4:23 pm

GPT and its ilk are large language models. Images are not part of their training and in fact LLMs do not even generate the images that you are getting back from them. They are making calls to large nets trained for image generation (that is, entirely separate and unrelated models), receiving those images back, and then trying to annotate them as you request. Which of course (at present) they are bad at, since nothing in their corpus shows them how to do this, except for the emergent learning of word meanings based on the contexts in which those words tend to be found within text corpora.

The neural nets described by Kenny are going to be good at recognizing that the word "hand" tends to appear in the middle of an image, the words "eye," "ear," "head," "nose" etc. tend to appear near the top of the image, "foot" near the bottom of the image (or the top/middle/bottom of whatever object is prominent in the image). That is, if one wanted to train those crude models for generic part labeling. But that's not what was done in those classical (small network) models – they were specially trained to differentiate images of dogs into breeds, images of handwritten numbers into the digits they represent, or images of faces into their constituent parts.

Why would we expect a language model to be able to interpret an image in any way whatsoever? Whatever the model appears to know about an image most likely comes from the prompt history and the image metadata, not the actual image at all.

All these "why do LLMs make basic mistake X" questions stem from a misunderstanding of what these models are and do. Not surprising (from laypeople) given the hype surrounding them and their astounding performance at what they WERE intended to do, and even at many tasks they were not envisioned for but perform remarkably on anyway. To dumb it way down, the questions tend to be of the sort: why is my calculator so bad at spellcheck?

Chas Belov said,

September 28, 2025 @ 3:51 pm

@Kris: The problem is not that LLMs don't do everything; it's that when you ask one to do something it doesn't do, it tries to do it anyway instead of responding "I'm not good at that sort of thing."