"Finding a voice"

An excellent article by Lane Greene: "Language: Finding a voice", The Economist 1/5/2017.

At the beginning of 2016, Jack Grieve shared the first iteration of the Word Mapper app he had developed with Andrea Nini and Diansheng Guo, which let users map the relative frequencies of the 10,000 most common words in a big Twitter-based corpus covering the contiguous United States. (See: "Geolexicography," "Totally Word Mapper.") Now, as the year comes to a close, Quartz is hosting a bigger, better version of the app, which covers 97,246 words (all occurring at least 500 times in the corpus). It's appropriately dubbed "The great American word mapper," and it's hella fun (or wicked fun, if you prefer).
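The basic quantity behind a word map like this can be sketched in a few lines. This is a minimal illustration, not Grieve, Nini and Guo's code: it assumes the corpus arrives as hypothetical `(region, text)` pairs, tokenizes naively on whitespace, keeps only words that occur at least `min_count` times overall (echoing the 500-occurrence cutoff), and reports each word's frequency per million tokens in each region.

```python
from collections import Counter, defaultdict


def relative_frequencies(posts, min_count=500):
    """Per-region frequency (per million tokens) of every word seen at least
    `min_count` times in the whole corpus.

    `posts` is an iterable of (region, text) pairs; this input format is a
    hypothetical stand-in for a geotagged Twitter corpus.
    """
    region_counts = defaultdict(Counter)  # region -> word counts
    total = Counter()                     # corpus-wide word counts
    for region, text in posts:
        tokens = text.lower().split()     # naive whitespace tokenization
        region_counts[region].update(tokens)
        total.update(tokens)

    vocab = {w for w, c in total.items() if c >= min_count}
    freqs = {}
    for region, counts in region_counts.items():
        n_tokens = sum(counts.values())   # normalize by all tokens in the region
        freqs[region] = {
            w: 1_000_000 * counts[w] / n_tokens for w in vocab if counts[w]
        }
    return freqs


# Toy usage with a low threshold:
maps = relative_frequencies(
    [("Bay Area", "hella fun"), ("Boston", "wicked fun")], min_count=1
)
print(maps["Bay Area"]["hella"])  # 500000.0
```

Drawing a map then amounts to shading each region by one word's value; any spatial smoothing a real map might apply is not attempted here.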

Some misc word maps made with the word mapper https://t.co/R2wCfnsegx pic.twitter.com/6jiDdJN5QL

— nikhil sonnad (@nkl) December 15, 2016

This is a reality check on the current state of automatic speech recognition (ASR) algorithms. I took the 186-word passage by Scottie Nell Hughes discussed in yesterday's post, and submitted it to two different Big-Company ASR interfaces, with amusing results. I'll be interested to see whether other systems can do better.

Today at ISCSLP2016, Xuedong Huang announced a striking result from Microsoft Research. A paper documenting it is up on arXiv.org — W. Xiong, J. Droppo, X. Huang, F. Seide, M. Seltzer, A. Stolcke, D. Yu, G. Zweig, "Achieving Human Parity in Conversational Speech Recognition":

Conversational speech recognition has served as a flagship speech recognition task since the release of the DARPA Switchboard corpus in the 1990s. In this paper, we measure the human error rate on the widely used NIST 2000 test set, and find that our latest automated system has reached human parity. The error rate of professional transcriptionists is 5.9% for the Switchboard portion of the data, in which newly acquainted pairs of people discuss an assigned topic, and 11.3% for the CallHome portion where friends and family members have open-ended conversations. In both cases, our automated system establishes a new state-of-the-art, and edges past the human benchmark. This marks the first time that human parity has been reported for conversational speech. The key to our system's performance is the systematic use of convolutional and LSTM neural networks, combined with a novel spatial smoothing method and lattice-free MMI acoustic training.
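The error rates quoted above are word error rates: the minimum number of word substitutions, deletions and insertions needed to turn a transcript into the reference, divided by the number of reference words. A minimal sketch of that computation follows; official scoring (e.g. with NIST's sclite) also normalizes spelling, hesitations and the like, which is omitted here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # d[i][j] = minimum edits turning the first i reference words
    # into the first j hypothesis words (Levenshtein distance over words).
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = d[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            deletion = d[i - 1][j] + 1
            insertion = d[i][j - 1] + 1
            d[i][j] = min(substitution, deletion, insertion)
    return d[len(ref)][len(hyp)] / len(ref)


# One deleted word out of six reference words:
print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```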

Let me explain, very informally, what a predictive text imitator is. It is a computer program that takes as input a passage of training text and produces as output a new text that is composed quasi-randomly except that it matches the training text with regard to the frequencies of word or character sequences up to some fixed finite length k.

(There has to be such a length limit, of course: the only text in which the word sequence of Melville's Moby-Dick is matched perfectly is Melville's Moby-Dick, but what a predictive text imitator trained on Moby-Dick would do is to produce quasi-random fake-Moby-Dickish gibberish in which each sequence of not more than k units matches Moby-Dick with respect to the transition probabilities between adjacent units.)
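Here is a minimal character-level imitator in this spirit, offered only as an illustration: it is not Jamie Brew's program, and the function names `train` and `imitate` are hypothetical. Each next character is drawn from the characters that followed the preceding k characters in the training text, so every run of k + 1 characters in the output also occurs in the training text, and the choices are weighted by how often each continuation occurred there.

```python
import random
from collections import defaultdict


def train(text: str, k: int) -> dict:
    """Map every k-character context to the list of characters that follow it.
    Duplicates are kept, so sampling uniformly from a list reproduces the
    training frequencies of each (k + 1)-character sequence."""
    model = defaultdict(list)
    for i in range(len(text) - k):
        model[text[i:i + k]].append(text[i + k])
    return model


def imitate(text: str, k: int, length: int, seed=None) -> str:
    if k < 1 or len(text) <= k:
        raise ValueError("need k >= 1 and a training text longer than k")
    rng = random.Random(seed)
    model = train(text, k)
    start = rng.randrange(len(text) - k)   # begin at a random training context
    out = text[start:start + k]
    while len(out) < length:
        followers = model.get(out[-k:])
        if not followers:                  # context seen only at the very end
            break
        out += rng.choice(followers)
    return out


sample = "Omit needless words. Use the active voice. " * 3
print(imitate(sample, k=4, length=80, seed=0))
```

Larger k makes the output more faithful to the training text and less novel; with k near the length of the text, it simply reproduces the original.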

I tell you this because a couple of months ago Jamie Brew made a predictive text imitator and trained it on my least favorite book in the world, William Strunk's The Elements of Style (1918). He then set it to work writing the first ten sections of a new quasi-randomly generated book. You can see the results here. The first point at which I broke down and laughed till there were tears in my eyes was at the section heading 'The Possessive Jesus of Composition and Publication'. But there were other such points too. Take a look at it. And trust me: following the advice in Jamie Brew's version of the book won't do your writing much more harm than following the original.

A rather poetic and imaginative abstract I received in my email this morning (it's about a talk on computational aids for composers) contains the following sentence:

We will metaphorically drop in on Wolfgang composing at home in the morning, at an orchestra rehearsal in the afternoon, and find him unwinding in the evening playing a spot of the new game Piano Hero which is (in my fictional narrative) all the rage in the Viennese coffee shops.

There's nothing wrong with the sentence. What makes me bring it to your notice is the extraordinary modification that my Microsoft mail system performed on it. I wonder if you can see the part of the message that it felt it should mess with, in a vain and unwanted effort at helping me do my job more efficiently?

Arvind Narayanan, "Language necessarily contains human biases, and so will machines trained on language corpora", Freedom to Tinker 8/24/2016:

We show empirically that natural language necessarily contains human biases, and the paradigm of training machine learning on language corpora means that AI will inevitably imbibe these biases as well.

Two of the hardest problems in English-language parsing are prepositional phrase attachment and scope of conjunction. For PP attachment, the problem is to figure out how a phrase-final prepositional phrase relates to the rest of the sentence — the classic example is "I saw a man in the park with a telescope". For conjunction scope, the problem is to figure out just what phrases an instance of "and" is being used to combine.
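To see how PP attachment multiplies readings, here is a small CKY-style chart that counts, rather than lists, the parse trees a toy grammar assigns to the classic example. The grammar is a hypothetical illustration in Chomsky normal form, far simpler than anything a real parser uses: its rule VP → VP PP lets a prepositional phrase attach to the verb phrase, and NP → NP PP lets it attach to a noun phrase.

```python
from collections import defaultdict

# Hypothetical toy grammar in Chomsky normal form: (parent, left, right).
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # PP attached to the verb phrase
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),   # PP attached to a noun phrase
    ("PP", "P", "NP"),
]
LEXICON = {
    "I": {"NP"}, "saw": {"V"}, "a": {"Det"}, "the": {"Det"},
    "man": {"N"}, "park": {"N"}, "telescope": {"N"},
    "in": {"P"}, "with": {"P"},
}


def count_parses(words, start="S"):
    """Count distinct parse trees using a CKY chart of counts, not trees."""
    n = len(words)
    # chart[i][j][X] = number of ways category X can span words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for category in LEXICON[word]:
            chart[i][i + 1][category] += 1
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):          # split point
                for parent, left, right in BINARY_RULES:
                    ways = chart[i][k][left] * chart[k][j][right]
                    if ways:
                        chart[i][j][parent] += ways
    return chart[0][n][start]


print(count_parses("I saw a man in the park".split()))                   # 2
print(count_parses("I saw a man in the park with a telescope".split()))  # 5
```

Even this tiny grammar gives five structures for the telescope sentence, differing only in where each prepositional phrase attaches.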

The title of a recent article offers some lovely examples of the problems that these ambiguities can cause: Suresh Naidu and Noam Yuchtman, "Back to the future? Lessons on inequality, labour markets, and conflict from the Gilded Age, for the present", VOX 8/23/2016. The second phrase includes three ambiguous prepositions (on, from, and for) and one conjunction (and), and has more syntactically valid interpretations than you're likely to be able to imagine unless you're familiar with the problems of automatic parsing.
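A rough intuition for why the count explodes: if one pretends that every way of grouping a sequence of n + 1 constituents into nested binary pairs were grammatical, the number of groupings is the nth Catalan number. Real grammars rule many groupings out, and conjunction brings complications of its own, so this sketch only shows how fast such ambiguity can grow; it is not a count of the readings of the VOX title.

```python
from math import comb


def catalan(n: int) -> int:
    """Number of distinct binary bracketings of a sequence of n + 1 items."""
    return comb(2 * n, n) // (n + 1)


for n in range(1, 8):
    print(n + 1, "items:", catalan(n), "bracketings")
```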

"Heavy Metal and Natural Language Processing – Part 1", Degenerate State 4/20/2016:

Natural language is ubiquitous. It is all around us, and the rate at which it is produced in written, stored form is only increasing. It is also quite unlike any sort of data I have worked with before.

Natural language is made up of sequences of discrete characters arranged into hierarchical groupings: words, sentences and documents, each with both syntactic structure and semantic meaning.

Not only is the space of possible strings huge, but small sections of a document can take on vastly different meanings depending on the context that surrounds them.

These variations and versatility of natural language are the reason that it is so powerful as a way to communicate and share ideas.

In the face of this complexity, it is not surprising that understanding natural language with computers, in the same way humans do, is still an unsolved problem. That said, an increasing number of techniques have been developed to provide some insight into natural language. They tend to start by making simplifying assumptions about the data, and then use these assumptions to convert the raw text into a more quantitative structure, like vectors or graphs. Once in this form, statistical or machine learning approaches can be leveraged to solve a whole range of problems.

I haven't had much experience playing with natural language, so I decided to try out a few techniques on a dataset I scraped from the internet: a set of heavy metal lyrics (and associated genres).

[h/t Chris Callison-Burch]
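The "simplifying assumptions" step described in the quoted post can be as crude as a bag-of-words model, which discards word order and represents each text as a vector of word counts; cosine similarity can then compare texts. This is a minimal sketch under that assumption, not the code used in the Degenerate State post, and the two lyric-like strings are made up.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    # Simplifying assumptions: lowercase everything, keep only runs of letters
    # and apostrophes as tokens, and ignore word order entirely.
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


v1 = bag_of_words("Fire and steel, steel and fire")
v2 = bag_of_words("Thunder and steel")
print(round(cosine_similarity(v1, v2), 3))  # 0.667
```

Once texts are vectors like these, the statistical and machine learning methods the post mentions (clustering, classification by genre, and so on) can operate on them.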

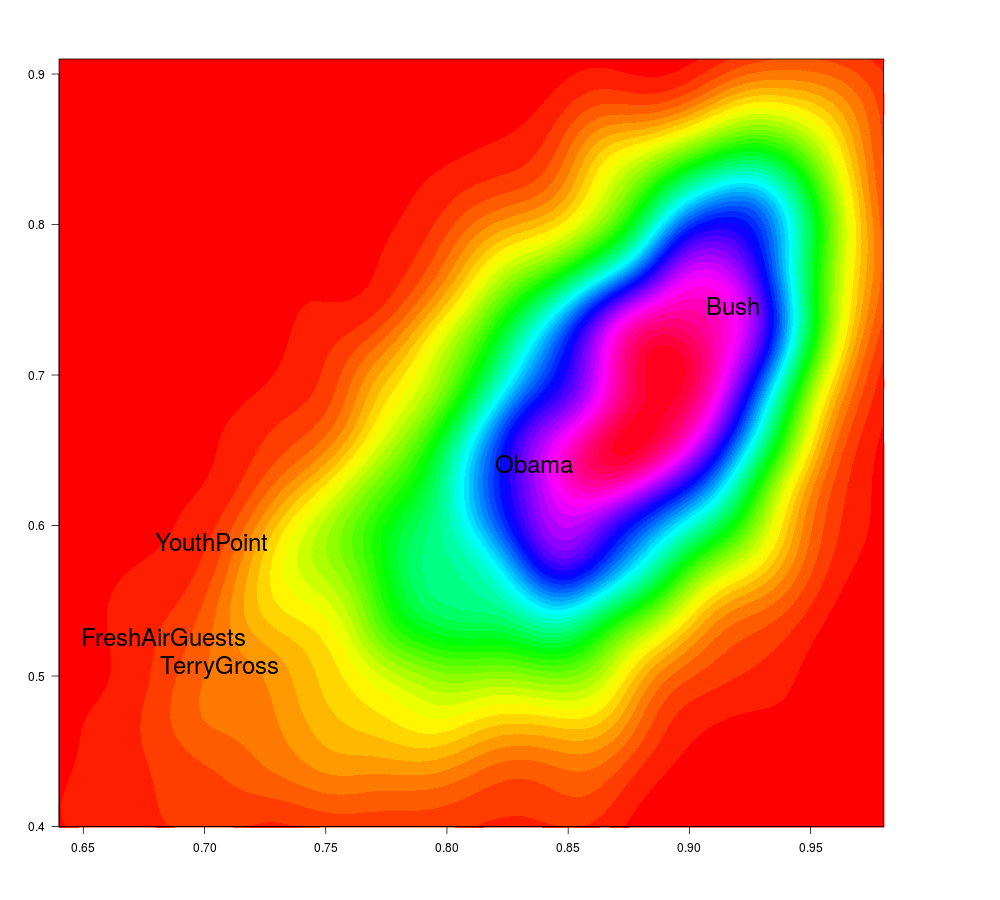

Earlier this year, I observed that there seem to be some interesting differences among individuals and styles of speech in the distribution of speech segment and silence segment durations — see e.g. "Sound and silence" (2/12/2013), "Political sound and silence" (2/8/2016) and "Poetic sound and silence" (2/12/2016).
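The measurement behind such comparisons can be sketched from a frame-by-frame speech/non-speech labeling: consecutive speech frames form a speech segment, consecutive non-speech frames form a silence segment, and each segment's duration is its frame count times the frame step. This minimal sketch assumes the labels already exist (from some voice activity detector) and a hypothetical 10 ms frame step; it is not necessarily how the measurements in these posts were made.

```python
from itertools import groupby
from statistics import median


def segment_durations(is_speech, frame_step=0.01):
    """Split a per-frame speech/non-speech sequence into runs and return
    (speech_durations, silence_durations) in seconds.
    Leading and trailing silences are counted like any other silence."""
    speech, silence = [], []
    for label, run in groupby(is_speech):
        duration = sum(1 for _ in run) * frame_step
        (speech if label else silence).append(duration)
    return speech, silence


labels = [0, 0, 1, 1, 1, 1, 0, 1, 1, 0, 0, 0]  # toy VAD output
speech, silence = segment_durations(labels)
print("median speech segment:", median(speech), "s")
print("median silence segment:", median(silence), "s")
```

Collecting these durations for every reader yields the per-speaker distributions that can then be set against a background population.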

So Neville Ryant and I decided to try to look at the question in a more systematic way. In particular, we took the opportunity to compare the many individuals in the LibriSpeech dataset, which consists of 5,832 English-language audiobook chapters read by 2,484 speakers, with a total audio duration of nearly 1,600 hours. This dataset was selected by some researchers at JHU from the larger LibriVox audiobook collection, which as a whole now comprises more than 50,000 hours of read English-language text. Material from the nearly 2,500 LibriSpeech readers gives us a background distribution against which to compare other examples of both read and spontaneous speech, yielding plots like the one below:

(1/2) For those of you keeping score at home, I gave exactly 18 f*cks about my Pats. Upon reflection, 12 probably would have been sufficient

— Ben Affleck (@BenAffleck) June 23, 2016

(2/2) We Boston fans have always been known for our subtlety. One of my favorite interviews; hope you get to see the entire episode. #GoPats

— Ben Affleck (@BenAffleck) June 23, 2016

Tim Kenneally, "Ben Affleck Has a F-ing Thing or 18 to Say About His Bill Simmons Interview", The Wrap 6/23/2016.

The Blizzard Challenge needs you!

Every year since 2005, an ad hoc group of speech technology researchers has held a "Blizzard Challenge", under the aegis of the Speech Synthesis Special Interest Group (SYNSIG) of the International Speech Communication Association.

The general idea is simple: Competitors take a released speech database, build a synthetic voice from the data and synthesize a prescribed set of test sentences. The sentences from each synthesizer are then evaluated through listening tests.
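One common way to summarize listening-test ratings is a mean opinion score (MOS) per system. The sketch below assumes ratings on a 1 to 5 scale and attaches a rough normal-approximation 95% interval; the system names and scores are hypothetical, and this is not the Blizzard Challenge's own analysis, which the post doesn't describe.

```python
import math
from statistics import mean, stdev


def mos_with_interval(scores, z=1.96):
    """Return (mean opinion score, half-width of a normal-approximation
    confidence interval). The interval needs at least two ratings."""
    m = mean(scores)
    if len(scores) < 2:
        return m, float("nan")
    return m, z * stdev(scores) / math.sqrt(len(scores))


def rank_systems(ratings):
    """ratings: dict mapping a system name to its list of 1-5 scores."""
    rows = [(name, *mos_with_interval(s)) for name, s in ratings.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


ratings = {  # hypothetical listener scores
    "system_A": [3, 4, 3, 3, 4, 2],
    "system_B": [4, 5, 4, 4, 3, 5],
}
for name, mos, half_width in rank_systems(ratings):
    print(f"{name}: MOS {mos:.2f} ± {half_width:.2f}")
```

With only a handful of ratings per system the intervals are wide, which is why many listeners are needed.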

Why "Blizzard"? Because the early competitions used the CMU ARCTIC datasets, which began with a set of sentences read from James Oliver Curwood's novel Flower of the North.

Anyhow, if you have an hour of your time to donate towards making speech synthesis better, sign up and be a listener!

In a comment on one of yesterday's posts ("Adjectives and Adverbs"), Q. Pheevr wrote:

It's hard to tell with just four speakers to go on, but it looks as if there could be some kind of correlation between the ADV:ADJ ratio and the V:N ratio (as might be expected given that adjectives canonically modify nouns and adverbs canonically modify verbs). Of course, there are all sorts of other factors that could come into this, but to the extent that speakers are choosing between alternatives like "caused prices to increase dramatically" and "caused a dramatic increase in prices," I'd expect some sort of connection between these two ratios.

So since I have a relatively efficient POS tagging script, and an ad hoc collection of texts lying around, I thought I'd devote this morning's Breakfast Experiment™ to checking the idea out.
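Once text has been POS-tagged, the computation is straightforward: count adverbs, adjectives, verbs and nouns per speaker, form the two ratios, and correlate them across speakers. This sketch assumes Penn Treebank-style tags (RB* adverbs, JJ* adjectives, VB* verbs, NN* nouns) and uses made-up tagged fragments; it is not the tagging script mentioned above, and choices such as whether proper nouns count as nouns are left as assumptions.

```python
import math
from collections import Counter
from statistics import mean

# Tag prefixes assume Penn Treebank conventions (an assumption).
CATEGORIES = {"RB": "ADV", "JJ": "ADJ", "VB": "V", "NN": "N"}


def tag_ratios(tagged_words):
    """Return (ADV:ADJ, V:N) for a list of (word, tag) pairs."""
    counts = Counter()
    for _, tag in tagged_words:
        category = CATEGORIES.get(tag[:2])
        if category:
            counts[category] += 1
    if not counts["ADJ"] or not counts["N"]:
        raise ValueError("need at least one adjective and one noun")
    return counts["ADV"] / counts["ADJ"], counts["V"] / counts["N"]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


speakers = {  # hypothetical tagged fragments, one per speaker
    "A": [("prices", "NNS"), ("rose", "VBD"), ("dramatically", "RB"), ("big", "JJ")],
    "B": [("a", "DT"), ("dramatic", "JJ"), ("increase", "NN"), ("in", "IN"),
          ("prices", "NNS")],
    "C": [("it", "PRP"), ("grew", "VBD"), ("quickly", "RB"), ("and", "CC"),
          ("big", "JJ"), ("new", "JJ"), ("markets", "NNS"), ("opened", "VBD")],
}
ratios = [tag_ratios(words) for words in speakers.values()]
adv_adj, v_n = zip(*ratios)
print("ADV:ADJ vs V:N correlation:", pearson(adv_adj, v_n))
```

With real data, each speaker would contribute thousands of tagged words, and the correlation would be computed over many speakers rather than three toy fragments.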
