The new AI is so lifelike it's prejudiced!


Arvind Narayanan, "Language necessarily contains human biases, and so will machines trained on language corpora", Freedom to Tinker 8/24/2016:

We show empirically that natural language necessarily contains human biases, and the paradigm of training machine learning on language corpora means that AI will inevitably imbibe these biases as well.

This all started in the 1960s, with Gerard Salton and the "vector space model". The idea was to represent a document as a vector of word (or "term") counts, which, like any vector, represents a point in a multi-dimensional space. Then the similarity between two documents can be calculated by correlation-like methods, basically as some simple function of the inner product of the two term vectors. And natural-language queries are also a sort of document, though usually a rather short one, so you can use this general approach for document retrieval by looking for documents that are (vector-space) similar to the query. It helps if you weight the document vectors by inverse document frequency, and maybe use thesaurus-based term extension, and relevance feedback, and …
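To make the retrieval idea concrete, here is a minimal Python sketch of term-count vectors, inverse-document-frequency weighting, and cosine similarity (the inner product divided by the two vector lengths). The three toy documents, the whitespace tokenizer, the particular log(N/df) weighting, and all the function names are assumptions made for illustration; this is not Salton's own system. Storing each vector as a dictionary of nonzero counts is one simple form of sparse coding.

```python
import math
from collections import Counter

def term_vector(text):
    """Sparse term-count vector: maps each lowercased word to its count."""
    return Counter(text.lower().split())

def idf_weights(doc_vectors):
    """Inverse document frequency: log(N / number of docs containing the term)."""
    n_docs = len(doc_vectors)
    doc_freq = Counter()
    for vec in doc_vectors:
        doc_freq.update(vec.keys())  # count each term once per document
    return {term: math.log(n_docs / df) for term, df in doc_freq.items()}

def weight(vec, idf):
    """Scale raw counts by IDF; terms unseen in the collection get weight 0."""
    return {t: c * idf.get(t, 0.0) for t, c in vec.items()}

def cosine(u, v):
    """Inner product of two sparse vectors, normalized by their lengths."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

# Toy collection, invented for this example.
docs = [
    "the cat sat on the mat",
    "the dog chased the cat",
    "stock prices fell on monday",
]
doc_vecs = [term_vector(d) for d in docs]
idf = idf_weights(doc_vecs)
weighted_docs = [weight(v, idf) for v in doc_vecs]

def rank(query):
    """Treat the query as a short document; return (score, doc index), best first."""
    q = weight(term_vector(query), idf)
    scores = [(cosine(q, d), i) for i, d in enumerate(weighted_docs)]
    return sorted(scores, reverse=True)

ranking = rank("dog chased")
```

With these toy documents, the query "dog chased" ranks the second document first, since it is the only one that contains either word.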

A vocabulary of 100,000 wordforms results in a 100,000-dimensional vector, but there's no conceptual problem with that, and sparse-vector coding techniques mean that there's no practical problem either. Except that in the 1960s, digital "documents" were basically stacks of punched cards, and the market for digital document retrieval was therefore pretty small. Also, those were the days when people thought that artificial intelligence was applied logic: one of Marvin Minsky's students once told me that Minsky warned him "If you're counting higher than one, you're doing it wrong". Still, Salton's students (like Mike Lesk and Donna Harman) kept the flame alive.

Then came the world-wide web, and the Google guys' development of "page rank", which extends a vector-space model using the eigenanalysis of the citation graph of the web, and the growth of the idea that artificial intelligence might be applied statistics. Also out there was the idea of using various dimensionality-reduction techniques to cut the order of those document vectors down from hundreds of thousands to hundreds.
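The eigenanalysis part can be sketched with power iteration: start with equal scores and repeatedly pass each page's score along its outgoing links until the scores settle down. The toy four-page link graph, the damping factor of 0.85, the fixed iteration count, and the treatment of pages with no outgoing links are assumptions taken from the usual textbook formulation, not a description of what Google actually runs.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Power iteration on a link graph.

    links maps each page to the list of pages it links to.
    Returns a dict of scores that sum to 1.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump" term).
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's score evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                share = damping * rank[page] / n
                for t in pages:
                    new_rank[t] += share
        rank = new_rank
    return rank

# Hypothetical four-page web, invented for this example.
toy_web = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
scores = pagerank(toy_web)
```

In this toy graph, page "c" ends up with the highest score because three of the four pages link to it, and "d" ends up with the lowest because nothing links to it.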

The first example was "latent semantic analysis", based on the singular value decomposition of a term-by-document matrix. The initial idea was to make document storage and comparison more efficient — but this turned out not to be necessary. Another benefit was to create a sort of soft thesaurus, so that a query might fetch documents that don't feature the queried words, but do contain lots of words that often co-occur with those words. But LSA, interesting as it was, never really became a big thing.

Then people began to explore small vector-space models based on other ways of doing dimensionality reduction on other kinds of word-cooccurrence statistics, especially looking at relationships among nearby words. It didn't escape notice that this puts into effect the old idea of "distributional semantics", especially associated with Zellig Harris and John Firth, summarized in Firth's dictum that "you shall know a word by the company it keeps". Some examples are word2vec, eigenwords, and GloVe. These techniques let you produce approximate solutions to what might seem like hard problems, like London:England::Paris:?, using nothing but vector-space geometry. And it's easy to experiment with these techniques, as here and here.
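Here is a minimal sketch of the vector-space geometry behind an analogy like London:England::Paris:?. The three-dimensional vectors are made up for illustration (real embeddings have hundreds of dimensions and are learned from co-occurrence statistics), and the `analogy` helper is a hypothetical name. The method shown, taking the word nearest to b - a + c by cosine similarity, is the common vector-offset approach; I'm assuming it here rather than claiming that any particular package does exactly this.

```python
import math

# Hypothetical toy embeddings, invented for this example.
# Roughly: dimensions 1-2 encode "which country", dimension 3 encodes "is a city".
toy_vectors = {
    "london":  [0.9, 0.1, 0.8],
    "england": [0.9, 0.1, 0.1],
    "paris":   [0.1, 0.9, 0.8],
    "france":  [0.1, 0.9, 0.1],
    "berlin":  [0.5, 0.5, 0.8],
    "germany": [0.5, 0.5, 0.1],
}

def cosine(u, v):
    """Cosine of the angle between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c, vectors):
    """Solve a:b::c:? by finding the word nearest to b - a + c."""
    target = [vb - va + vc
              for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    # Exclude the three input words, as is conventional for this task.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))

answer = analogy("london", "england", "paris", toy_vectors)
```

With these invented vectors the answer comes out as "france", because subtracting "london" and adding "paris" swaps the country dimensions while leaving the city dimension where "england" had it.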

Continuing with Arvind Narayanan's blog post:

Specifically, we look at “word embeddings”, a state-of-the-art language representation used in machine learning. Each word is mapped to a point in a 300-dimensional vector space so that semantically similar words map to nearby points.

We show that a wide variety of results from psychology on human bias can be replicated using nothing but these word embeddings. We primarily look at the Implicit Association Test (IAT), a widely used and accepted test of implicit bias. The IAT asks subjects to pair concepts together (e.g., white/black-sounding names with pleasant or unpleasant words) and measures reaction times as an indicator of bias. In place of reaction times, we use the semantic closeness between pairs of words. In short, we were able to replicate every single result that we tested, with high effect sizes and low p-values.

[…]

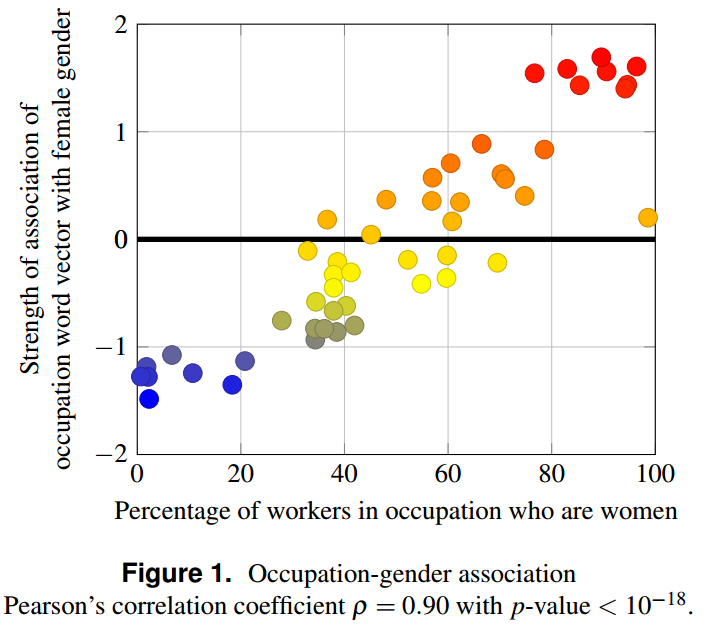

We show that information about the real world is recoverable from word embeddings to a striking degree. The figure below shows that for 50 occupation words (doctor, engineer, …), we can accurately predict the percentage of U.S. workers in that occupation who are women using nothing but the semantic closeness of the occupation word to feminine words!

The paper is Aylin Caliskan-Islam, Joanna J. Bryson, and Arvind Narayanan, "Semantics derived automatically from language corpora necessarily contain human biases". It uses the pre-trained GloVe embeddings available here, and you can read it to be convinced that many sorts of bias reliably emerge from the patterns of word co-occurrence in such material.
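To give a feel for what it means to use semantic closeness in place of reaction times, here is a rough sketch of a differential-association score and a standardized effect size. The two-dimensional vectors are invented, the grouping into flower, insect, pleasant, and unpleasant words is only a labeling of those invented points, and the function names are hypothetical. The statistic follows the general shape of an IAT-style effect size; the paper's exact definitions may differ in detail, so treat this as an illustration rather than a reimplementation.

```python
import math
from statistics import mean, stdev

def cosine(u, v):
    """Cosine of the angle between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def association(w, attrs_a, attrs_b):
    """How much closer w is, on average, to attribute set A than to set B."""
    closeness_a = mean([cosine(w, a) for a in attrs_a])
    closeness_b = mean([cosine(w, b) for b in attrs_b])
    return closeness_a - closeness_b

def effect_size(targets_x, targets_y, attrs_a, attrs_b):
    """Difference in mean association between the two target sets,
    divided by the standard deviation of association over all targets."""
    sx = [association(x, attrs_a, attrs_b) for x in targets_x]
    sy = [association(y, attrs_a, attrs_b) for y in targets_y]
    return (mean(sx) - mean(sy)) / stdev(sx + sy)

# Invented 2-D "embeddings": flower-like points sit near the pleasant points,
# insect-like points sit near the unpleasant ones.
flowers    = [[0.9, 0.1], [0.8, 0.3]]
insects    = [[0.1, 0.9], [0.2, 0.7]]
pleasant   = [[1.0, 0.0], [0.9, 0.2]]
unpleasant = [[0.0, 1.0], [0.2, 0.9]]

d = effect_size(flowers, insects, pleasant, unpleasant)
```

A positive `d` means the first target set leans toward the first attribute set; swapping the two target sets flips the sign. Note that the spread in the denominator comes only from variation across words, not across people or trials.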

I'm pretty sure that Zellig Harris would not have found this surprising — one of his original motivations for developing distributional methods was to find the latent political content of texts by completely objective means.

And for a few differently-embedded words on what those marvelous word-embedding vectors leave out, see the discussion of the "cookbook problem" in these lecture notes.

Update — I should note that I'm strongly in favor of word-embedding models and similar things, but I feel that people should understand what they are and how they work (or don't work), rather than seeing them as a magic algorithmic black box that does magical algorithmic things.

Jerry Friedman said,

August 29, 2016 @ 8:47 am

Is the program prejudiced, or does it know the facts about occupation-gender associations in the U.S.?

[(myl) Neither one. It "knows the facts" about the co-occurrence statistics of wordforms in a large sample of web text. That's all. It's just that the co-occurrence of (for example) occupation-words and gendered words mirrors the facts of the world, or at least the facts of text on the web. So, not a surprise at all, unless you weren't paying attention and thought that modern AI methods were magical.]

D.O. said,

August 29, 2016 @ 8:55 am

I fail to be impressed. Maybe it is not known to the authors unless confirmed by machine-learning methods, but the English language has gendered personal pronouns. What is the picture going to look like if they remove them? Or, if they already did, that should be featured prominently.

Also, what is on the y axis? Looks like some statistical measure like difference from average in terms of standard deviation. In other words, something self-referential. And p value of 10^(-18). Grrrrrrrr.

[(myl) Their "strength of association" metric is indeed a bit wonky:

[A]n IAT allows rejecting the null hypothesis (of non-association between two categories) via a p-value and quantification of the strength of association via an effect size. These are obtained by administering the test to a statistically-significant sample of subjects (and multiple times to each subject). With word embeddings, there is no notion of test subjects. Roughly, it is as if we are able to measure the mean of the association strength over all the “subjects” who collectively created the corpora. But we have no way to observe variation between subjects or between trials. We do report p-values and effect sizes resulting from the use of multiple words in each category, but the meaning of these numbers is entirely different from those reported in IATs.

On the other hand, the method is completely objective. So there's that. It's not clear what the "multiple words in each category" were for this experiment — the paper lists

Female attributes: female, woman, girl, sister, she, her, hers, daughter.

Male attributes: male, man, boy, brother, he, him, his, son.

but it's hard to believe that they could get results like that from an N of 8.]

J.W. Brewer said,

August 29, 2016 @ 9:42 am

The linked post clarifies that it means "bias" in a Not-That-There's-Anything-[Necessarily]-Wrong-With-That sense:

We mean “bias” in a morally neutral sense. Some biases are prejudices, which society deems unacceptable. Others are facts about the real world (such as gender gaps in occupations), even if they reflect historical injustices that we wish to mitigate. Yet others are perfectly innocuous. Algorithms don’t have a good way of telling these apart.

The problem is that while "bias" certainly can be a neutral/non-pejorative word in some technical-jargon varieties of English intended to be read only by a specialized audience, I think the word is almost inevitably taken as pejorative by a mass/non-specialist audience at least without heavy clues that you're using a specialized meaning (and here we're certainly not talking about "bias" in the way it's used when talking about woven fabric or the characteristics of old-style magnetic recording tape …). So coming up with some non-pejorative synonym (even if it sounded clunky/jargony) would have been helpful. Using "bias" without intending it to be taken as pejorative seems an instance of nerdview.

Jerry Friedman said,

August 29, 2016 @ 3:34 pm

J. W. Brewer: Thanks for looking at the original post. There seems to be a confusion of time references. The authors say algorithms don't have a way of telling prejudices reflected in corpora from harmful or harmless factual associations, but their title says AI algorithms trained on human corpora will inevitably imbibe those biases. I take it the hard problem is to develop algorithms that can do as well as we do or better at spitting out the prejudices instead of imbibing them. (Not all of us do very well.) I imagine no one has any idea of how to solve that, but saying "inevitably" seems a little premature.

J.W. Brewer said,

August 29, 2016 @ 4:28 pm

Looking at the full article, I can't even tell how they intend to slot their different examples into their different categories. What sort of "bias" is the alleged preference for flowers over insects? Is it "innocuous" in any sense other than the rather circular one that it is not at present widely disapproved of or morally condemned in our particular society?

And of course the way they tested that is pretty obviously bullshit because they appear to have left out of their "flowers" list of words any plants you might expect people to have negative associations with (thistles, ragwort, etc) and similarly left out of their "insects" list any bugs (like butterflies or fireflies or honeybees) that you might expect people to have stereotypically positive associations with. (To the extent they were faithfully replicating a prior experiment in the literature, they couldn't be bothered to find a non-BS one to replicate? Or do we just take the cynical view that this is the sort of social-science subfield where there are no non-BS experiments in the published literature to try to replicate?)

Kivi Shapiro said,

August 29, 2016 @ 8:39 pm

To pick a nit, the algorithm developed by Google is not "page rank" but "PageRank". One reason for the initial capital is that it's named after Larry Page.

Florence Artur said,

August 30, 2016 @ 12:51 am

I don't understand any of this and I have a stupid question. Isn't the whole point of this technology that it can objectively measure subjective things? So it can measure bias. How does it make it biased? I can sort of imagine that there could be problems associated with confusing the measure of a bias with the measure of a real thing, but I can't think of any specific examples.

svat said,

August 30, 2016 @ 2:43 am

For background, one may want to see articles like this: https://www.technologyreview.com/s/602025/how-vector-space-mathematics-reveals-the-hidden-sexism-in-language/ which point out problematic (as determined by humans) biases in these models. This article is pointing out (or claiming) that those are inevitable, because they reflect language, which reflects real-world (human) bias.

Tim Martin said,

August 30, 2016 @ 9:06 am

This is super interesting and I will have to read the full paper later today! For now, a quick comment on something that I thought seemed odd:

"Each word is mapped to a point in a 300-dimensional vector space so that semantically similar words map to nearby points."

This doesn't seem to be true. From what I understand (and I'm using the comments from the blog post to supplement my understanding until I read the paper), the algorithm maps words that co-occur to nearby points. The algorithm doesn't take word *meaning* (i.e. semantics) into account at all.

I guess this is what Mark was saying in his edit to Jerry's comment above. Though I am confused as to why the blog post would mention semantics at all, if semantic relatedness is not what's being measured.