Over the years, we've viewed the phenomenon of word aversion from several angles — a recent discussion, with links to earlier posts, can be found here. What we're calling word aversion is a feeling of intense, irrational distaste for the sound or sight of a particular word or phrase, not because its use is regarded as etymologically or logically or grammatically wrong, nor because it's felt to be over-used or redundant or trendy or non-standard, but simply because the word itself somehow feels unpleasant or even disgusting.

Some people react in this way to words whose offense seems to be entirely phonetic: cornucopia, hardscrabble, pugilist, wedge, whimsy. In other cases, it's plausible that some meaning-related associations play a role: creamy, panties, ointment, tweak. Overall, the commonest object of word aversion in English, judging from many discussions in web forums and comments sections, is moist.

One problem with web forums and comments sections as sources of evidence is that they don't tell us what fraction of the population experiences the phenomenon of word aversion, either in general or with respect to some particular word like moist. Dozens of commenters may join the discussion in a forum that has at most thousands of readers, but we can't tell whether they represent one person in five or one person in a hundred; nor do we know how representative of the general population a given forum or comments section is.

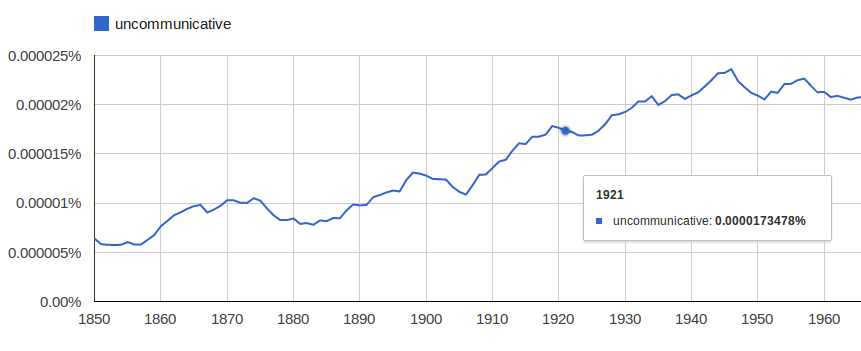

Pending other approaches, it occurred to me that we might be able to learn something from looking at usage in literary works. Authors who are squicked by moist, for example, will plausibly tend to find alternatives. (Well, in some cases the effect might motivate over-use; but never mind that for now…)

So for this morning's Breakfast Experiment™, I downloaded the April 2010 Project Gutenberg DVD, and took a quick look.
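For readers who want to try something similar, here is a minimal sketch of one way to compare how often different authors use a given word. It is not the procedure actually used for this post: the tokenizer, the function names (`rate_per_million`, `compare_authors`), and the two toy sample texts are all assumptions made up for illustration. In practice you would read each author's plain-text files from the collection instead of using inline strings.

```python
import re

# Assumption: a crude tokenizer that treats runs of letters
# (with an optional internal apostrophe) as words.
TOKEN_RE = re.compile(r"[a-z]+(?:'[a-z]+)?")


def rate_per_million(text, target):
    """Return occurrences of `target` per million word tokens in `text`."""
    tokens = TOKEN_RE.findall(text.lower())
    if not tokens:
        return 0.0
    hits = sum(1 for token in tokens if token == target.lower())
    return 1_000_000 * hits / len(tokens)


def compare_authors(texts_by_author, target):
    """Map each author to the target word's rate per million tokens."""
    return {
        author: rate_per_million(text, target)
        for author, text in texts_by_author.items()
    }


# Toy stand-ins for real texts (hypothetical, for illustration only).
samples = {
    "author_a": "The moist earth smelled of rain. The air was moist and warm.",
    "author_b": "The damp earth smelled of rain. The air was humid and warm.",
}

rates = compare_authors(samples, "moist")
for author, rate in sorted(rates.items()):
    print(f"{author}: {rate:.1f} per million words")
```

Normalizing by total word count matters because authors differ enormously in how much text they contribute. A low rate on its own does not show aversion, since topic and genre also affect how often a word like moist is called for.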


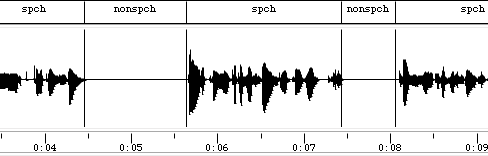

The village of Akazu’yw lies in the rainforest, a day’s drive from the state capital of Belém, deep in the Brazilian Amazon. Last week I traveled there, carrying a dozen Android phones with a specialized app for recording speech. It wasn't all plain sailing…
