'lololololol' ≠ Tagalog


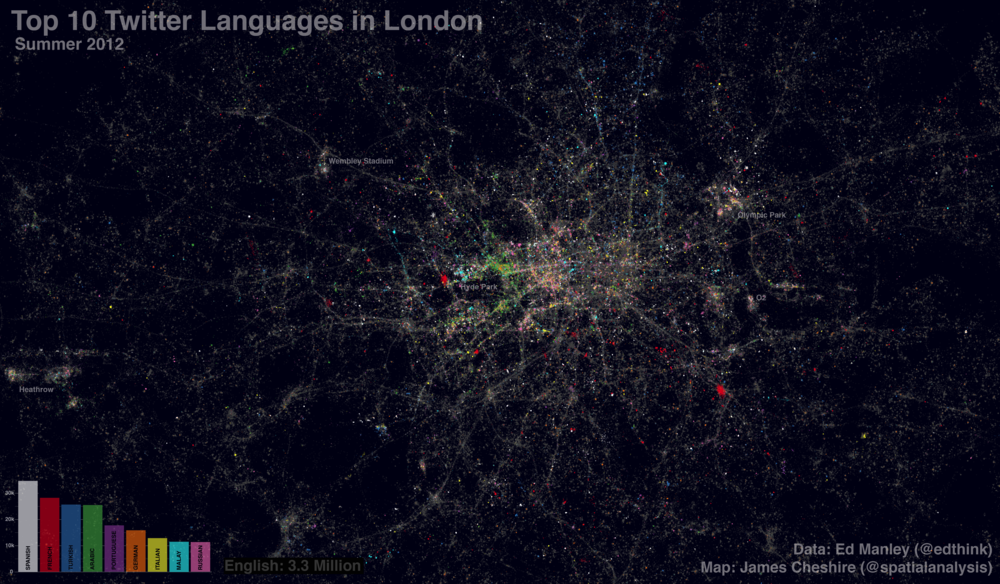

Ed Manley, "Detecting Languages in London's Twittersphere", UrbanMovements 10/22/2012:

Over the last couple of weeks, and as a bit of a distraction from finishing off my PhD, I've been working with James Cheshire looking at the use of different languages within my aforementioned dataset of London tweets.

I've been handling the data generation side, and the method really is quite simple. Just like some similar work carried out by Eric Fischer, I've employed the Chromium Compact Language Detector – an open-source Python library adapted from the Google Chrome algorithm to detect a website's language – in detecting the predominant language contained within around 3.3 million geolocated tweets, captured in London over the course of this summer. […]

One issue with this approach that I did note was the surprising popularity of Tagalog, a language of the Philippines, which initially was identified as the 7th most tweeted language. On further investigation, I found that many of these classifications included just uses of English terms such as 'hahahahaha', 'ahhhhhhh' and 'lololololol'. I don't know much about Tagalog but it sounds like a fun language. Nevertheless, Tagalog was excluded from our analysis.

There's some discussion here of how the language detection tool works:

The tool works by scanning a chunk of text and then segmenting and analyzing four-character “tokens.” These tokens are compared against a very large table of reference tokens that have language properties associated with them.
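A minimal Python sketch of that segmentation step, for readers who want to see what the tokens of a tweet might look like. It assumes, based only on entries such as `_lolo_`, `lolo_` and `_niev` in the generated table quoted later in this post, that an underscore marks a word boundary; `quad_tokens` is a hypothetical name, and whether the real detector takes every overlapping window (it works on UTF-8 bytes with its own normalization) is not established here.

```python
def quad_tokens(text: str) -> list[str]:
    """Split text into overlapping four-letter tokens, word by word.

    Assumption (hypothetical): '_' is prepended to a token that starts a word
    and appended to one that ends a word, mirroring table entries like '_lolo_'.
    """
    tokens = []
    for word in text.lower().split():
        if len(word) < 4:
            # Hypothetical choice: keep short words whole, with both markers.
            tokens.append(f"_{word}_")
            continue
        for i in range(len(word) - 3):
            quad = word[i:i + 4]
            if i == 0:
                quad = "_" + quad      # token begins the word
            if i + 4 == len(word):
                quad = quad + "_"      # token ends the word
            tokens.append(quad)
    return tokens


print(quad_tokens("lololololol"))
```

Under these assumptions, "lololololol" yields nothing but alternating "lolo" and "olol" tokens, so its classification depends entirely on how the table treats those two 4-grams.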

The source code that Manley used is said to be available here. But the cited Python wrapper for the C++ code does not seem to be present in the source package, I couldn't get the C++ test program to compile, and I don't have time this morning to figure out what's wrong. So I'll just note that in the Wikipedia article on the Tagalog language in Tagalog, the string "haha" occurs 8 times and the string "ahah" occurs 4 times, but none of "ahhh", "hhhh", "lolo", or "olol" occurs at all.
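Anyone repeating this kind of count should note that Python's `str.count` skips overlapping matches: "hahaha" contains "haha" once by that measure and twice if overlaps are allowed. The post doesn't say which convention the figures above use, so this sketch computes both; the sample string is made up and is not the Wikipedia article, and `count_overlapping` is a hypothetical helper.

```python
def count_overlapping(text: str, pattern: str) -> int:
    """Count occurrences of pattern in text, allowing matches to overlap."""
    count = 0
    start = text.find(pattern)
    while start != -1:
        count += 1
        start = text.find(pattern, start + 1)  # resume one character later
    return count


sample = "hahaha lololol"  # hypothetical sample text
for s in ["haha", "ahah", "lolo", "olol"]:
    # str.count gives the non-overlapping figure for comparison.
    print(s, sample.count(s), count_overlapping(sample, s))
```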

I'll be grateful to any reader who takes the time to figure out how to get the stand-alone version of the "chromium-compact-language-detector" to compile and run in a standard linux environment. A more detailed overview of the algorithm would be nice as well. Apparently it works from a list of UTF-8 4-grams that are considered to be diagnostic of language identity — and this table is "compact" in the sense that it's much smaller than a full 4-gram language model for each language would be — but it's not clear to me how the table was created, or exactly how it's used in the program.

For instance, the string "lolo" occurs three times among the 64k table entries in

src/encodings/compact_lang_det/generated/compact_lang_det_generated_quadschrome.cc:

```cpp
{ {0x7aed000b, 0x6281082c, 0x78a90211, 0x00000000}}, // fuat, lolo, _niev,
{ {0x26c5011c, 0xcd2a0ca6, 0xb05b0039, 0x00000000}}, // _lolo_, ужбе_, skär,
{ {0x78a90065, 0x32180784, 0x26c50416, 0x00000000}}, // niev, sary_, lolo_,
```

The string "olol" doesn't occur at all. Again, some explanation of where this table came from and how it's used would be welcome.
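With the generated file at hand, a few lines of Python are enough to repeat this search. The sketch hard-codes the three rows quoted above instead of reading the file, and it treats `lolo`, `_lolo_` and `lolo_` as the same 4-gram, on the assumption that the underscores in the comments mark word boundaries; `parse_row` and `rows_containing` are hypothetical names.

```python
import re

# The three rows quoted above from compact_lang_det_generated_quadschrome.cc.
ROWS = [
    "{ {0x7aed000b, 0x6281082c, 0x78a90211, 0x00000000}}, // fuat, lolo, _niev,",
    "{ {0x26c5011c, 0xcd2a0ca6, 0xb05b0039, 0x00000000}}, // _lolo_, ужбе_, skär,",
    "{ {0x78a90065, 0x32180784, 0x26c50416, 0x00000000}}, // niev, sary_, lolo_,",
]


def parse_row(line: str) -> tuple[list[int], list[str]]:
    """Return the 32-bit hex words and the comment tokens of one table row."""
    code, _, comment = line.partition("//")
    words = [int(h, 16) for h in re.findall(r"0x[0-9a-fA-F]{8}", code)]
    tokens = [t.strip() for t in comment.split(",") if t.strip()]
    return words, tokens


def rows_containing(rows: list[str], quad: str) -> list[str]:
    """Comment tokens equal to quad once '_' boundary markers are stripped."""
    hits = []
    for line in rows:
        _, tokens = parse_row(line)
        hits.extend(t for t in tokens if t.strip("_") == quad)
    return hits


print(rows_containing(ROWS, "lolo"))  # three hits
print(rows_containing(ROWS, "olol"))  # none
```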

A nice zoomable version of the London language map can be found in "Mapped: Twitter Languages in London", SpatialAnalysis.co.uk 10/22/2012, and there's some media coverage in Shane Richmond, "Twitter map of London shows 66 languages", The Telegraph 10/26/2012.

[ht Peter McBurney]

Update — for those who might want to try the code, Stephen Checkoway provides this recipe:

```shell
hg clone https://code.google.com/p/chromium-compact-language-detector/
cd chromium-compact-language-detector
./autogen.sh
./autogen.sh # Their script is broken: it runs things in the wrong order
./configure
make
sudo make install
cd bindings/python
./setup.py build
cd build/lib.linux-x86_64-2.7
LD_LIBRARY_PATH=/usr/lib:/usr/local/lib python
```

And then 'import cld' works. The LD_LIBRARY_PATH is a hack because cld.so depends on libcld.so.0, but for some reason, it isn't looking in /usr/local/lib for it. I didn't bother tracking down why.

Bob Couttie said,

October 31, 2012 @ 8:53 am

"The string "olol" doesn't occur at all. "

You might try "ulol", the normal spelling in the Philippines

[(myl) But the point at issue is to explain why the language-identification tool mistakenly tags "lololololol" as Tagalog. The algorithm is apparently based on 4-grams of letters, and so "ulol" isn't really relevant. But anyhow, the 3-gram "lol" doesn't occur in the cited article either, suggesting that its overall frequency in Tagalog text is low.]

John Lawler said,

October 31, 2012 @ 9:46 am

Tagalog has recently been in the news in Canada, too.

Though on a rather different statistical front: this upsurge in Tagalog use doesn't come from tweets, n-grams, or text of any sort, but from demographic reports.

Al Sabado said,

October 31, 2012 @ 10:10 am

Hello, we're probably fond of redundancy (as you can see in names like Jun-Jun, Lot-Lot, Len-Len, Bon-Bon, and the list goes on). Of course, there's also "lololololol", which signals that the person happily writing (usually texting) it is laughing out loud, and a lot.

Your reader from the Philippines. :)

Theodore said,

October 31, 2012 @ 10:29 am

Could there be some issue with detecting the character encoding? Is it looking at e.g. UTF-16 2-grams?
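To make Theodore's question concrete: for ASCII text, a 4-byte window over UTF-8 covers four characters, while the same window over UTF-16 covers only two. This is just an illustration of the two encodings; nothing in the post shows that the detector actually misreads tweets this way.

```python
text = "lolo"
utf8 = text.encode("utf-8")
utf16 = text.encode("utf-16-le")  # little-endian, no byte-order mark

print(len(utf8), utf8[:4])    # 4 bytes: the whole 4-gram fits in one window
print(len(utf16), utf16[:4])  # 8 bytes: a 4-byte window spans only "lo"
```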

Anyway, should we desire to name this newly-discovered language, I nominate "hash-Tagalog".

Alon Lischinsky said,

October 31, 2012 @ 10:40 am

I propose ‘TagaLOL’.

Afuna said,

October 31, 2012 @ 11:33 am

Native speaker, not a linguist, but I wonder if it could be because Tagalog uses repetition of the first syllable, along with prefixes, for aspect and tense.

For example, the base word "loko" (to fool) can be written as:

* "nagloloko" (present tense, no object)

* "magloloko" (future tense, no object)

* "niloloko" (present tense, with object)

* "naglolokohan" (playing around: present tense)

* "maglolokohan" (playing around: future tense)

* "panloloko" (fooling: gerund)

* "manloloko" (liar/person doing the fooling)

Also, "lolo" is a Filipino word for "grandpa". I say Filipino rather than Tagalog because this one was picked up from Spanish — though the Chrome language detector can't distinguish between the two. This probably isn't enough to influence the token frequency breakdown, at least not when compared to the various combinations of verbs starting with "lo".
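A quick check of this hypothesis: every form in the list above contains the 4-gram "lolo", created by the repeated first syllable, while none contains "olol", which fits the post's observation that "olol" is absent from the table. This only shows that the strings overlap; it says nothing about how often these forms occur in real Tagalog text or how the detector weights them. `four_grams` is a hypothetical helper that ignores word-boundary markers.

```python
# Verb forms of "loko" listed in the comment above.
FORMS = ["nagloloko", "magloloko", "niloloko", "naglolokohan",
         "maglolokohan", "panloloko", "manloloko"]


def four_grams(word: str) -> set[str]:
    """All overlapping four-letter substrings of a word."""
    return {word[i:i + 4] for i in range(len(word) - 3)}


with_lolo = [w for w in FORMS if "lolo" in four_grams(w)]
with_olol = [w for w in FORMS if "olol" in four_grams(w)]
print(f"{len(with_lolo)} of {len(FORMS)} forms contain 'lolo'")
print(f"{len(with_olol)} of {len(FORMS)} forms contain 'olol'")
```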

Kevin Finity said,

October 31, 2012 @ 11:40 am

You can find a lot of the scoring details by exploring DoQuadScoreV3() in cldutil.cc.

So if you look at the first table entry you've got up there, 0x6281082c goes with "lolo" without any prefix or suffix. Using QuadHashV25(), the 4-gram 'lolo' hashes to 6281, which is a key to the other half (the value) 082c. If you look that up in the other (16k) table, entry 082c is 0x21003a02 (which the comments say is eu.tl…_220).
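Kevin's reading of that entry amounts to treating each 32-bit word as two 16-bit halves: a hash key and an index into the second table. The sketch only performs that split on the quoted value; `split_entry` is a hypothetical name, it does not reimplement QuadHashV25(), and the layout is inferred from this comment rather than from any CLD documentation.

```python
def split_entry(entry: int) -> tuple[int, int]:
    """Split a 32-bit quad-table word into (hash key, second-table index).

    Assumed layout, following the comment above: high 16 bits are the
    4-gram's hash key, low 16 bits index the smaller (16k) table.
    """
    key = entry >> 16
    index = entry & 0xFFFF
    return key, index


key, index = split_entry(0x6281082C)
print(hex(key), hex(index))  # 0x6281 0x82c
```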

Other comments say it's a "4-byte entry of 3 language numbers and one probability subscript".

The language numbers come from languages.cc, so 21 (dec 33) is Tagalog, 00 is UNKNOWN, and 03 is Dutch.

The remaining "a02" indicates the probabilities for the 3 languages. The 'a' (10) digit goes with Tagalog, the 0 goes with unknown, and 2 goes with Dutch. The probabilities for each quadgram are added to the running total for those 3 languages so far.

Most of the rest is pretty opaque to me, since I don't really have time to look at this in depth, but presumably at some point it takes the language with the top probability and presents that as the best guess.
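The scoring Kevin describes (add each quadgram's per-language values to running totals, then report the language with the largest total) can be sketched as follows. This is a simplification: the language names and values are the illustrative ones from the 'lolo' entry discussed above, the real detector's handling of probability subscripts, reliability and text length is not modeled, and `best_language` is a hypothetical name.

```python
from collections import defaultdict


def best_language(entries: list[list[tuple[str, int]]]) -> str:
    """Sum per-language scores over all quadgram lookups; return the top language.

    entries: one list of (language, score) pairs per quadgram found in the text.
    Raises ValueError if no quadgram matched anything.
    """
    totals: dict[str, int] = defaultdict(int)
    for pairs in entries:
        for lang, score in pairs:
            totals[lang] += score
    return max(totals, key=lambda lang: totals[lang])


# Hypothetical lookups: the 'lolo' entry as described above, hit three times.
lolo = [("Tagalog", 10), ("UNKNOWN", 0), ("Dutch", 2)]
print(best_language([lolo, lolo, lolo]))  # Tagalog
```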

Endangered Languages and Cultures » Blog Archive » London tweets said,

November 1, 2012 @ 4:18 am

[…] tip Mark Liberman at Language Log] […]

Rogier said,

November 1, 2012 @ 5:34 am

An excellent example of how domain mismatch causes problems for classifiers.

I don't know whether this is an obvious point, but the frequency (and conditional probability) of "lolo" in Tagalog by itself doesn't matter much. It's the frequency of "lolo" in Tagalog compared to other languages (roughly, the posterior probability) that decides which language gets chosen. (I'm assuming a uniform prior.) If "lolo" occurs infrequently in Tagalog, but never in other languages, its presence could still form the clincher argument.
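A toy version of Rogier's point: under a uniform prior the prior cancels, so the posterior for each language is just that language's likelihood divided by the sum over all languages. The frequencies below are invented for illustration, not measured; a rare 4-gram that never occurs elsewhere still gets posterior 1.

```python
def posterior(likelihoods: dict[str, float]) -> dict[str, float]:
    """P(language | 4-gram) under a uniform prior over the listed languages."""
    total = sum(likelihoods.values())
    return {lang: p / total for lang, p in likelihoods.items()}


# Hypothetical per-language frequencies of "lolo" (not measured values).
freq = {"Tagalog": 0.0001, "English": 0.0, "Dutch": 0.0}
print(posterior(freq))  # {'Tagalog': 1.0, 'English': 0.0, 'Dutch': 0.0}
```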

[(myl) This is true; but the desire to have a "compact" classifier — based on a table of 64k 4-grams out of the 95156^4 = 8.198695e+19 possible Unicode 4-grams — means that the 4-grams that are used will be both discriminative and also relatively common.]
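The arithmetic in this reply can be checked directly. The sketch takes the 95,156-character figure from the reply and reads "64k" as 65,536 entries, which is an assumption about the exact table size.

```python
ALPHABET = 95_156            # possible characters, as in the reply above
TABLE_ENTRIES = 64 * 1024    # assumption: "64k" read as 65,536

possible = ALPHABET ** 4
print(f"{possible:.6e}")                  # 8.198695e+19
print(f"{TABLE_ENTRIES / possible:.1e}")  # fraction of 4-grams in the table
```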

I haven't looked at the code at all. But my hypothesis would be that instead of a generative model, this uses a discriminative model with strong regularisation. A generative model gives the probability of the character sequence given the language; a discriminative model directly gives the probability of the language given the character sequence. In this case, it would have the shape of an n-gram model, but the weights don't have to add up to 1. The regularisation would force uninformative weights to exactly 1 (say), hopefully without much loss in classification. The hash table would only contain the weights that are not 1, which represent, roughly, the clincher arguments.
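Rogier's hypothesis can be made concrete with a toy log-linear model. This is his conjecture, not a description of what CLD does: the weights are invented, and `WEIGHTS` and `language_scores` are hypothetical names. The point is only that a table storing just the non-1 weights is enough to score any text, because every missing 4-gram multiplies each language's score by 1.

```python
import math

# Hypothetical sparse weight table: only informative 4-grams are stored.
# Any (4-gram, language) pair missing from the table has weight 1.
WEIGHTS = {
    ("lolo", "Tagalog"): 4.0,
    ("haha", "Tagalog"): 2.0,
    ("the_", "English"): 3.0,
}


def language_scores(quads: list[str], languages: list[str]) -> dict[str, float]:
    """Normalised product of per-4-gram weights, computed in log space."""
    logs = {
        lang: sum(math.log(WEIGHTS.get((q, lang), 1.0)) for q in quads)
        for lang in languages
    }
    top = max(logs.values())  # subtract the max for numerical stability
    unnorm = {lang: math.exp(v - top) for lang, v in logs.items()}
    z = sum(unnorm.values())
    return {lang: v / z for lang, v in unnorm.items()}


print(language_scores(["lolo", "olol", "lolo"], ["Tagalog", "English"]))
```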

Mapping language, language maps said,

November 3, 2012 @ 1:35 pm

[…] huh? You can really get a good idea of the linguistic landscape of London. Although there were some potential methodological problems with this study, I still think it's a great way to present this […]