There's a passage in James Gleick's "Auto Crrect Ths!", NYT 8/4/2012, that's properly spelled but in need of some content correction:

If you type “kofee” into a search box, Google would like to save a few milliseconds by guessing whether you’ve misspelled the caffeinated beverage or the former United Nations secretary-general. It uses a probabilistic algorithm with roots in work done at AT&T Bell Laboratories in the early 1990s. The probabilities are based on a “noisy channel” model, a fundamental concept of information theory. The model envisions a message source — an idealized user with clear intentions — passing through a noisy channel that introduces typos by omitting letters, reversing letters or inserting letters.

“We’re trying to find the most likely intended word, given the word that we see,” Mr. [Mark] Paskin says. “Coffee” is a fairly common word, so with the vast corpus of text the algorithm can assign it a far higher probability than “Kofi.” On the other hand, the data show that spelling “coffee” with a K is a relatively low-probability error. The algorithm combines these probabilities. It also learns from experience and gathers further clues from the context.

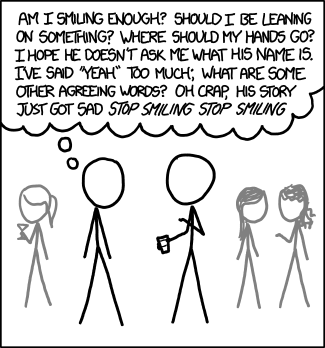

The same probabilistic model is powering advances in translation and speech recognition, comparable problems in artificial intelligence. In a way, to achieve anything like perfection in one of these areas would mean solving them all; it would require a complete model of human language. But perfection will surely be impossible. We’re individuals. We’re fickle; we make up words and acronyms on the fly, and sometimes we scarcely even know what we’re trying to say.
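As the quoted passage describes it, the corrector combines two quantities: how common a candidate word is, and how likely the observed typing is as a corruption of that candidate. A minimal sketch of that combination follows. It is an illustration only, not Google's method: the word counts, the `PER_EDIT_ERROR` constant, and the function names are all made up, and plain edit distance stands in for a real model of typing errors.

```python
from math import log

# Hypothetical unigram counts standing in for a large text corpus.
WORD_COUNTS = {"coffee": 9000, "kofi": 300, "toffee": 700}

# Assumed cost of one character-level slip; an invented value, not measured.
PER_EDIT_ERROR = 0.01


def edit_distance(a, b):
    """Levenshtein distance: insertions, deletions, substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute or match
        prev = cur
    return prev[-1]


def log_prior(word):
    """log P(intended word), estimated from the toy counts."""
    return log(WORD_COUNTS[word] / sum(WORD_COUNTS.values()))


def log_channel(typed, intended):
    """Crude, unnormalized log P(typed | intended): each edit costs the same."""
    return edit_distance(typed, intended) * log(PER_EDIT_ERROR)


def correct(typed):
    """Pick the candidate maximizing log P(word) + log P(typed | word)."""
    return max(WORD_COUNTS,
               key=lambda w: log_prior(w) + log_channel(typed, w))


print(correct("kofee"))
```

With these toy numbers, "kofee" is two edits away from both "coffee" and "kofi", so the channel term is a tie and the word-frequency term decides the outcome. A channel model trained on real error data would weight a K-for-C substitution differently from a dropped letter, which is the second kind of evidence the passage mentions.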
