'lololololol' ≠ Tagalog

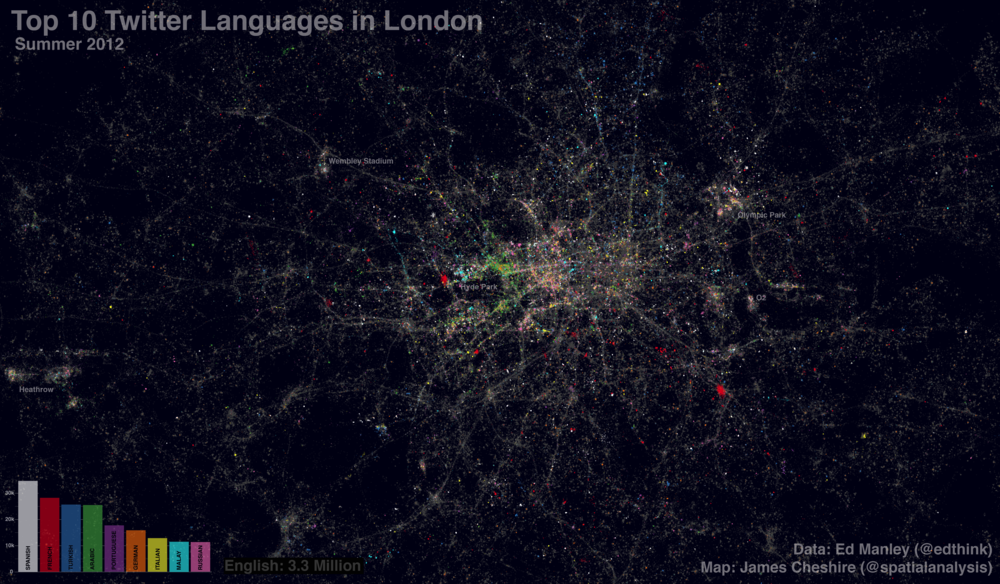

Ed Manley, "Detecting Languages in London's Twittersphere", UrbanMovements 10/22/2012:

Over the last couple of weeks, and as a bit of a distraction from finishing off my PhD, I've been working with James Cheshire looking at the use of different languages within my aforementioned dataset of London tweets.

I've been handling the data generation side, and the method really is quite simple. Just like some similar work carried out by Eric Fischer, I've employed the Chromium Compact Language Detector – an open-source Python library adapted from the Google Chrome algorithm to detect a website's language – in detecting the predominant language contained within around 3.3 million geolocated tweets, captured in London over the course of this summer. […]

One issue with this approach that I did note was the surprising popularity of Tagalog, a language of the Philippines, which initially was identified as the 7th most tweeted language. On further investigation, I found that many of these classifications included just uses of English terms such as 'hahahahaha', 'ahhhhhhh' and 'lololololol'. I don't know much about Tagalog but it sounds like a fun language. Nevertheless, Tagalog was excluded from our analysis.
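The failure described above suggests one possible alternative to dropping Tagalog wholesale: screen out tweets that consist only of laughter or drawn-out interjections before they reach the language detector. The sketch below is not what Manley did (Tagalog was simply excluded), and it makes no calls to the Compact Language Detector. The function names, the regular expressions, and the thresholds (a unit of one to three letters repeated at least three times, or a single letter repeated at least four times) are all assumptions chosen for illustration.

```python
import re

# Hypothetical heuristic, not part of any language-detection library.
# A token counts as "expressive noise" if it is either:
#   - a short unit (1-3 letters) repeated at least three times, optionally
#     followed by a fragment of up to two letters ('hahahahaha', 'lololololol'), or
#   - any token containing one letter repeated four or more times ('ahhhhhhh').
_REPEATED_UNIT = re.compile(r"^([a-z]{1,3}?)\1{2,}[a-z]{0,2}$")
_LONG_RUN = re.compile(r"([a-z])\1{3,}")


def is_noise_token(token: str) -> bool:
    """Return True if a single lowercase token looks like laughter or elongation."""
    return bool(_REPEATED_UNIT.match(token) or _LONG_RUN.search(token))


def is_noise_only(text: str) -> bool:
    """Return True if the text has at least one token and every token is noise."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return bool(tokens) and all(is_noise_token(t) for t in tokens)


def filter_for_detection(tweets):
    """Yield only tweets worth passing to a language detector (assumed downstream step)."""
    for tweet in tweets:
        if not is_noise_only(tweet):
            yield tweet
```

A filter like this only looks at ASCII letters, so it would say nothing about laughter written in other scripts, and it would still pass a tweet such as "hahaha that was great" through to the detector, since that tweet contains ordinary words as well.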
