Archive for Artificial intelligence

Machine translators vs. human translators

"Will AI make translators redundant?" By Rachel Melzi, Inquiry (3 Dec 2024)

The author is a freelance Italian-to-English translator of long standing, so she is well equipped to respond to the question she has raised. Having read through her article and the companion piece on AI in general (e.g., ChatGPT and other LLMs) in the German magazine Wildcat (featuring Cybertruck [10/21/23]) (the article is available in English translation [11/10/23]), I respond to the title question with a resounding "No!". My reasons will be given throughout this post, but particularly at the very end.

The author asks:

How good is AI translation?

Already in 2020, two thirds of professional translators used “Computer-assisted translation” or CAT (CSA Research, 2020). Whereas “machine translation” translates whole documents, and thus is meant to replace human translation, CAT supports it: the computer makes suggestions on how to translate words and phrases as the user proceeds through the original text. The software can also remind users how they have translated a particular word or phrase in the past, or can be trained in a specific technical language, for instance, by feeding it legal or medical texts. CAT software is currently based on Neural Machine Translation (NMT) models, which are trained on bilingual text data to recognise patterns across different languages. This differs from Large Language Models (LLM), such as ChatGPT, which are trained using a broader database of all kinds of text data from across the internet. As a result of their different databases, NMTs are more accurate at translation and LLMs are better at generating new text.
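
The "remind users how they have translated a particular word or phrase in the past" feature can be pictured as a toy translation-memory lookup. This is only a minimal sketch under stated assumptions: the `TranslationMemory` class, the 0.75 similarity threshold, and the use of Python's `difflib.SequenceMatcher` for fuzzy matching are hypothetical illustrative choices, not how any particular CAT product works.

```python
from difflib import SequenceMatcher
from typing import List, Optional, Tuple


class TranslationMemory:
    """Hypothetical in-memory translation memory: stores earlier
    source/target segment pairs and suggests the closest earlier match."""

    def __init__(self, threshold: float = 0.75):
        # Assumed minimum similarity (0..1) for a "fuzzy match" suggestion.
        self.threshold = threshold
        self.pairs: List[Tuple[str, str]] = []

    def add(self, source: str, target: str) -> None:
        # Record how a segment was translated, for later reuse.
        self.pairs.append((source, target))

    def suggest(self, source: str) -> Optional[Tuple[str, str, float]]:
        # Return (earlier source, earlier translation, similarity) for the
        # most similar stored segment, or None if none reaches the threshold.
        best: Optional[Tuple[str, str, float]] = None
        for prev_src, prev_tgt in self.pairs:
            score = SequenceMatcher(None, source.lower(), prev_src.lower()).ratio()
            if best is None or score > best[2]:
                best = (prev_src, prev_tgt, score)
        if best is not None and best[2] >= self.threshold:
            return best
        return None


tm = TranslationMemory()
tm.add("Il contratto è firmato dalle parti.", "The contract is signed by the parties.")
tm.add("La riunione è rinviata.", "The meeting is postponed.")

# A new sentence that closely resembles one translated earlier.
match = tm.suggest("Il contratto è firmato da entrambe le parti.")
if match:
    prev_src, prev_tgt, score = match
    print(f"{score:.2f}  {prev_src!r} -> {prev_tgt!r}")
```

The lookup only offers the earlier translation as a starting point; the translator still has to adapt it to the new wording, which is the supporting role the passage assigns to CAT.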

AIO: brain extension

I've only been using Artificial Intelligence Overview (AIO) for about half a year. Actually, I don't really "use" it. I ask Google a question, often quite complicated but succinctly stated, and AIO jumps in and takes over my search, boiling things down to a manageable framework. As I've explained in earlier posts, AIO has improved rapidly, its answers becoming increasingly relevant, organized, and, yes, I dare say, literate. It's also becoming quicker and more confident. Now it doesn't have to remind me so often that it is only "experimental".

AI copy editing crapping?

"Evolution journal editors resign en masse to protest Elsevier changes", Retraction Watch 12/27/2024:

All but one member of the editorial board of the Journal of Human Evolution (JHE), an Elsevier title, have resigned, saying the “sustained actions of Elsevier are fundamentally incompatible with the ethos of the journal and preclude maintaining the quality and integrity fundamental to JHE’s success.” […]

Among other moves, according to the statement, Elsevier “eliminated support for a copy editor and special issues editor,” which they interpreted as saying “editors should not be paying attention to language, grammar, readability, consistency, or accuracy of proper nomenclature or formatting.” The editors say the publisher “frequently introduces errors during production that were not present in the accepted manuscript:”

"In fall of 2023, for example, without consulting or informing the editors, Elsevier initiated the use of AI during production, creating article proofs devoid of capitalization of all proper nouns (e.g., formally recognized epochs, site names, countries, cities, genera, etc.) as well as italics for genera and species. These AI changes reversed the accepted versions of papers that had already been properly formatted by the handling editors. This was highly embarrassing for the journal and resolution took six months and was achieved only through the persistent efforts of the editors. AI processing continues to be used and regularly reformats submitted manuscripts to change meaning and formatting and requires extensive author and editor oversight during proof stage."

Grok (mis-)counting letters again

In a comment on "AI counting again" (12/20/2024), Matt F asked "Given the misspelling of ‘When’, I wonder how many ‘h’s the software would find in that sentence."

So I tried it — and the results are even more spectacularly wrong than Grok's pitiful attempt to count instances of 'e', where the correct count is 50 but Grok answered "21".
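
For comparison, counting letters is trivial for ordinary code. A minimal sketch in Python; the sentence below is made up for illustration and is not the one Grok was given, and counting case-insensitively (so that "W" and "w" are the same letter) is an assumption about what "how many h's" means.

```python
from collections import Counter


def letter_counts(text: str) -> Counter:
    """Count each alphabetic character in text, ignoring case."""
    return Counter(ch for ch in text.lower() if ch.isalpha())


# Hypothetical example sentence, not the one from the earlier post.
sentence = "When the three hens hatched, the whole henhouse cheered."
counts = letter_counts(sentence)
print("h:", counts["h"])
print("e:", counts["e"])
```

Because the function tallies every letter in one pass, the same `counts` object answers the 'h' question and the 'e' question alike.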

AI counting again

Following up on "AIs on Rs in 'strawberry'" (8/24/2024), "Can Google AI count?" (9/21/2024), "The 'Letter Equity Task Force'" (12/5/2024), etc., I thought I'd try some of the great new AI systems accessible online. The conclusion: they still can't count, though they can do lots of other clever things.
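
The strawberry question, by contrast, takes deterministic code a single pass over the characters. A minimal sketch that also reports where each 'r' falls (0-based positions), so the answer can be checked by eye:

```python
word = "strawberry"

# Walk the word character by character and record every position holding 'r'.
positions = [i for i, ch in enumerate(word) if ch == "r"]

print(len(positions), positions)
```

The built-in `word.count("r")` gives the same total; listing the positions simply shows that the two adjacent r's in "berry" are both counted.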

The Knowledge

Chatting with my London cabbie on a longish ride, I was intrigued by how he frequently referred to "the Knowledge". He did so respectfully and reverently, as though it were a sacred catechism he had mastered after years of diligent study. Even though he was speaking, it always sounded as though it came with a capital letter at the beginning. And rightly so, because it is holy writ for London cabbies.

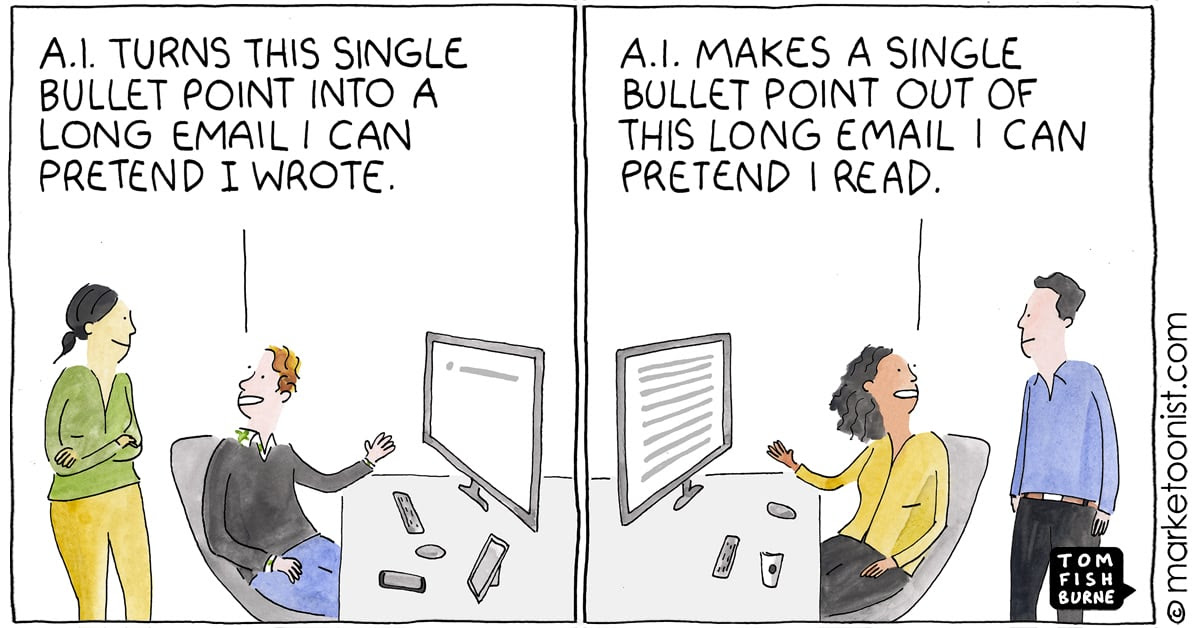

More AI satire

OpenAI comms: [underspecific hype-y 'big tings coming!!' pls like and subscribe]

Google comms: [corporate vagueness about Gemini3-0011 v2 FINAL.docx on Vertex available to 14 users]

GDM comms: [we have simulated a rat's brain capable of solving 4D chess, but we're not sure why]…

(Séb Krier, @sebkrier, December 3, 2024)

The "Letter Equity Task Force"

Previous LLOG coverage: "AI on Rs in 'strawberry'", 8/28/2024; "'The cosmic jam from whence it came'", 9/26/2024.

Current satire: Alberto Romero, "Report: OpenAI Spends Millions a Year Miscounting the R’s in ‘Strawberry’", Medium 11/22/2024.

OpenAI, the most talked-about tech start-up of the decade, convened an emergency company-wide meeting Tuesday to address what executives are calling “the single greatest existential challenge facing artificial intelligence today”: Why can’t their models count the R’s in strawberry?

The controversy began shortly after the release of GPT-4, in March 2023, when users on Reddit and Twitter discovered the model’s inability to count the R’s in strawberry. The responses varied from inaccurate guesses to cryptic replies like, “More R’s than you can handle.” In one particularly unhinged moment, the chatbot signed off with, “Call me Sydney. That’s all you need to know.”

AI Overview (sometimes) admits that it doesn't have an answer

When I first encountered AI Overview (AIO) about half a year ago, I was amazed by how it would whirl and swirl while searching for an answer to whatever query I had entered into the Google search engine. It would usually find a helpful answer within a second.

As the months passed, the response time became more rapid (usually instantaneous), the answers better organized and almost always helpful, but sometimes AIO would simply not answer.

About a week ago, I was stunned when AIO occasionally thought for a split second and then declared that it didn't have an answer for what I had asked about.

Searle's "Chinese room" and the enigma of understanding

In this comment on "'Neutrino Evidence Revisited (AI Debates)' | Is Mozart's K297b authentic?" (11/13/24), I questioned whether John Searle's "Chinese room" argument was intelligently designed and encouraged those who encounter it to reflect on what it did and did not demonstrate.

In the same comment, I also queried the meaning of "understand" and its synonyms ("comprehend", and so forth).

Both the "Chinese room" and "understanding" had been raised by skeptics of AI, so here I'm treating them together.

"Neutrino Evidence Revisited (AI Debates)" | Is Mozart's K297b authentic?

[This is a guest post by Conal Boyce]
