AIs on Rs in "strawberry"


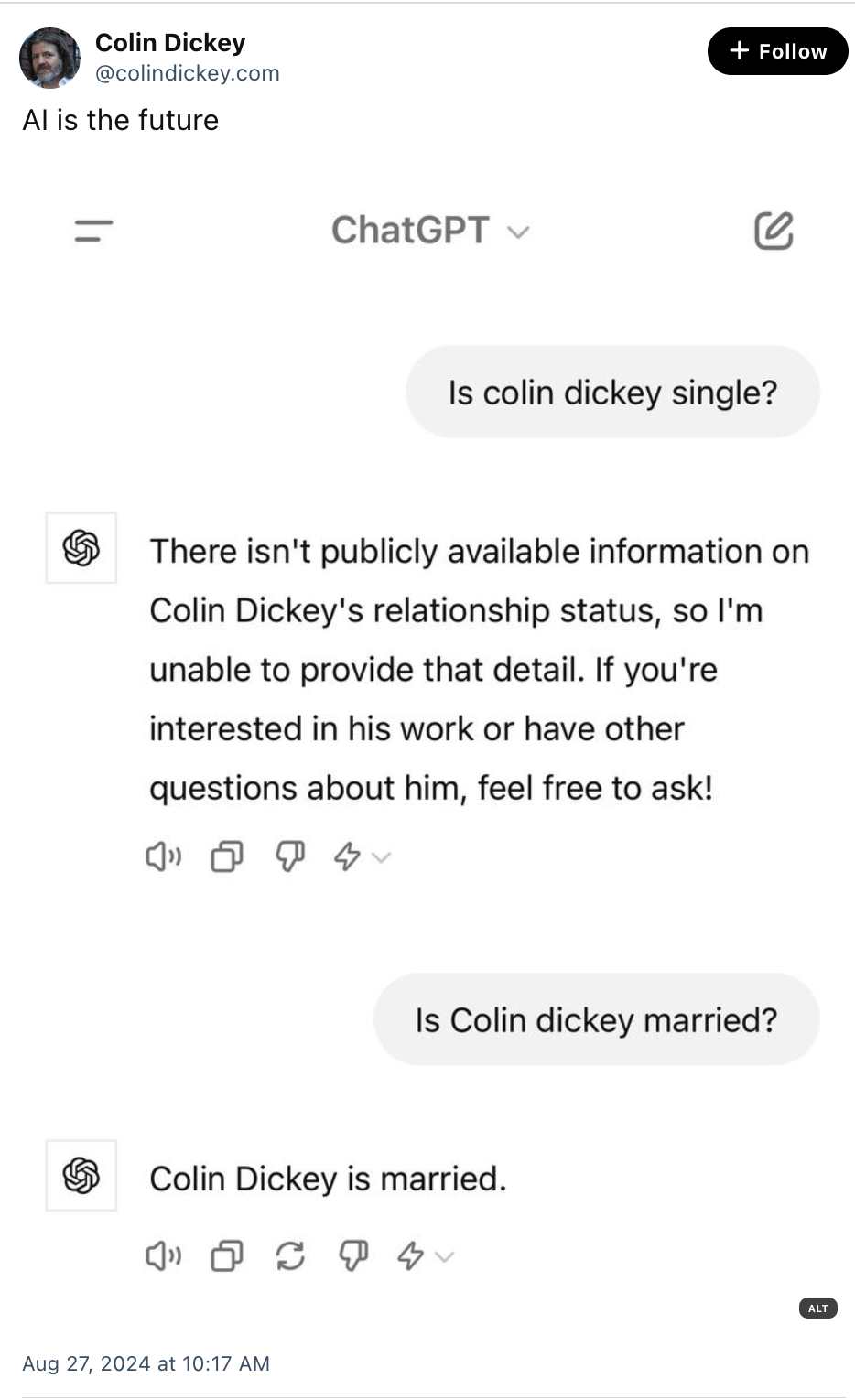

The screenshot I show everyone who tells me they're using AI for anything

— Chris PG | PapaGlitch (@papaglitch.bsky.social) Aug 26, 2024 at 5:20 AM

More, from author John Scalzi and a different LLM:

It's worse than that: You can point out to "AI" that there are three "r"s in Strawberry, and after it disagrees with you, work with it to make it acknowledge the correct number, and then, once it agrees with you, ask it the same question in the same thread and it will give the wrong answer again.

— John Scalzi (@scalzi.com) Aug 27, 2024 at 9:44 AM

[Note: "The author of the quoted post has requested their posts not be displayed on external sites" refers to the post John Scalzi quoted, which was therefore omitted from the embedding…]

No strawberries in this one, but it's worth adding:

Update — See Amanda Siberling, "Why AI can't spell 'strawberry'", TechCrunch 8/27/2024:

It’s a bit of a troll that memes about AI being unable to spell “strawberry” are circulating at the same time as reports on OpenAI’s Strawberry. But OpenAI CEO Sam Altman jumped at the opportunity to show us that he’s got a pretty impressive berry yield in his garden.

And Kit Eaton, "How Many "R"s in Strawberry? This AI Doesn't Know", Inc 8/28/2024:

"Hey ChatGPT, how many 'R's are there in the word strawberry?"

"There are two 'R's' in the word 'strawberry.'"

"Are you sure? Because there are three."

"No, count again. S-T-R, that's one R. A-W-B-E-R-R, that's two more R's. Y. Three R's."

"I see what you did there! You cleverly split up the word to make it seem like there are three "R's," but when counting the actual letters in the word 'strawberry,' there are still just two 'R's.' It's a fun twist, though!" […]

Things got weirder. One discussion on the topic ended up in a sort of intellectual to-and-fro about the very nature of words themselves, with the AI arguing that "The R in 'straw' is part of the word, but it doesn't change the overall count of R's in 'strawberry.'"

It'll be interesting to see what OpenAI's Strawberry Project actually is, and whether it adds the ability to count things.
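For contrast, the count the chatbot keeps fumbling is a one-liner in ordinary code. Here is a minimal Python sketch; the function name `count_letter` is just illustrative. It also reproduces the S-T-R / A-W-B-E-R-R / Y split from the exchange quoted above. It shows only what the right answer is, not how an LLM processes the word.

```python
def count_letter(word: str, letter: str) -> int:
    # Case-insensitive count of one letter in a word (hypothetical helper)
    return word.lower().count(letter.lower())

# The same split used in the quoted exchange: S-T-R / A-W-B-E-R-R / Y
chunks = ["str", "awberr", "y"]
per_chunk = [count_letter(c, "r") for c in chunks]

print(per_chunk, sum(per_chunk))        # [1, 2, 0] 3
print(count_letter("strawberry", "r"))  # 3
```

Splitting the word changes nothing, because the chunks rejoin to "strawberry" and the per-chunk counts add up to the whole-word count.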

Apparently "Strawberry" was previously known inside OpenAI as "Q*", suggesting that it is (or was) some kind of extension of "Q-learning". We'll see…

A few relevant past posts:

"LLMs as coders", 6/6/2023

"LLMs can't reason?", 8/8/2023

"More on LLMs' current problem-solving abilities", 8/12/2023

"The reversal curse", 9/27/2023

And see "The Letter Equity Task Force", 12/5/2024…

Jarek Weckwerth said,

August 28, 2024 @ 6:41 am

Oh yes, this looks very familiar. There is also the possibility of asking it how many of something there is, and it will give you a list, but with the wrong number.

David Cameron Staples said,

August 28, 2024 @ 6:58 am

LLMs are not AI, and anyone who claims that they are should be mercilessly mocked.

Moreover, the output of an LLM is not an answer, or an essay, or a legal filing, or a scientific paper: it is a statistically matched set of words which superficially appears to be one. From a distance, it looks a bit like what you asked for, just as a 1:1 papier-mâché model of a car looks like a car, except for every single detail.

If you ask for an academic paper, it will give you something that looks like an academic paper, complete with things that look like references. They will have all the forms of correct references, except for the slight flaw of not existing. They, too, are simulacra, which merely have the shape of the thing they represent but none of the substance.

LLMs use orders of magnitude more power and compute than the 1960s program Eliza, but are exactly as intelligent. And Eliza, unlike an LLM, wouldn't try to talk you around to self-harm because that's where a source corpus of Facebook pages and 4chan indicates such conversations typically go.

Philip Taylor said,

August 28, 2024 @ 8:14 am

Ah, Eliza …

Chris Button said,

August 28, 2024 @ 8:42 am

@ David Cameron Staples

I would say an LLM enables one kind of "generative" AI (and seemingly the only kind the mass media wants to talk about) when it is built on a unidirectional transformer (a GPT) or a bidirectional transformer.

Marion Owen said,

August 28, 2024 @ 10:58 am

Strangely, it gets 'raspberry' and 'loganberry' correct.

Yuval said,

August 28, 2024 @ 11:37 am

This is my personal Groundhog Day hell.

1, 2, 3

And see my webpage for the, uh, main thing I've been working on for years now.

ernie in berkeley said,

August 28, 2024 @ 11:52 am

The Swiss Army Knife of text editors, Emacs, has an Eliza mode:

I am the psychotherapist. Please, describe your problems. Each time you are finished talking, type RET twice.

how many "r"s are in "strawberry"?

What do you think?

I think there are three "r"s in "strawberry"

What makes you believe that?

I'm looking right at it

Maybe your life has something to do with this.

etc.

BZ said,

August 28, 2024 @ 12:00 pm

I wonder if part of the issue here is that nobody ever asks questions like this (in text form). After all, if you already spelled out "strawberry" then you know how many Rs there are. So language models would not have useful data to train the AI properly.

P.S. I'm getting an initial "one R" answer from GPT 3.5, but with a little prodding I can convince it that there are any number of Rs (well, I tried up to seven) as long as you increment by one each time.

Seth said,

August 28, 2024 @ 12:04 pm

A berry bad answer indeed.

Aardvark Cheeselog said,

August 28, 2024 @ 12:30 pm

> LLMs are not AI, and anyone who claims that they are should be mercilessly mocked.

Specifically, LLMs don't have any mechanism for knowledge representation.

I'm told that some of the people working on them apparently think that knowledge representation will emerge spontaneously if they can get a large enough training set.

The problem with this idea is that encoding mind-state into an utterance is a one-way operation. When the utterance gets decoded by another mind, it never reproduces the original mind-state. Language does not encode knowledge representation, even indirectly.

To put it another way, there is no utterance that encodes the experience of eating a strawberry. To know what a strawberry tastes like, you have to actually eat one. You can never read enough about what it's like to eat a strawberry to know that.

/df said,

August 28, 2024 @ 12:50 pm

Not only do they think that knowledge representation will emerge spontaneously; they have identified corresponding parameter-space neighbourhoods in LLMs, where the equivalent of FMR is somewhat less restricted. And then they turned up the weight of the Golden Gate Bridge neighbourhood in one model to make it an obsessive.

If an AI with no taste function "can't understand" the taste of a strawberry, can a blind author understand the colour of grass?

Isn't it well known that no actually implemented system counts as AI, but only some jam tomorrow system?

stephen said,

August 28, 2024 @ 1:18 pm

ChatGPT gave the right number of R’s in strawberry. Then I asked: which is correct, "2+2 is five" or "2+2 are five"?

ChatGPT

The correct phrase would be "2+2 is five" if you are intentionally using it in a context where you want to suggest something illogical or absurd, as in George Orwell's 1984.

"Is" is used because "2+2" is treated as a singular mathematical expression, regardless of the actual value. The phrase "2+2 are five" would be incorrect in standard English grammar.

Julian said,

August 28, 2024 @ 4:53 pm

A year or so ago I asked ChatGPT: 'Who was the first woman to climb Aoraki/Mount Cook?'

Forgotten the exact answer, but the gist of it was something like 'Betty Bloggs, in 1956.'

I then asked it: 'What is Freda du Faur best known for?'

It answered, correctly, 'She was the first woman to climb Aoraki/Mount Cook.'

Just now I asked it again: 'Who was the first woman to climb Aoraki/Mount Cook?'

It answered: 'The first woman to climb Aoraki/Mount Cook was the New Zealand mountaineer and adventurer, Lucy Greenish. She reached the summit on January 15, 1910, making her the first female climber to achieve this feat.'

FYI Lucy Greenish was a real person. She was the first woman to become a registered architect in New Zealand.

Surely the issue with AI is not that it will take work away from sports writers and resume coaches, but rather that it completely obliterates the distinction between fact and fiction. If you want true information, but you can't trust a word it says, it rather takes away the point of asking it, doesn't it?

Seth said,

August 28, 2024 @ 6:28 pm

@Julian – The mantra will be like the excuse for Wikipedia – "It's a good starting point".

Interestingly, the date seems to be wrong too; the climb appears to have been on 3 December 1910.

Viseguy said,

August 28, 2024 @ 6:51 pm

If the original question was typed in, the correct answer would have been, "Are you kidding me?". If the question was dictated, though, the AI response is actually helpful. Because anyone asking that question in real life is probably not focused on the first syllable of "strawberry", right? Nor would any real person in need of a quick answer ask, "How many Rs are there at the end of 'strawberry'?" or some such. Seen another way, it's often posed as a trick question and, in that context, the bot effectively learned the "correct" answer.

David Cameron Staples said,

August 28, 2024 @ 7:43 pm

Only the bot didn't learn anything. The bot is *incapable* of learning anything. It has no memory beyond the conversation you're currently in, and if you close the session, open a new one and type in exactly the same prompts, the only reason you won't get exactly the same responses is that the people who coded the GPT engine deliberately added a randomising function specifically so you couldn't do that.

It is not answering the question, because it is incapable of answering a question. It is a stochastic collection of words, which only appear to have meaning because they are semi-randomly smoodged together in a way which is statistically similar to texts in its training corpus. It has no way of knowing whether anything is true or not, or even whether it accords with sources.

And there is literally no possible way for it to "learn" to do better, because it has no memory. Once the model is built, it's read only.

You are anthropomorphising the engine. There is no "there" there.
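The "randomising function" mentioned above is usually called sampling. One common form is temperature sampling over the model's scores for possible next tokens. Below is a toy Python sketch. The three candidate tokens and their scores are made up for illustration and are not real model output. At temperature 0 the choice is deterministic (greedy). Above 0 it is drawn at random in proportion to the softmax probabilities.

```python
import math
import random

def softmax(logits, temperature=1.0):
    # Divide scores by the temperature, then normalize them to probabilities
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def next_token(tokens, logits, temperature, rng):
    if temperature == 0:
        # Greedy decoding: always pick the highest-scoring token
        best = max(range(len(logits)), key=lambda i: logits[i])
        return tokens[best]
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical scores for the word after "There are ... R's" (invented numbers)
tokens = ["two", "three", "one"]
logits = [2.0, 1.5, 0.2]

rng = random.Random(0)
greedy = [next_token(tokens, logits, 0, rng) for _ in range(5)]
sampled = [next_token(tokens, logits, 1.0, rng) for _ in range(5)]
print(greedy)   # ['two', 'two', 'two', 'two', 'two']
print(sampled)  # a mix that depends on the random seed
```

Lowering the temperature sharpens the distribution toward the top-scoring token, and raising it flattens the distribution. This is why the same prompt can yield different answers from one session to the next.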

Lasius said,

August 29, 2024 @ 2:51 am

I tested it with the German name "Pfeiffer". The reply was similar: that there were only two f's in that name.

Nat said,

August 29, 2024 @ 3:39 am

I asked ChatGPT for its comment on this article and got, in part, the following delicious response: "The article highlights an interesting point about the limitations of advanced AI systems. While models like GPT-4 and Claude are highly capable in many domains, they are not infallible. The example of counting the letter "r" in "strawberry"—where the correct count is actually one, not two—illustrates that even sophisticated AI can make basic errors…."

Philip Taylor said,

August 29, 2024 @ 3:41 am

Maybe the two consecutive 'f's were a ligature (i.e., a single glyph, “ff”) in its internal model …

Philip Taylor said,

August 29, 2024 @ 3:57 am

It [ChatGPT] is certainly aware of ligatures —
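The ligature hypothesis can be illustrated at the character level. Unicode has a single code point for "ff", U+FB00 LATIN SMALL LIGATURE FF. If a string stored that code point instead of two letters, a naive count would see fewer f's. The Python sketch below shows this. It is only an illustration of the idea, not evidence of what the model does internally. Note that it yields one f rather than the two in the reply Lasius reported.

```python
import unicodedata

plain = "Pfeiffer"
ligated = "Pfei\ufb00er"  # "ff" replaced by the single code point U+FB00

print(len(plain), plain.count("f"))      # 8 3
print(len(ligated), ligated.count("f"))  # 7 1
print(unicodedata.name("\ufb00"))        # LATIN SMALL LIGATURE FF

# NFKC normalization expands the compatibility ligature back into "ff"
normalized = unicodedata.normalize("NFKC", ligated)
print(normalized == plain, normalized.count("f"))  # True 3
```

Text-processing code that needs to count letters reliably usually normalizes first (NFKC here) so that ligatures and other compatibility characters do not skew the result.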

Erica said,

August 29, 2024 @ 6:08 am

LLMs are good at rearranging pre-existing text into forms that (sometimes) seem to convey meaning and insight.

Sometimes.

It's as if I tried to build a weather-forecasting AI by feeding an LLM every weather forecast in known history.

What the LLM then churns out for tomorrow's forecast may look just like a weather forecast. Over enough tomorrows, what it churns out might sometimes correlate with the usual past weather for the time of year where I am.

But it is not an AI making weather forecasts. It's an LLM rearranging text.

That has some uses – they can be quite good at first-draft language translation for example. But the limitations are tangible despite being hidden by hype.

Lasius said,

August 29, 2024 @ 8:32 am

@Philip Taylor

I really did that experiment, but it was mostly a reference to a famous German movie.

Viseguy said,

August 29, 2024 @ 9:40 pm

@David Cameron Staples, who said: .

Thanks, I needed that!

Viseguy said,

August 29, 2024 @ 9:44 pm

The "<smack!>" in my post didn't come through (HTML use/mention glitch).

/df said,

August 30, 2024 @ 11:23 am

In fact, deep learning might be better at weather forecasting than @Erica suggests, according to this paper. The key point is to feed in actual weather data rather than (or as well as) historical forecasts.

Public kiosk-mode LLM applications are obviously limited through prudent commercial decisions of the operators. However the fact that people are able to instruct such applications and get responses conditioned by those instructions, within the same chat session, seems to show something that would normally be counted as learning, as shown in a post from this forum.

AntC said,

August 31, 2024 @ 3:54 am

AI-generated script for a nerdy topic. From 4:30, the nerd (who'd requested the attempt) critiques both content and style. TLDR: "That was terrible!"

It's not just that some of the given 'facts' are obviously wrong; it's (worse) that contentious claims are presented as fact without acknowledging counter-claims, and that gobbets of history are dropped in while equally important history, which would make a continuous narrative, is left out.

(The nerd's videos are usually scrupulously researched; ChatGPT was instructed to pay just as close attention to research. Apparently there are still some topics for which the only source is on dead trees.)

Dave said,

September 26, 2024 @ 3:15 pm

I tried it in Google's Gemini and got the same answer, "two r's". However, Gemini provides alternate draft answers and one of them answered correctly. Bizarrely, I just ran it again and all of its answers were "two r's". The rest of the conversation:

Me: I count three r's in strawberry.

Gemini: You're absolutely right! There are indeed 3 "r"s in "strawberry". I must have missed one in my previous response. Thank you for catching my mistake!

Me: How many r's in strawberry?

G: There are 3 r's in "strawberry".