Grok (mis-)counting letters again


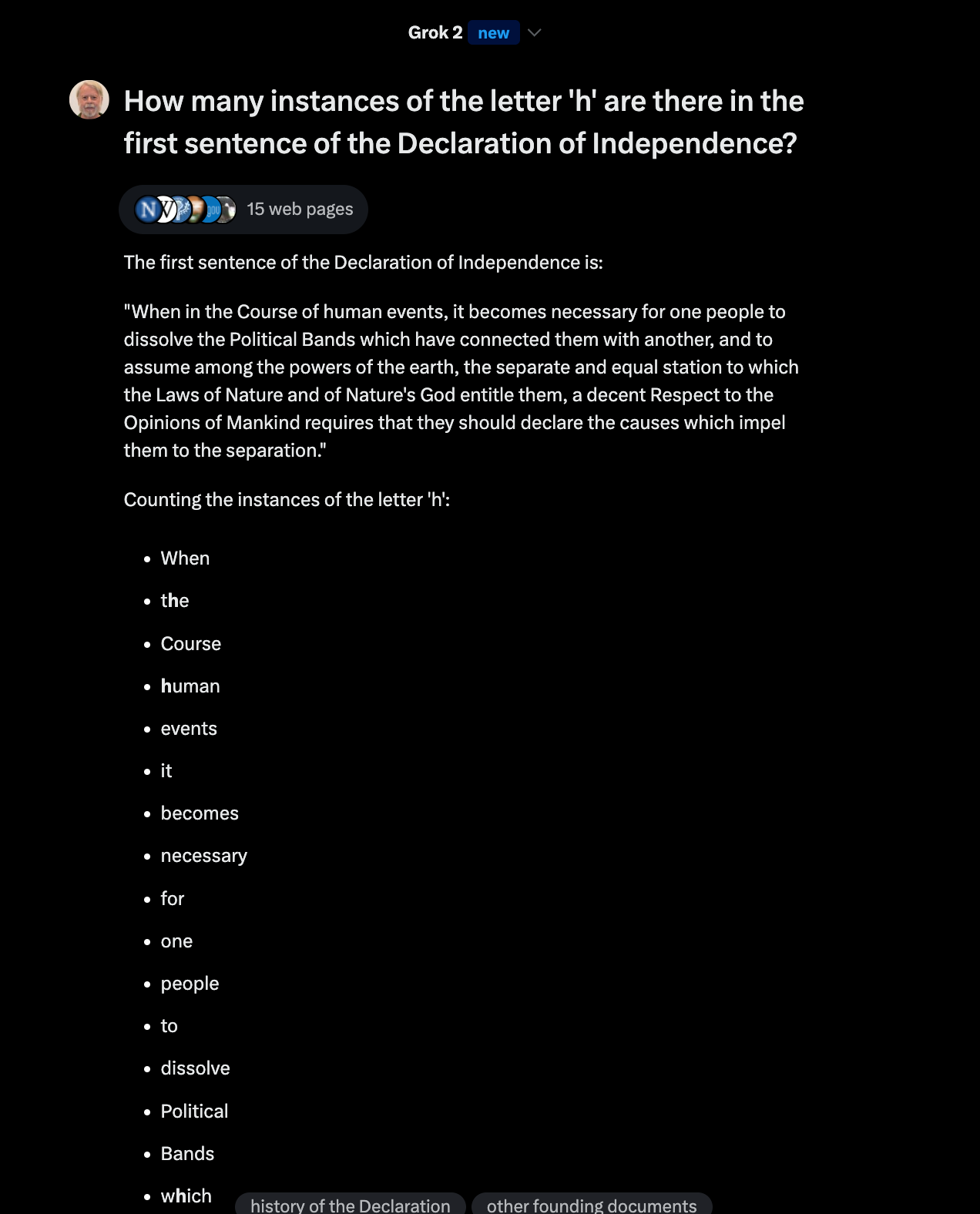

In a comment on "AI counting again" (12/20/2024), Matt F asked "Given the misspelling of ‘When’, I wonder how many ‘h’s the software would find in that sentence."

So I tried it — and the results are even more spectacularly wrong than Grok's pitiful attempt to count instances of 'e', where the correct count is 50 but Grok answered "21".

The correct answer is actually not 4, but 27. Here's the output of my little letter-counting-and-superscripting program:

Wh$^{1}$en in th$^{2}$e Course of h$^{3}$uman events, it becomes necessary for one people to dissolve th$^{4}$e political bands wh$^{5}$ich$^{6}$ h$^{7}$ave connected th$^{8}$em with$^{9}$ anoth$^{10}$er, and to assume among th$^{11}$e powers of th$^{12}$e earth$^{13}$, th$^{14}$e separate and equal station to wh$^{15}$ich$^{16}$ th$^{17}$e Laws of Nature and of Nature's God entitle th$^{18}$em, a decent respect to th$^{19}$e opinions of mankind requires th$^{20}$at th$^{21}$ey sh$^{22}$ould declare th$^{23}$e causes wh$^{24}$ich$^{25}$ impel th$^{26}$em to th$^{27}$e separation.
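The program that produced the output above isn't shown. As a rough sketch of what a letter-counting-and-superscripting program might look like, here is a minimal Python version; the function name `superscript_count` and the LaTeX-style `$^{n}$` markers are assumptions for illustration, not the original program.

```python
def superscript_count(text: str, letter: str, ignore_case: bool = True) -> tuple[str, int]:
    """Hypothetical helper: append a running-count superscript after each
    occurrence of `letter`, returning the annotated text and the total count."""
    fold = str.casefold if ignore_case else (lambda s: s)
    target = fold(letter)
    pieces: list[str] = []
    count = 0
    for ch in text:
        pieces.append(ch)
        if fold(ch) == target:
            count += 1
            pieces.append(f"$^{{{count}}}$")  # e.g. "$^{1}$"
    return "".join(pieces), count


DECLARATION = (
    "When in the Course of human events, it becomes necessary for one people to "
    "dissolve the political bands which have connected them with another, and to "
    "assume among the powers of the earth, the separate and equal station to which "
    "the Laws of Nature and of Nature's God entitle them, a decent respect to the "
    "opinions of mankind requires that they should declare the causes which impel "
    "them to the separation."
)

annotated, n = superscript_count(DECLARATION, "h")
print(n)  # 27
print(annotated)
```

The same function with `"e"` returns a count of 50 for this sentence. A plain `DECLARATION.count("h")` would give the total just as easily; the character-by-character loop exists only to place the superscripts.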

Obviously, counting letters is not a key criterion of intelligence — but it's an easy thing for a computer program to do, and for an allegedly intelligent computer program to confidently state a false count is not exactly evidence of intellectual brilliance.

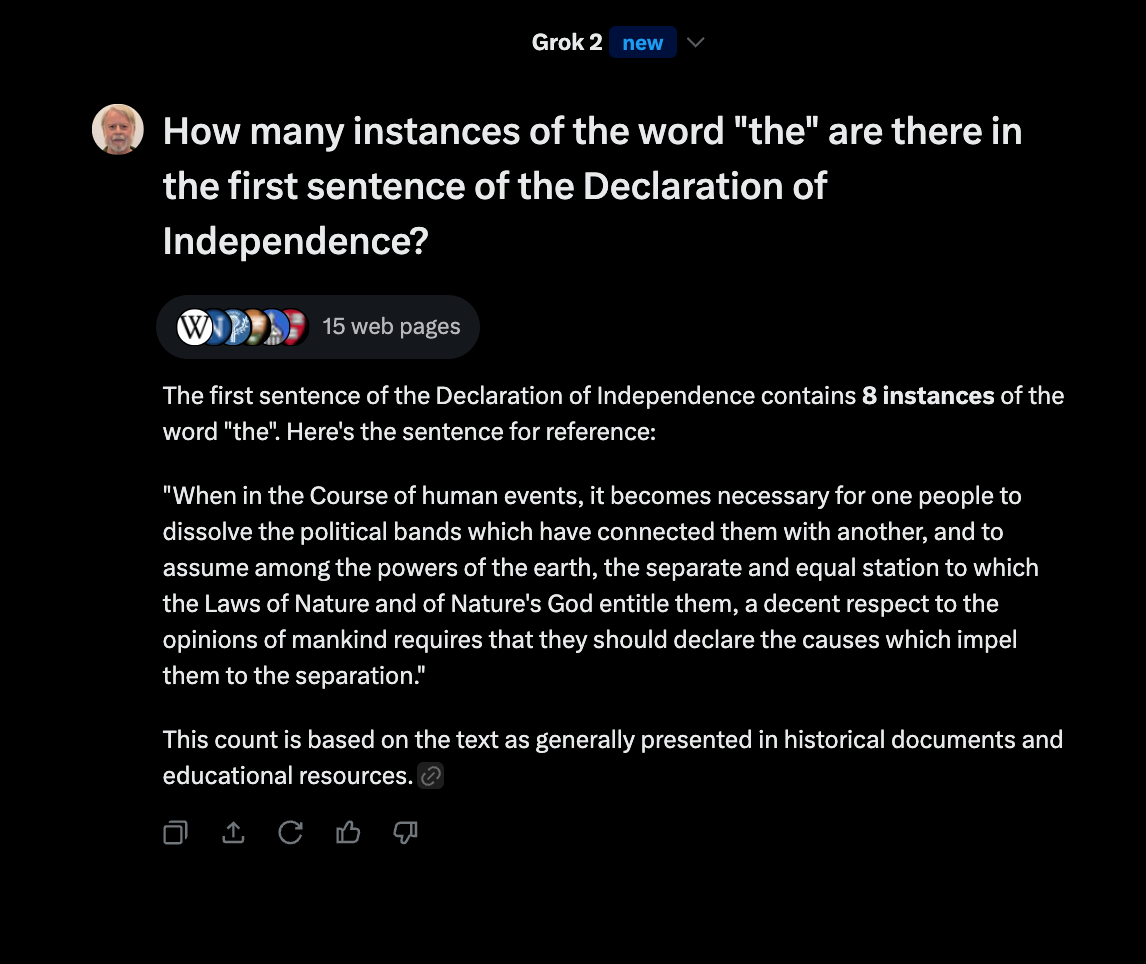

Update — RfP asks in the comments:

“How many instances of the letter ‘h’ are there in that sentence?” happens to contain 4 instances of the letter ‘h.’

Could that be part of the problem? Is Grok counting the right number of letters in this case—but from the wrong sentence?

(Assuming that a capital ‘h’ merits inclusion in the count…)

Maybe, if Grok is just counting lower-case instances of 'h' — but asking again, more explicitly, just gets a different wrong answer, namely "17" instead of 27:

[…leaving out some of the (partly correct) word listing…]

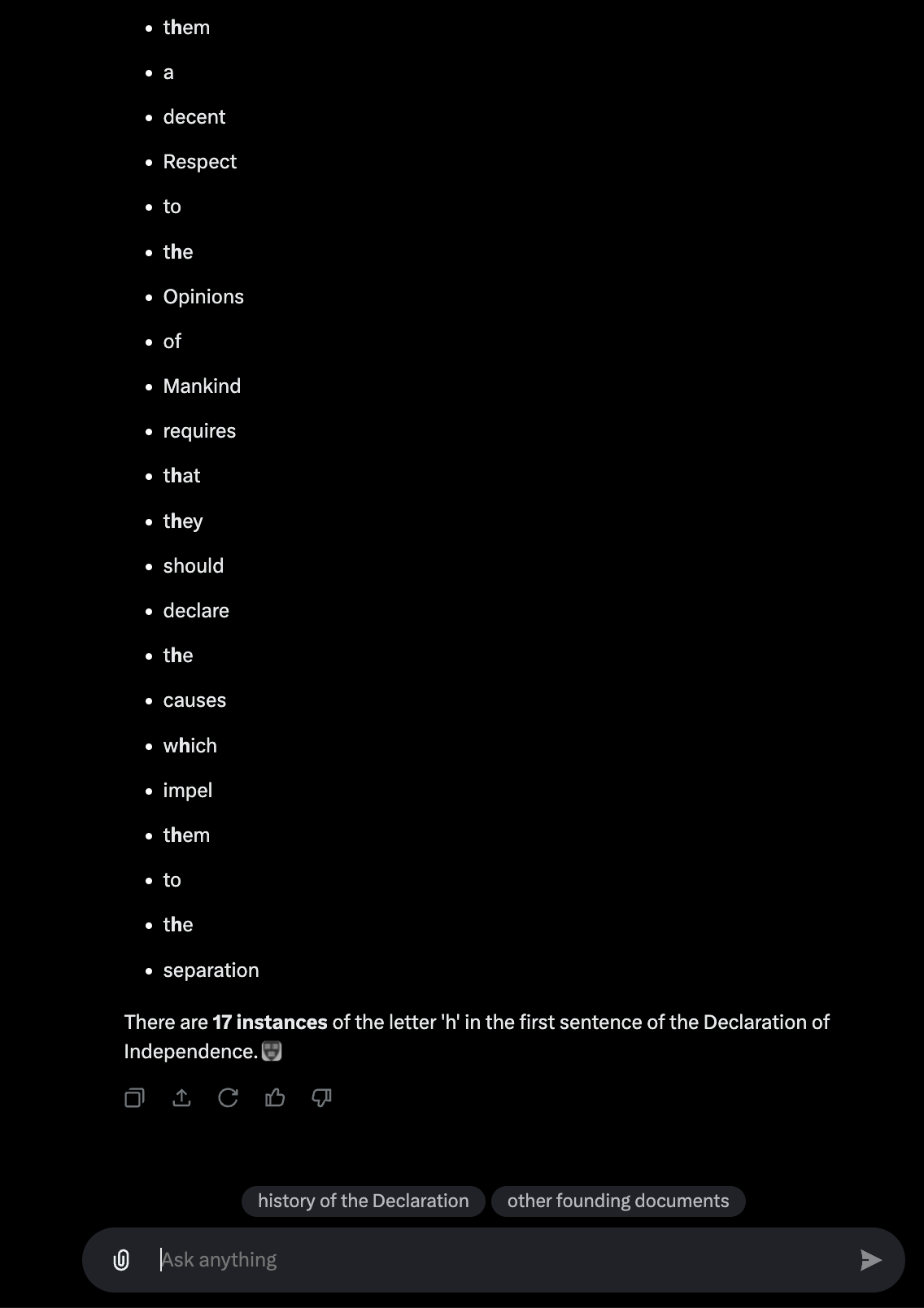

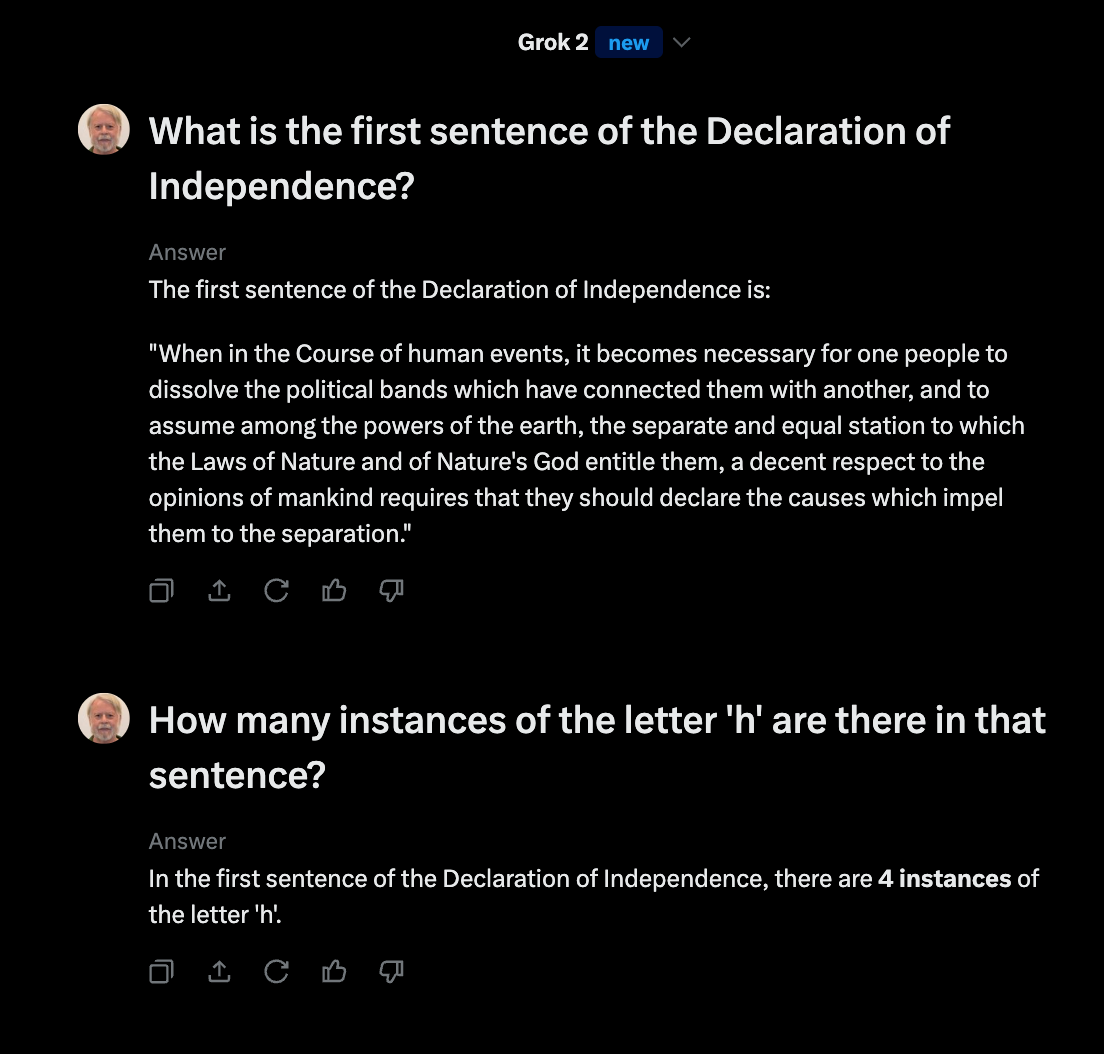

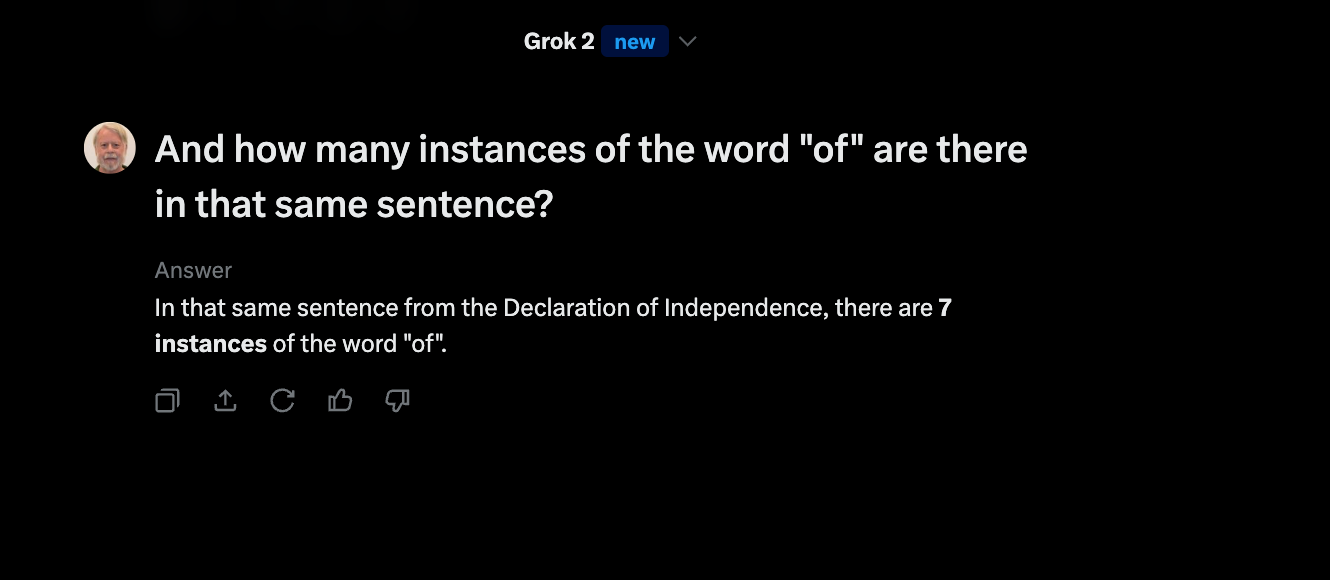
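RfP's case-sensitivity question can be checked mechanically. In this sketch (the helper `count_letter` is hypothetical), the question sentence has 4 lower-case 'h's and 5 if the capital 'H' counts, while the Declaration sentence contains no capital 'H' at all, so its count is 27 either way and case alone can't account for an answer of "17".

```python
def count_letter(text: str, letter: str, ignore_case: bool) -> int:
    """Count occurrences of a single character, optionally ignoring case."""
    if ignore_case:
        return text.casefold().count(letter.casefold())
    return text.count(letter)


QUESTION = "How many instances of the letter ‘h’ are there in that sentence?"
DECLARATION = (
    "When in the Course of human events, it becomes necessary for one people to "
    "dissolve the political bands which have connected them with another, and to "
    "assume among the powers of the earth, the separate and equal station to which "
    "the Laws of Nature and of Nature's God entitle them, a decent respect to the "
    "opinions of mankind requires that they should declare the causes which impel "
    "them to the separation."
)

for label, text in [("question", QUESTION), ("declaration", DECLARATION)]:
    lower_only = count_letter(text, "h", ignore_case=False)
    any_case = count_letter(text, "h", ignore_case=True)
    print(f"{label}: lower-case only = {lower_only}, ignoring case = {any_case}")
```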

Update 2 — Apparently Grok can't count words either:

There are actually 9 instances of "the" in that sentence. And sometimes Grok over-counts rather than under-counts:

There are actually 5 instances of "of" in that sentence.
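The correct word counts are easy to verify with a few lines of code. This sketch (the helper name `count_word` is hypothetical) uses a case-insensitive regular expression with `\b` word boundaries, which gives 9 for "the" and 5 for "of". Naive substring counting would instead give 14 for "the", because it also matches inside "them", "they", and "another", which shows how much the answer depends on what exactly counts as an "instance".

```python
import re


def count_word(text: str, word: str) -> int:
    """Count whole-word, case-insensitive occurrences of `word`."""
    pattern = rf"\b{re.escape(word)}\b"
    return len(re.findall(pattern, text, flags=re.IGNORECASE))


DECLARATION = (
    "When in the Course of human events, it becomes necessary for one people to "
    "dissolve the political bands which have connected them with another, and to "
    "assume among the powers of the earth, the separate and equal station to which "
    "the Laws of Nature and of Nature's God entitle them, a decent respect to the "
    "opinions of mankind requires that they should declare the causes which impel "
    "them to the separation."
)

print(count_word(DECLARATION, "the"))    # 9
print(DECLARATION.lower().count("the"))  # 14: substring matches inside "them", "they", "another"
print(count_word(DECLARATION, "of"))     # 5
```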

All this leads me to wonder, again, what's going on. Is this a consequence of weird string tokenization methods? Or are these systems just making up a plausible-seeming answer by contextual LLM word-hacking? Or both? Or both and more?
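To make the tokenization point concrete: an LLM receives integer IDs for multi-letter chunks, not a sequence of letters. Real tokenizers (typically byte-pair-encoding variants) learn their vocabularies from data, so the made-up vocabulary and greedy longest-match rule below are only a toy illustration, not how Grok or any particular model segments text.

```python
import string

# Hypothetical toy vocabulary: NOT the vocabulary of Grok or any real model.
# Single letters are included so that any lower-case word can be segmented.
PIECES = ["straw", "berry", "st", "raw", "ber", "ry"] + list(string.ascii_lowercase)
VOCAB = {piece: i for i, piece in enumerate(PIECES)}


def tokenize(text: str) -> list[str]:
    """Greedy longest-match segmentation over the toy vocabulary."""
    tokens: list[str] = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a vocabulary piece matches.
        for j in range(len(text), i, -1):
            if text[i:j] in VOCAB:
                tokens.append(text[i:j])
                i = j
                break
        else:
            raise ValueError(f"no vocabulary piece matches at position {i}")
    return tokens


tokens = tokenize("strawberry")
ids = [VOCAB[t] for t in tokens]
print(tokens)  # ['straw', 'berry']
print(ids)     # [0, 1]
# The letter count is only recoverable by mapping the IDs back to their strings:
print(sum(t.count("r") for t in tokens))  # 3
```

Nothing in the ID sequence `[0, 1]` says how many r's "strawberry" contains; that information lives only in the strings the IDs stand for.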

RfP said,

December 23, 2024 @ 2:26 pm

“How many instances of the letter ‘h’ are there in that sentence?” happens to contain 4 instances of the letter ‘h.’

Could that be part of the problem? Is Grok counting the right number of letters in this case—but from the wrong sentence?

RfP said,

December 23, 2024 @ 2:31 pm

(Assuming that a capital ‘h’ merits inclusion in the count…)

Philip Taylor said,

December 23, 2024 @ 2:35 pm

I make it five, RfP: “H$^{1}$ow many instances of th$^{2}$e letter ‘h$^{3}$’ are th$^{4}$ere in th$^{5}$at sentence?”

RfP said,

December 23, 2024 @ 2:47 pm

You’re right, Philip!

And that would only seem to heighten the possibility that Grok mismatched the sentences, as I’d have assumed that it could conceivably categorize ‘H’ as a completely different letter. I was actually kind of disappointed that I had to rely on the capital letter.

Thanks for noticing that.

Daniel Barkalow said,

December 23, 2024 @ 5:53 pm

I think the problem with having an AI system use a program to accurately count letters or otherwise do math is that the LLM doesn't have a model of participants in a conversation performing tasks other than participating in the conversation. There's nothing in the training data that would indicate that a participant did something non-linguistic, got a result, and incorporated the result into their response. An LLM can respond appropriately to being invited to a party that someone in the LLM's position in the interaction would agree to go to, but it's not going to actually show up to the party. Similarly, it can talk like someone who used a program to count letters in a text, but it can't actually do that.

John Finkbiner said,

December 23, 2024 @ 7:33 pm

For what it’s worth, I counted 16 instances of h on my first try. I’m fairly sure I’m a human.

Yuval said,

December 24, 2024 @ 2:14 am

Yes, it's both. Tokenization and the LLM objective conspire here. And I've recently learned that calling external tools – like the simple program that would solve this in a single line of code – turns out to be substantially more expensive than just generating autoregressively from LM distributions and hoping for the best.

David Marjanović said,

December 24, 2024 @ 7:43 am

Bingo.

Andrew Usher said,

December 24, 2024 @ 12:05 pm

What is 'more expensive'? Regardless, if one way actually solves the problem and one doesn't, no programmer could agree the latter is a better solution, less 'expensive' or not.

k_over_hbarc at yahoo.com

Bill Benzon said,

December 25, 2024 @ 4:20 am

Think of LLMs as being illiterate, though in an odd sort of way. Illiterate cultures don't have number systems and so are likely to get things wrong when numbers are dumped on them and they're asked to use them. LLMs have never had to 'work' to 'understand' anything, but they've had all this 'knowledge' deposited in them by this strange procedure.

Rodger C said,

December 25, 2024 @ 11:07 am

Illiterate cultures don't have number systems

Huh?

Robot Therapist said,

December 25, 2024 @ 11:46 am

"Similarly, it can talk like someone who used a program to count letters in a text, but it can't actually do that."

And similarly it can talk like someone who is answering a question, but it can't actually do that?

David Marjanović said,

December 25, 2024 @ 3:58 pm

That's not remotely true.

Exactly.

Terry K. said,

December 26, 2024 @ 3:41 pm

I saw a short video about why AI can't spell "strawberry" (less than 3 minutes long): https://www.youtube.com/watch?v=4IL-7HeoF7k

The title got my attention because I assumed that AI can spell "strawberry"; after all, it uses the word with the correct spelling. Ah, but it doesn't have to spell words to use them, unlike me typing this comment. It deals in something more like chunks of meaning.

I suppose it's a bit like conscious and subconscious knowledge in humans. I can sing, and I know how to sing, but I don't know how to sing in a conscious, able-to-explain-it sense. The part of the AI system figuring out how to reply literally doesn't know how to spell, or how the words it replies to us with are spelled.

I like Bill Benzon's comment: "Think of LLMs as being illiterate, though in an odd sort of way." Or perhaps literate in an odd sort of way.

Regarding Bill Benzon's remark about illiterate cultures and number systems: of course illiterate cultures have number systems, but they don't have the written number systems that modern literate cultures have, which would affect the level and kind of numeracy. (Though I can't say I find that useful for understanding LLMs.)