Scientific prescriptivism: Garner Pullumizes?

The publisher's blurb for the fourth edition of Garner's Modern English Usage introduces a new feature:

With more than a thousand new entries and more than 2,300 word-frequency ratios, the magisterial fourth edition of this book — now renamed Garner's Modern English Usage (GMEU) — reflects usage lexicography at its finest. […]

The judgments here are backed up not just by a lifetime of study but also by an empirical grounding in the largest linguistic corpus ever available. In this fourth edition, Garner has made extensive use of corpus linguistics to include ratios of standard terms as compared against variants in modern print sources.

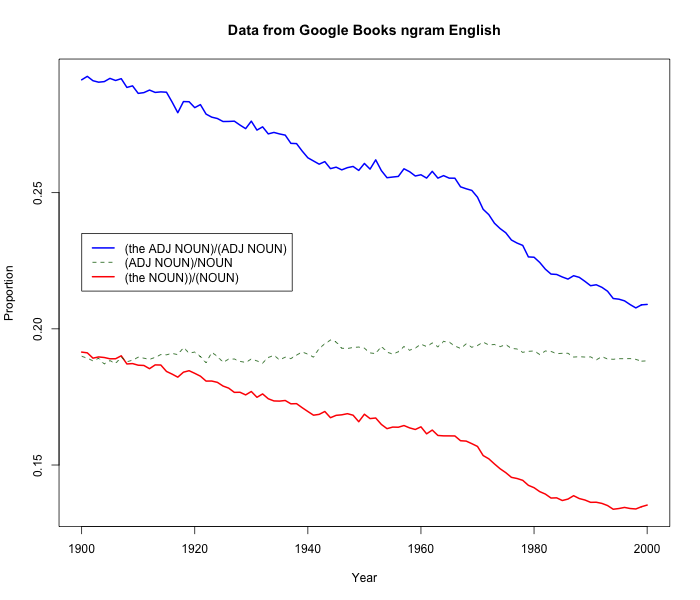

The largest linguistic corpus ever available, of course, is the Google Books ngram collection. And "word-frequency ratio" means, for example, the observation that in pluralizing corpus, corpora outnumbers corpuses by 69:1.
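For readers who want the arithmetic spelled out, here is a minimal sketch of how such a ratio could be computed from two raw counts. The function name `frequency_ratio` and the counts are hypothetical, chosen only so that the result matches the 69:1 figure above. They are not taken from the Google Books data or from Garner's own procedure, which the blurb does not describe.

```python
def frequency_ratio(standard_count: int, variant_count: int) -> str:
    """Express how often a standard form appears relative to a variant, as 'N:1'.

    Both arguments are raw occurrence counts from the same corpus and period.
    """
    if variant_count <= 0:
        raise ValueError("variant_count must be positive to form a ratio")
    ratio = standard_count / variant_count
    # Round to the nearest whole number, as in a "69:1" style of reporting.
    return f"{round(ratio)}:1"


# Hypothetical counts, for illustration only (not real ngram figures).
corpora_count = 6900
corpuses_count = 100
print(frequency_ratio(corpora_count, corpuses_count))
```

A ratio of this kind depends on which time span and which slice of the corpus the counts come from, so the same pair of words can yield different figures under different settings.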
