New models of speech timing?


There are many statistics used to characterize timing patterns in speech, at various scales, with applications in many areas. Among them:

- Intervals between phonetic events, by category and/or position and/or context;

- Overall measures of speaking rate (words per minute, syllables per minute), relative to total time or total speaking time (leaving out silences);

- Mean and standard deviation of speech segment and silence segment durations;

- …and so on…
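For concreteness, here is a minimal sketch of how a few of the measures above might be computed. It assumes a hypothetical input format that is not from any particular tool: a time-ordered list of speech segments, each given as `(start_sec, end_sec, n_words)`, plus the total recording duration. The function name and dictionary keys are likewise just illustrative.

```python
from statistics import mean, stdev

def rate_summary(speech_segments, total_duration):
    """Summarize timing for time-ordered (start_sec, end_sec, n_words) segments.

    The input format is an assumption made for this sketch.
    """
    durations = [end - start for start, end, _ in speech_segments]
    n_words = sum(n for _, _, n in speech_segments)
    speaking_time = sum(durations)
    # Silences are taken to be the gaps between consecutive speech segments.
    silences = [nxt[0] - cur[1]
                for cur, nxt in zip(speech_segments, speech_segments[1:])]
    return {
        # Words per minute relative to total time (silences included).
        "wpm_total": 60.0 * n_words / total_duration,
        # Words per minute relative to speaking time (silences left out).
        "wpm_speaking": 60.0 * n_words / speaking_time,
        "mean_speech": mean(durations),
        "sd_speech": stdev(durations) if len(durations) > 1 else 0.0,
        "mean_silence": mean(silences) if silences else 0.0,
    }

# Toy example: two 2-second phrases of 6 words each, in a 6-second recording.
summary = rate_summary([(0.0, 2.0, 6), (3.0, 5.0, 6)], total_duration=6.0)
print(summary)
```

Even in this toy case the two "words per minute" figures differ by half again, purely because of how silence is counted.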

There are many serious problems with these measures. Among the more obvious ones:

- The distributions are all far from "normal", and are often multi-modal;

- The timing patterns have important higher-order and contextual regularities;

- The timing patterns of segments/syllables/words and the timing patterns of phrases (i.e. speech/silence) and conversational turns are arguably (aspects of) the same thing at different time scales;

- Connections with patterns of many other types should also be included — phonetic and syllabic dynamics, pitch patterns, rhetorical and conversational structure, …

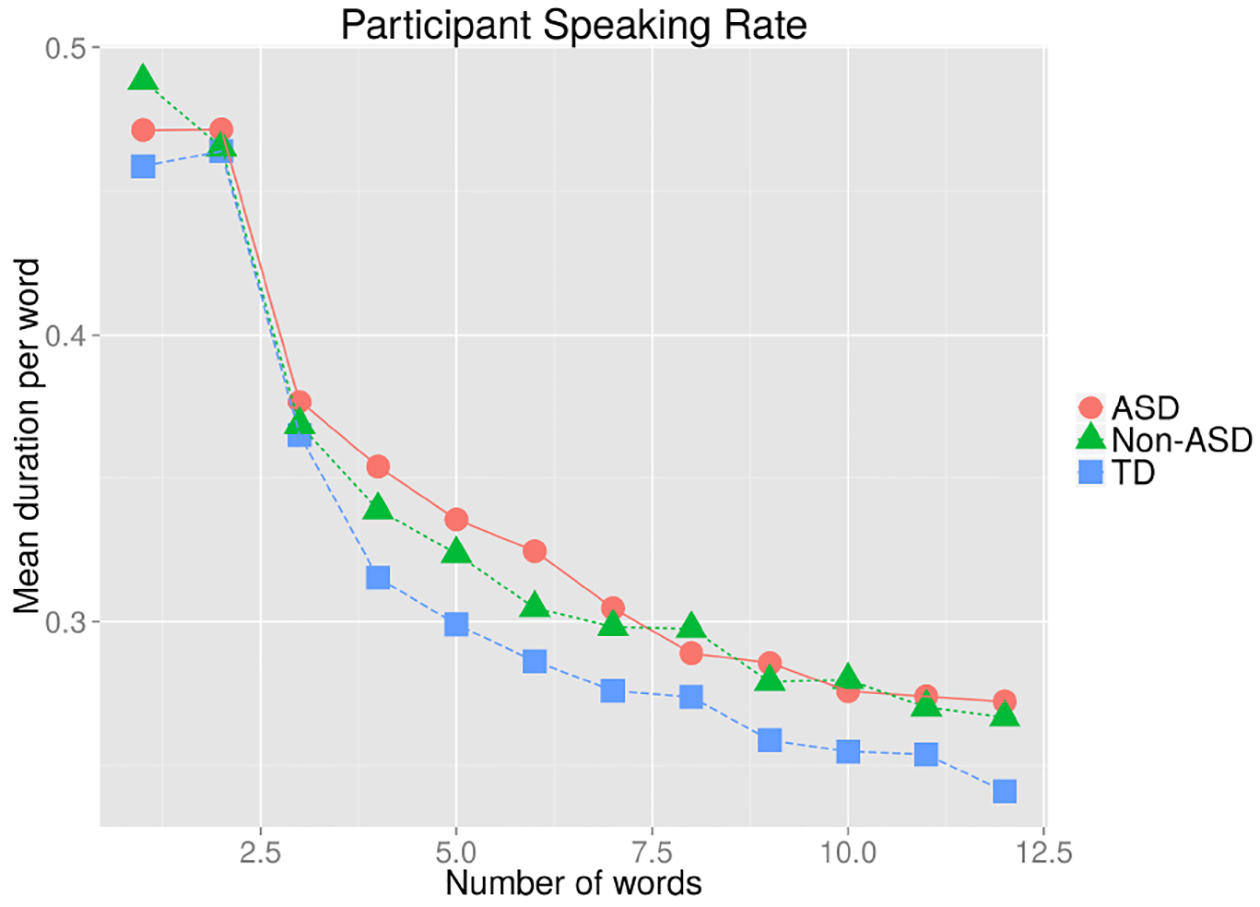

As a simple example of these interacting complexities, consider the way that mean word duration varies with the number of words per phrase, across different classes of speakers in a clinical application:

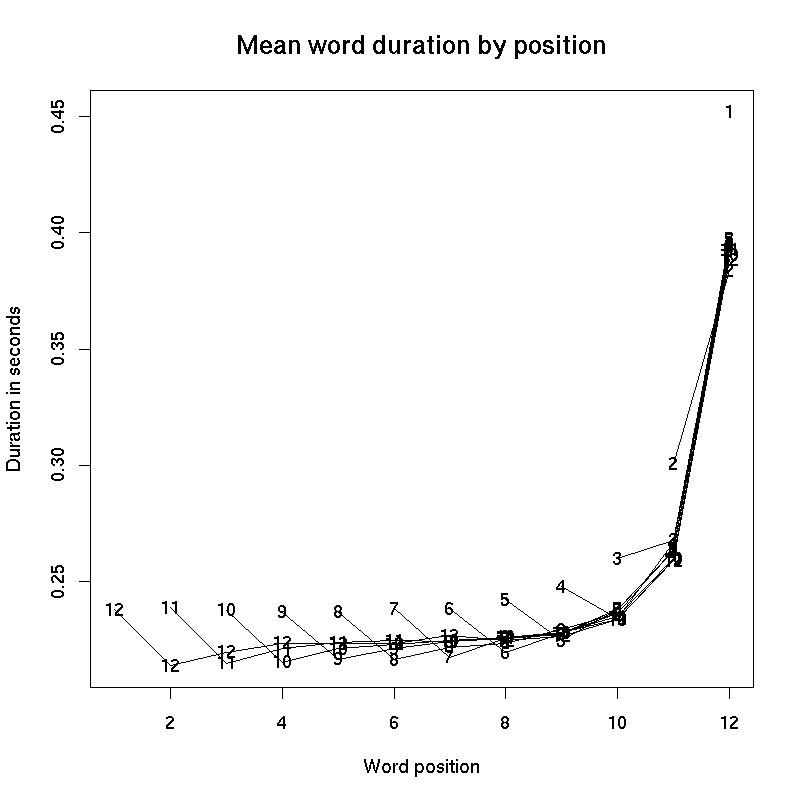

The shape of this relationship depends on the fact that the lengthening of phrase-final words, shown in the following graph (from another source), is amortized over different numbers of words:

This obviously means that measures like "words (or syllables or stress groups) per minute" are going to be affected by a speaker's phrasing, both in terms of the structure of the intended message and the speaker's pausing pattern in presenting it (which is affected by the process of composition, the creation of a motor plan, the retrieval and execution of the plan, etc.). And we need to take many other factors into account — like the choice of words, the precision of articulation, and so forth.
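The amortization effect can be illustrated with a deliberately idealized toy model. It assumes that every non-final word has the same baseline duration and that all of the lengthening falls on the phrase-final word. The numbers (`base`, `final_extra`) are made up for illustration and are not estimates from any dataset.

```python
def mean_word_duration(n_words, base=0.25, final_extra=0.15):
    """Mean word duration (sec) in a phrase of n_words words.

    Toy assumption: each word lasts `base` seconds, and the final word
    gets `final_extra` seconds of additional lengthening, so the phrase
    lasts n_words * base + final_extra and the mean is base + final_extra / n_words.
    """
    if n_words < 1:
        raise ValueError("a phrase needs at least one word")
    return base + final_extra / n_words

def words_per_minute(n_words, **kwargs):
    """Speaking rate implied by the toy model, ignoring pauses."""
    return 60.0 / mean_word_duration(n_words, **kwargs)

for n in (1, 2, 4, 8, 16):
    print(n, round(mean_word_duration(n), 3), round(words_per_minute(n), 1))
```

Under these assumptions, a speaker who produces shorter phrases will show a longer mean word duration and a lower words-per-minute figure, even if nothing about the articulation of individual words has changed.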

We could head down that road towards Models Of Everything — but that approach requires very large amounts of training data and computer time, and more important, it has problems of explainability. We want to graduate from simple-minded quantities like "mean word duration" or "words per minute" — but we still want a low-dimensional representation of timing patterns in a given recording or class of recordings, with dimensions that make sense in connection to work on how speech production and perception are influenced by factors from language and context to clinical diagnosis and tracking.

One place to start would be models of stochastic point processes, starting with traditional statistical models like those of Hawkes (1971), and moving into recent "neural" methods such as those of Mei and Eisner (2017) or Liang et al. (2023).
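As a reminder of what the simplest such model looks like, here is a sketch of a univariate self-exciting process with an exponential kernel, where the conditional intensity is λ(t) = μ + Σ α·exp(−β(t − tᵢ)) over past events tᵢ < t. The exponential kernel is one common textbook choice, assumed here for illustration. The function names and parameter values are hypothetical, and nothing here is fitted to speech data.

```python
import math

def hawkes_intensity(t, events, mu, alpha, beta):
    """Conditional intensity at time t, given earlier event times."""
    return mu + sum(alpha * math.exp(-beta * (t - ti))
                    for ti in events if ti < t)

def hawkes_loglik(events, T, mu, alpha, beta):
    """Log-likelihood of event times observed on the interval [0, T]."""
    # Sum of log intensities at the observed events...
    log_term = sum(math.log(hawkes_intensity(ti, events, mu, alpha, beta))
                   for ti in events)
    # ...minus the integral of the intensity over [0, T].
    compensator = mu * T + (alpha / beta) * sum(
        1.0 - math.exp(-beta * (T - ti)) for ti in events)
    return log_term - compensator

# Toy event times in seconds (e.g. syllable onsets), made up for illustration.
onsets = [0.10, 0.32, 0.55, 1.40, 1.62]
print(hawkes_loglik(onsets, T=2.0, mu=1.0, alpha=2.0, beta=5.0))
```

With `alpha=0` this reduces to a homogeneous Poisson process, which is a useful sanity check. The appeal for present purposes is that the three parameters (baseline rate, excitation strength, decay rate) form a low-dimensional description with a reasonably clear interpretation.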

What to model? A simple place to start would be to take syllables as events — via segment locations in forced alignment of transcripts, or via automatic recognition of broad phonetic classes, or via the pseudo-syllables derived from simple algorithms like this one.

More on this later, I hope…

Update — In a somewhat different direction: Sam Tilsen and Mark Tiede, "Looking within events: Examining internal temporal structure with local relative rate", J. Phonetics September 2023.

Abstract: This paper describes a method for quantifying temporally local variation in the relative rates of speech signals, based on warping curves obtained from dynamic time warping. Although the use of dynamic time warping for signal alignment is well established in speech science, its use to estimate local rate variation is quite rare. Here we introduce an extension of the local relative rate method that supports the quantification of variability in local relative rate, both within and across a set of events. We show how measures of temporal variation derived from this analysis method can be used to characterize the internal temporal structure of events. In order to achieve this, we first provide an overview of the standard dynamic time warping algorithm. We then introduce the local relative rate measure and describe our extensions, applying them to an articulatory and acoustic dataset of consonant-vowel-consonant syllable productions.
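For readers who have not met it, here is a minimal sketch of the standard dynamic time warping algorithm that the abstract refers to, applied to two one-dimensional sequences with absolute difference as the local cost. This is not the authors' code, and it does not implement their local relative rate measure. It only produces the warping path from which, per the abstract, such rate measures are derived.

```python
def dtw_path(x, y):
    """Standard DTW between 1-D sequences x and y.

    Returns (total_cost, path), where path is a list of (i, j) index
    pairs aligning x[i] with y[j], from (0, 0) to (len(x)-1, len(y)-1).
    """
    n, m = len(x), len(y)
    inf = float("inf")
    # cost[i][j] = best cumulative cost aligning x[:i] with y[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(x[i - 1] - y[j - 1])
            cost[i][j] = d + min(cost[i - 1][j - 1],  # advance both
                                 cost[i - 1][j],      # advance x only
                                 cost[i][j - 1])      # advance y only
    # Backtrack from the end to recover the warping path.
    i, j = n, m
    path = [(i - 1, j - 1)]
    while (i, j) != (1, 1):
        _, i, j = min((cost[i - 1][j - 1], i - 1, j - 1),
                      (cost[i - 1][j], i - 1, j),
                      (cost[i][j - 1], i, j - 1))
        path.append((i - 1, j - 1))
    path.reverse()
    return cost[n][m], path

total, path = dtw_path([0, 1, 2], [0, 1, 1, 2])
print(total, path)
```

Where the path advances in one sequence but not the other, the second sequence is locally "slower" than the first. The slope of the path is therefore a natural starting point for a local rate comparison.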

Some additional background from my own weblog notes:

"The shape of a spoken phrase", 4/12/2006

"The shape of a spoken phrase in Mandarin", 6/21/2014

"The shape of a spoken phrase in Spanish", 5/29/2015

"Political sound and silence", 2/8/2016

"Poetic sound and silence", 2/12/2016

"Some speech style dimensions", 6/27/2016

"Inaugural addresses: SAD", 2/5/2017

"The shape of a LibriVox phrase", 3/5/2017

"Trends in presidential speaking rate", 6/1/2017

"A prosodic difference", 6/2/2017

"Syllables", 2/24/2020

"English syllable detection", 2/26/2020

"The dynamics of talk maps", 9/30/2022

Some references:

Alan Hawkes, "Spectra of some self-exciting and mutually exciting point processes", Biometrika 1971.

Julia Parish-Morris et al., "Exploring Autism Spectrum Disorders Using HLT", NAACL-HLT 2016.

Hongyuan Mei and Jason M. Eisner, "The neural Hawkes process: A neurally self-modulating multivariate point process", NeurIPS 2017.

Chenhao Yang et al., "Transformer embeddings of irregularly spaced events and their participants", arxiv.org 2021.

Oleksandr Shchur et al., "Neural temporal point processes: A review", arxiv.org 2021.

Jiaming Liang et al., "RITA: Group Attention is All You Need for Timeseries Analytics", arxiv.org 2023.

Chris Button said,

September 11, 2023 @ 10:06 am

I remember someone challenging the idea that a few languages I was studying could have length distinctions of sonorant codas in addition to length distinctions of the vocalic nucleus (I was not the first person to note it). They wanted to put it all down to isochrony to claim that a long coda with a short nucleus and short coda with a long nucleus resulted from a desire for equal syllable length despite the fact that obstruent codas remained short regardless of the length of the nucleus. The historical explanation came more down to moraic weight across a syllable that was surfacing as length. A related question was how much that had to do with fortis/lenis aka tense/lax distinctions.

Mark Liberman said,

September 12, 2023 @ 1:46 pm

@Chris Button: What languages were these?

Chris Button said,

September 12, 2023 @ 3:33 pm

Theodore Stern first wrote about it in "A provisional sketch of Sizang (Siyin) Chin" (1963). I was then looking at Sizang and five other related northern Kuki-Chin languages of the Tibeto-Burman family, which supported his findings albeit with some very minor differences in specific details.