English syllable detection


In "Syllables" (2/24/2020), I showed that a very simple algorithm finds syllables surprisingly accurately, at least in good quality recordings like a soon-to-be-published corpus of Mandarin Chinese. Commenters asked about languages like Berber and Salish, which are very far from the simple onset+nucleus pattern typical of languages like Chinese, and even about English, which has more complex syllable onsets and codas as well as many patterns where listeners and speakers disagree (or are uncertain) about the syllable count.

I got a few examples of Berber and Salish, courtesy of Rachid Ridouane and Sally Thomason, and may report on them shortly. But it's easy to run the same program on a well-studied and easily-available English corpus, namely TIMIT, which contains 6300 sentences, 10 from each of 630 speakers. This is small by modern standards, but plenty large enough for test purposes. So for this morning's Breakfast Experiment™, I tested it.

Not to bury the lede, the overall results are pretty similar to the results for Chinese:

| N Syllables | N Misses | N False Alarms |
|---|---|---|
| 80856 | 8089 | 6349 |

Precision=0.93 Recall=0.90 F1=0.91

The gory details:

I changed one thing in the code — I added one line after the spectral calculation, because I realized that the GNU Octave specgram() function assigns time values to the beginnings of the analysis intervals rather than to the centers:

t = t + (NFFT/FS)/2; # + 0.08 = half of analysis window...
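To make the correction concrete, here is a minimal Python sketch (not the Octave code behind the experiment) of shifting frame times from window starts to window centers. The sampling rate matches TIMIT's 16 kHz audio. The window length `NFFT = 2560` is only inferred from the 0.08 s in the comment above, and the hop size `STEP` is purely hypothetical.

```python
# Sketch: move spectrogram frame times from window starts to window centers.
FS = 16000    # sampling rate in Hz (TIMIT audio is 16 kHz)
NFFT = 2560   # assumed window length in samples, so (NFFT / FS) / 2 == 0.08 s
STEP = 160    # hypothetical hop between frames in samples (0.01 s)


def frame_start_times(n_frames, step=STEP, fs=FS):
    """Time in seconds of the first sample of each analysis frame."""
    return [i * step / fs for i in range(n_frames)]


def center_times(start_times, nfft=NFFT, fs=FS):
    """Shift each frame time forward by half the analysis window."""
    half_window = (nfft / fs) / 2
    return [t + half_window for t in start_times]


starts = frame_start_times(3)
centers = center_times(starts)
for s, c in zip(starts, centers):
    print(f"start {s:.3f} s -> center {c:.3f} s")
```

Without the shift, every detected peak would be reported half a window early, which matters once peak times are compared against phone boundaries.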

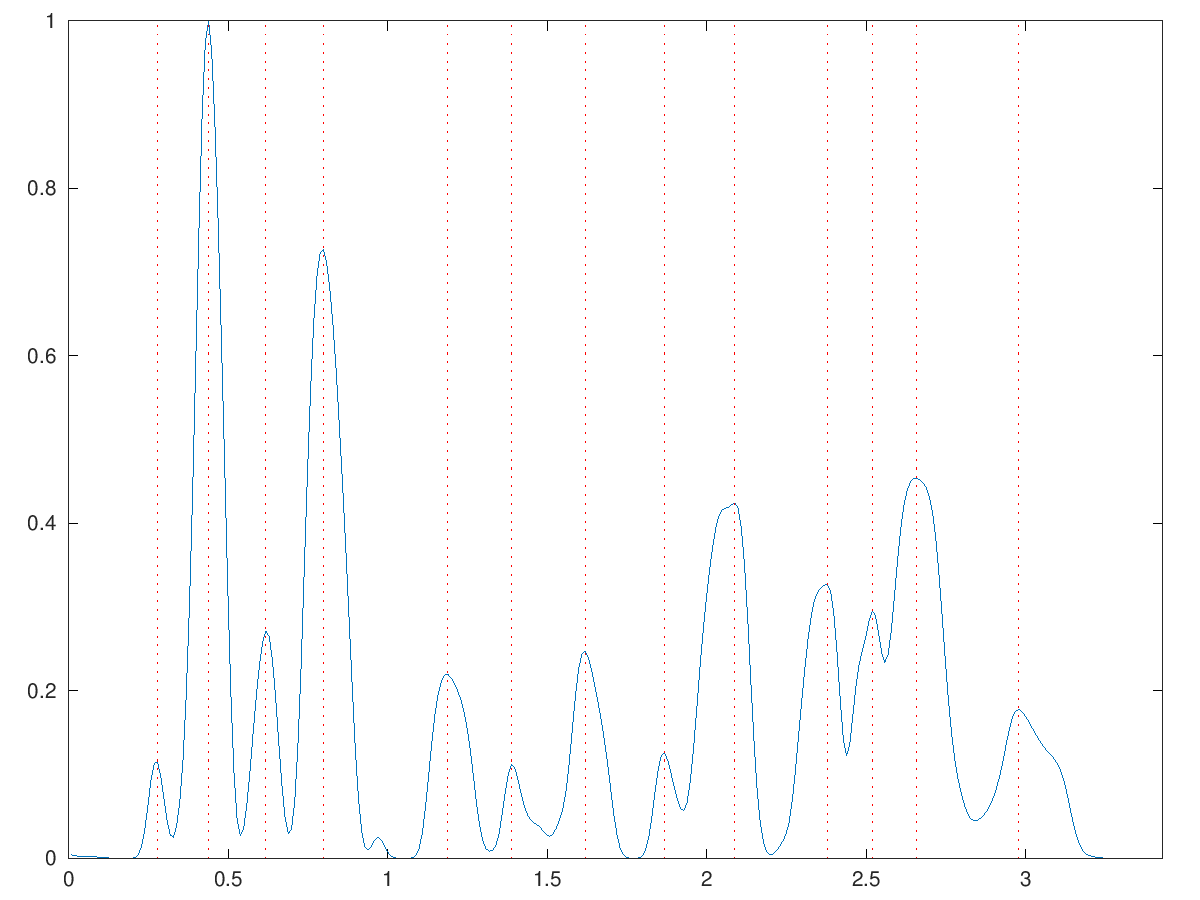

And parsing syllables in English is harder than in Chinese, especially when the transcripts lack stress marking (as they do in the TIMIT corpus). So I used the Cheap Hack™ illustrated below. Consider sentence FADG0_SA1, "She had your dark suit in greasy wash water all year":

for which the algorithm produces this sequence of detected amplitude peaks:

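| Time (s) | Amplitude |
|---|---|
| 0.278 | 0.115 |
| 0.438 | 1.000 |
| 0.618 | 0.271 |
| 0.798 | 0.727 |
| 1.188 | 0.220 |
| 1.388 | 0.112 |
| 1.618 | 0.247 |
| 1.868 | 0.125 |
| 2.088 | 0.424 |
| 2.378 | 0.327 |
| 2.518 | 0.295 |
| 2.658 | 0.454 |
| 2.978 | 0.178 |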

If we map those peak times onto TIMIT's phone-level transcription, we get this:

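| Start (s) | End (s) | Phone | Peak (s) |
|---|---|---|---|
| 0.000 | 0.133 | h# | |
| 0.133 | 0.251 | sh | |
| 0.251 | 0.302 | iy | 0.278 |
| 0.302 | 0.379 | hv | |
| 0.379 | 0.507 | ae | 0.438 |
| 0.507 | 0.527 | dcl | |
| 0.527 | 0.547 | d | |
| 0.547 | 0.582 | y | |
| 0.582 | 0.666 | axr | 0.618 |
| 0.666 | 0.703 | dcl | |
| 0.703 | 0.733 | d | |
| 0.733 | 0.839 | aa | 0.798 |
| 0.839 | 0.892 | r | |
| 0.892 | 0.953 | kcl | |
| 0.953 | 0.975 | k | |
| 0.975 | 1.133 | s | |
| 1.133 | 1.282 | ux | 1.188 |
| 1.282 | 1.317 | tcl | |
| 1.317 | 1.337 | t | |
| 1.337 | 1.387 | q | |
| 1.387 | 1.423 | ix | 1.388 |
| 1.423 | 1.504 | n | |
| 1.504 | 1.530 | gcl | |
| 1.530 | 1.569 | g | |
| 1.569 | 1.634 | r | 1.618 |
| 1.634 | 1.702 | iy | |
| 1.702 | 1.833 | s | |
| 1.833 | 1.877 | iy | 1.868 |
| 1.877 | 1.982 | w | |
| 1.982 | 2.158 | ao | 2.088 |
| 2.158 | 2.243 | sh | |
| 2.243 | 2.274 | epi | |
| 2.274 | 2.329 | w | |
| 2.329 | 2.413 | ao | 2.378 |
| 2.413 | 2.452 | dx | |
| 2.452 | 2.540 | axr | 2.518 |
| 2.540 | 2.581 | q | |
| 2.581 | 2.717 | ao | 2.658 |
| 2.717 | 2.777 | l | |
| 2.777 | 2.899 | y | |
| 2.899 | 3.014 | ih | 2.978 |
| 3.014 | 3.143 | axr | |
| 3.143 | 3.440 | h# | |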

That happens to place a peak somewhere within the time span of 12 of the 14 vowels. For the first syllable of "greasy", there's no peak in the [iy] vowel, but there is a peak in the onset consonant [r], which should count as part of the same syllable. (And of course it doesn't make sense anyway to precisely time-split these highly overlapped gestures…)
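Mapping peak times onto the phone-level transcription is a simple interval lookup. The Python sketch below is one way to do it, not the code used for the experiment. It assumes the phone intervals are sorted and contiguous, and it treats each interval as half-open, `[start, end)`, which is my choice rather than anything stated in the post. The data are the first few rows of the listing above.

```python
from bisect import bisect_right

# First rows of the phone-level transcription: (start, end, label).
PHONES = [
    (0.000, 0.133, "h#"),
    (0.133, 0.251, "sh"),
    (0.251, 0.302, "iy"),
    (0.302, 0.379, "hv"),
    (0.379, 0.507, "ae"),
    (0.507, 0.527, "dcl"),
]
PEAKS = [0.278, 0.438]  # first two detected peak times, in seconds


def assign_peaks(phones, peak_times):
    """Return {phone index: [peak times]} for sorted phone intervals."""
    starts = [start for start, _end, _label in phones]
    hits = {}
    for t in peak_times:
        i = bisect_right(starts, t) - 1  # last interval starting at or before t
        if i >= 0 and t < phones[i][1]:  # assumed half-open interval [start, end)
            hits.setdefault(i, []).append(t)
    return hits


hits = assign_peaks(PHONES, PEAKS)
for i, (start, end, label) in enumerate(PHONES):
    peaks = " ".join(f"{t:.3f}" for t in hits.get(i, []))
    print(f"{start:.3f} {end:.3f} {label} {peaks}".rstrip())
```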

So the obvious Cheap Hack™ is to establish pseudo-syllables for scoring purposes, by dividing up the time (if any) between the end of a vowel and the start of the next, giving half of the difference to each vowel's pseudo-syllable. For FADG0_SA1, this gives us

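| Start (s) | End (s) | Vowel | Peak (s) |
|---|---|---|---|
| 0.251 | 0.341 | iy | 0.278 |
| 0.341 | 0.545 | ae | 0.438 |
| 0.545 | 0.699 | axr | 0.618 |
| 0.699 | 0.986 | aa | 0.798 |
| 0.986 | 1.335 | ux | 1.188 |
| 1.335 | 1.529 | ix | 1.388 |
| 1.529 | 1.768 | iy | 1.618 |
| 1.768 | 1.930 | iy | 1.868 |
| 1.930 | 2.243 | ao | 2.088 |
| 2.243 | 2.432 | ao | 2.378 |
| 2.432 | 2.561 | axr | 2.518 |
| 2.561 | 2.808 | ao | 2.658 |
| 2.808 | 3.014 | ih | 2.978 |
| 3.014 | 3.143 | axr | MISSING |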

which seems fair. The algorithm is still missing the second vowel in TIMIT's transcription of the final word "year", namely [ y ih axr ].
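The pseudo-syllable construction described above (split the gap between consecutive vowels in half) can be sketched in Python as follows. This is an illustration under assumptions, not the code used for the experiment. In particular, the `VOWELS` set is my guess at which TIMIT labels count as nuclei, and it leaves out the syllabic consonants (`el`, `em`, `en`, `eng`). The phone rows are copied from the transcription above, up to the vowel of "suit".

```python
# Assumed set of TIMIT vowel labels treated as syllable nuclei.
VOWELS = {
    "iy", "ih", "eh", "ey", "ae", "aa", "aw", "ay", "ah", "ao",
    "oy", "ow", "uh", "uw", "ux", "er", "ax", "ix", "axr", "ax-h",
}

# (start, end, label) rows for "She had your dark su-".
PHONES = [
    (0.000, 0.133, "h#"), (0.133, 0.251, "sh"), (0.251, 0.302, "iy"),
    (0.302, 0.379, "hv"), (0.379, 0.507, "ae"), (0.507, 0.527, "dcl"),
    (0.527, 0.547, "d"), (0.547, 0.582, "y"), (0.582, 0.666, "axr"),
    (0.666, 0.703, "dcl"), (0.703, 0.733, "d"), (0.733, 0.839, "aa"),
    (0.839, 0.892, "r"), (0.892, 0.953, "kcl"), (0.953, 0.975, "k"),
    (0.975, 1.133, "s"), (1.133, 1.282, "ux"),
]


def pseudo_syllables(phones, vowels=VOWELS):
    """Give each vowel half of the gap to its neighboring vowels."""
    nuclei = [(s, e, p) for s, e, p in phones if p in vowels]
    out = []
    for k, (start, end, label) in enumerate(nuclei):
        # The first vowel keeps its own start; the last keeps its own end.
        left = start if k == 0 else (nuclei[k - 1][1] + start) / 2
        right = end if k == len(nuclei) - 1 else (end + nuclei[k + 1][0]) / 2
        out.append((left, right, label))
    return out


syllables = pseudo_syllables(PHONES)
for start, end, label in syllables:
    print(f"{start:.3f} {end:.3f} {label}")
```

The last row of this excerpt ends at the vowel's own end only because the excerpt stops there. On the full sentence, that boundary would fall halfway to the next vowel. Exact midpoints may also round differently from the listing in the last digit.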

Since SA1 is one of the "calibration sentences" that every TIMIT speaker read, we can ask how often the algorithm joined or split the two vowels that TIMIT assigns to year — and the answer is 469 joins, 171 splits, no extras = 74% joined.

Which roughly corresponds to my degree of uncertainty about whether year is one or two syllables :-)…
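For readers who want to reproduce this kind of tally, here is a hedged Python sketch of scoring one sentence against its pseudo-syllables. The post does not spell out its exact scoring rule, so the rule here is an assumption: a pseudo-syllable containing at least one peak is a hit, one with no peak is a miss, and any extra peak (a second peak in the same pseudo-syllable, or a peak outside all of them) is a false alarm. The data are the pseudo-syllables and peak times for FADG0_SA1 listed above.

```python
# Pseudo-syllables for FADG0_SA1: (start, end, vowel), from the listing above.
SYLLABLES = [
    (0.251, 0.341, "iy"), (0.341, 0.545, "ae"), (0.545, 0.699, "axr"),
    (0.699, 0.986, "aa"), (0.986, 1.335, "ux"), (1.335, 1.529, "ix"),
    (1.529, 1.768, "iy"), (1.768, 1.930, "iy"), (1.930, 2.243, "ao"),
    (2.243, 2.432, "ao"), (2.432, 2.561, "axr"), (2.561, 2.808, "ao"),
    (2.808, 3.014, "ih"), (3.014, 3.143, "axr"),
]
PEAKS = [0.278, 0.438, 0.618, 0.798, 1.188, 1.388, 1.618,
         1.868, 2.088, 2.378, 2.518, 2.658, 2.978]


def score(syllables, peaks):
    """Count hits, misses and false alarms under the assumed rule."""
    counts = [0] * len(syllables)
    outside = 0
    for t in peaks:
        for i, (start, end, _vowel) in enumerate(syllables):
            if start <= t < end:
                counts[i] += 1
                break
        else:
            outside += 1  # peak fell in no pseudo-syllable
    hits = sum(1 for c in counts if c > 0)
    misses = len(syllables) - hits
    false_alarms = outside + sum(c - 1 for c in counts if c > 1)
    return hits, misses, false_alarms


def precision_recall_f1(hits, misses, false_alarms):
    """Standard definitions, guarding against empty denominators."""
    precision = hits / (hits + false_alarms) if hits + false_alarms else 0.0
    recall = hits / (hits + misses) if hits + misses else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


hits, misses, false_alarms = score(SYLLABLES, PEAKS)
precision, recall, f1 = precision_recall_f1(hits, misses, false_alarms)
print(hits, misses, false_alarms)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Under this rule the example sentence has 13 hits, one miss (the final [axr] of "year") and no false alarms.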

As I wrote in the earlier post:

> [I]t would be easy to tune the algorithm to do better, and a more sophisticated modern deep-learning approach would doubtless do much better still. But the point is that the basic correspondence between phonological syllables and amplitude peaks in decent-quality speech is surprisingly good, and using this correspondence to focus the attention of (machine or human) learners is probably a good idea.

Michael said,

February 27, 2020 @ 12:15 pm

I'll understand if this comment is deleted as irrelevant, but funny enough the reason I came over here today was to see if LL had taken a stand on the question of "bury the lede" v. "bury the lead." The NYT editorial staff recently came out in favor of "lead," but I see that Mark, at least, is sticking to the "lede" version.

Andrew Usher said,

February 28, 2020 @ 2:22 am

And outside journalism, only 'lead' can be correct. Not that this is a news article anyway – the statement of the main result in the third paragraph is fine.

'Year' is ambiguous in that recording, but I think in rhotic speech it should be called one syllable – sear and seer are not the same.

k_over_hbarc at yahoo.com

unekdoud said,

February 28, 2020 @ 4:17 am

To me there's a concern that the Cheap Hack™ reduces the problem to identifying vowels (using human brains) and that the syllable counter is only performing so well by being sensitive to vowels (using silicon processors).

Assuming that syllables aren't just vowel-like sounds surrounded by vowel-unlike sounds, how do we know that the Cheap Hack is valid?

Michael Watts said,

February 28, 2020 @ 7:19 am

Test it on Japanese or another language where vowel length matters. It's pretty frequent in Japanese for one vowel to run into the same vowel, so there's no change in pronunciation as one syllable runs into the next.

Michael Watts said,

February 28, 2020 @ 7:21 am

(Mandarin Chinese offers some examples in that regard too, like the word 意义 yiyi /i.i/ "meaning". The syllable flow strikes me as a little less seamless than is the case in Japanese, but it'd still be interesting to look at.)

Philip Anderson said,

February 29, 2020 @ 6:09 am

Outside American journalism …

(maybe Canadian too, but it’s not ‘lede’ in Britain). I’ve seen that spelling dated to either the 70s or 50s, so not that traditional.

Philip Taylor said,

February 29, 2020 @ 7:26 am

One citation from the very early fifties, according to the OED, then late seventies onwards :