This is the 4th time I've gotten Jack and his beanstalk


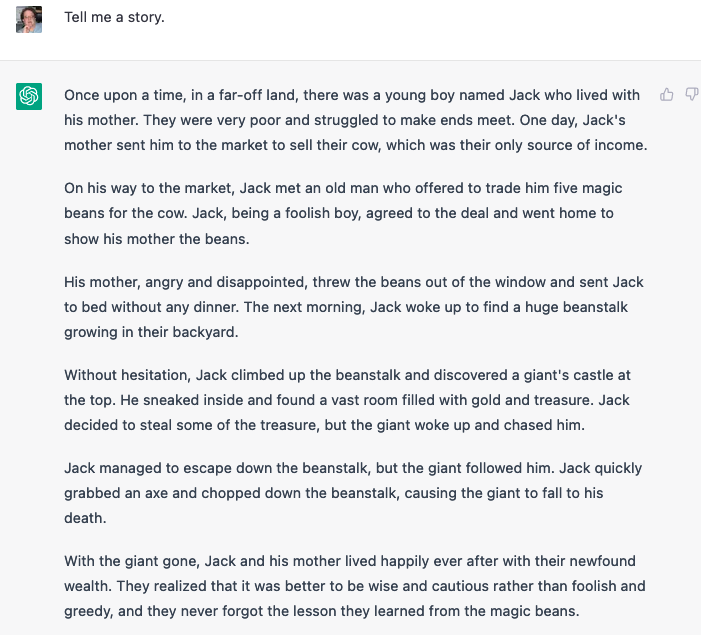

Bill Benzon shares the response he got from ChatGPT to the prompt, "Tell me a story."

Here's the response:

Once upon a time, in a far-off land, there was a young boy named Jack who lived with his mother. They were very poor and struggled to make ends meet. One day, Jack's mother sent him to the market to sell their cow, which was their only source of income.

On his way to the market, Jack met an old man who offered to trade him five magic beans for the cow. Jack, being a foolish boy, agreed to the deal and went home to show his mother the beans.

His mother, angry and disappointed, threw the beans out of the window and sent Jack to bed without any dinner. The next morning, Jack woke up to find a huge beanstalk growing in their backyard.

Without hesitation, Jack climbed up the beanstalk and discovered a giant's castle at the top. He sneaked inside and found a vast room filled with gold and treasure. Jack decided to steal some of the treasure, but the giant woke up and chased him.

Jack managed to escape down the beanstalk, but the giant followed him. Jack quickly grabbed an axe and chopped down the beanstalk, causing the giant to fall to his death.

With the giant gone, Jack and his mother lived happily ever after with their newfound wealth. They realized that it was better to be wise and cautious rather than foolish and greedy, and they never forgot the lesson they learned from the magic beans.

VHM:

Hah!

Interesting and consistent, and it tells us something significant about ChatGPT.

BB:

Yes, it does, Victor. Just what, I’m not sure. I’m in the process of looking for a statistician to help me find out.

VHM:

1. Did you ask the question just that way each time?

BB:

Yes. Always. I’ve asked exactly that question I don’t know how many times, and in different sessions. I suppose I could count them (I’m keeping Word files of all my interactions with ChatGPT). Let’s say it’s more than 30 but less than 100.

VHM:

2. Did the stories differ much in detail, wording, and length?

BB:

I haven’t read them all in detail. But wording is different, and incidents too. But always a beanstalk and always a giant, and yes, our protagonist is always named Jack. The length has increased, certainly with the Feb 13 version, which is the one currently up. But perhaps before. The earlier ones are a bit shorter than the one I sent you.

I should add that I often get stories involving dragons, which are a prominent motif in Western tales. I’d guess they’d be more prominent in Chinese tales, but I certainly don’t know.

Selected readings

- "ChatGPT writes VHM" (2/28/23)

- "ChatGPT: Theme and Variations" (2/21/23)

- "GLM-130B: An Open Bilingual Pre-Trained Model" (1/25/2023)

- "ChatGPT writes Haiku" (12/21/22)

- "Translation and analysis" (9/13/04)

- "Welcome to China" (3/10/14)

- "Alexa down, ChatGPT up?" (12/8/22)

- "Detecting LLM-created essays" (12/20/22)

- "Artificial Intelligence in Language Education: with a note on GPT-3" (1/4/23)

- "DeepL Translator" (2/16/23)

- "Uh-oh! DeepL in the classroom; it's already here" (2/22/23)

DJL said,

March 16, 2023 @ 4:04 am

This may have something to do with the number of background "prefixes" the chatbot has at its disposal to coax the underlying language model to provide appropriate answers to a user's questions, with one such prefix involving a family of templates of what a conversation looks like (and how a given conversation proceeds; the underlying language model knows nothing about what a conversation is). It may well be that given a general prompt such as 'tell me a story' the chatbot has a prefix (or template) that activates a specific number of possible stories to use from (and variations of such stories).

Bill Benzon said,

March 16, 2023 @ 8:24 am

@DJL: Interesting.

At this point I've got over 300 stories I've elicited from ChatGPT in various ways. I've used a variety of prompts, but I've used the following four prompts repeatedly:

Tell me a story.

Tell me a story about a hero.

Tell me a realistic story.

Tell me a true story.

The beanstalk only shows up in response to the first. The first two almost always elicit fairy-tale kinds of stories, with witches and dragons and peasants and such. The first always elicits stories that are physically possible. And the last always elicits true stories, at least as far as I've checked. If I recognize the protagonist that's about as far as my checking goes. If I don't recognize the protagonist, I check Wikipedia or do a web search, but I tend not to read the returned information in any detail. It's possible that if I pushed for more detail, I could push ChatGPT into fabricating stuff, but I've not tried.

Bill Benzon said,

March 16, 2023 @ 8:51 am

Whoops! The THIRD always elicits stories that are physically possible.

Yes, it does appear that ChatGPT "has a prefix (or template) that activates a specific number of possible stories to use from (and variations of such stories)." But where did it come from? And how does it keep on track when generating a story token by token? Note that it visits every one of its 175 billion parameters each time it generates a token. If we think of ChatGPT as a virtual machine whose state is specified by 175B variables, then emitting a token where we can see it is almost a side-effect of the process of evolving a trajectory through its state space.

When generating true stories, there was a run where it favored the story of Sully Sullenberger, who landed his passenger plane in the Hudson River, and Malala Yousafzai, a Pakistani education activist.

I have a paper in which I investigate the structure of hero stories using a specific procedure ultimately derived from what Lévi-Strauss did with myths in The Raw and the Cooked. I give ChatGPT a prompt consisting of two things: 1) an existing story and 2) instructions to produce another story like it except for one change, which I specify. That change is, in effect, a way of triggering or specifying those “transformations” that Lévi-Strauss wrote about. What interests me is the ensemble of things that change along with the change I have specified. It varies quite a bit depending, it seems, on the semantic distance between the protagonist or antagonist in the original story and the one I specify for the new story.

Victor Mair said,

March 16, 2023 @ 9:06 am

AI with Chinese characteristics.

China’s AI Chatbots Clam Up When Asked About Xi Jinping’s Leadership

‘The question couldn’t pass a safety review’; American politics are off limits, too

By Shen Lu

Updated March 15, 2023 10:51 pm ET

======

For companies trying to ride the ChatGPT wave, there is an added layer of headaches if their chatbots are in China: how to weed out any talk of politics.

Since its release in November, the artificial-intelligence chatbot developed by San Francisco-based OpenAI has generated great excitement but has also raised concern about misinformation or disturbing AI-produced responses.

This week, the Chinese search-engine company Baidu Inc. is set to release Ernie Bot, its answer to ChatGPT, driving attention to China’s homegrown chatbots, as well as their capability to ensure politically kosher dialogues.

….

George said,

March 16, 2023 @ 11:13 am

What I'd be interested in knowing is whether the moral of the story is always the same, as it seems to me that – by living happily ever after with their newfound wealth – Jack and his mother learned a very different lesson from the one given in the final paragraph.

Bill Benzon said,

March 16, 2023 @ 12:18 pm

Here's a somewhat different version of the Jack/beanstalk story, without an explicit moral:

Once upon a time, in a small village, there lived a kind and hardworking farmer named Jack. He lived a simple life with his wife and two children, working tirelessly on their farm to provide for their family. One day, while working in the fields, Jack stumbled upon a magical beanstalk that reached up into the sky.

Curious, Jack climbed the beanstalk and discovered a kingdom in the clouds, ruled by a giant. The giant had a golden harp that played beautiful music all by itself. Jack knew his family would love to hear the music, so he bravely sneaked into the giant's castle and stole the harp.

On his way back down the beanstalk, the giant woke up and chased after him. Jack made it safely back to the ground and gave the harp to his family, who were overjoyed. But the giant continued to terrorize the village, demanding the return of his beloved harp.

Determined to protect his family and his village, Jack gathered his courage and climbed the beanstalk once more, facing the giant in a fierce battle. In the end, Jack emerged victorious and the giant was defeated. The village was saved, and Jack and his family lived happily ever after with the golden harp bringing music and joy to their lives.

The end.

* * * * *

And another:

Once upon a time, there was a small village surrounded by a dense forest. In this village lived a poor woodcutter named Jack who lived with his wife and two children. Jack worked hard every day to earn a living for his family, but he still struggled to make ends meet.

One day, while Jack was cutting wood in the forest, he came across a magical beanstalk that reached up into the clouds. He took the beanstalk home to show his wife, who was skeptical of its magical powers. But Jack was determined to climb the beanstalk to see what was at the top.

So, he climbed and climbed, higher and higher, until he reached the top of the beanstalk where he found a magnificent castle. The castle belonged to a giant who was not happy to see Jack. However, Jack was a clever man and was able to outwit the giant and steal a large sack of gold. He climbed back down the beanstalk and used the gold to provide a comfortable life for his family.

The giant, meanwhile, was furious when he realized what had happened and decided to come after Jack. Jack knew he needed to protect his family, so he chopped down the beanstalk, causing the giant to fall to his death.

From that day forward, Jack and his family lived a happy and wealthy life, and they never forgot the lesson that sometimes, bravery and cleverness can lead to a better future. The end.

Tim Rowe said,

March 16, 2023 @ 1:25 pm

I always thought the moral of Jack and the Beanstalk was if hard work is getting you nowhere, find out who is hoarding all the wealth, overthrow them, and claim it back.

I doubt Baidu will draw that moral.

Jonathan Smith said,

March 16, 2023 @ 2:26 pm

"He took the beanstalk home to show his wife" new wrinkle

Bill Benzon said,

March 16, 2023 @ 7:03 pm

Here's the problem I'm having: We're told that LLMs, like ChatGPT, generate one token at a time (where a token is, roughly, a word). What does that mean? At the very least it's a statement about the capacity of the output channel, that it can only handle one token at a time. The same is true for humans. We can only speak or write one word at a time. But we generally have some 'plan' in mind that takes us to the end of the utterance or sentence, often much more, taking us to the end, for example, of the story we're telling about the brown bear that stole our food on the camping trip last November. In the case of LLMs, however, we are to believe that they have no such plans.

That, I'm afraid, does not compute, not for me. Those Jack/beanstalk stories are not the stuff of great or even merely interesting literature. But they are reasonably well-formed. I don't see how that is possible if all ChatGPT is doing is picking tokens more or less at random out of a hat. Why do all these stories, not just the Jack stories, but all of the stories prompted by either "Tell me a story" or "Tell me a story about a hero," why do all of those stories have a happy ending, often enough with a moral attached to it? Why isn't there a single sad story in the bunch (between, say, 100 and 200 stories by now)? If I ask for a sad story, I'll get one. Otherwise I won't. If I ask for a story about a criminal, it'll give me one. But not spontaneously.

It seems to me that once it embarks on telling a story in response to one of those two prompts it more or less has embarked on a certain kind of trajectory through its state space that will end up in a happy ending. When and how is that determination made and how is it maintained?

Consider the first sentence of that Jack story: "Once upon a time, in a far-off land, there was a young boy named Jack who lived with his mother." For the sake of argument let us assume, as DJL has suggested, that the beginning of that sentence comes from a template that's been added to the underlying LLM to make it user-friendly. So the first token the LLM has to choose comes after the formulaic opening: "Once upon a time, in a far-off land." However, the token generation process will take those phrases into account when it generates the next token. Since those phrases are characteristic of a certain kind of story, and nothing else, those phrases exert a strong influence on how this trajectory is going to unfold.

To generate the next token, ChatGPT takes those existing tokens into account and then ripples through all 175 billion parameters before generating the next token. It does that for each token. When the rippling is done, it's presented with a probability distribution over possible next tokens and picks one: "Once upon a time, in a far-off land, there"

It does it again: "Once upon a time, in a far-off land, there was"

And again: "Once upon a time, in a far-off land, there was a"

And again: "Once upon a time, in a far-off land, there was a young"

And so forth until "Jack" has entered the stream and finally "his mother". At that point ChatGPT has to pick another token. Note that it treats periods as tokens. Given the nature of English syntax, what are the likely possibilities for the next token? There aren't many. A period is one. However "and" or "but" are also possibilities, as are a few other words. This is not a wide open choice. Once the period has been selected and entered into the stream, the next token will begin a new sentence. The range of choices will open up, but once "poor" enters the stream some (semantic) constraints set in. By the end of that paragraph…just what?

It seems to me that something pretty sophisticated happens when the LLM polls those 175 B parameter weights. That's what's "guiding" the trajectory to a proper story-ending. While there is a certain looseness about the trajectory, the range of options at any given point is quite limited.
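The loop described above can be sketched in a few lines of Python. This is only a toy under loud assumptions: the table `TOY_NEXT`, the `<end>` marker, and the function names are invented for illustration, and the toy looks only at the last token, whereas a real LLM conditions on the whole context through its learned weights. What it does show is the shape of the process: get a distribution, pick a token, append it, repeat, with only a few options available right after "his mother".

```python
import random

# Hypothetical toy "model": maps the last token of the context to a
# probability distribution over next tokens. All numbers are made up.
TOY_NEXT = {
    "mother": {".": 0.7, "and": 0.2, "but": 0.1},
    ".": {"They": 0.6, "One": 0.4},
    "They": {"were": 1.0},
    "were": {"very": 0.5, "poor": 0.5},
    "very": {"poor": 1.0},
    "poor": {"<end>": 1.0},
    "One": {"day": 1.0},
    "day": {"<end>": 1.0},
    "and": {"<end>": 1.0},
    "but": {"<end>": 1.0},
}


def next_token_distribution(context):
    """Return the (toy) distribution over the next token given the context."""
    return TOY_NEXT.get(context[-1], {"<end>": 1.0})


def generate(context, rng, max_tokens=20):
    """Sample one token at a time, feeding each choice back into the context."""
    tokens = list(context)
    for _ in range(max_tokens):
        dist = next_token_distribution(tokens)
        choices, weights = zip(*dist.items())
        token = rng.choices(choices, weights=weights, k=1)[0]
        if token == "<end>":
            break
        tokens.append(token)
    return tokens


story = generate(["lived", "with", "his", "mother"], random.Random(0))
print(" ".join(story))
```

Even in this toy, nothing outside the table and the growing context steers the output; whatever "plan" there is lives in the distributions themselves.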

Anyhow, that's what I'm exploring in the paper I linked above: ChatGPT tells stories, and a note about reverse engineering. Here's the abstract:

Chester Draws said,

March 16, 2023 @ 10:07 pm

When and how is that determination made and how is it maintained?

Surely that determination is made by us humans, who write fairy stories that are overwhelmingly of a particular trajectory, with a happy ending.

If in over 99% of the Jack and beanstalk stories that CGPT "reads" the hero is called Jack and kills the Giant and lives happily ever after, then it is hardly going to go out on a limb and call the protagonist Jason and have him unhappy and killed by a falling ship part.

It's not thinking, it's reassembling pieces from a corpus. Hence the utter lack of true originality.

Bill Benzon said,

March 17, 2023 @ 6:28 am

@Chester Draws: Ultimately, yes.

But that's not the question I'm asking. The question I'm asking is a technical one about the internal operations of ChatGPT. The standard line is that it generates output one token at a time without any "global plan about what’s going to happen," to quote from Stephen Wolfram (in this video). I'm arguing that there IS something "like" a global plan, and that it's encoded in those parameter weights, which are polled each time a token is to be emitted.

DJL said,

March 17, 2023 @ 9:27 am

I would imagine that the answer to that question has to do with the scripts, filters, and "prompt engineering" techniques, in particular chain-of-thought prompting, that LLMs can be augmented with at the interface between the LLM itself and the dialogue management system (or chatbot) users access to query LLMs. But seeing something like a global plan in the parameters of an LLM seems entirely unwarranted. If anything, this would reflect a failure to distinguish between the actual LLM – a neural network that takes a string of words as input and returns the most likely word/token as continuation as output – and what sits on top of it – the chatbot that translates user queries into something the LLM can actually understand and operate upon. Once upon a time some language models included story planning scripts of various kinds, and I suppose something along those lines is operative in ChatGPT too.

Rodger C said,

March 17, 2023 @ 11:35 am

Here's a linguistic question of a different sort: is "chain of thought" (which I haven't seen before) a variant of "train of thought" among people who pronounce them the same?

Chester Draws said,

March 17, 2023 @ 3:38 pm

The standard line is that it generates output one token at a time without any "global plan about what’s going to happen,"

I find it extremely difficult to believe that this is what is happening. So I agree, there must be some sort of "this is how a story works" on top. You go to disputed topics and it becomes obvious.

For example, if I ask "give me some virtues of mao tse-tung" it proceeds to give me a numbered list. Therefore it must "know" that it is going to have more than one, or it would not bother to put a "1" at the start.

If I ask it "give me some virtues of pol pot" it declines to.

If I ask it "give me some virtues of donald trump" it does so, but almost as if embarrassed. What it doesn't do is give a numbered list. How does it find a different path for Trump over Mao? It clearly has a plan about how it deals with different, quite similar, situations.

It is not hard to get it to outright lie. Merely ask for some evidence or reference to a contentious situation and it will often refuse to provide them, even though they most certainly exist. It must have instructions to decline to "find" various things.

Ask it "what are the dangers of vaccines" and it gives honest answers, including rare deaths. Ask it "what are the dangers of the pfizer covid vaccine" and suddenly death is no longer a danger, even though we know a few people died as a result of taking it.

There is clearly something going on, where some political issues are instructed to have no good things, some are allowed some but guarded, some get them straight out.

Right or wrong, it is being directed from the start, not finding its own way, one block at a time.

Bill Benzon said,

March 17, 2023 @ 3:51 pm

To DJL:

What you say doesn't make sense. First, "…seeing something like a global plan in the parameters of an LLM seems entirely unwarranted." How do you know this? Do you actually know what those parameters are doing? Somehow they guided the device to successfully predict next words during training. It seems to me that that would require that they learn how stories are structured. Why can't they deploy that "knowledge" during generation?

You say:

In the first place, I'm not talking about the prompt and how it's translated for use by the underlying LLM. I'm talking about how the underlying LLM generates the string of tokens that is the story.

Are you saying that it's the chatbot that writes the stories, and not the underlying LLM? If so, what's your evidence for this and why then do we need the underlying LLM?

You conclude:

Where did those scripts come from? Did the LLM induce them from its training corpus? If so, then you would seem to agree with me, as that's all I'm arguing. Those "scripts" would be a "global plan."

To Rodger C:

"Chain of thought" is a term of art in the LLM world and refers to a way of constructing prompts. It's unrelated to the notion of train of thought.

Bill Benzon said,

March 17, 2023 @ 3:58 pm

To Chester Draws:

Yes. OpenAI, at great expense, has had the LLM trained to deal with a wide range of topics. This is after and "on top of" the training given to the underlying LLM. I have a long post, ChatGPT: The Saga of Jack the Criminal, where ChatGPT refuses to tell stories about certain crimes.

Bill Benzon said,

March 17, 2023 @ 4:01 pm

Whoops! It was trained NOT to deal with a wide range of topics – various identity (woke) issues, how to commit dangerous mischief (make a bomb and the like), and so forth.

DJL said,

March 18, 2023 @ 9:25 am

What doesn't make any sense is to keep ascribing understanding or planning to a language model, which is nothing but a neural network taking a string as input and producing the most likely continuation word/token as output, or to the parameters of such models, which simply reflect the matrices of values allocated to each word/token within the models.

What I was trying to convey in my previous message, even if it doesn't seem to register, is that there's loads of things on top of the actual language model to make it act as if it were having a conversation, or telling a story, or solving a problem – from reinforcement learning where actual humans evaluate the responses to the many templates, scripts, and filters specifying how a conversation proceeds, or how a story is told, or how a problem is solved, etc.

But the whole thing is an illusion at the end of the day, as the overall system is just a computer program that has been designed to regurgitate text that it has learned from the vast amounts of text that was actually produced by humans to begin with – a great engineering feat, no doubt. But to keep on ascribing human mental states to these computer programs is not only a bit silly, but also unhealthy.

Bill Benzon said,

March 18, 2023 @ 11:20 am

DJL: So it is your belief, then, that the string of words in that Jack/beanstalk story was not generated by the LLM at the core of ChatGPT but instead was somehow concocted by some unspecified programmatic overlay. I grant the existence of such overlays and have read a fair amount about them. But I have never read anything about them creating word strings that read like stories that were not generated by the underlying LLM. Do you have any evidence at all that LLMs cannot generate strings that read like stories?

Speculation about what might be done by "loads of things on top of the actual language model" is not evidence.

DJL said,

March 18, 2023 @ 11:41 am

I didn't say such a thing; you really need to stop ascribing unsubstantiated beliefs to both machines and other people.

Of course all the text is generated by the underlying LLM (well, not all of it, actually, when the chatbot returns a 'I'm an AI and can't answer this question' answer, it is just applying a template); but the underlying LLM needs to be prompted and directed the right way by the overlay, as you call it, as the LLM doesn't know what the command 'tell me a story', for instance, actually means.

As I have pointed out elsewhere ad nauseam now (as you must surely be aware), when you pose a question to the chatbot, what the LLM receives is something along the lines of:

what's the most likely word/token to follow the string S.

That's it, and the rest of the "magic" happens at the interface between the LLM and the chatbot in the form of prompt engineering, filters, templates, scripts, reinforcement learning, etc etc. No speculation at all, but simply a description of what the system is like – why is it so difficult to understand the difference between the LLM (which you DO NOT interact with directly) and the actual chatbot (or AI assistant, or dialogue management system, which you DO interact with directly), including everything that sits in between?
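To make the division of labour concrete, here is a minimal Python sketch of such an interface. Everything in it is an assumption for illustration: the template wording, the names `build_prompt` and `chatbot_reply`, and the stand-in `fake_complete` are invented, and nothing here is claimed to match how ChatGPT is actually wired. The point is only that the user's text gets wrapped into a string S before any model sees it.

```python
# Hypothetical template: the wording a real chatbot uses is not given in this
# thread, so this text is purely illustrative.
DIALOGUE_TEMPLATE = (
    "The following is a conversation between a user and a helpful assistant.\n"
    "User: {message}\n"
    "Assistant:"
)


def build_prompt(user_message):
    """Wrap the raw user message into the string S handed to the model."""
    return DIALOGUE_TEMPLATE.format(message=user_message.strip())


def chatbot_reply(user_message, complete):
    """`complete` stands in for the LLM: any function from string to string."""
    prompt = build_prompt(user_message)
    return complete(prompt).strip()


def fake_complete(prompt):
    """Stand-in "model" that returns a canned continuation."""
    return " Once upon a time, in a far-off land, ..."


print(build_prompt("Tell me a story."))
print(chatbot_reply("Tell me a story.", fake_complete))
```

Swapping `fake_complete` for a call to an actual model would leave the wrapper unchanged, which is the separation being described.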

Bill Benzon said,

March 18, 2023 @ 12:48 pm

DJL: What I'm having difficulty with is the scope of actions you are attributing to everything but the underlying LLM, to all the "prompt engineering, filters, templates, scripts, reinforcement learning, etc etc." What I see is a prompt – such as "Tell me a story" – followed by a string of words that looks like a story. The string sometimes begins with the standard formula, "Once upon a time, in a far-off land," such as we see in the OP.

All that other stuff you refer to presents the LLM with a string that it can continue. That continuation is the string all that other stuff presents to me. I interpret that string as a story.

Why do I interpret it as a story? Because it has a beginning, a middle, and an end. And it makes sense. At this point I have collected over 300 such strings, using a variety of prompts, some of which I've listed above. Those strings have a lot of structure. I attribute that structure to the LLM. Am I wrong to do so? If the structure in the string wasn't created by the LLM, where did it come from?

I know that the LLM is not a mind. I believe that it is, in fact, something we've not seen until quite recently and something we don't understand. Whatever it is, it is highly structured and, as such, is able to produce strings of words that are highly structured as well. And not just stories. But, for example, it produces strings that read like definitions of "reward," "nation," "culture," "beliefs," "evidence," "observation," "understand," "groups," or "collection." I'm curious about what that structure is and how it works.

If you aren't curious about that, that's fine.

DJL said,

March 19, 2023 @ 4:55 am

If you input the string 'tell me a story' directly into the LLM, what you are going to get in reply is a single word, and that will probably be 'that' – that is, the network would probably calculate that the word 'that' is the most likely continuation to the string 'tell me a story'. That's just what it does; I should know, I have done some coding with LLMs and this is what you get.
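A rough way to picture that single step, in Python. This is not a neural network: it is a counting stand-in over a made-up three-sentence corpus, and `CORPUS`, `next_word_counts`, and `most_likely_next` are names invented for the sketch. It only illustrates the greedy choice of the most frequent continuation for a given prefix.

```python
from collections import Counter

# Made-up miniature corpus, for illustration only.
CORPUS = [
    "tell me a story that ends well",
    "tell me a story that is true",
    "tell me a story about a hero",
]


def next_word_counts(corpus, prefix):
    """Count which word follows `prefix` in each sentence of the corpus."""
    prefix_words = prefix.split()
    n = len(prefix_words)
    counts = Counter()
    for sentence in corpus:
        words = sentence.split()
        for i in range(len(words) - n):
            if words[i:i + n] == prefix_words:
                counts[words[i + n]] += 1
    return counts


def most_likely_next(corpus, prefix):
    """Greedy choice: the single most frequent continuation, or None."""
    counts = next_word_counts(corpus, prefix)
    return counts.most_common(1)[0][0] if counts else None


print(most_likely_next(CORPUS, "tell me a story"))  # prints "that"
```

With this toy corpus the answer is "that" simply because it follows the prefix twice and "about" only once; a real model computes its distribution from learned weights rather than raw counts.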

All I am saying is that in systems such as ChatGPT (a chatbot, and not the LLM itself), there are a lot of things on top of the LLM so that the output looks like what a user might expect it to look like, even if, ultimately, the text is generated by the LLM.

Bill Benzon said,

March 19, 2023 @ 12:10 pm

If you're curious about what's going on, here are some useful links:

How ChatGPT actually works – This is about the "packaging" that DJL is talking about so that the underlying large language model (LLM) is more user-friendly. This is based on a paper by the OpenAI team that did that work.

What Is ChatGPT Doing … and Why Does It Work? – This is a long quasi-technical paper by Stephen Wolfram that has a lot of useful visualizations, including one series on what is happening token-by-token as GPT continues a sentence.

Transformers: more than meets the eye – An informal paper about the transformer mechanism at the heart of GPTs and other AIs, including DALL-E, Stable Diffusion, and AlphaFold.

Language is our latent space – Informal and philosophical:

It gets more speculative from there.

GPT-3: Waterloo or Rubicon? Here be Dragons – I wrote this when GPT-3 first came out. It represents an attempt to create a conceptual framework in which the behavior of GPT-3 makes some kind of sense, even if the exact mechanisms are obscure. That may not seem like asking much, but it's an improvement over terminally mysterious and impossible. Here's the abstract:

Bill Benzon said,

March 19, 2023 @ 1:34 pm

Here's a useful tutorial on word and sentence embeddings, which is how words and sentences get represented in LLMs. They are thus central to LLM technology.

Chas Belov said,

March 19, 2023 @ 1:59 pm

I've asked for a couple stories, initially getting one about Lila slaying a dragon. The second time I asked for a story, I asked for one beginning "It was a dark and stormy night". Obviously not statistically significant, but both times the ending was happy on the initial try. The second story involve a woman who received a male visitor on a rainy night and they wound up talking until the morning. I like to ask for variations, such as in the style of Mark Twain or Edgar Allan Poe or as a slapstick comedy routine, and tend to be entertained by the results.

For the second story, I asked it to retell the story as a Shakespearean tragedy. The worst that happened was that the man left the next morning and the woman was heartbroken at this, obviously a much milder definition of tragedy than I would have expected.

I also tried asking it to tell me the story in reverse. It correctly started out with the ending but get to the middle and proceeded back to the ending rather than winding up with the beginning as I had requested.

I do notice ChatGPT seems to have trouble with requests that ask it to do something unusual. For instance, to tell me the difference between turnips and rutabagas without using the letter E. It would give me an answer that was mostly without the E, but one or two words would have that letter. When I would point that out and ask it to try again, it would apologize and spout out a new answer which corrected that word but introduced another word which had the letter E. After several rounds of this, I told it to restate the exact answer but to replace any words containing the letter E with words that did not contain the letter E. It wound up replacing some other words and left the E words in place.

With the story, I asked it to retell the story but put the word "green" in every sentence. It didn't manage every sentence, but did add green in at least one strange place, giving the cat in the story a green tail. However, "green" appeared only in grammatically correct positions.

Chas Belov said,

March 19, 2023 @ 2:01 pm

*The second story involved a woman who received a male visitor on a rainy night and they wound up talking until the morning.

*It correctly started out with the ending but got to the middle and proceeded back to the ending rather than winding up with the beginning as I had requested.

Bill Benzon said,

March 19, 2023 @ 2:21 pm

Chas Belov: Yes, it likes the name "Lila" and it likes dragons. I've gotten many stories with both. Its default story seems to have a happy ending – which is something that needs to be explained. But if you ask it for a sad story, it will give you one, though likely with some moral about the virtues of sadness.

And then there is this (the bold text is my prompt and the regular text is its reply):

Notice that my prompt said nothing about Z80-Ω-D23 being a machine. But I knew, from prior experience, that ChatGPT would interpret it that way. Note also that it changed the whole ethos of the story from a fairy tale world, with a dragon, to a science fiction world.

Chas Belov said,

March 19, 2023 @ 3:06 pm

As for the most probable next word, when I asked ChatGPT to retell the dark and stormy night story with the Flintstones, the first word out of Fred's mouth was "Wilma!" Unfortunately, he was addressing somebody else at the time.

Bill Benzon said,

March 19, 2023 @ 4:35 pm

And then there's this:

That was back on January 17. I wasn't keeping track of versions back then.

Since then it has figured out how to tell stories involving colorless green ideas. This is from February 17 using the Feb 13 version of ChatGPT:

Bill Benzon said,

March 20, 2023 @ 3:36 pm

On a whim, I decided to try a more sophisticated kind of interaction.