Halfalogues onward


In response to Wednesday's post on the (media response to the) "halfalogues" research, Lauren Emberson sent me a copy of the as-yet-unpublished paper, and so I can tell you a little more about the work. As Lauren agreed in her note to me, it was bad practice for Cornell University to put out a press release on May 19, well before the paper's publication date.

It's apparently normal for journalists to write science stories purely on the basis of press releases, sometimes eked out with a few quotes from an interview. And when the press releases are misleading, this can lead to a spectacular effervescence of nonsense.

However, I'm happy to say that in this case, the press release is an accurate (though brief) description of the research, and as a result, the media coverage is also generally accurate if incomplete. (The earlier post links to a generous sample of it.)

The full citation, when the paper appears, will be Lauren Emberson, Gary Lupyan, Michael Goldstein, and Michael Spivey, "Overheard Cell-Phone Conversations: When Less Speech is More Distracting", Psychological Science, some-date-in-the-future. (I note that Psychological Science is a "RoMEO yellow" publisher with a "12-months embargo", consistent with the generally backwards attitudes of psychology journals, which means that the authors are never allowed to self-archive the final published version, and are not allowed to self-archive a prepublication version until 12 months after formal publication. This makes the month-early press release doubly shameful, in my opinion.)

The paper describes two experiments. (There's no logical reason for the division. The second experiment was presumably added to provide a control that a reviewer at PS asked for — it partially addresses the point that Bob Ladd brought up in a comment on the earlier post — or perhaps a similar point came up in internal discussion among the authors.)

Both experiments began with these raw materials:

Two pairs of college roommates (all female) seated in separate, sound-proof rooms were given conversation starters (e.g. a news article, comic) to discuss over cellphones. After two minutes of conversation, participants recapped the conversation in a monologue producing an even sampling of content in all speech conditions.

Each speaker was recorded using a wireless microphone on a separate channel of a wav file in order to facilitate a clean separation of dialogues. Eight dialogues (one speaker per channel), 8 halfalogues (derived from the non-selected dialogues; one speaker in stereo), and 8 monologues (some “recaps” had to be concatenated) were used.

A “silent condition” served as a baseline to which we compared performance in the 3 speech conditions. All speech files were 60 seconds long and were volume normalized.

The dialogues were 92% speech and the halfalogues were 55% speech. For the second experiment, they

… controlled for the unpredictable acoustics of the halfalogue speech by filtering out the information content (i.e. rendering the speech incomprehensible) while maintaining the essential acoustic characteristics of speech onsets and offsets.

[…] All procedures and material were identical with Experiment 1 with one crucial exception: all sound files were low-pass filtered such that only the fundamental frequency of the speech was audible. This manipulation rendered the speech incomprehensible while maintaining the essential acoustic characteristics of speech; similar to hearing someone speak underwater.

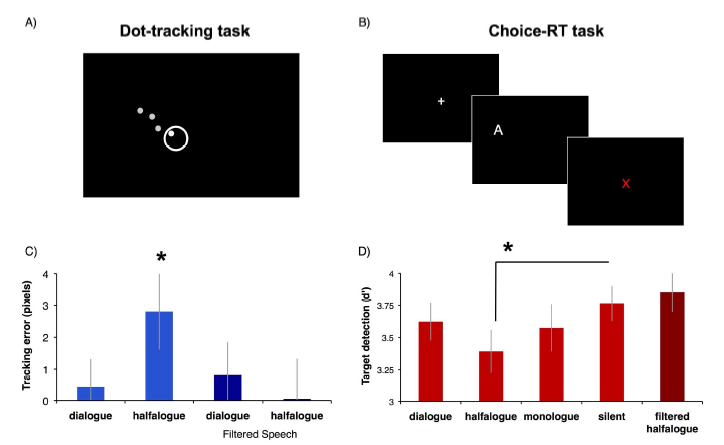

One of the two tasks was a visual monitoring task, where

participants were asked to track a moving dot using a computer mouse. The trajectory of the dot was pseudo-random, shifting at each time-point by 1°-20° in either direction. The dependent measure was the Euclidean distance between the dot and the center of a ring-shaped mouse cursor, recorded every 40ms. Greater distances corresponded to poorer ability to follow the trajectory of the dot.
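To make the dependent measure concrete, here is a minimal sketch of how such a tracking score might be computed. It is not the authors' code. I am assuming that "shifting by 1°-20° in either direction" refers to a change in the dot's heading, that the per-sample distances are averaged over a block, and that the step size (`step_px`) is an arbitrary illustrative value; none of these details is given in the paper.

```python
import math
import random


def simulate_dot_path(n_steps, step_px=3.0, seed=0):
    """Random-walk path whose heading turns 1-20 degrees left or right per step.

    Assumption: the turn applies to the heading; step_px is a made-up value.
    """
    rng = random.Random(seed)
    x, y, heading = 0.0, 0.0, 0.0
    path = [(x, y)]
    for _ in range(n_steps):
        turn_deg = rng.uniform(1.0, 20.0) * rng.choice((-1, 1))
        heading += math.radians(turn_deg)
        x += step_px * math.cos(heading)
        y += step_px * math.sin(heading)
        path.append((x, y))
    return path


def mean_tracking_error(dot_path, cursor_path):
    """Mean Euclidean distance between dot and cursor over paired samples."""
    if not dot_path or len(dot_path) != len(cursor_path):
        raise ValueError("paths must be non-empty and equally long")
    distances = [math.dist(d, c) for d, c in zip(dot_path, cursor_path)]
    return sum(distances) / len(distances)


# A 60-second block sampled every 40 ms gives 1500 samples.
dot = simulate_dot_path(1499)
# Hypothetical participant whose cursor always lags the dot by one sample.
cursor = [dot[0]] + dot[:-1]
error = mean_tracking_error(dot, cursor)
```

A larger `error` would correspond to poorer tracking, as in the quoted description.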

The other task was a choice-reaction-time task, where

Each block began with a display of four randomly-selected English letters that served as the targets for that trial sequence. Each trial began with a fixation cross presented for 600-1000 ms followed by one of the four target letters, or a non-target letter for 400ms. Target letters were presented on 35% of the trials. Participants were allowed 1 s to respond. False alarms or misses were followed by a centrally presented red ‘X’ indicating that the participants responded incorrectly.

The subjects in Experiment 1 were 24 Cornell undergrads, 6 of them male:

Participants were seated at a computer and instructed that they would be completing two different tasks. […] They were given 1 minute of practice with each of these tasks in silence. They were then instructed that they will be completing these tasks a number of times and would sometimes hear speech from the two computer speakers situated on either side of the monitor. Participants were asked to focus their attention on the primary task.

During the experiment, participants were presented with the two tasks in alternating order for 32 blocks, each block was 60 seconds long—the duration of each speech file. The four conditions (3 speech and silence) were presented in shuffled order every 8 blocks.
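The description of the block order leaves some room for interpretation. One reading, which is my assumption and not something the paper spells out, is that each run of 8 blocks pairs each of the two tasks with each of the four conditions exactly once, with the condition order reshuffled for every run. Under that assumption, a schedule could be generated like this (the task and condition labels are mine):

```python
import random

TASKS = ("tracking", "choice_rt")
CONDITIONS = ("dialogue", "halfalogue", "monologue", "silence")


def make_schedule(n_blocks=32, seed=0):
    """Alternate tasks block by block; reshuffle conditions every 8 blocks."""
    cycle = len(TASKS) * len(CONDITIONS)  # 8 blocks per cycle
    if n_blocks % cycle != 0:
        raise ValueError("n_blocks must be a multiple of %d" % cycle)
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_blocks // cycle):
        # One independently shuffled condition order per task in each cycle.
        orders = {task: rng.sample(CONDITIONS, len(CONDITIONS)) for task in TASKS}
        for i in range(cycle):
            task = TASKS[i % len(TASKS)]
            condition = orders[task][i // len(TASKS)]
            schedule.append((task, condition))
    return schedule


schedule = make_schedule()  # 32 (task, condition) pairs, one per 60-second block
```

With 32 blocks, each task would then be performed four times under each condition.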

In Experiment 2, the subjects were an additional 17 Cornell undergrads, 1 of whom was male. (It's not clear whether the preponderance of female subjects reflects the general make-up of those who enroll in introductory psychology at Cornell, or whether it reflects a differential propensity to volunteer for experiments in general, or differential interest in this experiment in particular. I suspect that each factor added a bit to the effect.)

Here's a graph of the results:

The authors don't tell us what the sampling rate (and thus acoustic bandwidth) of the audio recordings was, or what the effective listening levels were, or what the characteristics of the low-pass filter were. They say that "all speech files … were volume normalized", but not what that means: did they use the "normalize" command in a program like Audacity, which equates peak waveform values? Did they normalize RMS amplitudes? A-weighted amplitudes? Some other weighting? These may seem like somewhat picky points, but they might well make a difference (maybe a large one) in the results of a replication. (I'm a bit surprised that Psychological Science didn't ask for these details. In any event, the stimuli in such experiments should always be made available by the journal, now that the difficulty and expense of doing so are rapidly approaching zero.)
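To see why the choice of normalization could matter here, consider a small sketch comparing peak and RMS normalization on two toy signals. Everything in it is illustrative: the target levels, the function names, and the signals are my own, and the paper does not say which method (if either) was used.

```python
import math


def peak(samples):
    """Largest absolute sample value."""
    return max(abs(s) for s in samples)


def rms(samples):
    """Root-mean-square amplitude over the whole file, silences included."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def normalize_peak(samples, target_peak=1.0):
    """Scale so that the largest absolute sample equals target_peak."""
    p = peak(samples)
    if p == 0:
        raise ValueError("cannot normalize pure silence")
    return [s * target_peak / p for s in samples]


def normalize_rms(samples, target_rms=0.1):
    """Scale so that the whole-file RMS equals target_rms."""
    r = rms(samples)
    if r == 0:
        raise ValueError("cannot normalize pure silence")
    return [s * target_rms / r for s in samples]


# Toy stand-ins: a continuous tone, and the same tone with its second half silenced.
steady = [math.sin(2 * math.pi * k / 100) for k in range(1000)]
sparse = steady[:500] + [0.0] * 500

# Peak normalization leaves both with the same peak but different RMS levels.
peak_pair = (rms(normalize_peak(steady)), rms(normalize_peak(sparse)))
# RMS normalization equates RMS, so the sparse signal's sounding part is louder.
rms_pair = (peak(normalize_rms(steady)), peak(normalize_rms(sparse)))
```

If whole-file RMS normalization were applied to real stimuli, a file with long silences would end up with louder speech stretches than a file that is nearly continuous speech. Since the halfalogues were only 55% speech, this is exactly the kind of detail a replication would need to know.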

But overall, these experiments are simple and effective in establishing their point, which in turn was explained accurately by the mass media.

The experiments are simple enough that they could easily be replicated and extended by others. And they should be, because many questions are left open. What would be the effect of dialogues vs. halfalogues in an unknown foreign language? How about coherent monologues with silences inserted, so as to match the duty-cycle statistics of the halfalogues? Or similarly-spaced sequences of syntactically, semantically or pragmatically anomalous sentences?
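The monologue-with-silences control is easy to sketch. Assuming you already have the speech intervals of a halfalogue and the phrase durations of a monologue (how to detect them is a separate problem, and all the numbers below are invented), you could copy the halfalogue's gaps into the monologue's timeline:

```python
def speech_fraction(segments, total_duration):
    """Share of total_duration covered by (start, end) speech intervals."""
    return sum(end - start for start, end in segments) / total_duration


def gaps_between(segments):
    """Silent gaps between consecutive speech intervals, in order."""
    return [nxt[0] - cur[1] for cur, nxt in zip(segments, segments[1:])]


def respace(phrase_durations, gaps):
    """Lay phrases end to end, separated by the given gaps.

    Returns the new (start, end) interval of each phrase, in seconds.
    """
    if len(gaps) != len(phrase_durations) - 1:
        raise ValueError("need exactly one gap between each pair of phrases")
    t = 0.0
    timeline = []
    for i, duration in enumerate(phrase_durations):
        timeline.append((t, t + duration))
        t += duration
        if i < len(gaps):
            t += gaps[i]
    return timeline


# Hypothetical halfalogue: speech intervals in seconds.
halfalogue = [(0.0, 2.0), (5.0, 6.0), (8.0, 11.0)]
# Hypothetical monologue phrases, originally spoken back to back.
monologue_phrases = [2.5, 1.5, 2.0]

spaced = respace(monologue_phrases, gaps_between(halfalogue))
fraction = speech_fraction(spaced, spaced[-1][1])
```

This matches the sequence of pause lengths but not necessarily the overall proportion of speech, which also depends on the phrase durations; a real design would have to decide which statistics to equate.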

What about the effect of ways of making speech unintelligible that don't change the long-term average spectrum? (Their low-pass filtering, by definition, removes all the high frequencies — and there's a reason that warning sounds, for example the beeps warning you that a truck is backing up, are rich in high frequencies. Thus low-pass filtering was not an adequate way of testing the hypothesis that what's distracting is (partly) intermittent or unpredictable sounds of certain kinds, independent of any communicative content.)

These results also seem partly at variance with the results reported in Melina Kumar et al., "Telephone conversation impairs sustained visual attention via a central bottleneck", Psychonomic Bulletin & Review (2008), where the authors found that performance on a multiple-object tracking (MOT) task was disrupted when subjects heard a sequence of isolated words and had to respond with a related word for each one, but not when they only had to repeat the words that they heard. (At least, the theories advanced in the two papers are superficially inconsistent — Emberson et al. suggest that there's a large attentional load created by passive prediction, at least when half of a conversation is missing; Kumar et al. suggest that the attentional load in carrying on a conversation is entirely due to processes of response generation. Both theories could be true, or partly true and partly false, but they point in somewhat different directions.)

So if you're a student in one of the relevant disciplines — linguistics, psychology, maybe even communications or sociology if you have an especially open-minded advisor — and you're looking for an interesting topic for a potentially high-impact experiment that's relatively easy and quick to do, you should consider giving this area of research a shot.

Emily Viehland said,

June 11, 2010 @ 9:48 am

I would expect a gender difference, but there were not enough male subjects to tease this aspect apart. A bigger follow-up study with a balanced gender population would be very useful.

[(myl) Perhaps, but I question whether it would be appropriate to draw any conclusions about gender effects in general based on a sample of undergraduates — no matter how large — enrolled in Intro Psych at a single rather unrepresentative institution. If the issue were overall American sex differences in attitudes towards a national political candidate, would you believe a survey whose subjects were a few dozen 19-year-olds who volunteered in a psychology course at a single institution with highly skewed demographics in other respects? Any self-respecting social scientist would be shocked by this. Maybe gender differences in propensity to be distracted by overheard conversations between female college roommates are the same among 19-year-old psychology students at Cornell and (say) 50-year-old office workers in Atlanta or 30-year-old police officers in Billings, but it would be unwise to assume this without any evidence. See "Pop platonism and unrepresentative samples", 7/26/2008, for some further discussion.]

Rubrick said,

June 11, 2010 @ 2:24 pm

A study investigating any gender difference would surely provide more fodder for Language Log, in the form of the sort of idiotic, misleading press accounts which are apparently lacking in the current story.

[(myl) Though I have no relevant evidence, I'd be willing to bet that there are large individual differences in ability to ignore "halfalogues", as well as in ability to tune out other sorts of distractions. And it would make sense for those individual differences to carry over into group differences among people with different skills and preferences, different personality types, different life experiences, and so on. It's also quite possible that there are large sex differences, whether for biological or cultural reasons (or both). But to sort this all out, in my opinion, you'd have to do more than test some Ivy-league freshmen.]

Kelly said,

June 11, 2010 @ 2:41 pm

I had an experience once that I think is probably related to this phenomenon. My coworker had a little radio on her desk, and while it annoyed me pretty much all the time when she had it on, I found it was far more annoying when she turned it down very softly in an attempt to not disturb the rest of us. I could just almost make out what was being said or sung, and as much as I tried to ignore it I ended up spending the rest of the day listening very hard and trying to understand what I heard. It was annoying, distracting, and exhausting! I think I'd prefer to listen to a halfalogue.

exackerly said,

June 11, 2010 @ 3:05 pm

I think the only reasonable thing to do is pretend the person is talking to you and fill in the other half of the conversation yourself. Out loud of course.

john riemann soong said,

June 11, 2010 @ 4:32 pm

Why would someone /expect/ a gender difference? Based on the current data out there on language and sexual dimorphism, a respectable null hypothesis would be to assume /no/ gender difference.

[(myl) Well, if you believe the "mind blindness" theory of (some kinds of?) autism, and the "extreme male brain" theory of (some kinds of?) autism, then there ought to be fairly large sex differences in the strength of the "theory of mind" response that presumably underlies the effects found in this experiment. These two theories are supported by well-informed and sensible people — Steve Pinker and Simon Baron-Cohen respectively.

I'm rather skeptical of both theories, myself — at least I think It's More Complicated Than That™ — but I can see that plenty of smart and reasonable people would predict a sex effect in experiments of this kind.

(Whether the prediction is that males would be MORE distracted, because their ToM abilities are (by hypothesis) not as good, or LESS distracted, because ditto, is not so clear…)]

Jason F. Siegel said,

June 11, 2010 @ 6:00 pm

Does the study make any references to literature about obligatory parsing? I imagine that the instinct to parse speech-like sounds as speech is one that gets amplified when we know we're not getting full information.

D.O. said,

June 11, 2010 @ 6:37 pm

Language Log might also contribute to this valuable research. You can publish half-a-blog-post with cleverly omitted parts and see how much the readers will be annoyed/distracted. My bet: you will have 100 comments trying to fill in the gaps.

Mark said,

June 11, 2010 @ 6:47 pm

I am curious as to whether anyone pays attention to the embargoing rules at a journal like this one. If you flout the rules and post your article on your web site, is there ever any consequence? I have never heard of one. These seem like unenforceable rules. I routinely post my own papers in the belief that the more people who do so, the more quickly the ridiculous constraints will die.

Rubrick said,

June 11, 2010 @ 7:06 pm

[(myl) Though I have no relevant evidence, I'd be willing to bet that there are large individual differences in ability to ignore "halfalogues", as well as in ability to tune out other sorts of distractions.]

I suspect you're right. Someone will therefore do a study which finds large within-group variation and small but perhaps significant between-group variation, which will be reported in the headlines as "Women Make Better Eavesdroppers". Which LL will then get to rail about.

Garrett Wollman said,

June 12, 2010 @ 12:10 am

@kelly & myl: I can report similar anecdotal evidence: I also find that having an officemate leave the radio turned down very low is much more distracting than having it at a normal volume, and furthermore that having an officemate have long (but unmuted) telephone conversations in Russian is also quite distracting.

SlideSF said,

June 12, 2010 @ 3:55 am

Alas, too much charging has brought me into the Valley of Debt. I know, Crimea river, right?

CBK said,

June 14, 2010 @ 1:43 am

@myl: Baron-Cohen’s estimate of how his five brain types are distributed by gender appears to be based on questionnaire responses from a total of 324 people. See Baron-Cohen, 2009, p. 76, which references Goldenfeld et al., 2005, which says on p. 339:

“Group 1 comprised 114 males and 163 females randomly selected from the general population. Group 2 comprised 33 males and 14 females diagnosed with Asperger Syndrome (AS) or high-functioning autism (HFA).”

Do you know if he’s looked at a larger sample? (And how does he get a randomly selected person to answer a questionnaire?)

Nanani said,

June 15, 2010 @ 2:45 am

I've always found overheard conversations to be less distracting if they're not in the language I am currently working in. So, if I'm reading or writing in English, background speech in English will distract far more than in Japanese, and ditto if I am working in Japanese.

I rarely get exposed to random speech in a language I don't understand, halfalogue or otherwise.