Lowpass filtering to remove speech content?

« previous post | next post »

In the "halfalogues" research that I've discussed in a couple of posts recently ("Halfalogues", 6/9/2010; "Halfalogues onward", 6/11/2010), one of the experimental manipulations was intended to establish that "it is the unpredictable informational content of halfalogues that result [sic] in distraction", and not (for example) that the distraction is simply caused by an acoustic background that comes and goes at irregular intervals like those of conversational turns.

As I suggested in passing, the method that was used for this experimental control has some intrinsic problems, and the paper doesn't give enough information for us to judge how problematic it was. Today I'm going to explain those remarks in a bit more detail. (Nerd Alert: if you don't care about the methodology of psychophysical experimentation, you may want to turn your attention to some of our other fine posts.)

From the paper's abstract:

We show that merely overhearing a halfalogue results in decreased performance on cognitive tasks designed to reflect the attentional demands of daily activities. By contrast, overhearing both sides of a cell-phone conversation or a monologue does not result in decreased performance. This may be because the content of a halfalogue is less predictable than both sides of a conversation. In a second experiment, we control for differences in acoustic factors between these types of overheard speech, establishing that it is the unpredictable informational content of halfalogues that result in distraction.

Here's a bit more of their reasoning, from the body of the paper:

Relative to dialogues, halfalogues are less predictable in both their informational content and their acoustic properties. Whereas dialogues involve the nearly continuous switching of speech between speakers, in halfalogues, speech onsets and offsets are more unpredictable. If these unpredictable acoustic changes were solely responsible for the detrimental effects of overhearing halfalogues, then filtering out the informational content while leaving the low-level acoustic content the same, would still result in significant distraction. On the other hand, if the increased distraction observed while overhearing halfalogues is due to the relative unpredictability of the content of half of a conversation, then filtering the speech should nullify the distraction resulting from overhearing halfalogues. Thus, Experiment 2 controlled for the unpredictable acoustics of the halfalogue speech by filtering out the information content (i.e. rendering the speech incomprehensible) while maintaining the essential acoustic characteristics of speech onsets and offsets.

How did they "control for the unpredictable acoustics of the halfalogue speech by filtering out the information content … while maintaining the essential acoustic characteristics of speech onsets and offsets"?

[A]ll sound files were low-pass filtered such that only the fundamental frequency of the speech was audible. This manipulation rendered the speech incomprehensible while maintaining the essential acoustic characteristics of speech; similar to hearing someone speak underwater.

But the problem with lowpass filtering is that it removes a very large portion of "the essential acoustic characteristics of speech". Specifically and obviously, it removes the higher frequencies. And since the typical range of fundamental frequencies for female conversational speech is something like 100-300 Hz, while the range of speech-relevant frequencies goes up to 8,000 Hz or so, we're talking about removing a very large fraction of the "essential acoustic characteristics of speech".

This has many psychological consequences, but I'll focus on one simple aspect of the outcome: it's likely to make the overall psychologically-relevant sound level of the audio signal a great deal lower.

Here's the peak-normalized original for a short piece of female speech (specifically, TEST/DR2/FCMR0/SI1105.wav from the TIMIT collection):

Audio clip: Adobe Flash Player (version 9 or above) is required to play this audio clip. Download the latest version here. You also need to have JavaScript enabled in your browser.

Here's the similarly peak-normalized lowpass signal:

Audio clip: Adobe Flash Player (version 9 or above) is required to play this audio clip. Download the latest version here. You also need to have JavaScript enabled in your browser.

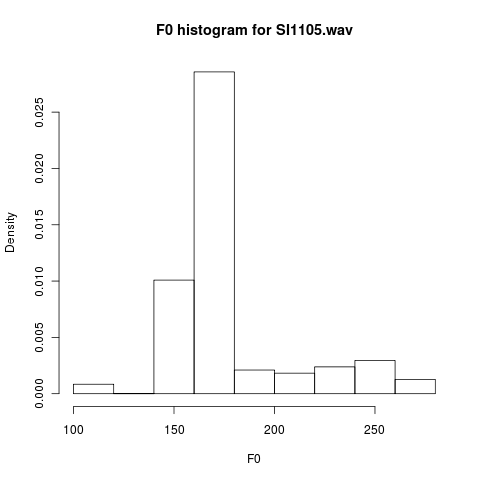

By "peak-normalized", I mean that the samples in the .wav file are scaled by a factor chosen so that the sample with the highest absolute value just fits within the 16-bit range of values allowed. And I've lowpass filtered the signal such that the edge of the passband is at 300 Hz, reflecting the fact that the highest fundamental-frequency value was 280 Hz. For completeness, here's a histogram of the pitch values in the utterance:

As you can hear, the lowpass signal sounds a lot softer, and therefore will presumably be less salient and less distracting. Go listen to the two signals again, if you've forgotten.

But there's no way to make the lowpass signal louder, except of course by turning up the gain on an external amplifier. It's been "normalized" to be as loud as it possibly can be as a digital waveform.

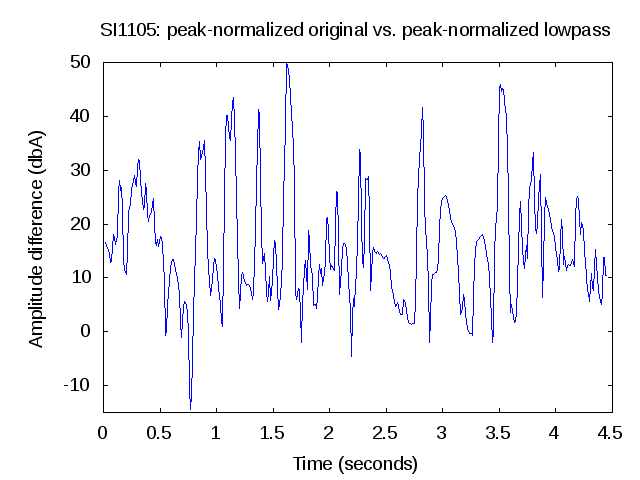

How big is the difference in acoustic sound level between the peak-normalized original and the peak-normalized lowpass version? Well, here's a plot of the difference (in decibels) between the A-weighted amplitudes of the two signals (calculated 100 times a second in a 30-msec hamming window):

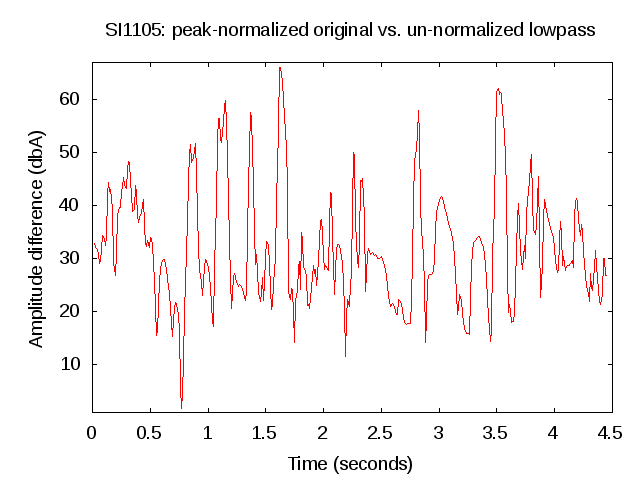

As you can see, the original signal is 10 to 50 db louder. If I hadn't peak-normalized the filtered signal, the different would have been about 15 db larger still (because the filtering process removes a lot of original signal, just someone's legs weigh less than their entire body does).

So if this was (even roughly) the way that the filtering and the volume-normalization was done, then the filtered audio was arguably a lot less distracting than the original audio, for the purely acoustical reason that its sound level was quite a bit lower.

Perhaps the filtering and volume-normalization was done in some different way — but if so, the paper's authors should have told us the details. For example, it would be possible to create a situation in which the levels of the stressed syllables in the original and in the filtered signal are comparable. But to do that, you'd have to scale the original audio down by something like 30 db relative to the filtered audio. And if the filtered audio were presented at a comfortable listening level, over loudspeakers in an office or lab setting (which was apparently the procedure in this case), then the scaled-down original audio would be rather hard to hear.

And even if the two types of audio were equated in overall sound level by some manipulation of this kind, we would not be "leaving the low-level acoustic content the same", since, by definition, the higher-frequency 95% or so of the acoustic content would be missing in the lowpass version. For this reason, lowpass filtering is a problematic (though common) way to accomplish the goal of "filtering out the information content (i.e. rendering the speech incomprehensible) while maintaining the essential acoustic characteristics of speech onsets and offsets".

There are some more complicated ways to manipulate speech so as to make it unintelligible while retained key acoustic properties of the original. But in this case, it seems to me, there are some obvious designs for appropriate controls that don't require any fancy signal-processing (though they do impose other requirements). For example, you could produce dialogues and halfalogues in two languages whose speakers are mostly non-overlapping — say Finnish and Chinese. Then each subject does the dot-tracking task (or whatever) while listening to comprehensible and incomprehensible dialogues and halfalogues. The prediction of Emberson et al., I think, is that the Finnish subjects will be distracted by the Finnish halfalogues but not by the Finnish dialogues or the Chinese dialogues or halfalogues, and vice versa for the Chinese subjects.

I suspect that this is indeed how it would turn out, except that it wouldn't surprise me to find that there are several effects simultaneously active here: a cognitive load created by following comprehensible and coherent speech, which is greater for comprehensible halfalogues than for the other conditions; an acoustic distraction effect created by intermittent stimuli, which is greater for halfalogues in general than for dialogues; and an unfamiliarity effect, which is greater for stimuli in an unfamiliar language (especially if it involves strikingly unfamiliar sound patterns) than for speech in a familiar one. It's probably also true that some people's conversations (half or full) are much more distracting than others, for example if the people or topics involved arouse strong affective reactions of one kind or another.

However, as Yogi Berra said, it's tough to make predictions, especially about the future.

I'm convinced, at least tentatively, that "mind-reading fatigue" is a big part of the reason that people find public cell-phone conversations so annoying. But this idea sits in the middle of a lot of other interesting phenomena — read about the "mismatch negativity effect" for one of several dimensions of interest — and I expect that there will be a lot of follow-up. As I mentioned in one of the earlier posts, this is a relatively easy kind of experiment to do, and there are lots of obvious things to try that would help clarify what's going on. One thing that would help: an open-source experimental design, with code for running subjects and accumulating results.

[In creating the examples above, I used sox for the filtering and volume normalization, and octave for calculating and plotting the A-weighted amplitude difference, using code that I'll be happy to share with anyone who's interested.]

Mark F. said,

June 12, 2010 @ 8:19 am

They should hire a trumpeter with a mute to produce grownup voices from Peanuts.

[(myl) One of the alternative techniques that I referred to involves using a waveform-table oscillator driven by pitch and amplitude contours derived from speech. (Example here.) You could use a trumpet-like waveform for this (though the onsets wouldn't be especially trumpet-like).]

noahpoah said,

June 12, 2010 @ 9:54 am

It's not clear to me from the wikipedia article, but when carrying out A-weighting, do you sum (from zero to the nyquist frequency) the (element-wise) product of, say, an FFT of the signal and the A-weighting function for each windowed set of samples?

It seems like you should get a function (of frequency) for each windowed set of samples (i.e., at each time point), but in your figures, you have a single value at each time point.

[(myl) See here (and here). The crucial stretch of (matlab or octave) code:

You could also apply an A-weighting filter in the time domain, and then calculate the amplitude in the usual way (e.g. by taking the root mean square of samples in a window, and then using 20*log10() to turn it into dB.)

Either way, you get one sound-level value per time region. A traditional sound-level meter does something similar, whether in analog circuitry or (more likely these days) by digital means.]

Sili said,

June 12, 2010 @ 10:33 am

Interesting. We attribute that saying to Robert Storm Petersen (or Niels Bohr – I forget).

Of course, now that I check it turns out to be of unknown origin, possibly by someone in parliament '35-'39 according to the memoirs of K.K. Steincke (1948).

[(myl) This page gives attributions to Yogi Berra, Niels Bohr, Casey Stengel, Sam Goldwyn, Dan Quayle, Will Rogers, Mark Twain, Albert Einstein, Allan Lamport, George Bernard Shaw, Victor Borge, Robert Storm Petersen, Winston Churchill, Disraeli, Groucho Marx, Enrico Fermi, Woody Allen, Confucius, Freeman Dyson, Cecil B. DeMille, Vint Cerf, and a number of others.

As Yogi himself explained, "I really didn't say everything I said!".]

Rubrick said,

June 12, 2010 @ 1:50 pm

…proving that postdictions aren't so easy either.

D.O. said,

June 12, 2010 @ 2:05 pm

Another possibility for control is reading a text in the native language (that is English, of course) almost completely devoid of the surprise value, say The Pledge of Allegiance.

D.O. said,

June 12, 2010 @ 2:08 pm

@Rubrick. There is a whole country with unpredictable past…

baylink said,

June 13, 2010 @ 2:55 am

Normalize, then *compress*; this will bring the average

level back up.

[(myl) Not true. When the file is played, it will go the D/A converter as PCM waveform samples, and whatever bit-depth is used (typically 16), the maximum (absolute) sample values are the maximum values, there's no getting around it.

If you remove 95% of the frequency spectrum of an audio clip whose amplitude is already peak-normalized, then the only way to give the residue the same sound power (or the same loudness, though this is not the same) as the original is to radically lower the level of the original.

It's possible that the experimenters did this, but my guess is that they just peak-normalized the two signals, which (as explained above) yields a big difference in sound levels, in perceived loudness, and (presumably) in distraction.]

GAC said,

June 13, 2010 @ 11:11 pm

About the foreign language idea, would it also behoove them to make sure they have native speakers? I don't know how it works for most people, but I find that dialogues in my second languages can either be tuned out entirely or listened to much more intently than normal (I have to attend to get any information, whereas overhearing a conversation in English I can get information with so little effort that I have to make an effort to tune out rather than to tune in).

That's just my experience, though. I don't know how much of a difference it makes in practice.

Nick Lamb said,

June 14, 2010 @ 1:05 pm

Um, I think baylink is right and Mark has rather missed the point.

http://en.wikipedia.org/wiki/Dynamic_range_compression

For a small adjustment you can be more brutal than a real compressor, and perform your own "brickwall" compression by running the PCM sample values through a saturating multiply operator in a scientific mathematics app rather than using music-oriented audio tools. This causes "clipping" but that actually doesn't sound too bad if it's not done too much (in contrast "wrapping" which happens with a non-saturating multiply sounds like harsh noise and is immediately objectionable).

Anyway, the end result of range compression is that your audio now sounds "louder" and we can use this sort of technique to compress the filtered audio until it sounds just as loud (either by ear, or with analysis tools built to whatever theory of audio perception you subscribe to) as the original.