Yanny vs. Laurel: an analysis by Benjamin Munson


A peculiar audio clip has turned into a viral sensation, the acoustic equivalent of "the dress" — which, you'll recall, was either white and gold or blue and black, depending on your point of view. This time around, the dividing line is between "Yanny" and "Laurel."

What do you hear?! Yanny or Laurel pic.twitter.com/jvHhCbMc8I

— Cloe Feldman (@CloeCouture) May 15, 2018

The Yanny vs. Laurel perceptual puzzle has been fiercely debated (see coverage in the New York Times, the Atlantic, Vox, and CNET, for starters). Various linguists have chimed in on social media (notably, Suzy J. Styles and Rory Turnbull on Twitter). On Facebook, the University of Minnesota's Benjamin Munson shared a cogent analysis that he provided to an inquiring reporter, and he has graciously agreed to have an expanded version of his explainer published here as a guest post.

Sounds produced with a relatively open vocal tract (like the vowels in "Laurel" and "Yanny") and some consonants (like the "l", "r", "y", and "n" sounds in "Laurel" and "Yanny") have infinitely many frequencies in them. Think of it like hundreds of tuning forks playing at once. If the lowest-frequency tuning fork vibrates at 100 cycles per second, then the tuning forks will be at integer multiples of 100 Hz: 100, 200, 300, up to infinity. If the lowest-frequency tuning fork vibrates at 120 cycles per second, then the tuning forks will be at integer multiples of 120 Hz: 120, 240, 360, up to infinity. We can change the frequency of the so-called 'lowest frequency fork' by changing the tension in our vocal folds (layperson: 'vocal cords'), which causes them to vibrate more slowly or more quickly. We hear those changes as changes in the pitch of the voice, like the pitch glide upward when you ask a yes-no question, or the pitch glide downward when you make a statement. But speech has many more frequency components than just that lowest-frequency component. Remember, infinitely many tuning forks. The difference between an "ee" and "ah" vowel is that some of the frequencies that are especially loud in "ee" are quiet in "ah" and vice versa. The same frequencies are present (the tuning forks are always vibrating), but the loudness of each of the frequency components (each of the tuning forks) changes from vowel to vowel.
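To make the tuning-fork analogy concrete, here is a minimal Python sketch. The function names and the amplitude values are invented for illustration; only the integer-multiple relationship and the idea that vowels differ in which components are loud come from the explanation above.

```python
def harmonic_frequencies(f0_hz, count):
    """Return the first `count` 'tuning forks' for a lowest frequency of f0_hz."""
    return [f0_hz * k for k in range(1, count + 1)]


def loudest_harmonics(frequencies, amplitudes, top=2):
    """Return the `top` frequencies with the largest amplitudes, in ascending order."""
    ranked = sorted(zip(amplitudes, frequencies), reverse=True)[:top]
    return sorted(freq for _, freq in ranked)


# Same tuning forks for both vowels; only the loudness pattern differs.
forks = harmonic_frequencies(100, 6)          # 100, 200, ..., 600 Hz
vowel_a = [0.2, 0.9, 0.3, 0.1, 0.8, 0.2]      # hypothetical amplitudes
vowel_b = [0.3, 0.2, 0.9, 0.7, 0.1, 0.1]      # hypothetical amplitudes

print(forks)
print(loudest_harmonics(forks, vowel_a))
print(loudest_harmonics(forks, vowel_b))
```

Changing the first argument from 100 to 120 moves every fork (a pitch change), while swapping one amplitude list for the other leaves the forks alone and changes only which ones stand out (a vowel change).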

Hopefully I've been clear so far, because here's where it gets weird.

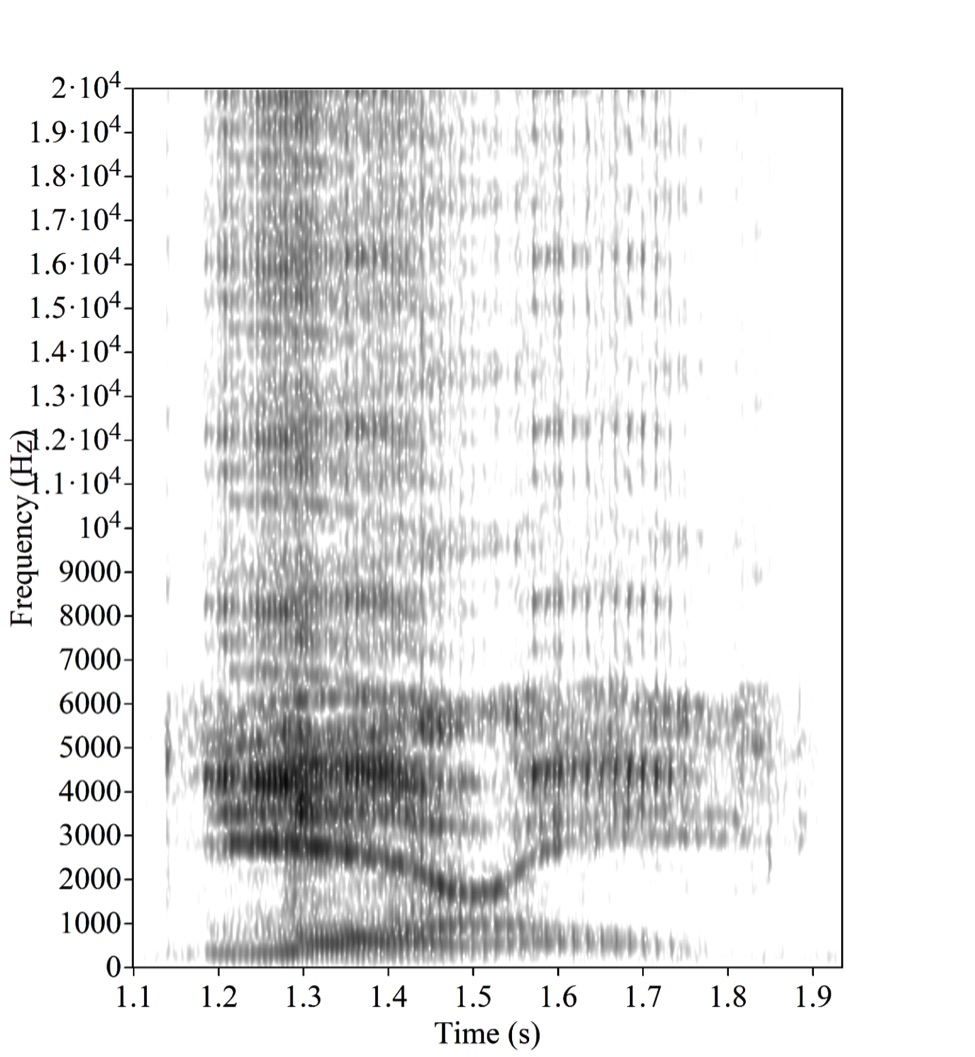

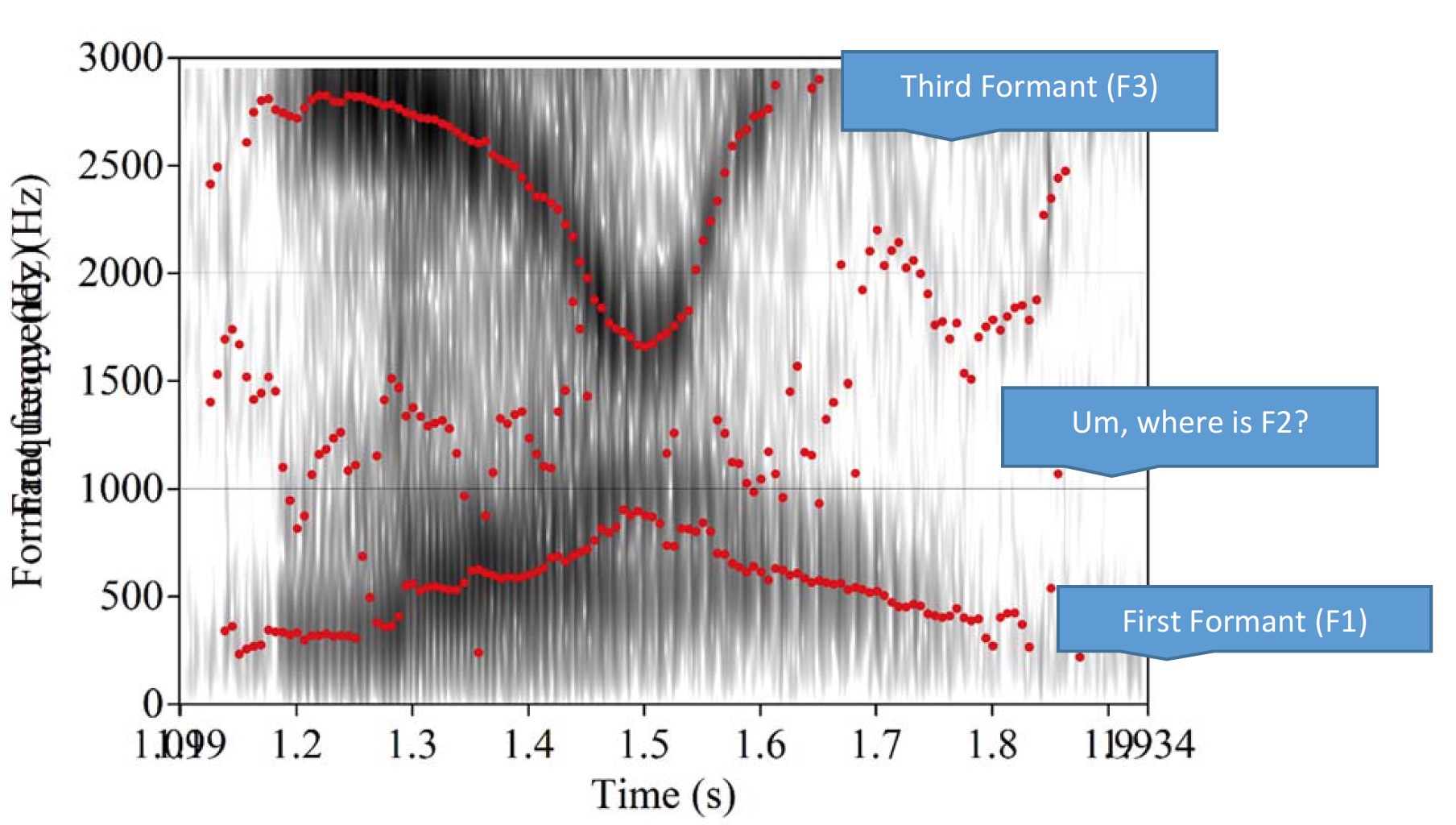

So, the frequencies (the 'tuning forks') that are loudest are what we call formants. The formants are the horizontal stripes in the picture above, called a spectrogram. A spectrogram is a quasi-3D picture. It's sort of like a topographic map. The x-axis is time, so a spectrogram can show things changing over time. The y-axis is frequency. There are many different frequencies in speech (many different 'tuning forks' in our analogy) and we need to represent as many of these as we need to describe speech. In speech, we usually focus on those frequencies between 0 and 10,000 Hz, but since the youngest, healthiest humans can hear up to 20,000 Hz, we sometimes show 0-20,000 Hz, as in the above. The shading shows which of the frequencies are loudest. Think of the shading on a topographic map: the shading shows where the mountains are. It's the third dimension of the map. The dark-shaded regions are the highest amplitude (=loudest, though 'loudness' and 'amplitude' are subtly different for reasons we won't worry about here). The formants (=the frequencies where there are amplitude peaks) change over the course of the utterance, as you go from "l" to the "aw" vowel to the "er" vowel to "l". Roughly speaking, each formant has an articulatory correlate (or, the higher up you go, correlates, plural). The frequency of the lowest-frequency formant roughly tracks tongue movement in the up-down dimension (from a high position in "ee" in "beet" to a low position in the 'short a' of "bat"). The second-lowest formant roughly tracks tongue movement in the front-back dimension (from the front position of the 'short a' in "bat" to the back position of the 'long a' in "bot"). We tend to perceive these differences regardless of the absolute frequency of the formants. A child's formants are higher than an adult's, because kids' mouths and necks are smaller than adults'. Still, we perceive the lowest-frequency formant as tracking up-down tongue movement, regardless of whether it's lower-frequency overall (as in an adult) or higher-frequency overall (as in a kid). Think of the way a melody sounds on an alto saxophone versus a baritone saxophone. We can hear the same notes and melody even though the timbre (the 'tone quality', so to speak) is different because of the overall size differences of the instrument. For illustration, here is the lowest 2000 Hz of the above spectrogram, with the formants overlaid on it in red.

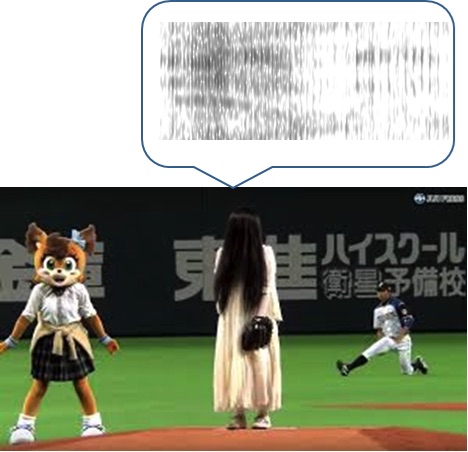
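For readers who want to see how a spectrogram of the kind described above is built, here is a rough sketch in plain Python. It is not the software used to make these figures: the signal is a synthetic two-tone stand-in rather than the viral clip, and the frame length, sampling rate, and function names are all assumptions chosen to keep the example small.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Amplitude of each non-negative-frequency DFT bin of one frame (naive O(n^2))."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def spectrogram(samples, frame_len, hop):
    """Rows are time frames, columns are frequency bins, values are amplitudes."""
    rows = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        # Hann window: tapers the frame edges to reduce spectral leakage.
        windowed = [
            x * (0.5 - 0.5 * math.cos(2 * math.pi * i / frame_len))
            for i, x in enumerate(frame)
        ]
        rows.append(dft_magnitudes(windowed))
    return rows


def tone(freq_hz, count, rate):
    """A pure sine tone of `count` samples."""
    return [math.sin(2 * math.pi * freq_hz * t / rate) for t in range(count)]


# Synthetic test signal (an assumption): 500 Hz, then 1500 Hz.
rate = 8000
signal = tone(500, 256, rate) + tone(1500, 256, rate)

spec = spectrogram(signal, frame_len=64, hop=64)
bin_hz = rate / 64  # each column spans 125 Hz
peaks = [row.index(max(row)) * bin_hz for row in spec]
print(peaks)
```

Each row of `spec` is one vertical slice of the picture (one moment in time), and the largest value in a row marks the darkest stripe at that moment. Tracking that peak from row to row is a crude version of the red formant overlay.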

Now, right away we see that there is something awry about this signal. Where there should be a second formant, there are just speckles that appear random. One thing about this signal is that it's hard to track the F2. This is perhaps the first ingredient in why it is so susceptible to being identified differently. The F2 is, for some reason (overlapping voices? Intentional shenanigans? The girl from The Ring?) masked. That means that people can use 'top-down' knowledge (expectations, beliefs, priming from the words on the screen that this was presented with) to fill in their perception of the tongue's back-front movement. This was first pointed out by Rory Turnbull in his nice analysis of this signal.

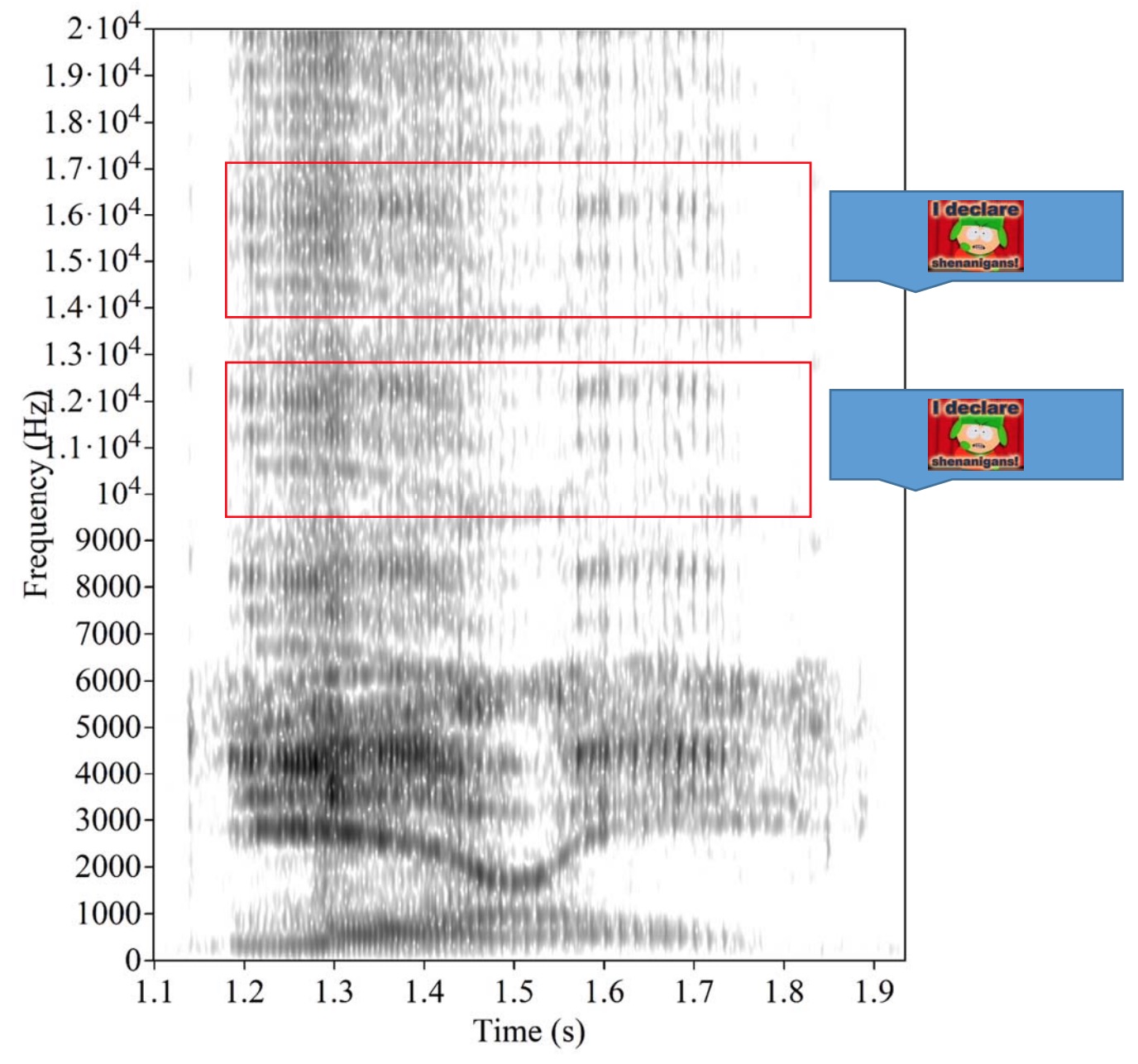

Now, look at the first spectrogram, and focus on the higher frequencies. You see some faint stripes that look like lighter-gray formants at those higher frequencies. Those shouldn't be there. Humans can't produce those. Perhaps they were made by some bored undergrad who just discovered a few facts about signal processing. Let's imagine that the bored undergrad made them by taking some formants at lower frequencies and raising their frequencies (not a hard thing to do, honestly, using the 'change gender' function in the free software application Praat), then mixing them back in with the original signal. Now, just to be clear, the higher-frequency formants are not an additional 'new voice' (scare quotes intentional) in the signal. It's the same tuning forks. It's just that the higher-frequency tuning forks have the same patterns of high and low loudnesses as the lower-frequency ones. I heard the higher-frequency formant sequences when I first listened to this signal two hours ago and thought that they maybe were someone talking in the background. Then I thought "ERMERGERD, IT'S THE AUDIO VERSION OF THE RING."

Then I remembered that I was an adult, and that The Ring was just a movie. So, I decided to look at the spectrogram. Then, I made the attached spectrogram and realized that one of the primary weird things in this signal is that it has a lower-frequency formant pattern repeated at higher frequencies.

Excursus: Rory Turnbull pointed out that we don't know what kind of compression algorithm is used on Twitter. Maybe that's to blame, and not a bored undergrad. But no matter, I will assert with some confidence that these *shouldn't* be there based on what we know about human speech production.

Returning from the excursus, we can now ask: what does that mean?

One possibility is that the formant pattern at the higher frequencies is just "Laurel" transposed to higher frequencies, and that "Laurel" sounds like "Yanny" at higher frequencies. That's plausible–we never hear that kind of higher-frequency speech, and we don't have a huge body of scholarship on what higher-frequency formants would sound like.

Excursus: since writing this, Suzy Styles has tweeted a B-E-A-U-T-I-F-U-L tutorial on speech acoustics, along with her equally beautiful interpretation of this phenomenon. WTG, Dr. Prof. Styles! If you read Dr. Prof. Styles' tutorial, you will learn a figurative ton about the perception of formant-frequency changes. If you want to learn more about the perception of higher-frequency formants, you can dive head-first into UC-Davis professor Santiago Barreda's work on formant-frequency shifting in speech.

So, circling back: high-frequency formants like those highlighted with "shenanigans" above just don't occur in human speech.

Is that the only possibility? Of course not. Another possibility is that the lower-frequency signal has "Laurel" and "Yanny" mixed together on top of one another. Maybe the pitch (=the 'lowest frequency tuning fork') for "Yanny" is lower than that for "Laurel", which would jibe with the percept that was reported to me by Vox's Jen Kirby. If that were true, and if the higher-frequency formant sequences (the ones that were added artificially) were also "Yanny", then you'd be setting people up to hear "Yanny" if they could hear high frequencies well. That is, the higher-frequency formant ensembles (the girl from The Ring/bored undergrad stuff that is outlined in red boxes on my figure) might be most clearly audible to folks with good headphones and good hearing, and those who can hear them might then be more likely to hear the lower-frequency "Yanny" 'pop' away from the "Laurel" than those of us with Dad hearing (sorry, I love quacking about the fact that I made it to middle age) and cheap headphones. I'm not sure–there's no easy way to analyze overlapping speech signals. Separating concurrent voices is actually one of the worst problems in speech engineering, and is something that human ears can actually do better than machines most of the time. So, we're all left just speculating at this time. But, I'd say that my story is as plausible as anyone's. I still say that it's possible that it's just the formant pattern "Laurel" repeated at multiple frequencies, but if you say that you hear a lower-frequency "Yanny", I don't want to argue.

Now, just to give some additional evidence for this argument, I have embedded sound files that have various frequency ranges in them, from low to high. To be clear, these don't correspond to the frequency ranges above. The lowest is 0-4500 Hz, the next-lowest is 2000-6500 Hz, and the highest is 4500 to 9500 Hz. To quote University of Arizona professor Natasha Warner, "I tried filtering a whole bunch of ways (low-pass at various frequencies, high-pass at various frequencies), all on my computer with the sound turned up, and I consistently get Yanny. Of course high-pass filter at 5000 Hz makes the whole thing sound like crickets, roughly. But crickets saying 'Yanny'."

0-4500 Hz: [audio clip]

2000-6500 Hz: [audio clip]

4500-9500 Hz: [audio clip]
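The post does not say what software or filter type produced these band-limited clips. As one possible illustration, the sketch below splits a signal into frequency bands by zeroing DFT bins outside the band (a crude "brick-wall" filter). The test signal is a synthetic stand-in for the recording, and the function names, sampling rate, and tone frequencies are assumptions.

```python
import cmath
import math


def band_pass(samples, rate, low_hz, high_hz):
    """Keep only frequency components between low_hz and high_hz (naive DFT, O(n^2))."""
    n = len(samples)
    spectrum = [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        for k in range(n)
    ]
    for k in range(n):
        # Bins above n/2 are the mirror-image negative frequencies.
        freq = min(k, n - k) * rate / n
        if not (low_hz <= freq <= high_hz):
            spectrum[k] = 0
    return [
        (sum(c * cmath.exp(2j * math.pi * k * t / n) for k, c in enumerate(spectrum)) / n).real
        for t in range(n)
    ]


def rms(samples):
    """Root-mean-square level, a rough stand-in for loudness."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


# Synthetic stand-in for the clip (an assumption): a low tone plus a quieter high tone.
rate = 20000
n = 200
signal = [
    math.sin(2 * math.pi * 1000 * t / rate) + 0.5 * math.sin(2 * math.pi * 6000 * t / rate)
    for t in range(n)
]

low_band = band_pass(signal, rate, 0, 4500)
high_band = band_pass(signal, rate, 4500, 9500)
print(round(rms(low_band), 3), round(rms(high_band), 3))
```

Here the low band keeps only the 1000 Hz tone and the high band keeps only the 6000 Hz tone, so a listener given just one band hears just one of them. Real audio tools use smoother filters, since an abrupt cutoff like this one can introduce ringing.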

OK, hopefully that was a little clearer. There are a few long-term takeaways from this kerfuffle:

(1) Damn, sure is nice not to be talking about '45' for once. We need stuff like this to go down more often!

(2) Mad kudos to the woman who posted this on her Twitter. She was described as a "Social Media Influencer," and I say that she is at the top of her game. By sharing this, she brought enormous attention to herself. That's her job, and she's doing it cunningly and shrewdly.

(3) Speech is hard to understand. We might naively think it's easy to understand because we use it all the time (at least, folks who communicate in the oral/aural modalities do). Speech acoustics (and acoustics in general) are, in my thinking, not intuitive. Moreover, this body of knowledge doesn't build on other bodies of knowledge that most people have. When you learn about language in school, it's mostly about written language, not spoken. That's not me being snotty, but rather me saying that it must be hard to write about this kind of information for a broad audience, because it's three layers removed from what most people think about daily. Even disentangling the types of frequencies ('what is the lowest-frequency tuning fork?' vs. 'what are the frequencies of the loudest tuning forks?') takes a little bit of a conceptual leap. One of the reasons why speech is such a neat phenomenon is because there is so much work to be done still at the ground level. I hope that this phenomenon will inspire people to think more about speech science, experimental phonetics, and the nascent field called 'laboratory phonology'. Good places to start looking for work on these topics are www.acousticalsociety.org and www.labphon.org.

(4) Building on (3), way to go to the speech science/experimental phonetics/labphon communities for working together as a team to talk about this phenomenon. I feel hashtag blessed to be part of a community that has precious few members who are driven by credentialist ambition, and who are instead driven to work as a community to solve problems as they arise.

(5) And lastly, as someone whose primary job is to train people to be speech-language pathologists, consider this. Did you find listening to this audio sample maddeningly hard? Welcome to the daily world of people for whom speech perception is not always automatic. This includes people with even mild hearing loss, people with subtle auditory perception and processing problems that are associated with various learning disabilities (developmental language disorder, speech sound disorder, dyslexia, autism spectrum conditions), and even new second-language learners. The frustration that you might have felt listening to this signal is what many of these folks face on a daily basis when listening to something as seemingly simple as trying to identify speech in the presence of background noise. Turn your frustration into empathy and advocacy for those folks. Learn more at www.asha.org, and support your local speech-language pathologists and audiologists!

[end guest post by Benjamin Munson]

A postscript (from Ben Zimmer): It turns out the audio in question comes from the pronunciation given for the word laurel on Vocabulary.com (link). This was revealed on Reddit and has been subsequently analyzed on Twitter by Carolyn McGettigan and Suzy J. Styles. As it happens, I used to work for Vocabulary.com and its sister site, the Visual Thesaurus. In fact, back in 2008 when the audio pronunciations were first rolled out for the Visual Thesaurus, I wrote about the project here on Language Log (as well as on the VT site), explaining how we had worked with performers trained in opera, who were adept at reading the International Phonetic Alphabet. So the laurel audio ultimately comes from one of those IPA-savvy opera singers!

Update (myl): The NYT has an app that lets you use a slider to vary the low-to-high preemphasis of the recording, which should help you to understand what's going on.

Even later update (from Ben Zimmer): This article in Wired gives the whole backstory, including more on the opera singer who pronounced the word laurel for Vocabulary.com, and the high school students who circulated the audio. I also spoke briefly to Wired editor-in-chief Nicholas Thompson about the whole Yanny/Laurel debate on CBS Evening News.

bratschegirl said,

May 16, 2018 @ 11:04 am

I heard "Yanny" the first time and "Laurel" every subsequent time. Weird!

RP said,

May 16, 2018 @ 11:31 am

I heard "Yerry" at first but since then I've been hearing "Laurel".

random lurker said,

May 16, 2018 @ 11:51 am

The Japanese TV show Tantei! Knight Scoop (探偵!ナイトスクープ) handled a similar case in their 2009-10-30 broadcast. There's a cell phone that plays a sample that says "撮~ったのかよ" (to~ttanokayo) when you take a picture with it. A lot of people hear this as エ~アイアイ (e~aiai). They hypothesized that this was due to the low quality of the cell phone speaker and different sense of hearing in different people, and also discovered that it's easier to hear it the "wrong way" if it's played back slowed down.

The YouTube video at https://www.youtube.com/watch?v=EBSHlDCdhPw is a recreation of what they did, especially the slowing down at 1:55 onwards. They use the exact same sample that was investigated in Tantei! Knight Scoop.

Philip Taylor said,

May 16, 2018 @ 11:58 am

For me, 100% "yanny" — I cannot hear "laurel" at all. Is it possible that in <Am.E>, the vowels in "yanny" and "laurel" are closer than they are in <Br.E> ?

Ryan said,

May 16, 2018 @ 12:02 pm

Does the Vocabulary.com version have the same weird acoustic shenanigans, or was that just Twitter's compression algorithm / a bored undergrad?

David L said,

May 16, 2018 @ 12:04 pm

I am strictly in camp Yanny, but when I listened to the audio clip from vocabulary.com (in Ben's postscript), it's very clearly Laurel, in an unusually deep voice. When I go back to the other clip, though, it's still Yanny.

When I first heard the Cloe Feldman clip, I thought it was product of some sort of voice synthesis, but I can hear now that it's a distorted or somehow downgraded version of the vocabulary.com original. Can anyone say what was done to turn the original clip into the confusing one?

Ellen K. said,

May 16, 2018 @ 12:05 pm

I heard "Yanny" first. Then, after reading that "Laurel" was lower in pitch than "Yanny", I was able to mentally switch to hearing "Laurel", but then only heard "Laurel". Then, hearing it again this morning, I heard "Yanny" again, and was able to mentally shift to hearing "Laurel", and then back to hearing "Yanny".

I didn't follow much of that explanation of what's going on, but I'm still glad to see it.

random lurker said,

May 16, 2018 @ 12:14 pm

That's odd, my other comment isn't showing up. I tried to say that the date should have been 2006-08-04, the investigation is titled 携帯電話からエーアイアイ!?

David L said,

May 16, 2018 @ 12:15 pm

Ah, reviewing Prof. Dr. Styles' Twitter explanation, I see a note at the end suggesting that the original was played back and recorded from a cellphone, which would explain how the high frequency parts of the audio were overemphasized.

Julie G said,

May 16, 2018 @ 12:24 pm

F2 is there, hidden next to F1. They're only separated by 200 Hz in the "aw" vowel. You can see them separate a little in the "er" vowel; your F1 line goes right between them.

All of the phonemes in "laurel" have an F2 below 1 kHz except for the r. In this recording their bandwidths are large enough that they overlap most of the time, making it ambiguous whether the high dipping formant is F2 or F3.

Sergey said,

May 16, 2018 @ 12:48 pm

I hear "Yarnee" every time. I've tried to tune to a lower frequency but I couldn't hear anything different.

I've had another interesting experience with recognizing multiple speakers: I've been testing a teleconferencing solution that passes through the audio packets from the 3 speakers who are loudest at each moment. I've been playing 4 different recordings into it at the same time: 2 men's voices (higher and lower pitch), 2 women's voices (higher and lower pitch). I could mentally tune into any of these 4 voices and hear what it says, with the rest becoming background noise. This despite only the 3 loudest speakers getting through at any given moment, the weakest one getting completely "drowned out" (but possibly coming back the next moment, pushing another weaker voice out).

Sergey said,

May 16, 2018 @ 12:54 pm

Hm, does it look like there is a dependency on the gender of the listener? From the sample in the comments, it looks like men are more likely to hear "Yanny" while women "Laurel". Perhaps men are better at recognizing the words in higher tones while women in the lower tones. Related to this, I've noticed that men prefer to set their GPS to a woman's voice, while women set their GPS to a man's voice, both saying that this way it's easier to understand. Personally I find the higher-pitched woman voices much easier to understand.

David Udin said,

May 16, 2018 @ 1:15 pm

Laurel, but I suffer from age-related hearing loss and use a hearing aid. If the ambiguity depends on some higher frequency content, then I'm (literally) deaf to it.

Can the higher frequency components be due to aliasing from inadequately-filtered sampling? That is, if the original was not properly low-pass filtered before being sampled (or not sampled at a high enough sampling rate) then there would be artifacts in the playback. That might explain why the high frequency content has the same shape as the normal-range content. There also may have been various kinds of resampling in going from the original to twitter that would introduce artifacts that lead to the ambiguity.

GeorgeW said,

May 16, 2018 @ 1:44 pm

I too have age-related hearing loss. With my hearing aids in, I hear a clear and consistent 'Yanny.' With them out, I hear a clear and consistent 'Laurel.' Wow.

John Swindle said,

May 16, 2018 @ 2:32 pm

Another with high-frequency hearing loss. I hear "Yoral" or maybe "Nyoral." I think I've heard cats say it.

Andy Stow said,

May 16, 2018 @ 3:24 pm

I can switch between "laurel" and "yarry." I've had multiple ear surgeries, though, including an incudo-stapedial prosthesis in my left, so my hearing is not typical.

L said,

May 16, 2018 @ 3:28 pm

When I first played it I could only hear laurel, so clearly that I thought it was a prank, until the other people in my home only heard "dierry," "millie," and "jammy."

Later, my wife (who heard "jammy") played the same video, and I could only hear "yanny," and could not for the life of me hear "laurel." And she could only hear "laurel."

There was a fan going in the room the second time, which might be responsible for the difference.

RP said,

May 16, 2018 @ 3:33 pm

While nearly every article on this subject seems to presume that it's a straightforward binary choice, the number of people who hear neither of them, but rather something else, seems far from insignificant.

Mark Meckes said,

May 16, 2018 @ 3:40 pm

I hear something like "yarry", though with the "r" sound pronounced not as in my native accent (highly rhotic midwestern US), but something closer to French. I can see how one could hear it as "yanny", but try as I might, I can't hear any "l" sounds at the beginning or end.

han_meng said,

May 16, 2018 @ 3:50 pm

Man Calls Girlfriend ‘Yanny’ During Sex, Swears He Said ‘Laurel’

http://reductress.com/post/man-calls-girlfriend-yanny-during-sex-swears-he-said-laurel/

Fse said,

May 16, 2018 @ 3:59 pm

To me it sounds like "Laurel" when there is no background noise, but "Yenny" when there is a noisy fan in the room.

David L said,

May 16, 2018 @ 4:06 pm

Here's an interesting experiment to try at home. The NYT posted an audio tool that allows you to adjust the audio clip until you hear it change from Yanny to Laurel or vice versa:

https://www.nytimes.com/interactive/2018/05/16/upshot/audio-clip-yanny-laurel-debate.html

It starts in the middle, and I clearly heard Yanny. To get it to sound like Laurel, I had to move the slider to the last marker before the end. But when I started to move the slider back the other way, I still kept hearing Laurel until I got close to the center, and then it turned back to Yanny. Then I repeated the exercise, and again had to get to the last marker before the end to start hearing Laurel.

So there's a curious hysteresis in the system, I suppose because once a certain interpretation of the sound is in my brain, it has to change quite a bit before it is dislodged and goes back to the other sound.

Lane said,

May 16, 2018 @ 4:11 pm

Heard "Yanny". Then listened to the Vocabulary.com file – distinctively lower, and very clearly "Laurel". Could it be because I was primed to hear "Laurel" by the words "Laurel" in huge type on the Vocab.com page? Or the better quality of that clip?

But then I came back here, and I was now getting Laurel minutes after I'd heard Yanny. So priming or expectations or something…

Then I came home and listened on my laptop and now it's Yanny again.

Benjamin Tucker said,

May 16, 2018 @ 4:18 pm

On behalf of Terry Nearey:

We think we have the most likely explanation.

The original from Vocabulary.com is more likely to be heard correctly as “laurel” spoken by a fairly deep-voiced (with deep resonances and pitch) adult male. The initial L was in fact a “dark-l” similar to the kind used by the NBC anchor Tom Brokaw, though not quite as extreme.

All of our listeners heard “laurel” when played over laptop speaker. One of us heard Yanni when heard over the better quality lab audio system.

A quick look at spectrograms of the original vocabulary.com version versus the ambiguous one shows that the second formant (F2) of the original was much stronger than in the ambiguous copy. [Note: There is also some evidence that the signal was somehow “aliased” in the recording, as noted in Ben's post above]

This suggests an explanation related to a phenomenon known as “upward spread of masking” whereby lower frequency sounds can “mask” (blot out) weak higher frequency sounds. The masking tends to get worse with increasing age; see the article below. The low F2 region is much weaker in the ambiguous signal than in the original (and it is in a noisier background). Listeners prone to more upward spread of masking may not hear the F2 at all, which may be crucial in hearing the initial /l/ (‘ell’) sound. Similar things can be said about the final /l/.

Upward spread of masking:

Check this reference out.

https://www.ncbi.nlm.nih.gov/pubmed/2324393

https://asa-scitation-org.login.ezproxy.library.ualberta.ca/doi/pdf/10.1121/1.398802

Klein, A. J., Mills, J. H., & Adkins, W. Y. (1990). Upward spread of masking, hearing loss, and speech recognition in young and elderly listeners. The Journal of the Acoustical Society of America, 87(3), 1266-1271.

Victor Mair said,

May 16, 2018 @ 4:51 pm

For the video clip and the first audio clip (they seem to be identical), I hear nothing but "Laurel", no matter how many times I play them, and no matter how hard I try to hear something else. For the last two audio clips, I hear *absolutely nothing*.

For the record, I have suffered from severe tinnitus since January 15, 1968 (caused by a very loud explosion next to my head). The ringing in my ears is very high-pitched, like a tea kettle whistling very loudly. From professional examination and personal observation / introspection, the ringing in my ears covers up the higher frequencies of speech, which means most of the consonants, at least their initial features.

I have no problems whatsoever hearing vowels, so I often resort to guessing at what people are saying based on the vowel contours and qualities of their speech (plus lip-reading, gestures, and so forth).

It is particularly agonizing for me to be in a noisy restaurant where there's a lot of background noise and many people talking. In such surroundings, it is almost impossible for me to carry on a conversation with someone, even if they're sitting fairly close to me.

Graham Blake said,

May 16, 2018 @ 5:37 pm

I strongly suspect that there's something akin to the McGurk effect going on here as well, whatever shenanigans may be involved in the frequency domain. I am one of those who can shift whether I perceive Yanny or Laurel at will – very much like one can learn to shift one's perception of the direction of rotation of the dancer in the silhouette illusion. There definitely seems to be a perceptual illusion involved in that sense. When I hear Yanny, it is clearly in a higher pitched voice, and when I hear Laurel it is in a markedly lower pitched voice. They sound like completely different audio tracks. For those with a relatively normal range of hearing and relatively normal playback devices, I think the brain may be "McGurking" the voice and hearing one over the other based on whether the brain tracks it as a voice in a higher or lower register. I would love to see someone do a demonstration of a woman or a child mouthing Yanny vs. an adult male mouthing Laurel with this audio to see if that is enough to trigger a perception based upon the expectations generated by those visual cues.

Laura Morland said,

May 16, 2018 @ 6:30 pm

@John Swindle, Thank you for the first real laugh I've had all day, maybe all week!

And with no disrespect to the wonderful "usual contributors" to LanguageLog, this was one of *the* most fascinating blog posts I've read in a while. Beautifully written and witty as well as informative.

And I love all the contributors' posts: Victor Mair, I even read almost every single post of yours, even though I have no experience at all in Sinitic languages aside from once spending six weeks studying Japanese, in advance of my only trip to Japan. (And I'm so sorry to learn of your tinnitus. Since moving to France, I, too, have become an avid lip-reader; thank goodness for eyesight!)

Robot Therapist said,

May 16, 2018 @ 6:33 pm

With the NYT slider app, I get the same hysteresis effect as David L (starting with yanny in the centre initially). At the critical point, I can choose which to hear.

Robot Therapist said,

May 16, 2018 @ 6:39 pm

…and eventually, with some practice, hear both simultaneously

TIC said,

May 16, 2018 @ 6:58 pm

This makes me think back to an enduring memory from my kidhood, sometime in the early 70's… There was an oft-played TV commercial for an album of classics by the well-known singer Mario Lanza… It included an excerpt of him belting out O Sole Mio… I first heard it, repeatedly, on my little TV — with a no-doubt cheap and tinny speaker… And the "Oooo" he sang sounded so "chipmunky" that I thought it was the funniest thing in the world that this supposedly "great" singer sang so oddly… One evening, as the commercial began while we were watching the (big/good) TV in the living room, I primed my family for the "hilarious" excerpt to come… And I was quite confused, and more than a little mortified, moments later when we all heard nothing but Lanza's booming tenor…

PS

NTIRMB I plainly heard Laurel the first time… And then heard something like Yammy every subsequent time — despite really trying to hear Laurel again… Only once did I hear Laurel again — when I heard the sound file unexpectedly (as I walked into the room while my wife was watching a segment on TV)…

Kyle said,

May 16, 2018 @ 8:49 pm

I gave it a listen on my desktop, which has a full-sized audio system and a small boost on the bass response, and then a second listen on my laptop, which has much smaller speakers. Using the NYT app, on the former, my equilibrium point was about 97% (nearly their "pure Yanny" sound), while on the latter I flipped over at about 85%. So different sound reproduction definitely plays a role.

RC said,

May 16, 2018 @ 9:12 pm

I heard both at the same time the first time I listened to it and every time after that. Anyone else?

MD said,

May 16, 2018 @ 9:31 pm

@Philip Taylor: Yes that is possible but that doesn't excuse the fact that spectrogram analysis of the sound clearly indicates [l] and [r] consonants in the recording… so the fact that people are hearing [y] and [n] shows either physical difficulty perceiving correct sounds, listener bias, or inadequate accuracy in identifying speech sounds. :)

a fatal error in this whole debate is the insistence on people "hearing" the correct sounds. The human ear is remarkable and our ability to distinguish sounds is pretty good but with respect to the entire animal kingdom, we suck at hearing sounds. With respect to computer analysis of the sound, we reallllly suck in comparison. A spectrogram and proper training in how to read them is really all we need to identify whether or not this sound is Yanny or Laurel. And it is confirmed, it is Laurel.

Viseguy said,

May 16, 2018 @ 10:12 pm

I hear "yammy", with an M.

@RC: If I move the NYT slider to 5.5, I can hear both.

Jeff W said,

May 16, 2018 @ 10:36 pm

With that New York Times tool and my lousy PC speakers, I heard Yanny clearly at the center 0 point and had to move the pointer to about -3 to hear Laurel.

Most of the time I could hear Yanny moving the pointer back to 0 but at other times, starting at a point where I could hear Laurel left of center, I could hear Laurel all the way to +2.

And, just now, checking the various intervals, I heard Yanny all the way at -5, the far left, and then, moving the pointer, it switched to Laurel.

I don’t mind hearing different things at different intervals but hearing different things at the same intervals was a bit unnerving.

Ashleigh Perez said,

May 16, 2018 @ 11:04 pm

I have been absolutely mind blown by this whole experience. I am now looking into ASHA.ORG to get more information about supporting my local speech-language pathologists and audiologists! Thank you for the suggestion!

Isaac said,

May 17, 2018 @ 12:20 am

I consistently heard Laurel. Then I listened to the nytimes one with the slider towards yanny, and I could hear yanny. Then I came back to the original, and now I can hear both consistently.

cliff arroyo said,

May 17, 2018 @ 12:50 am

OT: An interesting take on the interaction of language, ambition and alienation in the Philippines….

https://www.buzzfeed.com/inabarrameda/inglisera-philippines-american-colonialism?utm_term=.rl4Z5bn1L#.sbD5YJ0oV

Bruce said,

May 17, 2018 @ 1:21 am

@TIC

I'm just happy I could decipher what NTIRMB meant :)

rosie said,

May 17, 2018 @ 2:35 am

Definitely "Laurel" ['lɒɹəɫ]. There's a definite [l] at the start, and the consonant between the vowels is definitely [ɹ], not [n]. Using the NYT app, I still hear ['lɒɹəɫ] unless the slider is on either of the rightmost 2 notches, in which case "yearly" ['jɪəli]. Can't hear an [n] at all.

Robot Therapist said,

May 17, 2018 @ 2:45 am

The coolest thing is finding that my response is completely different today!

AntC said,

May 17, 2018 @ 3:10 am

Can't hear "Yanny" or anything like it.

Can't hear "Laurel" or anything like it.

If you asked me to transcribe it: "Yerry" or maybe "Yarry" even "Yeah-wy".

Can't understand what all the fuss is about.

Keith said,

May 17, 2018 @ 3:46 am

I saw the "Yanny or Laurel" question textually several times before listening to the audio clip.

If I pronounce these two words out loud, there is an enormous difference between them, in my British English.

The audio sounds to me to very clearly be American English, and I can accept that the pronunciations may be closer for Americans, generally.

This leads me to think that it would be better to present listeners with the audio, and then ask them to type what they hear, rather than prime the listeners with the written words and ask them later what they heard.

Keith said,

May 17, 2018 @ 4:03 am

Ah, I just followed the link to the NYT tool, and found it very interesting!

I have to move the slider almost all the way to the right before I hear anything other than Laurel. But even then, I don't hear Yanny, I hear something more akin to the old-fashioned name for the Russian letter ы: еры.

Ryan said,

May 17, 2018 @ 4:23 am

I listened to the clip this morning and thought it was clearly saying "yanny". But after a day of listening to my workplace's cheap fluorescent bulbs, I think my auditory nerves have become fatigued to high frequency sounds to the point where it now sounds like "laurel". Still though, I've listened to the clip enough times throughout the day (time better spent elsewhere, I know) so I can hear it both ways at the same time. To me it sounds like two voices speaking simultaneously: a low pitched voice saying "laurel" along with a high pitched voice saying "yanny". Question is, does anyone hear a low pitched voice saying "yanny" or a high pitched voice saying "laurel"?

Yerushalmi said,

May 17, 2018 @ 5:13 am

I heard "Yanny" and only "Yanny" every time I listened to it – until I read the text of the first couple of paragraphs after the embedded video. I then scrolled back up and tried to focus on "Laurel" – and there it was, being said grumpily (and at low volume) by a deep-voiced man at the same time as the nasally-voiced man said "Yanny".

In other words, once I became consciously aware of where Laurel was located on the auditory spectrum, I heard both simultaneously every time.

Lane said,

May 17, 2018 @ 5:20 am

OK, the force of priming is strong with this one. I played with the NYT slider a bit. My default is "Yanny". I could move it over just a bit and get "Laurel". At a certain stage it sounded like both at once, a deep "Laurel" and a nasal "Yanny".

But when I put it back in the middle, I realised that I could look at "Yanny" on the screen and hear "Yanny" clear as day. Then I looked at "Laurel" and it was Laurel, equally clear. Back and forth and back and forth I went, without fail.

JPL said,

May 17, 2018 @ 5:39 am

Ryan and other commenters above have answered the question I had: Can anyone hear both "laurel" and "yanni" simultaneously? (And can someone who initially can't hear both simultaneously be coached to be able to do so?) On the NYT slider at the midpoint I hear only "laurel"; other individuals may hear "yanni" at that point. At the next hash mark to the right I can hear both "laurel" and "yanni" simultaneously, as with two voices over each other, "laurel" lower in pitch, "yanni" higher, not alternating between one and the other as with the visual ambiguity illusions, both at once, and I can hear this all the way to the third hash mark before "yanni" takes over. (The "voice quality" seems to differ as well, with the "yanni" appearing to have (what would be if it were human) retracted tongue root and "laurel" advanced tongue root position.) As with the midpoint and the switch-over point, I would expect there would be individual differences wrt where those who can hear both simultaneously can do that. (Is the mechanism that determines these individual differences purely physiological in character, or is there a condition of a cognitive nature?) Another question: can anyone hear a lower pitched "yanni", simultaneous or not? I would guess that there is a human voice ("laurel") and a robot voice ("yanni") superimposed on the same recording, so while the recording can be called ambiguous, neither of the two word performances in it is strictly speaking ambiguous. What made someone think of doing that?

Ray said,

May 17, 2018 @ 6:54 am

on my teevee's channel 3 it sounds like 'yanny' but on channel 12 it sounds like 'laurel' but then over on channel 6 it sounds like 'yanny' and now on channel 10 it sounds like 'laurel.' I don't think everybody's playing the same identical clip, so it's hard to get excited about all the fuss.

Chelsea Sanker said,

May 17, 2018 @ 7:03 am

I think Julie G has it right. The F1 and F2 are very close in the original 'laurel' production, which is made even harder to hear given the low intensity of the low frequencies. Some listeners nevertheless perceive the distinct F1 and F2, which makes the recording something with a consistent low F1 and F2 (two velarized l's and a very round back vowel) with an F3 that dips in the middle for r; this is 'laurel'. Other listeners perceive a single formant in that range, which results in F3 being perceived as F2, which makes it sound like something with a moderately low F1 and a high F2 (i/j) that dips in the middle; this is what we are hearing as 'yanny' (or something else with a different alveolar sonorant in the middle). This is also probably why people describe 'yanny' as sounding higher; it sounds like speech produced by someone with a shorter vocal tract, because the formants are more spread out.

Rube said,

May 17, 2018 @ 7:14 am

For whatever tiny bit it is worth, myself and my wife (59 and 58), hear "Laurel", and our 17 year old son hears "Yanny".

Robot Therapist said,

May 17, 2018 @ 7:17 am

Again, whatever tiny bit it's worth: same me, same clip, same speakers, the next day it sounds completely different. The high and low frequency response may be part of it, but not all.

Ellen K. said,

May 17, 2018 @ 7:25 am

@MD, if you don't understand how the sounds we interpret as "yanny" are actually there in the clip, perhaps reread this post. Or follow some of the links in it.

Here's the short version, as I understand it. When we hear "yanny" (or "yenny", or "yerry", or any such) in the clip, we are ignoring the lowest part of the sound, interpreting it as noise, which makes it sound quite different. And the lack of any stops or fricatives in the clip contributes to this.

Ralph Hickok said,

May 17, 2018 @ 7:31 am

I finally got around to trying this and I hear "lammy."

Coby Lubliner said,

May 17, 2018 @ 8:03 am

Ben Zimmer: You mention "performers trained in opera, who were adept at reading the International Phonetic Alphabet." Are you implying (by means of the comma) that opera training routinely teaches the IPA?

Philip Taylor said,

May 17, 2018 @ 8:42 am

Oh, that is weird. When I first reported, I wrote that for me (71, British, using small computer loudspeakers in the hotel) I heard only "yanny". I just tried the same at home using the New York Times program and heard the same, until I moved the slider three notches to the left; then, for the first time, I heard "laurel" and continued to hear "laurel" as I moved the slider right back to the central, neutral, location. The phenomenon is clearly far weirder than I originally thought.

Ben Zimmer said,

May 17, 2018 @ 9:07 am

@Coby: Yes, opera singers learn IPA, and libretti are sold with IPA notation. For more, see the 2008 post I linked to, "Operatic IPA and the Visual Thesaurus," as well as Mark's earlier post.

B.Ma said,

May 17, 2018 @ 9:15 am

I hear both 'laurel' and something else simultaneously.

However, I read that the something else is "supposed" to be 'yanny' before I listened to the clip, so I can only interpret it as 'yanny' even though I might have heard something else if I listened before I read. Now that some commenters have said they heard 'yerri' or even the Russian 'еры', I find that I might be able to hear those too.

What I have yet to understand is how someone who was trying to say 'laurel' was able to simultaneously produce higher frequency waves that sound like a different word.

I wonder whether this sort of thing is the cause of misunderstandings when speaking over a poor phone line, or in a crowded room.

When I visit new countries, I enjoy travelling on public transport in order to listen to the pronunciation of station names, as part of my language-learning quest. When a train is very crowded and noisy, I can't see the map to check how the next station name is written at the time it is announced over the speakers. When I arrive at the next station, I often find the actual name bears little resemblance to what I thought I heard!

Lars said,

May 17, 2018 @ 9:52 am

The NYT app is interesting. I have some sort of undiagnosed auditive perceptual disorder, so take this with a grain of salt… but the first time I tried the app, I had to move the slider almost all the way to the left, and even then there was some residual yanniness the first few times. It also seems to me that letting the sound repeat enough times can "switch" my brain from one percept to the other, with a bit of residual yanniness towards the end in particular. This depends on the slider setting, and resets itself after a suitable pause.

Psychoacoustics is weird.

Victor Mair said,

May 17, 2018 @ 10:02 am

This is now spreading in China on Weibo (China's equivalent of Twitter) and other social media platforms.

https://m.weibo.cn/status/4240732514497845?wm=3333_2001&from=1084393010&sourcetype=weixin&featurecode=newtitle

The video clip is interesting — worth a look / listen

From Yixue Yang:

A friend of mine sent me this link this morning. To the pronunciation of one same word, we got totally different results —— I heard "Laurel", whereas my friend heard "Yanny". What about you??

[(bgz) From CNET: "Yanny vs. Laurel has reached China and now it's more confusing than ever"]

VHM: I would dispute CNET's claim that few people in China know English. For the last thirty years or so — a whole generation — all school children in China have been taught English from elementary school (and some even in kindergarten) up through high school and college. Certainly among the sorts of people the journalists are likely to have referenced, the level of English ability is not bad. Of the thousands of graduate students from China that I've been encountering in recent years, their level of English ability is impressively high.

Vulcan With a Mullet said,

May 17, 2018 @ 10:03 am

48 years old American…

I heard "Yanny" exclusively until the slider was about 2/3 to the left (on the NYT site), then I could hear the deeper "Laurel" emerge. For quite a range of frequencies I could easily hear both at once. And like many, I then could hear "Laurel" better as I slid the slider back towards the center, yielding to "Yanny" just at about 45% :)

I can definitely hear both versions layered, though, once the high frequency is rolled off a bit. I found it almost impossible to ONLY hear "Laurel" until the slider was almost at the left end.

Lane said,

May 17, 2018 @ 10:21 am

OK, I managed to pull another trick on myself. I tried to imagine a small person with a high voice (Herve Villechaize came to mind), and I heard Yanny. Then I imagined someone with a deep voice (and the character actor J.K. Simmons, whom I love, came to mind) and I got Laurel. And in changing the visualisation I could get the Laurel/Yanny switch.

Then it got stuck on Laurel for a while, so I found a video of Herve Villechaize giving an interview, and boom, it was Yanny again.

Trogluddite said,

May 17, 2018 @ 10:21 am

I would just like to thank Benjamin for his appeal for understanding and empathy for those of us with auditory, learning and developmental conditions. The Laurel/Yanny clip has indeed generated quite some interest on the autism forums which I frequent, for exactly the reasons suggested.

Like many autistic people, when I'm sat in the corner in silence looking bewildered, it is not because of the all-too-common stereotype that being autistic makes me uncaring and antisocial – it's usually because my perceptual and cognitive impairments mean that I just can't follow what the hell is going on around me, and an excess of sensory information can easily overwhelm my brain to the point that parts of it simply shut down entirely.

The many hours that I've spent talking with other autistic folks have made it very clear to me that many of us are desperate for friendship and companionship. Autistic people rarely lack compassion for others, as many would have us believe. But just like any human being, identifying another person's state of mind, and thus learning the "theory of mind" that we are so often told we lack, is dependent upon the information provided by our senses and how effectively our brains can process this information. For example, if I don't understand that someone has said something sarcastic, it isn't because I don't understand the concept of sarcasm or have no sense of humour, it is because my speech processing does not provide my conscious mind with a correct analysis of the other person's prosody – and this is much more likely when my mind is swamped by other sensory stimuli or I am unfamiliar with that person.

As Benjamin so rightly said, we are often left isolated with our frustration at not being able to understand and to be understood, and the expectation that the onus is entirely on us to attempt to compensate for our innate impairments. Even the simplest of things, such as turning down background music or slowing the rate of conversation can be a huge help and are truly appreciated, even if only as a gesture of solidarity.

Yerushalmi said,

May 17, 2018 @ 10:22 am

I should clarify that the change from initial "Yanny" to hearing both simultaneously happened with the original clip. I haven't used the slider at all.

Victor Mair said,

May 17, 2018 @ 10:39 am

I just used the slider for the first time. Played it about a hundred times at all the different notches.

There are ten notches. It's pure "Laurel" all the way up to notch 8. At notch 9 and 10, I'm still hearing "Laurel", but with a slight, tinny, scratchy "-y" background or undertone ending that is simultaneous with the dominant and much clearer "-el" ending. Otherwise, the "Laur-" remains consistent and clear throughout the entire range. The wispy competition from the "-y" ending only comes at notch 9 and 10.

Remember, I'm the guy with the severe tinnitus.

http://languagelog.ldc.upenn.edu/nll/?p=38274#comment-1550819

BZ said,

May 17, 2018 @ 10:54 am

I'm a 38 year old male and I hear "Laurel" . On the NYT tool it is clearly Laurel until the second dot from the right where I hear both equally. After that Yanny becomes the more pronounced one. If I concentrate really hard I can hear an almost whispered "Yanny" superimposed on "Laurel", which is still the main thing I hear, in the middle.

Scott P. said,

May 17, 2018 @ 11:48 am

Like many here, I hear "Yanny" on the NY Times slider, and if I move it left, it eventually becomes "Laurel" and then moving back to the center, I hear "Laurel".

After listening to the vocabulary.com pronunciation, which is clearly "Laurel" to me, I hear "Laurel" at the neutral position on the NY Times slider.

"If I pronounce these two words out loud, there is an enormous difference between them, in my British English."

As there is in American English, which is why this phenomenon is so fascinating.

Robert said,

May 17, 2018 @ 12:15 pm

First I heard Yenni a few times. Then I put the tips of my fingers into my ears and heard Laurel. Then, without fingers in my ears, again Yenni.

RP said,

May 17, 2018 @ 12:32 pm

Yesterday on my phone, I started off with "yerry" but on all subsequent playbacks I had a clear "Laurel".

Today on my laptop, using the NYT slider, I get "yeary" in the central position, becoming more of a "yury"/"gyury"/"geary" as I go rightwards, and more of a "leary" as I go leftwards. At first it remained "yeary"/"leary" even in the leftmost position, but after listening for a few minutes it changed to "Laurel". Then moving it rightwards again it remained "Laurel" even in the central position and some way towards the right, when it changed back to "yury". Now it is swapping back and forth between "yury" and "Laurel". I've never heard an /n/ anywhere though!

RP said,

May 17, 2018 @ 12:40 pm

@Rosie,

("Definitely "Laurel" ['lɒɹəɫ].")

The times when I hear "Laurel" in the clip, I almost always hear the vowel as more of a /ɔ/ than /ɒ/. (I personally would pronounce it /ɒ/ but that's not what I'm hearing.) Could just be me though!

Victor Mair said,

May 17, 2018 @ 12:43 pm

From an 87-year-old female German friend who said:

…put on my headphones.

I can't figure how anyone hears Yanny or Laurel! To me the voice

kept repeating "Ely" (the man's name) and with the headphone

it came to "Yarry" (as in Gary, Indiana).

Now I hope I haven't screwed up your day☺.

Ellen K. said,

May 17, 2018 @ 1:03 pm

I suspect the reason many people interpret the middle consonant in the "yanny" hearing as an N is that the vowel sound we hear before it (a version of /æ/) is one that can't come before an R. (I'm sure I've heard people speaking who have /æ/ before R in some words, but not, I think, said the same way as the vowel sound we hear here.)

Of course, once someone wrote it down that way, the rest of us have been primed by the spelling, which would also be a factor.

Marc-Andre Pelletier said,

May 17, 2018 @ 1:25 pm

I played with the New York Times's frequency-shifting version and found it very interesting:

There is a lot of amusing perceptual trickery. If I start at high frequencies and slide down gradually, I'll keep hearing [ˈjɑːni] or perhaps [ˈjæːni]. If I take a short break and start from the low frequencies or jump straight from high to low, I'll hear [ˈɫɐɹəw] which my brain will parse as /ˈlɔːɹ.əl/. Until I glide the frequency back up to about midpoint where it'll abruptly flip back to [ˈjɑːni].

A point of note is that I perceive that switch as though it were literally two speakers; one with a relatively low pitched voice, and one with a tinny higher pitched one.

Sili said,

May 17, 2018 @ 1:31 pm

Shouldn't it be "prof. Dr. Styles" the way David L. has it? Rather than OP's "Dr. prof."?

Howard C. said,

May 17, 2018 @ 1:49 pm

Thanks for the analysis, and kudos to the NYT site. Before reading up on this, I played the clip on my home PC system with decent speakers and clearly heard Yanny. I speculated that frequency balance might have something to do with it so I turned up the volume, stuck my fingers in my ears, and clearly heard a low-pitched Laurel. It was quite repeatable: fingers in and out, Laurel and Yanni back and forth. I played around with other ways of muffling my ears, e.g., cupped hands, and could get the sound to switch back and forth. I also observed the hysteresis phenomenon by making small hand movements between repetitions of the audio.

Alas, I have not been able to hear both names simultaneously, though I haven't tried all that hard!

Roger Lustig said,

May 17, 2018 @ 3:53 pm

@Victor Mair:

Clear 'Laurel' up to about 8, then *some* shifting? Me too, though my tinnitus is probably not as severe as yours. Mine's like having an old-fashioned TV set in the room–same whine, perhaps a tad louder. Age 61, fwiw.

On the embarrassing side, when I get past 8, what I hear is more like "Yalie."

Doug said,

May 17, 2018 @ 4:19 pm

Can't somebody just run the sound through a speech recognition app and see what a computer says? Surely there's no bias there.

Victor Mair said,

May 17, 2018 @ 4:33 pm

From Kelsey Seymour, a professional flautist who is just now completing her dissertation on early medieval Chinese Buddhist music:

I can hear both words simultaneously, and I can also focus my listening on either the high pitches or the low pitches to hear each word distinctly. I partially suspect this is a skill learned from musicianship training (though perhaps not limited to that!). We spent a lot of time listening to recordings, then writing out the basslines and figuring out the harmonies, so we had to get very used to listening to a number of pitches at the same time and picking specific ones out of the set.

Terrance Nearey said,

May 17, 2018 @ 4:45 pm

@Howard C: Spectral balance also does it for me.

Following up on comments from work we did in our lab yesterday (see Ben Tucker's summary comment above): We fiddled a little with filtering in Praat yesterday, but not quite enough.

Today, just before I read Howard C's comment, I looked at long-term average spectra of the original Vocabulary.com version and the ambiguous Reddit version. After scaling to equal rms amplitude over the full frequency range, there was a modest +3 dB difference of original over the ambiguous below 1000 Hz. But by about 1200 Hz, the balance had switched and the ambiguous one was more intense by about 10 dB, with the gap gradually increasing to about 25 dB at 4500 Hz.
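The comparison described above (scale both clips to equal rms, then compare levels band by band) can be sketched roughly as follows. This is only an illustration, not the Praat analysis actually used: the two "clips" are synthetic two-tone signals, and the names `rms_normalize`, `band_level_db` and `two_tone`, the tone frequencies and the amplitudes are all assumptions made up for the example.

```python
import cmath
import math

def rms_normalize(x):
    """Scale a signal so that its rms amplitude is 1."""
    rms = math.sqrt(sum(v * v for v in x) / len(x))
    return [v / rms for v in x]

def band_level_db(x, fs, f_lo, f_hi):
    """Summed spectral energy (dB) in [f_lo, f_hi), using a naive DFT."""
    n = len(x)
    energy = 0.0
    for k in range(n // 2 + 1):
        if f_lo <= k * fs / n < f_hi:
            X = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            energy += abs(X) ** 2
    return 10.0 * math.log10(energy)

def two_tone(fs, n, a_low, a_high, f_low=500.0, f_high=3000.0):
    """Hypothetical stand-in for a clip: one low and one high component."""
    return [a_low * math.sin(2 * math.pi * f_low * t / fs)
            + a_high * math.sin(2 * math.pi * f_high * t / fs)
            for t in range(n)]

fs, n = 8000, 400
original = rms_normalize(two_tone(fs, n, 1.0, 0.05))   # weak high band
ambiguous = rms_normalize(two_tone(fs, n, 1.0, 0.5))   # boosted high band

# Positive values mean "original louder below 1 kHz" and
# "ambiguous louder above 1 kHz", respectively.
low_diff = band_level_db(original, fs, 0, 1000) - band_level_db(ambiguous, fs, 0, 1000)
high_diff = band_level_db(ambiguous, fs, 1000, 4000) - band_level_db(original, fs, 1000, 4000)
print(round(low_diff, 2), round(high_diff, 2))
```

Because both signals are normalized to the same overall rms, boosting the high band in one of them necessarily leaves it slightly weaker in the low band, which is the same pattern of a small low-frequency difference and a much larger high-frequency one.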

I first heard the ambiguous version on Wednesday morning on BBC World Service’s Outside Source on a tinny clock-radio. I heard it consistently as “Yari” over various audio devices until after the CTV crew left our lab at 1 pm or so. (See https://edmonton.ctvnews.ca/mobile/u-of-a-linguists-dissect-viral-laurel-and-yanny-audio-clip-1.3932945) It was only after that time that I heard the original Vocabulary version, which I hear reliably as “Laurel”. Eventually, but by no means immediately, I ‘flipped’ on the ambiguous Reddit one and could only hear it as “Laurel” until last night, when I heard it on the CTV clip, where it was back as “Yari”. It stayed “Yari” this morning and so far today (on my Macbook).

Resuming our filtering experiments from yesterday and staying on my Macbook, I found that preemphasis using Praat's factory setting (about +6 dB/octave above 50 Hz) is enough to tip Vocabulary.com's clear “Laurel” to “Yari” for me (today at least). Conversely, I have to deemphasize the ambiguous one twice (-12 dB/octave above 50 Hz) to get a convincing Laurel.
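For readers who want to try this kind of spectral tilt outside Praat, here is a minimal sketch of a first-order pre-emphasis filter of the sort the Praat manual describes, with coefficient exp(-2πFΔt) for a from-frequency F. The function names are made up for the example, and treating de-emphasis as the exact recursive inverse is an assumption here, not a statement about what Praat does internally.

```python
import cmath
import math

def coefficient(from_hz, fs):
    """Filter coefficient a = exp(-2*pi*F/fs) for from-frequency F."""
    return math.exp(-2.0 * math.pi * from_hz / fs)

def pre_emphasize(x, from_hz, fs):
    """y[i] = x[i] - a * x[i-1]: tilts the spectrum up by roughly 6 dB/octave."""
    a = coefficient(from_hz, fs)
    return [x[0]] + [x[i] - a * x[i - 1] for i in range(1, len(x))]

def de_emphasize(y, from_hz, fs):
    """Recursive inverse, out[i] = y[i] + a * out[i-1]: roughly -6 dB/octave."""
    a = coefficient(from_hz, fs)
    out = [y[0]]
    for i in range(1, len(y)):
        out.append(y[i] + a * out[i - 1])
    return out

def gain_db(f, from_hz, fs):
    """Gain of the pre-emphasis filter at frequency f, in dB."""
    a = coefficient(from_hz, fs)
    h = 1.0 - a * cmath.exp(-2j * math.pi * f / fs)
    return 20.0 * math.log10(abs(h))

fs = 44100
# Gain difference across one octave (1 kHz to 2 kHz) with a 50 Hz from-frequency.
slope_per_octave = gain_db(2000, 50, fs) - gain_db(1000, 50, fs)

# Pre-emphasis followed by de-emphasis should give the input back.
signal = [math.sin(2 * math.pi * 440 * t / fs) for t in range(200)]
round_trip = de_emphasize(pre_emphasize(signal, 50, fs), 50, fs)
print(round(slope_per_octave, 2))
```

Applying `de_emphasize` twice in a row gives the steeper, roughly -12 dB/octave tilt mentioned above.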

For me, I think there's clearly an auditory Necker-cube-like thing going on (Google it). I get a very big shift in apparent voice quality (speaker size). Reddit “Yari” sounds a bit like helium speech (robo-munchkin, extreme Popeye), while the original “Laurel” is a deep baritone male. For the ten minutes or so I heard the ambiguous one as “Laurel” yesterday, I also heard it as the baritone male over a tinny audio channel. I cannot readily change this by trying. Sometimes it just happens.

What I think is going on for me is the relatively high amplitude of the F3 at about 2800 Hz compared to the weak F2 of the initial L of the ambiguous signal lures me into tracking F3 as F2. This is a pretty high F2 (higher than average female /i/ of the Peterson and Barney data), and so is not appropriate for an average adult male, much less our deeply resonant baritone.

As it happens, using Praat’s factory settings formants track just this way. The original Vocabulary.com shows F1 and F2 below 1000 Hz throughout with F3 starting at about 2800 and dipping to about 1660. But for the ambiguous Reddit one, only one formant tracks below 1000 Hz and F2 is always above 1500 Hz, following essentially the F3 track of the original.

I’m not so sure about the upward spread of masking explanation in our lab’s earlier post , but it might be a factor in whether the low amplitude F2’s are likely to be discounted in some kind of perceptual formant tracking process.

Incidentally, my long-term exposure to manipulating apparent "head-size" in synthetic and processed natural speech may make it more likely that I will hear "robo-munchkin" voices more frequently than the average bear.

Terrance Nearey said,

May 17, 2018 @ 6:06 pm

@me

I finally looked up from my plodding Praat work to read more of the earlier posts. I see the NY Times slider app https://www.nytimes.com/interactive/2018/05/16/upshot/audio-clip-yanny-laurel-debate.htm ran circles around my pre-emphasis tricks before I even started. (Scooped by the Times again.)

I get whopping hysteresis depending on which end I start… sticking with that for a long time, then finally switching as I move to the far end. Maybe we need to set up a Bekesy- or PEST-tracking experiment on this and see how listeners differ on the crossover point.

Currently, with the slider in the middle on the Times app and the sound looping in the background as I type, I'm hearing both simultaneously, with the munchkin dominating most of the utterance, but the baritone in the background (bari's -el) pops out a bit at the end.

I have so far not gotten a Necker-cube like spontaneous perspective switch with repeated listening to the same setting.

CORRECTION: Ben Tucker notified me that Praat is not tracking the Vocabulary clip with default settings the same way mine is. I have since determined that my pre-emphasis setting is NOT at the standard value; I moved it off scale (effectively no preemphasis) for some other work I was doing last week and forgot I left it that way.

So to get the Praat formant tracks that jibe with my earlier post today,

In the sound editor :

Formant Settings:

Max Formant: 5500

N. formants : 5

Window length: 0.025

Dynamic range: 30

Advanced Formant Settings:

Pre-emphasis from (Hz): 1000000

BlackHatSEM said,

May 17, 2018 @ 7:29 pm

Like others, I heard "Yanny" the first time and "Laurel" every subsequent time. Very Weird!

Ken said,

May 17, 2018 @ 10:17 pm

When I listened through headphones I heard only Laurel, but through my Macbook speakers I hear Yanny (with Laurel softly under it).

ktschwarz said,

May 17, 2018 @ 10:48 pm

Terrance Nearey's URL is misspelled: it should be "ualberta", not "uaberta", which goes to something predatory that redirects to random ads. Can the management fix this?

Thanks for a terrific post. What does machine speech recognition make of this? My iPhone failed to recognize anything at all on any slider setting. It also gets almost nothing from youtube videos played on my laptop, but does get about 50% right on words pronounced by vocabulary.com (for their original "laurel", it got "moral").

Jonathan Smith said,

May 17, 2018 @ 11:32 pm

To me lots of response to this is missing the madness of it. The idea of shenanigans per se considered in the OP turns out to be wrong… so, this is just (somesortuv) a recording of a guy saying "Laurel". Thus, clearly there exist vast numbers of audio files with equivalent rabbit-duck properties… meaning… holy shit perception is <mindblown.gif>

Globules said,

May 17, 2018 @ 11:48 pm

With Yanny vs. Laurel I can force myself to hear one or the other, but it requires a number of repetitions to make the transition. However, with Brainstorm or Green Needle I can switch between the two instantly.

Rachael said,

May 18, 2018 @ 2:34 am

At first I only heard Laurel, and I had to move the NYT slider all the way right before I could hear Yanny. I also got the hysteresis effect, hearing Yanny on the way back from the right to the middle.

The weirdest thing was that I found a point about 3/4 of the way to the right where I could choose to hear either at will. It feels like being able to control with my mind which of two distinct sound files plays. I absolutely can't hear both at once.

Bill Benzon said,

May 18, 2018 @ 6:20 am

@Roger Lustig: …when I get past 8, what I hear is more like "Yalie."

Me too.

I hear nothing for the 4500-9500 Hz clip, but can hear the other two.

70 years old.

bks said,

May 18, 2018 @ 6:55 am

I get so much hysteresis from the NYTimes slider that I'm convinced that the slider itself has some hysteresis.

bianca steele said,

May 18, 2018 @ 11:03 am

I was hearing the two semi-randomly but a minute ago used the NYT tool that gives you a slider to strengthen one or the other. I was surprised that the same setting, one tick from center, sounded different depending on what had gone before! And now I can hear either one by saying the word silently to myself before it plays.

I guess this is also confirmation that what I perceive as high frequency tinnitus isn’t connected with high frequency hearing loss.

bianca steele said,

May 18, 2018 @ 11:07 am

Actually now I’m wondering, because I’ve been experimenting with notched audio as a tinnitus therapy, whether a similar phenomenon to what’s described in the OP might mean I’m mishearing the tinnitus frequency as well.

Norman Smith said,

May 18, 2018 @ 11:40 am

I heard this first on the television and heard only "Laurel" (repeatedly – it was an annoying feature of the newscast). Here, on my laptop, I hear only "Yanny". I wonder if the speakers on the device make the difference?

Victor Mair said,

May 18, 2018 @ 12:06 pm

"I guess this is also confirmation that what I perceive as high frequency tinnitus isn’t connected with high frequency hearing loss."

It is for me. This was empirically demonstrated by Duke University physicians (audiologists) who examined me in 1993.

Craig S said,

May 18, 2018 @ 4:32 pm

I tried the New York Times "slider" app at https://www.nytimes.com/interactive/2018/05/16/upshot/audio-clip-yanny-laurel-debate.html.

While it's Laurel for me to start, if I shift the slider to the right I have to go all the way to the far right end of the tool to get to "Yanny". If I shift it back right toward Laurel right away after just one play, then I lose "Yanny" right away — but if I let it play at "Yanny" for about five to ten repetitions before shifting back, then it stays at "Yanny" until I'm all the way over to the far left "Laurel" end.

In other words, I'm definitely having a "what is your brain already primed to be hearing here?" effect that's influencing my perception.
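The behavior described above, where the flip point depends on which direction the slider is moving, can be sketched as a listener with two separate thresholds. This is only a toy model: the threshold values 0.8 and 0.2, the 0-to-1 slider scale, and the function name `sweep` are assumptions made for illustration, not measurements of anyone's perception or a description of how the Times tool works.

```python
def sweep(positions, state, to_yanny=0.8, to_laurel=0.2):
    """Return the percept heard at each slider position, in order.

    The percept only flips when the slider crosses the threshold on the
    far side, which is what produces hysteresis. Thresholds are assumed.
    """
    heard = []
    for pos in positions:
        if state == "Laurel" and pos >= to_yanny:
            state = "Yanny"
        elif state == "Yanny" and pos <= to_laurel:
            state = "Laurel"
        heard.append(state)
    return heard


# Slider from 0.0 (Laurel end) to 1.0 (Yanny end) in ten ticks.
steps = [i / 10 for i in range(11)]
going_up = sweep(steps, "Laurel")
going_down = sweep(list(reversed(steps)), going_up[-1])

# The same mid-slider setting (0.5) is heard differently in each direction.
print(going_up[5], going_down[5])  # prints: Laurel Yanny
```

A single shared threshold would make the two sweeps agree at every position, so the gap between the two thresholds is the part of the model that stands in for priming.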

Victor Mair said,

May 18, 2018 @ 6:27 pm

They can't agree on anything! White House releases jokey video of staff – including Ivanka and Mike Pence – trying to settle the 'Yanny vs Laurel' debate, while Trump declares 'all I hear is covfefe'

Ivanka and Kellyanne Conway confidently declare that the clip is saying Laurel

Sarah Huckabee Sanders is Team Yanny, while Mike Pence asks 'Who's Yanny?'

Trump also joins in on the video, referencing his tweet that went viral last May

By Dailymail.com Reporter

Published: 22:34 EDT, 17 May 2018

http://www.dailymail.co.uk/news/article-5743219/White-House-staff-including-Trump-join-Yanny-vs-Laurel-debate.html

Video here:

Trump resurrects "covfefe" in White House video about the Laurel-Yanny debate

CBS News 5/18/18

https://www.cbsnews.com/news/trump-resurrects-covfefe-in-white-house-video-about-the-laurel-yanny-debate/

And here:

Trump, White House staff join in on ‘Yanny vs. Laurel’ debate

By Nicole Darrah, Fox News

May 18, 2018

And, of course, here:

https://www.youtube.com/watch?v=dfqZlMCcbdM

Terrance Nearey said,

May 18, 2018 @ 7:00 pm

Sorry about url error in my previous posts. I've fixed it here but I am unable to edit the earlier ones.

I fiddled with a Praat script that applies a boost of k dB per octave above a cutoff frequency (in Hz) to implement a homegrown version of the Times slider.

I put up a preliminary working version in a gist:

https://gist.github.com/tnearey/95a09c47bac19bcdc6ce4741ef14ad03

You'll have to hack for your own seed file. It implements an up-down staircase tracking procedure.

Press near the Y while you hear the target word, near N when you stop.

I get tremendous hysteresis effects starting with the Vocabulary.com "Laurel" as the seed stimulus. After a few up-down cycles, I can hear both (or aspects of both) simultaneously for a fair part of the middle range. Haven't tried it yet starting with the ambiguous Reddit one.

You can look at spectrograms for each of the k-dB per octave modifications.
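For readers who have not met a "k dB per octave boost above a cutoff", here is a minimal sketch of the gain curve such a filter implies. It is not the Praat script from the gist: the function names, the 1000 Hz cutoff, the 6 dB slope, and the flat-amplitude harmonics of a 100 Hz voice are all assumptions chosen for illustration, and the boost is applied to a list of (frequency, amplitude) pairs rather than to real audio.

```python
import math


def boost_db(freq_hz, cutoff_hz, k_db_per_octave):
    """Gain in dB: 0 at or below the cutoff, rising k dB per octave above it."""
    if freq_hz <= cutoff_hz:
        return 0.0
    # One octave is a doubling of frequency, hence log base 2.
    return k_db_per_octave * math.log2(freq_hz / cutoff_hz)


def apply_boost(components, cutoff_hz, k_db_per_octave):
    """Scale (frequency, amplitude) pairs, converting dB to a linear factor."""
    return [
        (f, a * 10 ** (boost_db(f, cutoff_hz, k_db_per_octave) / 20))
        for f, a in components
    ]


# Hypothetical harmonics of a 100 Hz voice, all with amplitude 1.0.
harmonics = [(100.0 * n, 1.0) for n in (5, 10, 20, 40)]
boosted = apply_boost(harmonics, cutoff_hz=1000.0, k_db_per_octave=6.0)
for f, a in boosted:
    print(f"{f:6.0f} Hz  x{a:.2f}")
```

With these assumed settings the components at and below the cutoff are left alone, while each octave above it roughly doubles the amplitude, which tilts the balance toward the higher-frequency region.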

Matt said,

May 18, 2018 @ 9:11 pm

Ben,

How did you get the audio from the video on twitter into a form you could analyze?

I copied the MP4 into my DAW directly and did not see activity above 6500 Hz in its scope.

Maybe you introduced those higher frequencies somehow?
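One way to make a question like this concrete is to measure what fraction of a clip's spectral energy lies above a given cutoff. The sketch below does that with a naive discrete Fourier transform on synthetic tones. Everything in it is an assumption for illustration: the function name, the 16 kHz sample rate, and the 1000 Hz and 7000 Hz test tones. It does not analyze the actual clip, and a naive DFT is far too slow for real recordings, where an FFT library would be the usual choice.

```python
import cmath
import math


def high_band_fraction(samples, sample_rate, cutoff_hz):
    """Fraction of spectral energy at or above cutoff_hz, via a naive DFT."""
    n = len(samples)
    total = high = 0.0
    for k in range(1, n // 2):  # skip DC, stop below the Nyquist bin
        coeff = sum(
            s * cmath.exp(-2j * math.pi * k * t / n)
            for t, s in enumerate(samples)
        )
        energy = abs(coeff) ** 2
        total += energy
        if k * sample_rate / n >= cutoff_hz:
            high += energy
    return high / total if total else 0.0


rate = 16000  # assumed sample rate in Hz
n = 320       # 20 ms of audio, so bins are 50 Hz apart

# A pure 1000 Hz tone, and the same tone plus a quieter 7000 Hz tone.
tone_low = [math.sin(2 * math.pi * 1000 * t / rate) for t in range(n)]
tone_mix = [
    math.sin(2 * math.pi * 1000 * t / rate)
    + 0.5 * math.sin(2 * math.pi * 7000 * t / rate)
    for t in range(n)
]

print(high_band_fraction(tone_low, rate, 6500))
print(high_band_fraction(tone_mix, rate, 6500))
```

The first value should come out as essentially zero and the second near 0.2, since the added tone has half the amplitude and therefore a quarter of the energy of the lower one.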

JPL said,

May 18, 2018 @ 9:28 pm

@Terrance Nearey:

So, is there a signal perceivable and interpretable as "yanni" (or "yari") contained in the original Vocabulary.com recording (I can't hear it there) (or is something there but not perceivable by human ears), or is it only in the "ambiguous Reddit recording"? (The OP seemed to imply that the Reddit version had something added to it. I would have thought that the ability to distinguish two patterns simultaneously would mean that there were two objects (performances), not one.)

Bruce said,

May 18, 2018 @ 11:30 pm

Looks like people have been synthesizing additional versions. This one's remarkable – I can flip between "green needle" and "brainstorm" by priming myself beforehand, just like they claim.

https://twitter.com/LiquidHbox/status/997176012113838080

Victor Mair said,

May 19, 2018 @ 12:03 am

@JPL

"I would have thought that the ability to distinguish two patterns simultaneously would mean that there were two objects (performances), not one.)"

Not necessarily so. There really are single performances with overtones and undertones (see the list of posts below). I mentioned "undertone" in this comment above.

http://languagelog.ldc.upenn.edu/nll/?p=38274#comment-1550871

Because of the structure of the head and vocal tract, there probably is a certain amount of this sort of reverberation in all speech and singing. I think what's happening with the Laurel-Yanny conundrum is that certain frequencies are being manipulated, either artificially / intentionally or through some other means, so that sounds one normally would not hear are enhanced and become audible. That's clearly what happened with me and several other commenters who couldn't hear Yanny at all until we got to the highest two notches on the slider, at which point I could hear both Laurel and Yanny simultaneously, though before that I could not hear Yanny at all. Above the tenth notch, at very high frequencies, I can't hear anything at all, because my ears have been damaged by an explosion that destroyed their receptivity to such high frequencies, whereas the low frequencies, which I have no trouble hearing, have been totally removed. No more Laurel.

"Overtone singing" (10/10/14)

http://languagelog.ldc.upenn.edu/nll/?p=15096

"Tooth and Throat Singing" (4/22/13)

http://languagelog.ldc.upenn.edu/nll/?p=4583

"Polyphonic overtone singing" (4/18/18)

http://languagelog.ldc.upenn.edu/nll/?p=37785

"Corsican polyphony" (11/25/13)

http://languagelog.ldc.upenn.edu/nll/?p=8661

Michael Tyler said,

May 19, 2018 @ 2:24 am

I'm leaning towards the "Mushy superformant hypothesis" (https://twitter.com/lisa_b_davidson/status/997117837180194817) where, for yanny, F1 and F2 combine to be perceived as F1, and F3 is perceived as F2. Joe Toscano's lab has provided an excellent explanation of it here: http://wraplab.co/yannylaurel/

JPL said,

May 19, 2018 @ 4:40 am

@Victor Mair

I was going to mention the overtone singing, and of course we have recently had the demonstration from Ms. Hefele, but that involves the overtones as opposed to different and simultaneous articulations involving tongue, lips, palate, etc., e.g., the different tongue positions of the dark /l/ and the high front glide /y/.

Philip Taylor said,

May 19, 2018 @ 4:48 am

JPL: "the different tongue positions of the dark /l/ and the high front glide /y/". Not convinced that these are a valid instance of "compare and contrast". For me, the initial "l" of "laurel" is a clear /l/, not a dark /ɫ/ (tho' the final "l" may well be dark, depending on the speaker), whilst the "high front glide /y/" of "yanny" occurs initially, not finally.

Ralph Hickok said,

May 19, 2018 @ 8:17 am

@Bruce:

No matter how hard I think of "brainstorm," I can hear only "green needle."

KevinG said,

May 20, 2018 @ 3:44 pm

This is a giant hoax.

I have heard both Laurel and Yanni clearly from the same source (CBS Morning News) through the same TV, the same set of ears attached to the same head on 2 consecutive mornings.

I'm convinced that all this scientific mumbo jumbo, the spectrographs with pages of attached explanations etc., is being put out to baffle us with bullshit.

There are clearly 2 separate audio files being fed to us.

Most likely Laurel to PCs that have clicked on a http://www.whatever.com link

and Yanni to cellphones and tablets that are automatically re-directed to m.whatever.com websites.

The NY Times slider thing simply fades between the 2 audio tracks, there is no EQ or audio comb filters or compression involved.

We are being taken for a ride. Made even clearer by a piece I saw on TV yesterday trying to make us feel better about formerly sane friends and relatives falling for the lies and spin of "those others" you know "THEM" and how it's OK to think differently and maybe 2 people who thought they knew each other really well can actually hear things completely differently.

Bullshit! I say!

Remember who filed for bankruptcy this week….yeah Cambridge Analytica, could this be their one final last game played on those stupid Americans?

Why Not?

Anybody got a better idea?

I don't know any group of folks who were together listening to it and didn't all hear the same thing at the same time.

Ellen K. said,

May 20, 2018 @ 4:32 pm

KevinG, your theory ignores a few things. Including, importantly, in some cases, two people listening at the same time, to the same source, have heard it two different ways. (Just because you don't personally know them doesn't mean their testimony isn't true.) See comment from L right here, May 16, 2018 @ 3:28 pm.

And also mentioned here, some people are able to hear both at once.

And some people have heard it different ways from the same link, on the same device. Even sometimes on the same play through. And some of us have been able to change what we hear based on what's going on in our head.

But feel free to ignore the evidence that doesn't fit with what you want to believe, if that suits you.

Philip Taylor said,

May 20, 2018 @ 4:37 pm

I played the New York Times (slider) version to my wife, and she and I heard quite different things; she also differed from me as to the point on the slider at which there was a marked transition from one sound to another.

frank said,

May 20, 2018 @ 8:28 pm

i only hear "yari" anyone else?

Rube said,

May 22, 2018 @ 7:27 am

@KevinG: in case you're being serious, I personally have had the experience of hearing one thing while someone I was with heard something else, from the same recording, at the same time. This is pretty much the essence of what's surprising people.

Terrance Nearey said,

May 23, 2018 @ 3:26 pm

@JPL

"So, is there a signal perceivable and interpretable as "yanni" (or "yari") contained in the original Vocabulary.com recording (I can't hear it there) (or is something there but not perceivable by human ears), …"

It's "in" the original Vocabulary.com signal. Basically, adjusting the equalization to emphasize high frequencies relative to low ones can flip me to Yari.

This is roughly similar to what the Times site is doing to the ambiguous one, but only on the right-side of the center point. Maybe if I get a chance I'll modify the program to move in both directions.

The alterations in the Reddit version were, I think, the accidental result of low-fidelity re-recording: playback on some combination of computer or cellphone speakers, re-recorded using a mic that possibly picked up background noise.

I don't think it's high frequency noise that matters so much as the relatively weak lower frequencies.

Now when I hear the Reddit clip on TV or radio, I usually hear it as Laurel (though I used to hear Yari on the first day) unless they're broadcasting sounds recorded from groups of people listening to a phone or tablet (weak bass response), when I hear Yari again.

Keith said,

May 24, 2018 @ 2:17 am

@KevinG

I listened to this through earphones, and clearly heard "Laurel" until I pushed the slider almost all the way to the right, where I heard "yery", as described above.

A few days later, I sent the link to a colleague, and several of us listened at the same time through tinny little speakers; I heard "yery" with the slider in the centre, but it quickly became "Laurel" when pushed a little to the left.

So I'm convinced that there are at least three things contributing to what each person "hears".

– the audio playback mechanism (software and hardware filters, amplifier, speaker or headphone)

– the ears of the listener (it's widely known that each person has a slightly different sensitivity to each frequency)

– what we expect to hear (culturally influenced, because we try to identify a known word or we have already been asked whether we hear "Laurel" or "Yanny")

Keith said,

May 24, 2018 @ 2:18 am

I forgot to mention the reactions of my colleagues.

Some heard one word, some heard the other; and the switch from one to the other happened at different positions of the slider.

Julie G said,

May 24, 2018 @ 11:33 am

I'm willing to bet that the ambiguity in the brainstorm/green needle recording has to do with some aspect of the sound quality making the high frequency formants of the /s/ sound like the F2 of /i/. The other pairs of interpreted sounds, such as t/d, br/gr, ei/i may also have formant ambiguities. I'm tempted to run a spectral analysis on it in Audacity or sox to try and figure out why the /rm/ can be so readily confused with /ɫ/.

M.N. said,

May 24, 2018 @ 1:50 pm

I'm another person who hears both "Yanny" and "Laurel" simultaneously. Well, the first time I heard it, it was in a video that played the clip twice, and I heard "Yanny. Laurel." But when I played the video again, I heard both simultaneously.

Usually, if you listen to a short clip of speech played repeatedly, it starts sounding like music; weirdly, I never experienced this with this clip. Maybe it's *too* short?

On the other thing, I can't hear "storm", just "needle".

JPL said,

May 24, 2018 @ 6:45 pm

@Terrance Nearey