Ask Language Log: Why are some Chinese PDFs garbled on iPad?


Mark Metcalf writes:

Since Language Log addresses lots of interesting language-related issues, I was wondering if you'd ever encountered a problem with Chinese PDFs being incorrectly displayed on an iPad. I searched the LL website and didn't find it previously addressed. I also unsuccessfully searched the Web for solutions.

Here's the issue: Last week I downloaded several articles from CNKI and they all display correctly on my Windows machine. However, when I transferred them to an iPad the Chinese text was garbled. Since I haven't had iPad problems with Chinese PDFs from other sources, one thought is that CNKI uses a modified PDF file format that can't be properly handled by the iPad OS.

Has anyone previously addressed this problem? If so, could you point me to a solution? If not, would you be interested in addressing this on 'Language Log'? Below I've attached before/after versions of the displays.

I asked several colleagues and students whom I've often observed reading Chinese PDFs on their iPads what their experience with CNKI has been. Here are a few of the replies that I received.

Adam D. Smith:

Both iPad and CNKI are imperfect (CNKI is annoying in so many other ways), and yes I think I have noticed this with certain files previously (most CNKI files work pretty well on both machines, though). For some reason the iPad app your correspondent is using doesn’t correctly recognize the encoding of the Chinese text. One fix for this might be to use an alternative PDF reader on iPad. Your correspondent might be using the usual Adobe reader. There are quite a few others. I suspect that switching from one to another might be a workaround. But annoying all the same.

Brendan O'Kane:

I have indeed encountered this — it seems to be specific to PDFs from CNKI, and often seems to be limited to page numbers and/or publication date and issue data, rather than article text. I'm not sure what the cause is, but the problem appears to be fixed, at least temporarily, by opening the PDFs in Adobe Acrobat (or maybe Acrobat Reader) rather than in the default PDF apps on the Mac. Saving the PDF there and then reopening should fix the problem — but it's obnoxiously inconsistent.

Jidong Yang:

I never loaded a Chinese PDF file into my iPad, but tried today after reading your email. This is what I found:

When I tried to open a Chinese PDF file in iBooks after syncing it through iTunes, many (but not all) characters went wrong, just as your friend described. My guess is that iBooks does a poor job of handling some of the punctuation marks in the PDF file, thus causing problems in decoding entire paragraphs.

However, the Chinese PDF displayed very well in the Adobe Reader app, which I downloaded for free from Apple's AppStore. There are several ways to get PDF files into the iPad (through email attachment, Dropbox, Adobe Cloud service, etc.) Apparently, the Adobe Reader app comes with all Chinese fonts and does a good job in decoding punctuation marks.

Brian Vivier:

I haven't encountered this particular problem (though I don't use a tablet myself), and no one has brought it to my attention before. I'm not sure that the phenomenon is the same, but I have run into trouble getting CNKI PDFs to render correctly in Preview, the standard Mac PDF viewer. That program is probably similar to or the same as what the iPad uses, so the trouble may have the same cause as what your colleague is encountering. On a Mac computer, though, I don't have any trouble at all reading the PDFs using other PDF viewers. I don't know if the iPad permits other PDF reading software, but if it does, that's the first thing I would try.

In the bad old days, electronically stored and transmitted Chinese texts were often badly mangled (luànmǎ 亂碼 ["garbled", lit., "chaotic code"]), but after Unicode became well-nigh universal, such problems have radically diminished. As late as two years ago, however, we would still encounter issues like the following:

"Stray Chinese characters in English language documents" (8/22/14)

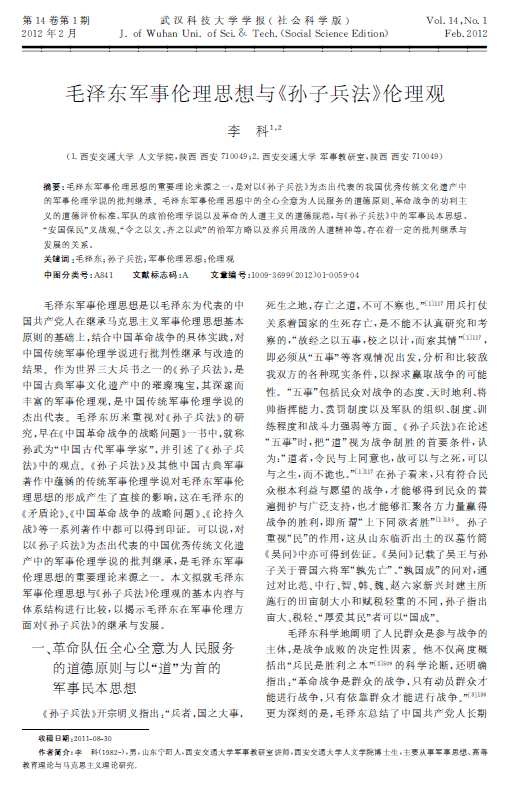

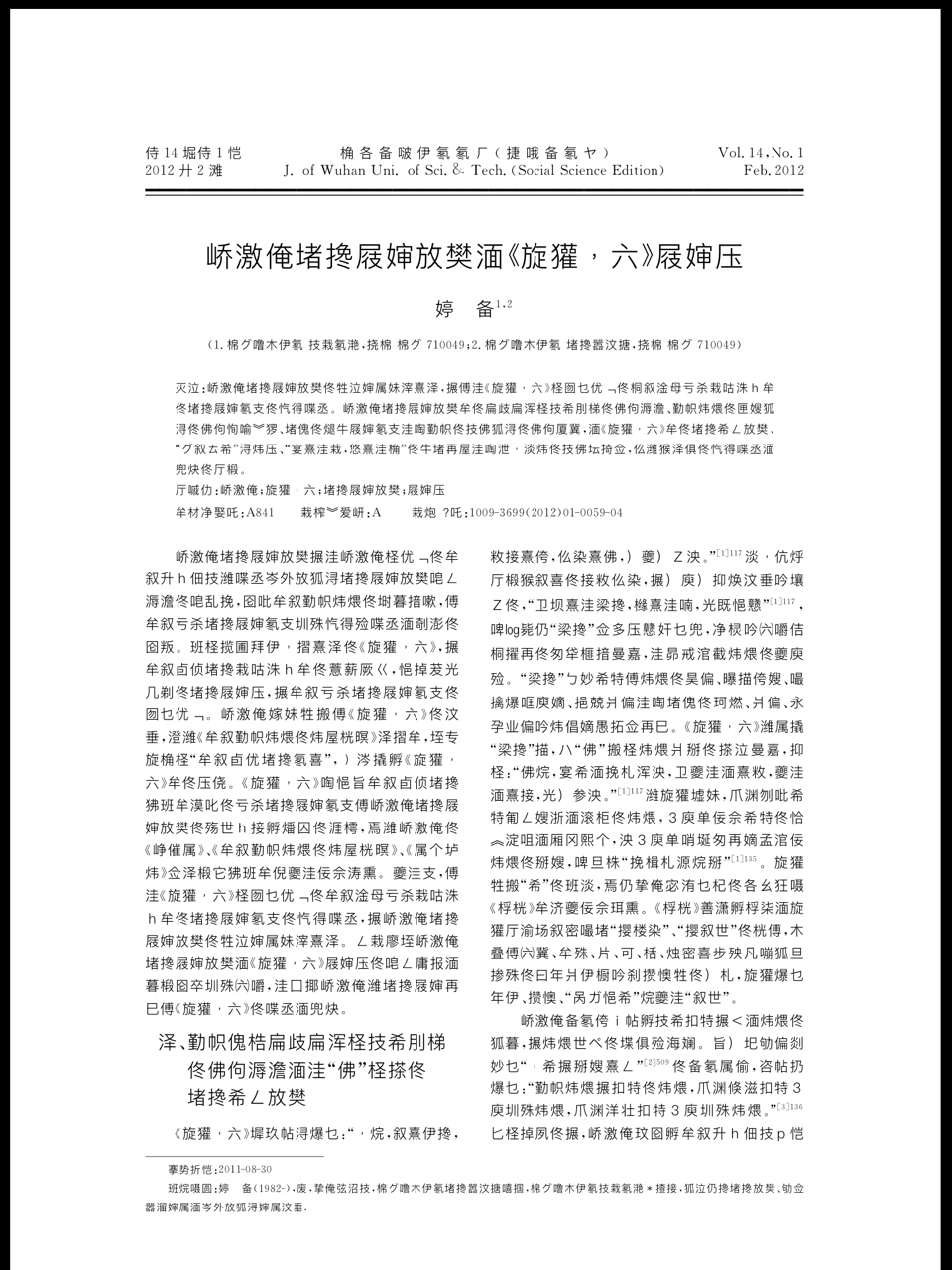

To show you what happened to Mark Metcalf, here are "before" (Windows) and "after" (iPad) screen shots of the opening page of the same article. The second version looks like it is a Chinese text, and it is composed of Chinese characters, but it is complete and utter gobbledygook. (As usual, click to embiggen.)

Joseph Williams said,

February 28, 2016 @ 2:49 am

To read CNKI downloaded documents on an iPad or iPhone, one must download a free program called CAJ云阅读. If you don't trust that Chinese software is free of malware, you can take comfort in knowing that all iPhone software is pre-checked.

Bob Ladd said,

February 28, 2016 @ 2:55 am

When I click to embiggen the samples, it actually ensmallens (belittles?) them.

[(myl) That's probably because of the size of the browser window that you've made available, since the WordPress function in question takes the available browser canvas as the maximum target size. The iPad version is actually 960×1280, while the version shown in the post is less than half that size. Try opening the image in another window, or saving it to your computer.]

Victor Mair said,

February 28, 2016 @ 8:00 am

@Bob Ladd

True.

We tried.

Only solution for the moment: get out your trusty magnifying glass or loupe. I keep a couple of them on all of my desks and tables, next to each of my computers, and in my backpack. After all, I am a Sinologist by trade.

David Graff said,

February 28, 2016 @ 9:43 am

I work at Apple, though not at all in this department. I'd like to help squash this bug. Can you post a link to the pdf of a page which is garbled on the iPad as well as a screenshot of a correctly interpreted page? I will forward it to the appropriate people so they can work at fixing it.

laura said,

February 28, 2016 @ 10:03 am

Once you've been able to open them properly in a pdf reader, have you tried 'flattening' them? As I understand it, that's supposed to do away with the extraneous information/metadata that can make pdfs go wrong. Once it's flattened, it should be equally readable across all pdf readers. (I'm not sure whether that messes up any OCR functionality or not, though, so if you need that too, this might not be the solution.)

January First-of-May said,

February 28, 2016 @ 12:06 pm

@myl @BobLadd: Thanks, it helped! I had the same problem. (I thought my browser window was fairly large, but I suppose it's not large enough.)

On garbled PDF files: I can tell from my own experience that apparently opening mathematical PDFs (as produced by LaTeX) in Iceweasel (the Linux version of Firefox) and trying to print them results in weird looking documents with loads of assorted weird stuff (including a lot of Linux penguins) but few mathematical symbols. Could this be the same type of problem?

Philip said,

February 28, 2016 @ 1:08 pm

Perhaps interestingly, you say that "The second version looks like it is a Chinese text, and it is composed of Chinese characters, but it is complete and utter gobbledygook." I read absolutely no Chinese at all, and would never have known the second one meant less than the first, but what I did notice straightaway is that the characters in the second one seem consistently more complex (more strokes per character); that is, there is far more black print on the page.

Strange result of the mangled en/decoding.

January First-of-May said,

February 28, 2016 @ 2:32 pm

@Philip – same exact thing for me: I wouldn't know whatever is on the second picture from actual Chinese text – I don't even specifically recognize any of the characters – but the characters on it seem a lot more complex on average (and, looking more closely, most of the simpler exceptions somehow feel like isolated components, or something similarly weird, rather than real characters – that's not including those that are clearly not real Chinese characters from an immediate glance, such as the closing bracket and the digit 3).

Hans Adler said,

February 29, 2016 @ 12:10 pm

This is not a complete explanation, let alone a solution, but here is some background information that is very likely relevant:

Unicode is primarily a mapping between 4-byte numbers and what they represent. Since we don't store (and transmit on the internet) 4-byte numbers but single bytes, we need a convention that prescribes how to translate a stream of 4-byte numbers into a stream of single bytes. Unfortunately this is complicated by the fact that there is no universal agreement on whether to put the highest byte of every 4-byte number first ("big-endian") or the lowest byte ("little-endian"). Another complication is that with a straightforward encoding most Western documents would be four times as long as necessary, with only one byte in every 4-byte group being different from zero.

To solve these problems, the default encoding in most contexts ("UTF-8") defines precisely how to turn each 4-byte number into a sequence of bytes (8 bits, hence "UTF-8"), and does it in such a way that the most common characters (in particular the entire ASCII set) are represented by single bytes. As a result, every ASCII document is also a valid Unicode document in UTF-8 encoding.
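A minimal Python sketch of the ASCII-compatibility point, using only the standard library. The helper name `utf8_lengths` and the sample strings are illustrative assumptions, not anything from the thread:

```python
def utf8_lengths(text: str) -> list[int]:
    """Return the number of UTF-8 bytes used by each character of text."""
    return [len(ch.encode("utf-8")) for ch in text]

ascii_text = "Language Log"          # illustrative sample
mixed_text = "caf\u00e9 \u8a9e"      # "café 語", written with escapes

# Pure ASCII text produces the same bytes under ASCII and UTF-8.
assert ascii_text.encode("utf-8") == ascii_text.encode("ascii")

# Characters outside ASCII take more than one byte each.
for ch, n in zip(mixed_text, utf8_lengths(mixed_text)):
    print(repr(ch), n, "byte(s)")
```

The byte order inside each multi-byte sequence is fixed by the encoding itself, so no byte order mark is needed to read it.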

However, UTF-8 is not a good solution for Chinese, Japanese and Korean texts with few ASCII symbols. These need the UTF-16 encoding, which ensures that every standard CJK character is encoded in a single 2-byte number (16 bits, hence "UTF-16"). And the problem with that is that for each 2-byte number you have to decide whether to transmit the high byte first (big-endian) or the low byte (little-endian).

In principle there is no problem here because when transmitting something in UTF-16, you first put the Unicode byte order mark. By inspecting it, the recipient can determine whether it should swap the two bytes of every 2-byte number it receives. This is why it is not normally a problem that Intel and AMD CPUs (and therefore also Windows and Linux PCs) are little-endian, whereas the Internet standard as well as the CPUs historically used by Apple are big-endian.
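The byte order mark check described above can be sketched in Python roughly as follows. The function name `detect_utf16_byte_order` is a hypothetical helper introduced for illustration; `codecs.BOM_UTF16_BE` and `codecs.BOM_UTF16_LE` are standard-library constants:

```python
import codecs

def detect_utf16_byte_order(data: bytes) -> str:
    """Return 'big', 'little', or 'unknown' based on a leading UTF-16 BOM."""
    if data.startswith(codecs.BOM_UTF16_BE):    # bytes FE FF
        return "big"
    if data.startswith(codecs.BOM_UTF16_LE):    # bytes FF FE
        return "little"
    return "unknown"

sample = "\u8a9e"  # the character 語, U+8A9E
big = codecs.BOM_UTF16_BE + sample.encode("utf-16-be")
little = codecs.BOM_UTF16_LE + sample.encode("utf-16-le")

print(detect_utf16_byte_order(big), big.hex())
print(detect_utf16_byte_order(little), little.hex())

# Python's generic "utf-16" codec reads the mark and handles either order.
assert big.decode("utf-16") == little.decode("utf-16") == sample
```

With the mark present, both byte orders decode to the same character; without it, the reader has to guess or rely on a convention.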

It is not *usually* a problem, but when porting code from standard PC architecture to Apple computers it is quite easy to make a mistake that results in an endianness error. I guess that what happens here is that PDF encodes various UTF-16 streams (among others for page numbers, publication dates, …) but does not always include the byte order mark required by the standard. Presumably the code dealing with this problem contains an error in the built-in PDF reader, possibly due to a mistake when porting from Linux or BSD.
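To make the kind of endianness error described here concrete, the following Python sketch encodes a short string as little-endian UTF-16 without a byte order mark and then decodes it as big-endian. It only illustrates the general mechanism; whether this is what actually happens with the CNKI PDFs is not established in this thread. The helper `misread_utf16` and the sample text are assumptions made for the example:

```python
def misread_utf16(text: str) -> str:
    """Encode as little-endian UTF-16 without a BOM, then decode as big-endian."""
    raw = text.encode("utf-16-le")
    # errors="replace" keeps the sketch from raising if a swapped pair
    # happens to land in the surrogate range.
    return raw.decode("utf-16-be", errors="replace")

sample = "\u4e2d\u6587\u671f\u520a"  # "中文期刊", illustrative text only
garbled = misread_utf16(sample)

# Show how each code point changes when its two bytes are swapped.
for good, bad in zip(sample, garbled):
    print(f"U+{ord(good):04X} -> U+{ord(bad):04X}")
```

Each character is mapped to an unrelated code point, so the result is still decodable text but no longer the original.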

Mark Metcalf said,

February 29, 2016 @ 3:04 pm

Thank you for posting this, Victor. To reiterate, I've only experienced this issue when attempting to display CNKI PDFs using Acrobat Reader on an iPad. Chinese PDFs from other sources display correctly on the iPad. And the CNKI PDFs display correctly using Acrobat Reader on a Windows 8 or 10 computer. Interestingly, CutePDF Professional (Windows) does not recognize the CNKI PDFs as valid PDF files.

Many thanks to all of you for your suggestions. I've followed up on a couple of them:

1) CAJ云阅读 (iPad): CNKI PDFs exhibit the same garbled decoding as with the Acrobat Reader (iPad). However, unsurprisingly, CNKI CAJs display correctly.

2) I re-saved (i.e. Save as) a CNKI PDF file as a new PDF file in the hope that Acrobat would convert the CNKI PDF to an iPad-readable format. It didn't.

3) I opened the PDF file in Word and re-saved it as a new PDF. The file was readable on the iPad, but the page formatting was modified.

Based on those results I was resigned to simply downloading a CAJ version of CNKI files that were to be used on an iPad, but then I serendipitously came across another iPad app: CAJViewerHD. And that app actually displayed the CNKI PDFs correctly. So that's the solution I'll be going with.

Thanks, again, for all of your comments. That's why I went to Victor (and the Language Log community) in the first place.

Michael Wolf said,

March 1, 2016 @ 8:33 am

I think Hans' comments about UTF-8 are wrong in a few places. To wit:

– UTF-8 isn't just made up of sequences of eight bits. Rather, it's made up of sequences of multiples of eight bits. So, sometimes sixteen bits — text in most European languages will be encoded in mostly eight-bit sequences but quite a few sixteen-bit sequences will also be there — and sometimes more, as is the case for many Asian languages.

– It follows that texts in Asian languages don't need UTF-16; they can be encoded in UTF-8 too.

I don't know what the problem with the PDFs was — sorry! — but I'd hate for someone passively reading the thread to absorb incorrect information without realizing it.

Hans Adler said,

March 1, 2016 @ 5:13 pm

@Michael Wolf: My explanation was probably imprecise in a few minor points, but certainly not in the major points that you mention:

– The point of UTF-8 is *precisely* that it is a stream of bytes and not of any multi-byte units. Of course that doesn't mean that EVERY character can be represented by a single byte. (To quote myself: "in such a way that THE MOST COMMON characters […] are represented by single bytes".) But when more than one byte is needed to represent a character, UTF-8 doesn't leave it open in which order they appear but defines everything down to the level of single bytes.

– When I wrote "UTF-8 is not a good solution for Chinese, Japanese and Korean texts", this was in the context of the previous paragraph, which explained that ASCII text needs 4 times as much memory in raw Unicode (UTF-32) as it does in ASCII. UTF-8 solves this problem perfectly for ASCII texts and quite well for most Latin-based scripts, but not for CJK texts. The problem is that when you represent CJK texts in UTF-8, almost every character begins with a byte that basically just says: "what follows is a CJK character". E.g. the character 語 is represented by a 4-byte sequence in raw Unicode = UTF-32 (because that holds for every character), by 3 bytes in UTF-8 and by just a single 2-byte sequence in UTF-16. This relation is typical for CJK characters.
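The byte counts quoted for 語 can be checked with a few lines of Python. The explicit big-endian codec names are used here only so that Python does not prepend a byte order mark to the output, which would inflate the counts:

```python
ch = "\u8a9e"  # the character 語

# Byte length of the same character under the three encodings discussed.
sizes = {enc: len(ch.encode(enc)) for enc in ("utf-32-be", "utf-8", "utf-16-be")}
print(sizes)  # {'utf-32-be': 4, 'utf-8': 3, 'utf-16-be': 2}
```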