Machine learning


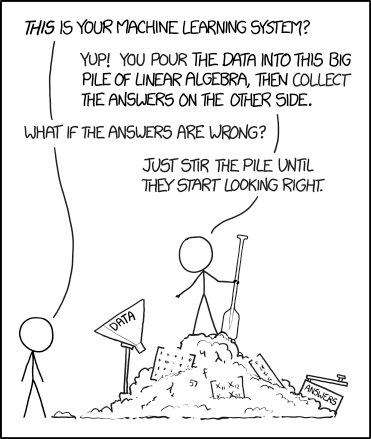

Today's xkcd: slightly unfair, but funny:

Mouseover title: "The pile gets soaked with data and starts to get mushy over time, so it's technically recurrent."

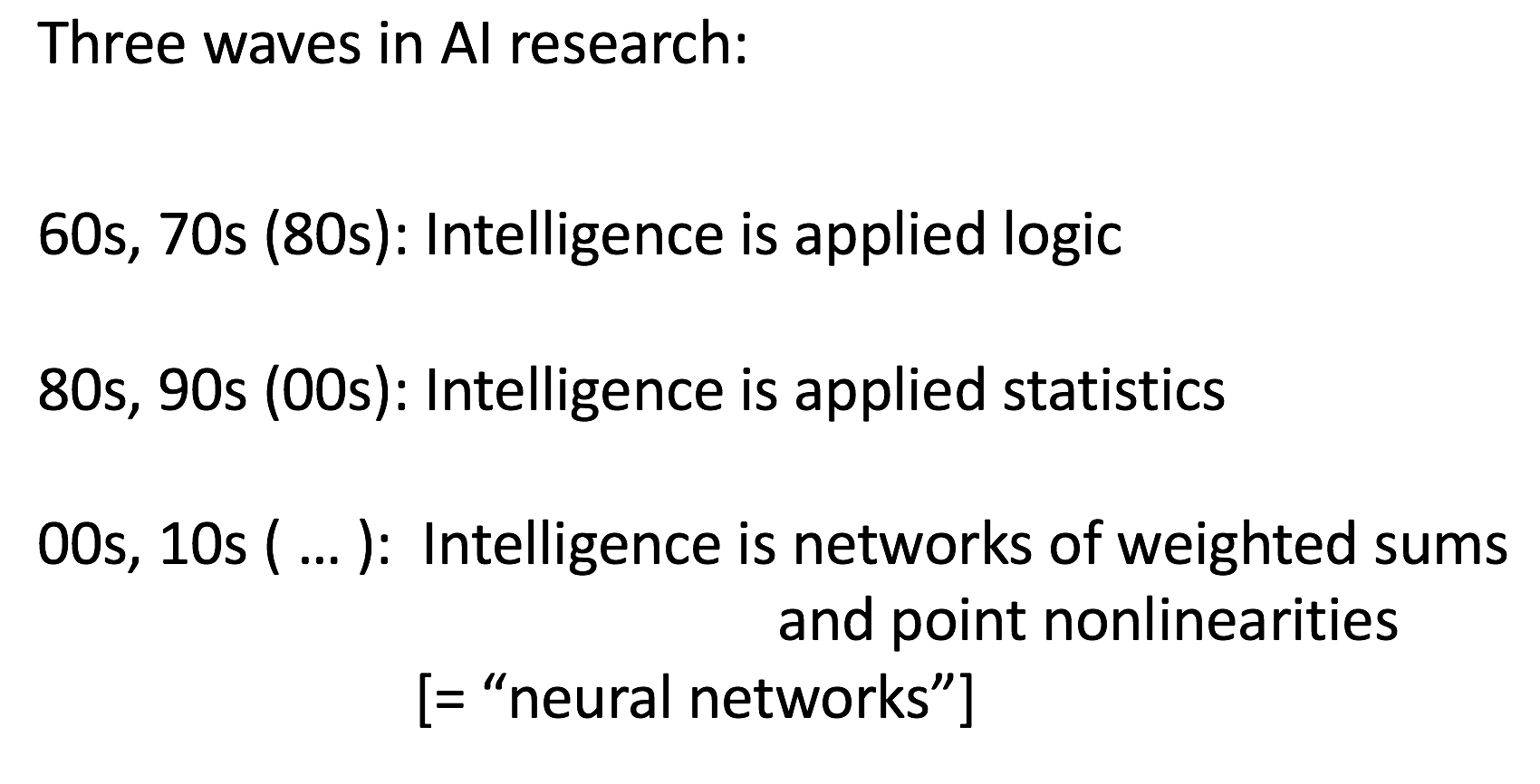

The first slide of my brief presentation about "The past, present, and future of AI" at a Science Café event a couple of weeks ago:

And just for fun:

"Country list translation oddity", 4/10/2017

"What a tangled web they weave", 4/15/2017

"A long short-term memory of Gertrude Stein", 4/16/2017

"Electric sheep", 4/18/2017

"The sphere of the sphere is the sphere of the sphere", 4/22/2017

"I have gone into my own way", 4/27/2017

"Your gigantic crocodile!", 4/28/2017

"More deep translation arcana", 4/30/2017

Though in fairness, the role of third-wave AI in those hallucinatory translations is conjectural.

And I need to confess to having just applied for the "equivalent of 200,000 hours of K80 GPU time" for stirring the piles of linear algebra at the JSALT 2017 workshop…

Dick Margulis said,

May 17, 2017 @ 1:18 pm

So, in other words, it took fifty years for the rest of the AI community to catch up with Frank Rosenblatt's 1957 Perceptron? https://en.wikipedia.org/wiki/Perceptron

Idran said,

May 17, 2017 @ 1:46 pm

@Dick Margulis: No, because the original 1957 perceptron only worked on linearly separable classification problems, i.e. data groupings whose convex hulls don't overlap, a property that almost no real-world data set you'd want to work with has. That's why the AI community dropped single-layer perceptrons: Minsky and Papert proved they couldn't even handle a basic XOR relationship.

Rosenblatt's perceptron wasn't some amazing revolution that people just ignored; it flat out didn't work unless your data set was just about trivially classifiable to begin with. Neural networks need to be multilayer to overcome that flaw.
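The linear-separability point is easy to check numerically. The sketch below is a minimal illustration, assuming the standard textbook form of the perceptron update rule with a hard threshold (not Rosenblatt's original hardware); the function names and the AND/XOR test cases are illustrative choices. A single threshold unit learns AND, which one straight line can separate, but never completes an error-free pass over XOR, because no line separates {(0,1), (1,0)} from {(0,0), (1,1)}.

```python
# Sketch: the textbook perceptron learning rule on a single threshold unit.
# AND is linearly separable; XOR is not.

def step(z):
    """Hard threshold: output 1 if the weighted sum is positive, else 0."""
    return 1 if z > 0 else 0

def predict(w, b, x):
    return step(w[0] * x[0] + w[1] * x[1] + b)

def train_perceptron(samples, epochs=100, lr=1.0):
    """Return (weights, bias, converged) after at most `epochs` passes."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            err = target - predict(w, b, x)   # -1, 0 or +1
            if err:
                mistakes += 1
                w[0] += lr * err * x[0]
                w[1] += lr * err * x[1]
                b += lr * err
        if mistakes == 0:                      # a clean pass: every point correct
            return w, b, True
    return w, b, False                         # never managed a clean pass

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print("AND converged:", train_perceptron(AND)[2])   # True
print("XOR converged:", train_perceptron(XOR)[2])   # False
```

For AND, the perceptron convergence theorem guarantees the loop stops once a separating line is found; for XOR it simply runs out of epochs, however many you allow.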

[(myl) This is not quite right — as Minsky and Papert (Perceptrons: An introduction to computational geometry, 1969) noted, multiple layers of linear perceptrons reduce by simple algebra to an equivalent single layer.

The crucial innovation was the non-linearities. Minsky and Papert noted that you could overcome the limitations of the linear perceptron that way, but incorrectly asserted that no learning algorithms could be constructed for such systems. I believe that Stephen Grossberg's methods, which proved them wrong at least in certain cases, were already around in the late 1960s (though not published until his "Contour Enhancement, Short Term Memory, and Constancies in Reverberating Neural Networks" in 1973). Certainly by the time of J.J. Hopfield, "Neural networks and physical systems with emergent collective computational abilities", PNAS 1982, and David Rumelhart and James McClelland, Parallel Distributed Processing: Explorations in the Microstructure of Cognition (1986), methods such as "back-propagation" were available. There was a substantial blip of PDP enthusiasm in the 1980s, but the main accomplishments of such systems were conceptual rather than practical, and they were eclipsed by statistical machine learning methods until the 2000s, when progress came in the form of some new learning algorithms and (mainly) much more efficient linear-algebra engines, originally developed as graphics units for gaming.

The deep history starts with McCulloch and Pitts, "A logical calculus of the ideas immanent in nervous activity", Bulletin of Mathematical Biophysics, 1943.]
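Both halves of that argument can be illustrated in a few lines of plain Python. This is a sketch with hand-picked weights (the matrices `W1`, `W2`, the input `x` and the threshold values are arbitrary illustrative choices, and biases are omitted from the linear part): stacking two linear layers gives exactly the same result as the single layer whose matrix is their product, while one hidden layer of threshold units computes XOR. It does not show how such weights are learned; back-propagation needs a differentiable non-linearity such as a sigmoid rather than a hard step.

```python
# Sketch: linear layers collapse; a non-linear hidden layer does not.
# All weights below are hand-picked for illustration, not learned.

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def matmul(A, B):
    """Matrix product A @ B for matrices stored as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

# (1) Two linear layers with no non-linearity in between.
W1 = [[1, 2], [3, -1], [0, 4]]   # 2 inputs -> 3 hidden units
W2 = [[2, -1, 1]]                # 3 hidden units -> 1 output
x = [5, -2]

two_layers = matvec(W2, matvec(W1, x))   # W2 (W1 x)
one_layer = matvec(matmul(W2, W1), x)    # (W2 W1) x, a single equivalent layer
print(two_layers, one_layer)             # [-23] [-23]

# (2) Put a threshold non-linearity in the hidden layer and XOR becomes computable.
def step(z):
    return 1 if z > 0 else 0

def xor_net(x1, x2):
    h_or = step(x1 + x2 - 0.5)        # hidden unit acting as OR
    h_and = step(x1 + x2 - 1.5)       # hidden unit acting as AND
    return step(h_or - h_and - 0.5)   # "OR but not AND" = XOR

print([xor_net(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]])  # [0, 1, 1, 0]
```

Because the first part uses integer weights, the equality is exact; adding biases would still leave the composition a single affine map.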

bks said,

May 17, 2017 @ 11:12 pm

I think that the deep history starts with Pitts' adviser, Nicolas Rachevsky.

bks said,

May 17, 2017 @ 11:13 pm

Sorry, that should be: Nicolas Rashevsky.

[(myl) Rashevsky's Wikipedia article says that "In the early 1930s, Rashevsky developed the first model of neural networks" — but it also says "Citation Needed". Can you provide one?]

D.O. said,

May 18, 2017 @ 12:33 pm

In 10 years or so they will discover that non-linear systems have chaotic behavior (period doubling, strange attractors,…) and AI will have turbulent thoughts. (Do we have psychiatrists ready?)

[(myl) Oh, we know, we know…

At least about the chaos. Not sure about period-doubling.]
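For readers wondering what "period doubling" refers to, the textbook illustration is the logistic map x → r·x·(1 − x), a one-line non-linear system. The sketch below is only an illustration of that dynamical-systems concept, not a claim about any particular neural network; the starting value, transient length and the sample values of r are arbitrary choices. It iterates the map past its transient and counts how many distinct values the orbit keeps visiting.

```python
# Sketch: period doubling and chaos in the logistic map x -> r*x*(1-x).

def logistic_orbit(r, x0=0.2, transient=1000, keep=64):
    """Iterate the map, discard the transient, return the next `keep` values."""
    x = x0
    for _ in range(transient):
        x = r * x * (1 - x)
    orbit = []
    for _ in range(keep):
        x = r * x * (1 - x)
        orbit.append(x)
    return orbit

def period(orbit, digits=6):
    """Number of distinct values (after rounding) the settled orbit visits."""
    return len({round(v, digits) for v in orbit})

for r in (2.8, 3.2, 3.5, 3.9):
    print(r, period(logistic_orbit(r)))
# expected: 1, 2 and 4 distinct values for the first three r; many for 3.9
```

As r grows, the settled orbit goes from a fixed point to a 2-cycle to a 4-cycle; the doublings accumulate near r = 3.5699, and beyond that most values of r give aperiodic, chaotic orbits, interspersed with periodic windows.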

bks said,

May 20, 2017 @ 10:01 am

MYL: From memory of when I was researching Robert Rosen about ten years ago. I no longer have access to journals without going to the Berkeley campus, but it might be in here:

http://www.sciencedirect.com/science/article/pii/S0097848501000791

James Wimberley said,

May 21, 2017 @ 1:35 pm

The only phrase I could understand in myl's response was "originally developed as graphics units for gaming". This is cheering news for all us timewasters, rubberneckers, role-playing addicts, and general idlers. "They also serve who only stand and wait".