More on "culturomics"


The "culturomics" paper that Geoff Nunberg posted about is getting a lot of well-deserved kudos. Jean Véronis writes:

When I was a student at the end of the 1970's, I never dared imagine, even in my wildest dreams, that the scientific community would one day have the means of analyzing computerized corpuses of texts of several hundreds of billions of words.

I've contributed my voice to the chorus — Robert Lee Holtz in the Wall Street Journal ("New Google Database Puts Centuries of Cultural Trends in Reach of Linguists", WSJ 12/17/2010) quotes me this way:

"We can see patterns in space, time and cultural context, on a scale a million times greater than in the past," said Mark Liberman, a computational linguist at the University of Pennsylvania, who wasn't involved in the project. "Everywhere you focus these new instruments, you see interesting patterns."

And I meant every word of that. But there's a worm in the bouquet of roses.

Here's a larger sample of my email Q&A with Mr. Holtz:

Q: What's your assessment of this computational approach to the historical lexicon? They suggest that this massive data base offers the foundation for new forms of historical and linguistic scholarship. In your view, are they right in heralding a new era for cultural studies or is this hyperbole? Is this likely to have any impact on scholarly studies?

A: I'd put their work in context this way: 2010 is like 1610. The vast and growing archives of digital text and speech, along with new analysis techniques and inexpensive computation, are a modern equivalent of the 17th-century invention of the telescope and microscope. We can see patterns in space, time, and cultural context, on a scale a million times greater than in the past. Everywhere you focus these new instruments, you see interesting patterns. Look at the sky, and see the moons of Jupiter; look at a leaf, and see the structure of cells.

This paper is an example of the sort of thing that is becoming possible, and will soon be easy. Its main contribution is to create a historical corpus of texts that is many times larger than those used in the past. Its main limitation is to look only at the frequency of word-strings over time.

Q: The researchers clearly see this data base as a tool for more than the study of the evolution of language. They talk about tracking a wide range of social trends — fame, censorship, diet, gender, science and religion — and I wonder how you assess that claim. Can the study of changing language on this scale be a lens to study all that?

A: In principle, yes. Some interesting questions can be reduced to a matter of changes in the frequency of words and word-strings. Other questions still have answers implicit in a historical text collection, but may require other sorts of analysis.

For a small but amusing example of the kind of problem that requires more than "culturomic trajectories", take a look at Giles Thomas's post about the systematic OCR substitution of f for long-s:

Why is it that of four swearwords, the one starting with ‘F’ is incredibly popular from 1750 to 1820, then drops out of fashion for 140 years — only appearing again in the 1960s?

Your first thought might be to do with the replacement of robust 18th-century English — the language of Jack Aubrey — with pusillanimous lily-livered Victorian bowdlerism. But the answer is actually much simpler. Check out this set of uses of that f-word from between 1750 and 1755. In every case where it was used, the word was clearly meant to be “suck”. The problem is the old-fashioned “long S”. It’s a myth that our ancestors used “f” where we would use “s”. Instead, they used two different glyphs for the letter “s”. At the end of a word, they used a glyph that looked just like the one we use now, but at the start or in the middle of a word they used a letter that looked pretty much like an “f”, except without the horizontal stroke in the middle.

But to an OCR program like the one Google presumably used to scan their corpus, this “long S” is just an F. Which, um, sucks. Easy to make an afs of yourself…

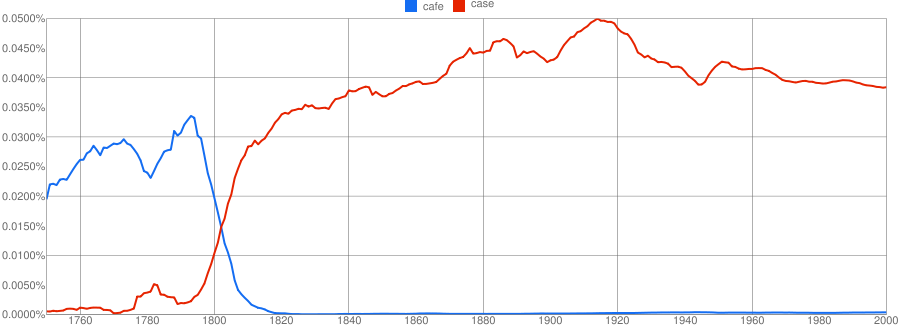
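The mechanism described in the quoted passage can be mimicked in a few lines. This is a toy sketch, not a model of Google's pipeline: it assumes the simplified rule stated above (long s, U+017F, everywhere except at the end of a word) and a hypothetical OCR step that always reads long s as f. Real printing conventions had further exceptions that it ignores.

```python
import re

def to_long_s(word: str) -> str:
    """Set a lowercase word in the simplified long-s style: every 's' that is
    followed by another letter becomes long s (U+017F); a word-final 's' stays round."""
    return re.sub(r"s(?=[a-z])", "\u017f", word)

def naive_ocr(printed: str) -> str:
    """Hypothetical OCR step that mistakes every long s for the letter f."""
    return printed.replace("\u017f", "f")

if __name__ == "__main__":
    for word in ["case", "same", "list", "sunk", "glass"]:
        printed = to_long_s(word)
        print(f"{word:>6} -> printed {printed:>6} -> OCR reads {naive_ocr(printed)}")
```

Run on case, same, list, and sunk, the two steps yield cafe, fame, lift, and funk, the same pairs charted in this post; "glass" shows a word-final s surviving as a round s.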

Here's a typical example, cafe vs. case:

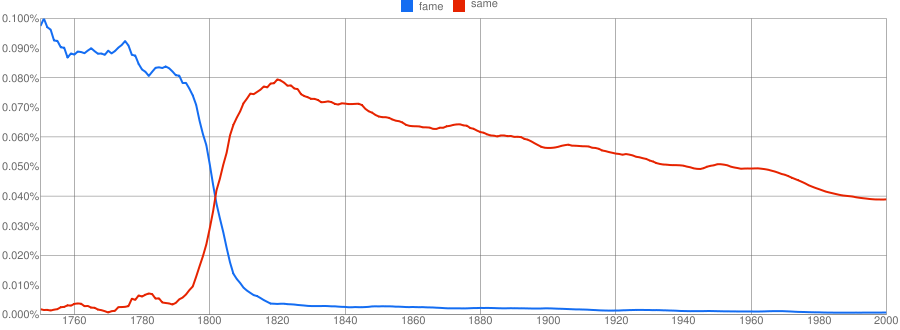

And fame vs. same:

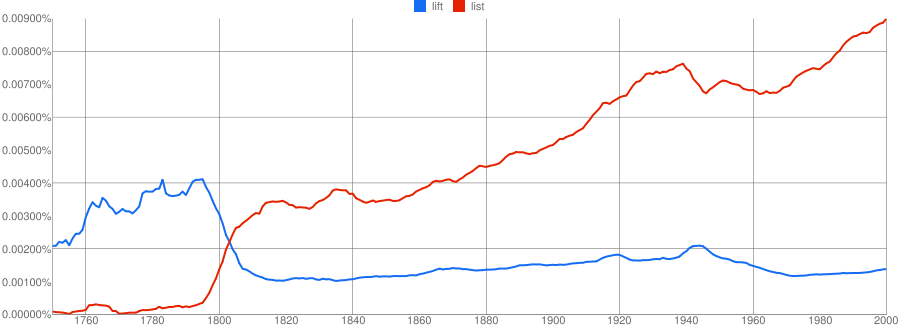

And lift vs. list:

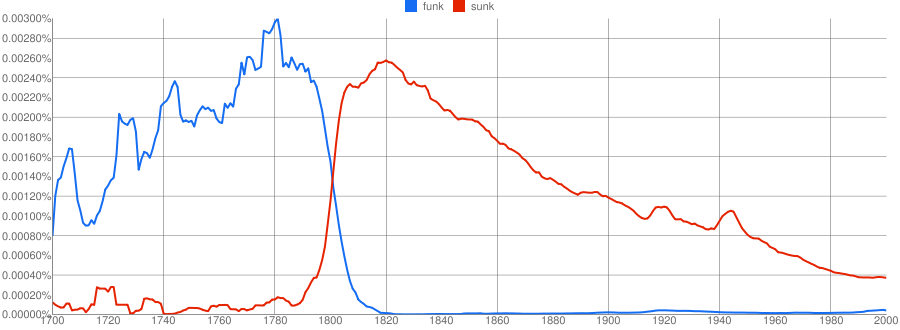

And my personal favorite, funk vs. sunk, showing the absolute peak of funkitude associated with the Siege of Yorktown:

(Coincidence? I think not.)

This s/f confusion, in itself, is a small glitch. Independent of any possible improvements in the back-end OCR programs at Google Books, it would be easy to use the techniques developed by David Yarowsky for word-sense disambiguation in order to clean up the s/f confusions in these texts.

It would be easy — if you had access to the underlying texts, not just to the time-functions of n-gram frequencies. In this case, I have little doubt that the folks at Google Books will take care of the problem. The issue is simple enough, and common enough, and embarrassing enough, to catch their attention and to be assigned an adequate allocation of effort.
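Here is roughly what such a cleanup could look like when the running text is available. It is a minimal sketch of a decision list in the style Yarowsky used for accent restoration and sense disambiguation, simplified: features are the neighboring words and a small window around the ambiguous token, each feature is scored by a smoothed log-likelihood ratio, and the strongest matching feature decides. The training contexts are invented for illustration; in practice one might harvest them from later printings where the spelling is unambiguous. None of this can be done with year-by-year n-gram totals, because the context of each occurrence is gone.

```python
import math
from collections import Counter, defaultdict

def features(tokens, i, window=3):
    """Context features for the ambiguous token at position i (the token itself is excluded)."""
    feats = set()
    if i > 0:
        feats.add("prev=" + tokens[i - 1])
    if i + 1 < len(tokens):
        feats.add("next=" + tokens[i + 1])
    for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
        if j != i:
            feats.add("near=" + tokens[j])
    return feats

def train_decision_list(examples, alpha=0.1):
    """examples: (tokens, index, label) triples covering exactly two readings.
    Returns (rules, default): rules are (strength, feature, label) sorted
    strongest first; default is the more frequent reading."""
    labels = Counter(label for _, _, label in examples)
    if len(labels) != 2:
        raise ValueError("expected exactly two readings")
    (a, _), (b, _) = labels.most_common(2)
    counts = defaultdict(Counter)
    for tokens, i, label in examples:
        for f in features(tokens, i):
            counts[f][label] += 1
    rules = []
    for f, c in counts.items():
        # Smoothed log-likelihood ratio; its sign says which reading f favors.
        llr = math.log((c[a] + alpha) / (c[b] + alpha))
        if llr == 0:
            continue  # no evidence either way
        rules.append((abs(llr), f, a if llr > 0 else b))
    rules.sort(reverse=True)
    return rules, a

def classify(rules, default, tokens, i):
    """Apply the first (strongest) rule whose feature occurs in this context."""
    feats = features(tokens, i)
    for _, f, label in rules:
        if f in feats:
            return label
    return default

# Invented training contexts; the index points at the ambiguous token.
TRAIN = [
    ("in that case the court".split(), 2, "case"),
    ("the case was heard".split(), 1, "case"),
    ("a strong case for reform".split(), 2, "case"),
    ("met at the cafe for coffee".split(), 3, "cafe"),
    ("the cafe served coffee".split(), 1, "cafe"),
]

rules, default = train_decision_list(TRAIN)
print(classify(rules, default, "in this cafe the court".split(), 2))
print(classify(rules, default, "we drank coffee at the cafe".split(), 5))
```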

But there's an indefinitely long list of cases of genuine sense disambiguation, where the same letter-string in the text corpus — even when correctly OCR'ed — corresponds to several sharply distinct senses, or to a cline of shades of meaning. In each case, someone with access to the underlying corpus can use well-studied techniques to put each instance in its proper semantic place, in a way that is consistent with the opinions of human annotators to roughly the same extent that they are consistent with one another. As things stand, however, no one except the "Google Books Team" has the needed access. And we shouldn't expect the Google Books team to want to do this for every sense distinction of possible interest to any scholar.

The same thing is true for the large class of cases where a given letter-string in the text corpus has an interesting range of different functions. For example, consider the issues about the role of the word-string "the United States" discussed here, here, here, and here. Does it get singular or plural agreement? Is it the subject of a sentence? Is it treated as an agent? Sometimes you can find reasonable n-gram proxies for such questions — but often you can't.
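To make the proxy idea concrete: with per-year counts for 4-grams, one could estimate the share of singular agreement after "the United States". The sketch below assumes a hypothetical dictionary layout and uses made-up counts. Its weakness is exactly the one raised above: an n-gram like "the United States are" also matches "the citizens of the United States are", where the verb agrees with "citizens", and the counts alone cannot tell the two apart.

```python
SINGULAR = ("is", "was", "has")
PLURAL = ("are", "were", "have")

def singular_share(counts, subject="the United States"):
    """counts: {(year, ngram): count}, e.g. (1850, "the United States are").
    Returns {year: fraction of singular verb forms among the listed continuations}."""
    share = {}
    for year in sorted({y for y, _ in counts}):
        sg = sum(counts.get((year, f"{subject} {v}"), 0) for v in SINGULAR)
        pl = sum(counts.get((year, f"{subject} {v}"), 0) for v in PLURAL)
        if sg + pl:
            share[year] = sg / (sg + pl)
    return share

# Made-up counts, for illustration only.
toy = {
    (1850, "the United States are"): 3,
    (1850, "the United States is"): 1,
    (1950, "the United States is"): 9,
    (1950, "the United States are"): 1,
}
print(singular_share(toy))
```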

There are also a large number of cases where you'd like to group word-strings into categories: dates, organizations, minerals, place names, novelists, etc., and then treat these categories (rather than words or word strings) as units of analysis. Again, there are well-known techniques for inducing such categories in text collections — but to use these techniques, you need to be able to have the text collection in hand so as to be able to run your algorithms over it.
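As a minimal illustration of category induction, the sketch below represents each word by counts of the words next to it in running text and ranks other words by cosine similarity; words that share contexts (here, place names versus mineral names) come out as neighbors. This is the basic distributional idea behind many such techniques, not any particular published algorithm, and the sentences are toy data. Very narrow contexts like these can be partly approximated from published n-gram tables, but wider windows, document boundaries, and metadata need the text collection itself.

```python
import math
from collections import Counter, defaultdict

def context_vectors(sentences, window=1):
    """Map each word to a Counter of the words within `window` positions of it."""
    vecs = defaultdict(Counter)
    for sentence in sentences:
        toks = sentence.lower().split()
        for i, w in enumerate(toks):
            for j in range(max(0, i - window), min(len(toks), i + window + 1)):
                if j != i:
                    vecs[w][toks[j]] += 1
    return vecs

def cosine(u, v):
    dot = sum(n * v[k] for k, n in u.items() if k in v)
    norm_u = math.sqrt(sum(n * n for n in u.values()))
    norm_v = math.sqrt(sum(n * n for n in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def nearest(word, vecs, k=3):
    """The k words whose context vectors are most similar to `word`'s."""
    target = vecs.get(word, Counter())
    scored = [(cosine(target, vec), other) for other, vec in vecs.items() if other != word]
    return [other for _, other in sorted(scored, reverse=True)[:k]]

SENTENCES = [
    "he sailed from boston to london",
    "she sailed from paris to boston",
    "they travelled from london to paris",
    "quartz and feldspar are minerals",
    "mica and quartz are common",
]

vecs = context_vectors(SENTENCES)
print(nearest("boston", vecs, k=2))
print(nearest("quartz", vecs, k=1))
```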

Many — maybe most — questions about historical texts are like these last few examples: relatively easy to answer if you have a corpus in hand, and not addressed very well (if at all) by a collection of "culturomic trajectories", defined as the year-by-year time-functions of common word sequences. In particular, nearly all questions about the history of the English language fall beyond the grasp of time-functions of n-gram frequencies. This is not to deny the interest and value of such time functions. It's just that they're not nearly enough.
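For concreteness, here is everything a "culturomic trajectory" amounts to, as a sketch under an assumed data layout (a dictionary keyed by n-gram and year, plus per-year totals; this is not the format of Google's downloadable files). The optional smoothing is a centered moving average, similar in spirit to the viewer's smoothing setting. Note what the inputs leave out: which book each occurrence came from, its genre, and its surrounding sentence.

```python
def trajectory(ngram_counts, totals, ngram):
    """Relative frequency of `ngram` per year.
    ngram_counts: {(ngram, year): count}; totals: {year: total n-grams of that length}."""
    return {year: ngram_counts.get((ngram, year), 0) / total
            for year, total in sorted(totals.items()) if total > 0}

def smooth(traj, k=1):
    """Centered moving average over +/- k years, using only years present."""
    out = {}
    for year in sorted(traj):
        window = [traj[y] for y in range(year - k, year + k + 1) if y in traj]
        out[year] = sum(window) / len(window)
    return out

# Made-up counts, for illustration only.
counts = {("telescope", 1610): 2, ("telescope", 1611): 6}
totals = {1610: 1000, 1611: 2000, 1612: 0}
print(trajectory(counts, totals, "telescope"))
```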

What are the prospects here? The portion of the Google Books corpus before 1922 — which is more than enough for many historical studies — is not encumbered by copyright. However, it belongs to Google, and it is arguably unfair to ask a company to pay for a large database creation project and then to give it away. Nevertheless, this leaves the rest of us in an uncertain situation. Here's the end of my Q&A with the previously-mentioned reporter:

Q: Lastly, as a practical matter, does the use of the Google book library pose any particular challenges to researchers due to copyright issues, the nature of the digitization process, and such? Broadly, what do you think of Google's ambitions to digitize the world's libraries?

A: Google is making an important contribution to the creation of the archives that will make new kinds of work possible. For that, the company deserves everyone's thanks.

But there is a potential problem. As it stands, outside scholarly access to this historical archive will be limited to tracking the frequency of words and word-strings (what they call in the trade "n-grams"). This is useful for addressing some questions, but most questions will require other kinds of processing, which are not possible without having the full underlying archive in your (digital) hands. For the material before 1922, there is no copyright issue. The only barrier is Google's competitive advantage.

This puts the rest of us in a difficult position. Given Google's large, well-run and successful effort to digitize these historical collections, for which the economic returns are fairly small on a per-book basis, it's unlikely that anyone else will duplicate their efforts in the visible future. So we're in the situation that would have existed if the Human Genome Project had been entirely private, rather than shared.

In this analogy, the access to "culturomic trajectories" to be made available at culturomics.org might correspond to information about the relative frequencies of nucleotide polymorphisms across individuals, without access to the underlying genomes.

This is not a wonderful analogy, for various reasons, though maybe it helps to make the point.

The Science paper says that "Culturomics is the application of high-throughput data collection and analysis to the study of human culture". But as long as the historical text corpus itself remains behind a veil at Google Books, then "culturomics" will be restricted to a very small corner of that definition, unless and until the scholarly community can reproduce an open version of the underlying collection of historical texts.

[Full disclosure — Mr. Holtz calls me "a computational linguist at the University of Pennsylvania, who wasn't involved in the project", and this is basically true. However, I discussed the project with some of the authors in a meeting in Cambridge a couple of years ago, as the project was getting underway, and I've corresponded and talked with them from time to time since.

A quantitative caveat — Mr. Holtz quotes me correctly as using the phrase "on a scale a million times greater than in the past". Although that's true as a statement about the scale of linguistic data in general, in this particular case the proximate point of comparison would be Mark Davies' Corpus of Historical American English, which is only about a thousand times smaller. Of course, if you go farther back into the past or forward into the future, the multiplier gets bigger.]
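The arithmetic behind "about a thousand times", taking the 500-billion-word figure for the Google collection cited elsewhere in this thread and assuming roughly 400 million words for COHA (an outside figure, not stated in this post):

$$
\frac{5 \times 10^{11}\ \text{words}}{4 \times 10^{8}\ \text{words}} = 1.25 \times 10^{3}
$$

The 500 billion figure covers all languages; the English-language portion alone is smaller, so the ratio for English would be lower.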

[Update #2: Mark Davies makes the case that his Corpus of Historical American English gives essentially the same results for many searches, is more reliable in other cases, and allows a much wider variety of search types, including useful genre classifications, collocates, part-of-speech searches, etc. I'm a big fan of Mark's work, and these are all good points. What I'd like to see is for Mark to have access to the full-text data behind the Google lists, so that he could give us the best of both worlds.]

Leonardo Boiko said,

December 17, 2010 @ 4:13 pm

The handling of old typography in their OCR is definitely subpar. For another example, try searching old books for the word “Internet” and click a few examples at random. Then notice this particular search (1500–1700) returns no fewer than 60 pages of results like those (mostly things like interpret, intorquet, interfici, tutemet, ingentes &c. &c.)

[(myl) Although I don't have any numbers, my impression is that the rate of OCR errors in Google Books is much lower than the rate I see in OCR of similar texts from other sources. So, errors yes, "subpar" definitely not.]

Reliable support for things like ſ, þ, or punctuation (e.g. ’ vs ', or ‐ – —) would make the tool interesting for typographers as well.
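One reason such support is fragile: a standard text-normalization step erases the distinction. Under Unicode NFKC normalization, long s (U+017F) is a compatibility variant of ordinary s, while þ and the typographic apostrophe are left alone. Whether Google's pipeline normalizes this way is not known from this thread; the sketch below only shows the Unicode behavior in Python's standard library and one hypothetical way to keep both forms searchable.

```python
import unicodedata

def index_keys(token: str) -> list[str]:
    """Hypothetical indexing rule: store the exact printed form, plus the
    NFKC-folded form when it differs, so searches for either one succeed."""
    folded = unicodedata.normalize("NFKC", token)
    return [token] if folded == token else [token, folded]

for tok in ["\u017fame", "same", "\u00fe", "it\u2019s"]:
    print(repr(tok), index_keys(tok))
```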

Twitter Trackbacks for Language Log » More on “culturomics” [upenn.edu] on Topsy.com said,

December 17, 2010 @ 4:14 pm

[…] Language Log » More on “culturomics” languagelog.ldc.upenn.edu/nll/?p=2848 December 17, 2010 @ 3:39 pm · Filed by Mark Liberman under Language and culture, Semantics […]

Dmitri said,

December 17, 2010 @ 4:28 pm

My enthusiasm for the project was somewhat dampened when I noticed that "Derrida" is much more frequent than "Beatles" in their corpus — an effect which I suspect reveals a bias toward small-readership academic publications. (An old-fashioned Google search gives many more "Beatles" hits than "Derrida" hits.)

[(myl) I don't think this is true… Oh wait, yes it is.… But presumably this is a genuine fact about books as opposed to media outlets.

Again, with full access to the corpus, you could classify the texts and compare different categories.

And it's interesting to see that the "theory" wave apparently peaked in 1997-98. (Or else their selection of relevant texts did…)]

When I think about using this as a scholar, this seems to be a real obstacle. It's amusing to chart the relative frequency of "Debussy," "Schoenberg," and "Stravinsky," but the resulting graph leads to a conclusion I don't trust, one which is again inconsistent with old-fashioned Google searches.

All of which just underscores the point that it would be great to have more control over the underlying data.

[(myl) Absolutely.]
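A sketch of the kind of comparison full access would allow, assuming each text carries a genre label (the labels and texts here are hypothetical): frequencies are normalized per million tokens within each genre, so a sample heavy in academic books cannot masquerade as a general trend. Tokenization is naive whitespace splitting.

```python
from collections import Counter

def per_million_by_genre(docs, word):
    """docs: iterable of (genre, text). Returns {genre: occurrences of `word`
    per million whitespace tokens in that genre}."""
    hits, totals = Counter(), Counter()
    target = word.lower()
    for genre, text in docs:
        tokens = text.lower().split()
        totals[genre] += len(tokens)
        hits[genre] += tokens.count(target)
    return {g: 1e6 * hits[g] / n for g, n in totals.items() if n}

# Hypothetical labeled texts, for illustration only.
docs = [
    ("academic", "derrida and the text"),
    ("academic", "after derrida"),
    ("popular", "the beatles and derrida and the beatles"),
]
for w in ("derrida", "beatles"):
    print(w, per_million_by_genre(docs, w))
```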

Dan T. said,

December 17, 2010 @ 8:03 pm

Some books are also stored with incorrect dates, leading to bogus hits for things from before they actually existed.

Neal Goldfarb said,

December 17, 2010 @ 8:36 pm

My (nonexpert) guess is that the unlicensed use of a copyrighted work in a corpus that has the same sort of functionality as COCA would qualify as fair use and therefore wouldn't infringe the copyright. But the problem is that for most institutions that have the resources to create a big corpus, the risk of litigation will be too big for them to go ahead based on just a lawyer's opinion about fair use. A big corpus will contain a lot of potential plaintiffs.

Bill Benzon said,

December 17, 2010 @ 9:00 pm

So, we've got another case where copyright reform is badly needed.

[(myl) A large properly-curated corpus extended to 1922 would be a very big step forward, and would not pose any copyright problems (at least in the U.S.).]

Leonardo Boiko said,

December 18, 2010 @ 4:09 am

I think I was (unconsciously) comparing the OCR to manual typing. The software is subpar when compared to the human standard. Of course, a corpus of this size would be impossible to type and so the only fair comparison is to other OCR software. My bad.

Dan T. said,

December 18, 2010 @ 7:56 am

One might get human assistance in improving the character reading through such things as Mechanical Turk or reCAPTCHA.

Jane B. said,

December 18, 2010 @ 8:30 am

There's also a problem w/ multiple copies of the same title, from different libraries & in various editions, and listings of rare book sales catalogues being included … fun but sloppy!

Moacir said,

December 18, 2010 @ 9:29 am

Mark Davies has already put up a comparison between COHA and Google's effort:

http://corpus.byu.edu/coha/compare-culturomics.asp

I was frustrated to see people gush all over this, when COHA has been available for a while now with a larger feature set. From my calculations, which may be wrong, Google's jump from 400k titles to 5b has barely any effect on the margin of error :)

Basically, I'm glad I remembered to come to LL to see that there would be like/right thinking people ready to view this achievement with a certain amount of distance, context, and apprehension.

Moacir said,

December 18, 2010 @ 9:41 am

My mistake… The jump is to 500b, not 5b.
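Moacir's margin-of-error point can be checked under the simplest possible model, treating every token as an independent draw (an idealization: real occurrences cluster within books, so true uncertainty is larger). The corpus sizes are assumptions: about 400 million tokens for COHA and the 500 billion mentioned above for Google. Sampling error shrinks with the square root of corpus size, so the larger collection cuts it by a factor of about 35; but for a word at 10 per million, the relative error at 400 million tokens is already only about 1.6%, so the gain matters mainly for rare words and thin yearly slices.

```python
import math

def relative_standard_error(per_million: float, corpus_tokens: float) -> float:
    """Standard error of an estimated word frequency divided by the frequency,
    under an independent-token (binomial) model."""
    p = per_million / 1e6
    return math.sqrt(p * (1 - p) / corpus_tokens) / p

for label, n in [("COHA-sized (assumed 4e8 tokens)", 4e8),
                 ("Google-sized (5e11 tokens)", 5e11)]:
    print(f"{label}: {relative_standard_error(10, n):.4%}")
```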

Natalie Binder said,

December 18, 2010 @ 10:07 am

I am not as sanguine about the OCR and metadata errors in Google Books. I have digitized books before for academic projects and have always found OCR to be subpar. When I say "subpar," I don't mean "bad in comparison to other OCR software." I mean that OCR in general is not accurate enough to support robust academic research. The ngrams team claims (in a brief aside) that most of the books they're using have a high accuracy score, but I'm not sure how they establish that. Is a human editor going through and manually checking a random sample of books? Are computers checking each other? Each of those methods has serious problems.

The Language Log itself has gone in-depth on Google Books' metadata problems, which also affect the accuracy of ngrams. I won't repeat all that here, but unless Google has made incredible improvements in OCR (which would be a much bigger deal than ngrams), those problems are still here and still serious. Furthermore, you can't expect to crowdsource metadata errors away. It's a nice idea, but there's a reason library cataloging is a specialized professional field. There is a better opportunity for laypeople to correct OCR errors (see Google's reCAPTCHA for an example), but Google would have to open up the collection.

I don't want to be the "culturenomics" Eeyore; I see a lot of potential in this type of work. The fact that we're even taking a step in this direction is huge. We're just not there yet.

I'm doing a series on my blog about ngrams. Yesterday I blogged about OCR and metadata: http://thebinderblog.com/2010/12/17/googles-word-engine-isnt-ready-for-prime-time/

Moacir said,

December 18, 2010 @ 1:23 pm

I've started having a blast playing with the "Russian" ngrams part of the googlelab, if just to see the extent of OCR hilarity that ensues.

To its credit, the OCR includes some handling of the 1917 spelling reform. For example, searches for "Россия" include hits on "Россiя."

I then decided to search on "бес," — devil, imp — which, from my understanding, was rendered "бѣсъ" pre-reform. An interesting mess ensued:

* "бес" includes hits for "все" ("everything")

* "бес" is a prefix ("mal-") as well as a word, and every time it's followed by OCR gibberish, Google counts it as a hit of the word for devil

* Forget putting "ѣ" in your search term. Google refuses it. In OCR, it often appears as "±"

* Finally, I noticed that some of the books in the "Russian" corpus are, wait for it, NOT IN RUSSIAN. One hit came on "бес]еди," which turns out to be "бесjеди," which turns out to be Serbian.
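A search tool could sidestep the refusal of ѣ by folding both queries and OCR'd text to modern spelling before matching. This is a simplified sketch, not Google's method: it maps ѣ to е, і to и, ѳ to ф, ѵ to и, and drops the hard sign ъ at the end of words. It ignores other reform changes such as adjective endings, and it would not catch OCR lookalikes such as a Latin i standing in for Cyrillic і.

```python
import re

# Simplified letter mapping from pre-reform to modern Russian spelling.
PRE_REFORM = str.maketrans({
    "\u0463": "\u0435", "\u0462": "\u0415",  # yat ѣ/Ѣ -> е/Е
    "\u0456": "\u0438", "\u0406": "\u0418",  # decimal i і/І -> и/И
    "\u0473": "\u0444", "\u0472": "\u0424",  # fita ѳ/Ѳ -> ф/Ф
    "\u0475": "\u0438", "\u0474": "\u0418",  # izhitsa ѵ/Ѵ -> и/И
})

# A hard sign (ъ/Ъ) not followed by another Cyrillic letter is word-final.
FINAL_HARD_SIGN = re.compile(r"[\u044a\u042a](?![\u0430-\u044f\u0451\u0410-\u042f\u0401])")

def modernize(text: str) -> str:
    """Fold pre-reform spelling to modern spelling (simplified rules only)."""
    return FINAL_HARD_SIGN.sub("", text.translate(PRE_REFORM))

for old in ["бѣсъ", "Росс\u0456я", "подъѣздъ"]:
    print(old, "->", modernize(old))
```

Word-internal ъ, as in подъезд, is kept because a Cyrillic letter follows it.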

GeorgeW said,

December 18, 2010 @ 6:38 pm

@Jane B.: If there are multiple copies of the same text, wouldn't a single token get multiple counts?
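Yes: unless duplicates are collapsed somewhere upstream, each copy adds its tokens again, and this thread doesn't say whether Google does that. A minimal sketch of exact-duplicate removal, hashing each text after normalizing case and whitespace (a hypothetical criterion). It only catches copies whose OCR output is identical; different scans or editions with differing OCR errors would need near-duplicate detection such as shingling.

```python
import hashlib
import re
from collections import Counter

def fingerprint(text: str) -> str:
    """Hash of the text with case and whitespace normalized."""
    norm = re.sub(r"\s+", " ", text.lower()).strip()
    return hashlib.sha1(norm.encode("utf-8")).hexdigest()

def word_counts(texts, dedupe=True):
    """Whitespace-token counts, optionally skipping texts already seen."""
    seen, counts = set(), Counter()
    for text in texts:
        key = fingerprint(text)
        if dedupe and key in seen:
            continue
        seen.add(key)
        counts.update(text.lower().split())
    return counts

texts = ["The long s", "the  long   s", "A different book"]
print(word_counts(texts, dedupe=False)["long"], word_counts(texts)["long"])
```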

Yerushalmi said,

December 19, 2010 @ 3:34 am

I noticed something odd in the Google search results. Try searching for "Star Wars" and "Star Trek" from 1800 to 1960. There are a couple of bumps in which they appear long before they were invented. This would not be surprising (it's not weird that occasionally the word "star" randomly happened to appear immediately before the word "wars" in a text) if not for the fact that their frequencies track each other so closely. I think a number of books are misallocated in the timeline here.

John Cowan said,

December 19, 2010 @ 3:55 pm

Yerushalmi (and other commentators): Again, it's unsafe to assume that these anomalies are metadata errors rather than OCR errors. Check the facts! Do a Google Books search and examine the book yourself.

Rosie Redfield said,

December 19, 2010 @ 4:25 pm

The genome analogy is very apt. The flood of scientific understanding that has come from genome-project data was only possible because we have complete access to the raw data. On their own the frequencies of the base pairs at different positions have very little value.

Around the Web – December 20th, 2010 | Gene Expression | Discover Magazine said,

December 20, 2010 @ 1:28 pm

[…] More on "culturomics". Also see the #ngrams hash-tag. […]

On the imperfection of the Google dataset, and imperfection in general « The Stone and the Shell said,

December 20, 2010 @ 3:56 pm

[…] by Google's decision to strip out all information about context of original occurrence, as Mark Liberman has noted. If researchers had unfettered access to the full text of original works, we could draw much more […]

The Pre-Tarantinian History of “Get Medieval” (and other delights of Google’s Ngram Reader) — Got Medieval said,

December 20, 2010 @ 8:29 pm

[…] graph that plots the search term's frequency in the corpus against the date. Because of the vagaries of OCR, the search engine's inability to track declension, conjugation, and so on, and the selection bias of the texts that get scanned by Google, the graphs produced by the Ngram […]

Ben Schmidt said,

December 20, 2010 @ 11:44 pm

I thought the Google scanning settlements left libraries with copies of the scanned pdfs and OCR. So if they combine, there can be full access to the texts without Google mediation. And that seems to be what HathiTrust is. So the issue of Google lock-in for the pre-copyright texts isn't overwhelming, if the research libraries are willing to give away some text files for free.

[(myl) We can hope. So far, more is available from Google than from Hathi.]

Terry Collmann said,

December 21, 2010 @ 6:30 pm

My impression as a regular user of Google Books' "clip" function, which enables users to copy and paste text out of the scanned versions of old books and into a word processor programme, is that while it used to struggle with the "long s" in 18th century texts, the service is pretty nearly 100 per cent accurate now in rendering a long s when copied-and-pasted as an s and not an f. Indeed, sometimes it even hypercorrects and turns a real f into an s. So Google certainly knows how to distinguish between suck and fuck: it would seem that the tweaks applied to the "clip" OCR software were not applied to the Ngram OCR software.

ʘ‿ʘ « company of three, black peppermint tea said,

December 22, 2010 @ 6:46 am

[…] [article] More on "culturomics" For a small but amusing example of the kind of problem that requires more than "culturomic trajectories", take a look at Giles Thomas's post about the systematic OCR substitution of f for long-s: […]

Jon Proppe said,

December 22, 2010 @ 9:56 am

Being an Icelander, one of my first tries on the Ngram site was a search for "Iceland" through the English corpus. This returned some fascinating spikes and I clicked through to examine the sources. It turns out that the spikes result from the OCR software misreading Ireland as Iceland (also, incidentally, a common problem at post offices around the world). The fact that the misreadings form distinct spikes would seem to indicate that they are related to some aspect of period typography or printing practice and perhaps one day when I have nothing better to do I will get around to investigating this further.

Wednesday Round Up #134 | Neuroanthropology said,

December 22, 2010 @ 11:24 am

[…] Liberman, More on "Culturomics" *A post that praises the database, but also points out the limitations of only looking at the […]

Buffalo buffalo buffalo buffalo buffalo! « by Erin Ptah said,

February 12, 2011 @ 1:24 pm

[…] Google, in its ongoing quest to Organize Everything Ever, has a database of 500 billion words published in six languages between 1500 and 2008. Even its flaws turn out to be interesting. […]

Culturomics and Google Books – Fully (sic) said,

February 17, 2011 @ 8:00 pm

[…] Several prominent linguists have already discussed these points in detail, e.g. Geoffrey Nunberg, Mark Liberman and David […]

The Future Tense | Paul Farquhar said,

February 25, 2011 @ 6:10 pm

[…] the vantage point of Mark Liberman, a linguist at the University of Pennsylvania, the 2010s are shaping up to be like the 1610s. […]

Ngram | Colorless Green Ideas said,

March 21, 2011 @ 7:23 am

[…] Language Log Blog has a very good overview of the good points and concerns with it. There is also a lot of good links […]

Bookworm | English Teaching Daily said,

September 29, 2011 @ 4:16 am

[…] the Google Ngram Viewer came out, I tempered my enthusiastic praise with a complaint ("More on 'culturomics'", […]

The Grammar Lab | Gender Pronouns in the News said,

August 11, 2012 @ 10:04 pm

[…] The shortcomings of the article and the corpus were quickly addressed by linguists like Mark Liberman and Geoff […]