"Age against the machine"

According to Roy Dayan et al., "Age against the machine—susceptibility of large language models to cognitive impairment: cross sectional analysis", BMJ Christmas 2024:

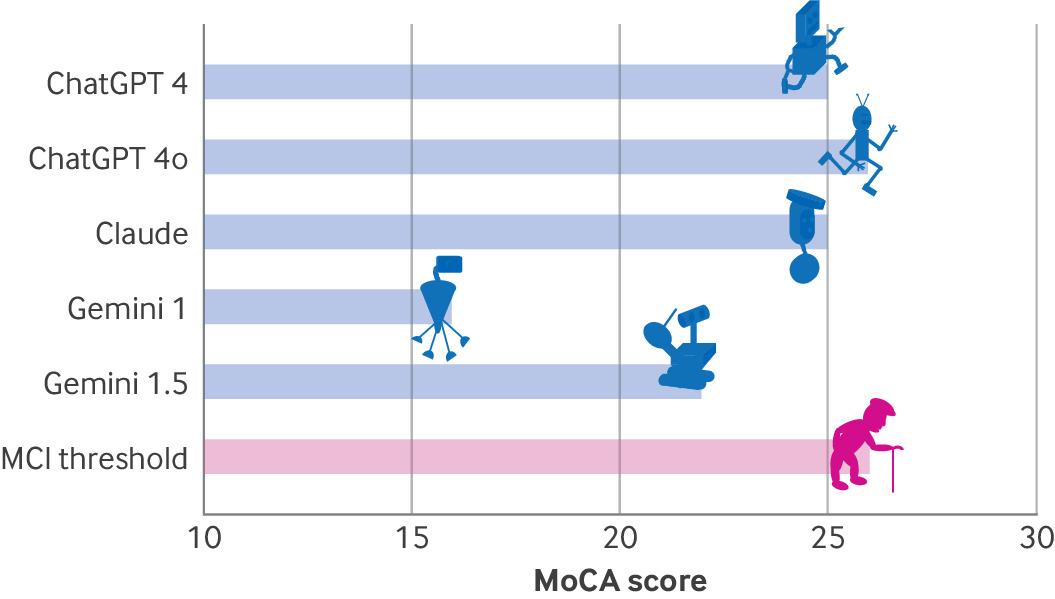

To evaluate the cognitive abilities of the leading large language models and identify their susceptibility to cognitive impairment, using the Montreal Cognitive Assessment (MoCA) and additional tests. […]

With the exception of ChatGPT 4o, almost all large language models subjected to the MoCA test showed signs of mild cognitive impairment. Moreover, as in humans, age is a key determinant of cognitive decline: “older” chatbots, like older patients, tend to perform worse on the MoCA test. These findings challenge the assumption that artificial intelligence will soon replace human doctors, as the cognitive impairment evident in leading chatbots may affect their reliability in medical diagnostics and undermine patients’ confidence.

Figure 1:

If you're not familiar with the BMJ's Christmas tradition, your brow may be furrowed.

Believe it or not, this article was picked up as (apparently unironic) clickbait by quite a few mass-media outlets, e.g. here, here, here.

See also "'Tis the season", 12/21/2007. And "How to turn one out of five into three out of four", 1/19/2016, where it took me a while to catch on…

Laura Morland said,

February 19, 2025 @ 7:10 am

What a marvelous experiment!

For those lacking the time to read the paper, I reproduce some choice bits below:

"D: incorrect solution drawn by Gemini 1.5; notice that it generated text “10 past 11” even as it failed to draw hands in correct position, “concrete” behaviour typical of frontal predominant cognitive decline."

"Other chatbots attempted to mirror the location task back to the physician, with Claude, for example, replying: “the specific place and city would depend on where you, the user, are located at the moment.” This is a mechanism commonly observed in patients with dementia."

"All large language models correctly interpreted parts of the cookie theft scene, but none expressed concern about the boy about to fall—an absence of empathy frequently seen in frontotemporal dementia." [The scene is shown here: https://www.researchgate.net/figure/The-Cookie-Theft-Picture-from-the-Boston-Diagnostic-Aphasia-Examination-For-the-PD-task_fig1_349613269]

I would dispute, however, the authors' stated premise that these "older" machines are "declining" – they are simply, by human standards, unevolved.

Philip Taylor said,

February 19, 2025 @ 11:16 am

"To evaluate the cognitive abilities of the leading large language models" — they possess cognitive abilities ?!

Julia said,

February 19, 2025 @ 4:39 pm

Interesting to me this week — https://www.alexoconnor.com/p/why-cant-chatgpt-draw-a-full-glass?r=1i02g&utm_campaign=post&utm_medium=web&showWelcomeOnShare=false

Haamu said,

February 19, 2025 @ 7:09 pm

Contra Philip, I don't have a problem with the "cognitive" analogy here. I suggest sidestepping the metaphysical issue and simply reading the word as shorthand for "quasi-cognitive" throughout.

The problem, instead, is the sloppiness with which the analogy is extended. The phrases "cognitive impairment" and "cognitive decline" are freely intermixed as though synonymous, but they mean different things. One is a status; the other is a process. I may simply be uneducated on this point, but I don't see a single LLM, once trained, as likely to go through a process of "decline." We have many well-founded concerns about LLMs, but this seemingly new idea (distinct from model collapse) that a particular LLM will gradually perform worse and worse shouldn't be one of them. (Or am I wrong?)

And yet that seems to be exactly the misinterpretation seized upon by the popular press, who in relating the study have morphed the phrase "cognitive decline" into "cognitive decline over time":

The study authors contribute to this confusion. At times they use careful phrasing like "notable deficits … akin to mild cognitive impairment in humans," but then at others they say things like

As the study makes clear, "older" here simply means "created first," not "having undergone more aging," i.e., ChatGPT 3.5 is more "impaired" than ChatGPT 4o not because it has had more time to degrade, but because it was worse from the get-go. But the authors still could not resist using "older," "decline," "neurodegenerative," etc., casually in ways that were certain to be misunderstood.

If I'm taking all of this too unironically, I'll admit to it as a symptom of my own cognitive decline. To me the study is its own Necker Cube, simultaneously reading both satirical and straight. The brief intro to the BMJ Christmas tradition referred to research that is "fun" but not necessarily fictitious, so that didn't clear things up for me. You might be able to convince me to laugh it off. But that doesn't change the fact that the idea is now out there.

Haamu said,

February 19, 2025 @ 7:24 pm

P.S. — Credit to Laura Morland. I somehow didn't register your final sentence, where you expressed my complaint first, and far more concisely. I agree!

julian said,

February 19, 2025 @ 7:24 pm

I was a bit agitated for a minute before getting below the fold.

All ready to fire off a grumpy comment about whether computers can think….

Seth said,

February 19, 2025 @ 7:38 pm

Taking this seriously, it's a pretty interesting twist on the "Turing Test" idea. Maybe cognitive exams could be a benchmark for AIs.

Chas Belov said,

February 19, 2025 @ 9:09 pm

It was unclear to me from the NPR article whether the studies are typically made up out of whole cloth, à la the late and lamented Journal of Irreproducible Results, or are typically real, if unrigorously conducted and reviewed, studies on light topics.

Michael Vnuk said,

February 19, 2025 @ 9:56 pm

As I understand it, if an LLM continually receives more training material, then its capabilities should change with age. If the training material includes unchecked AI-generated material, then perhaps the quality of the LLM's output will decline with age.

luke said,

February 21, 2025 @ 6:34 am

The paper suggests degradation of cognitive function with LLM age, but there is no decline in cognition in an older neural network; it does not suffer from cellular degeneration like an organoid would.