The history of "artificial intelligence"

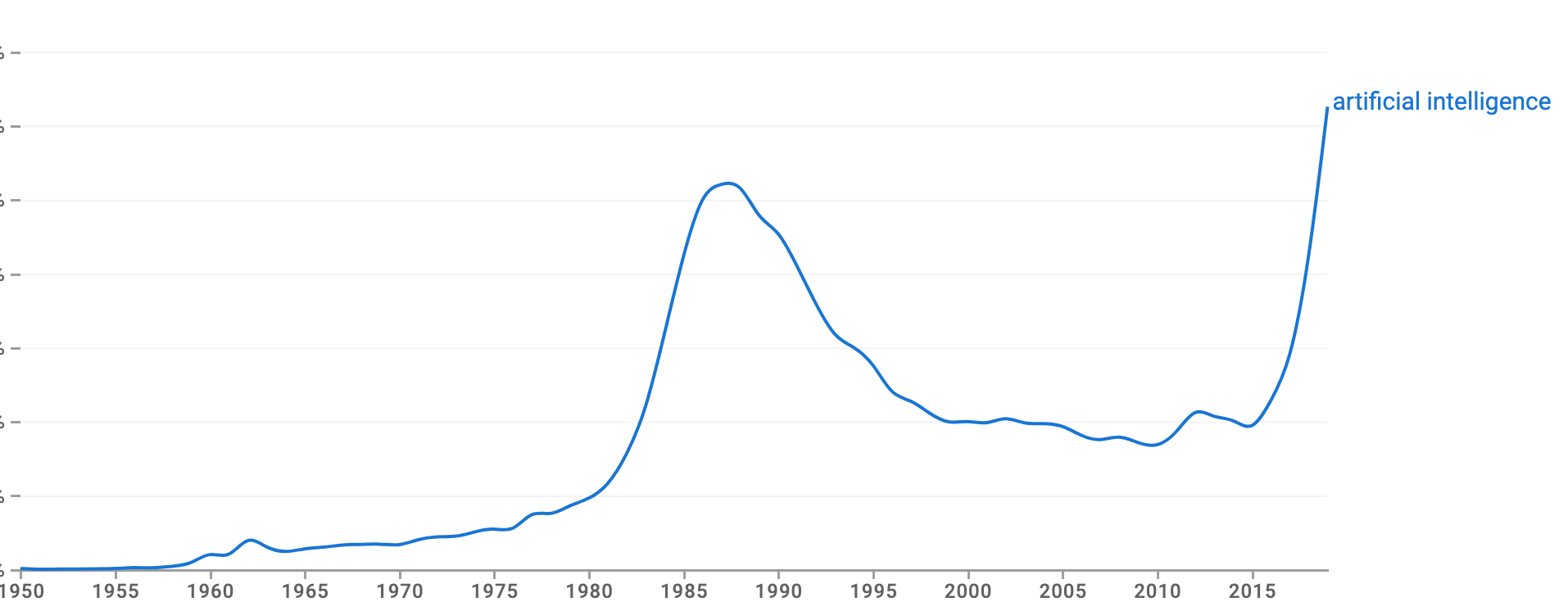

The Google Books ngram plot for "artificial intelligence" (above) offers a graph of AI's culturomics.

According to the OED, the first use of the term artificial intelligence was in a 13-page grant application by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, "A proposal for the Dartmouth summer research project on artificial intelligence", written in the summer of 1955:

We propose that a 2 month, 10 man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.

The proposal uses the phrase repeatedly without quotation marks, capitalization, or any other indication of its status as a neologism, suggesting that it was in common conversational usage before that (apparently) first publication, and/or that the authors thought its compositional meaning was obvious.

There's no question that the concept had been under discussion for a decade or so at that point, with analogous ideas to be found hundreds of years earlier. And there are older uses of the phrase "artificial intelligence", in interestingly divergent contexts, also going back hundreds of years.

That 1955 proposal is worth reading — it includes a list of seven "aspects of the artificial intelligence program", and a page or two from each of the four authors giving their individual "Proposal for Research". They ask for $13,500 (though it's not clear to me who the proposed funder was).

Their "conjecture", that "every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it", is characteristic of the era of "classical AI", which Ken Church and I called "AI as applied logic". Still, the document contains the seeds of the other approaches (AI as applied statistics, AI as applied neural nets), which again is consistent with our view that all of these ideas have been in play together from the start.

There's a clear presentation of the "artificial intelligence" concept (though without the cited phrase) in Alan Turing's famous 1950 Mind paper, "Computing Machinery and Intelligence", which begins:

I PROPOSE to consider the question, ‘Can machines think?’ This should begin with definitions of the meaning of the terms ‘machine’ and ‘think’. The definitions might be framed so as to reflect so far as possible the normal use of the words, but this attitude is dangerous. If the meaning of the words ‘machine’ and ‘think’ are to be found by examining how they are commonly used it is difficult to escape the conclusion that the meaning and the answer to the question, ‘Can machines think?’ is to be sought in a statistical survey such as a Gallup poll. But this is absurd. Instead of attempting such a definition I shall replace the question by another, which is closely related to it and is expressed in relatively unambiguous words.

He then proposes the first version of the still-famous Turing Test, though this first version of the "imitation game" involves a two-layer gender-identification problem that today's readers are likely to find strange (detecting a machine pretending to be a man pretending to be a woman):

It is played with three people, a man (A), a woman (B), and an interrogator (C) who may be of either sex. The interrogator stays in a room apart from the other two. The object of the game for the interrogator is to determine which of the other two is the man and which is the woman. He knows them by labels X and Y, and at the end of the game he says either ‘X is A and Y is B’ or ‘X is B and Y is A’. […]

In order that tones of voice may not help the interrogator the answers should be written, or better still, typewritten. The ideal arrangement is to have a teleprinter communicating between the two rooms. Alternatively the question and answers can be repeated by an intermediary. The object of the game for the third player (B) is to help the interrogator. The best strategy for her is probably to give truthful answers. She can add such things as ‘I am the woman, don’t listen to him!’ to her answers, but it will avail nothing as the man can make similar remarks.

We now ask the question, ‘What will happen when a machine takes the part of A in this game?’ Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman? These questions replace our original, ‘Can machines think?’

Wikipedia's Turing Test article notes that Turing "had been running the notion of machine intelligence since at least 1941". The article also cites philosophical precursors in Descartes (1637), Diderot (1746), and Ayer (1936), and fictional precursors from Pygmalion to Pinocchio, though it fails to mention von Kempelen's Mechanical Turk or Leibniz's lingua generalis.

Meanwhile, there's an earlier history of the phrase "artificial intelligence", which takes us on a tour of several different senses of the words artificial and intelligence.

Following Wittgenstein's dictum that Etymology is Destiny, artificial can be traced to Latin artificiālis via artificium ("skill") from ars ("skill") and facere ("to make"); and intelligence, skipping a few steps, can be traced to Latin intellego from inter ("between") + legō ("choose"). I've selected a few examples from this tour below. They don't tell us much about post-1955 AI; they just illustrate how the meanings of words and phrases change. (And it might be interesting to see what various LLMs make of them.)

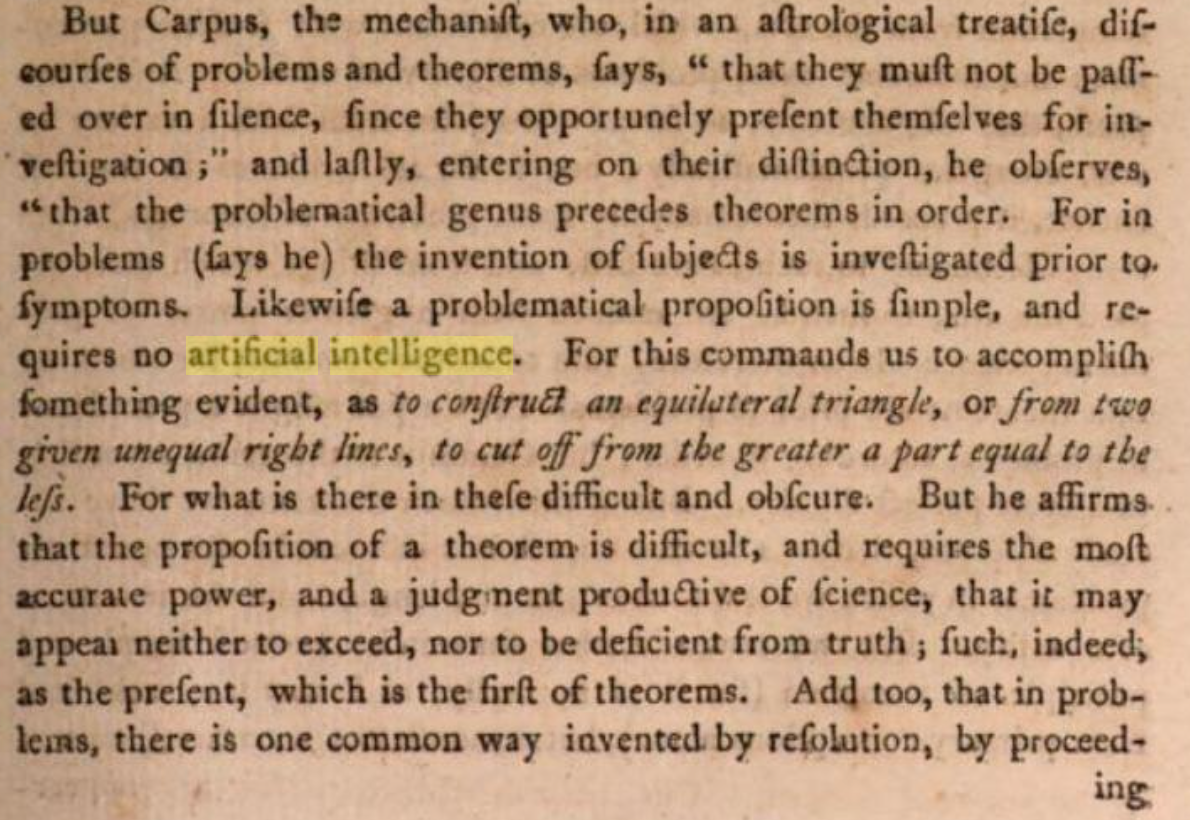

We can find the phrase "artificial intelligence" in the 1792 publication of The Philosophical and Mathematical Commentaries on the First Book of Euclid's Elements, to which are Added, a History of the Restoration of Platonic Theology, by the Latter Platonists; and a Translation from the Greek of Proclus's Theological Elements:

This citation seems to involve a sense of artificial in the OED's (obsolete) group III, with a gloss like "displaying technical skill; expert, ingenious"; and intelligence in the sense of "the faculty of understanding". So the meaning of the phrase artificial intelligence becomes something like "expert understanding", and the author's point is that a problem that "commands us to accomplish something evident" "is simple, and requires no artificial intelligence", i.e. no expert understanding.

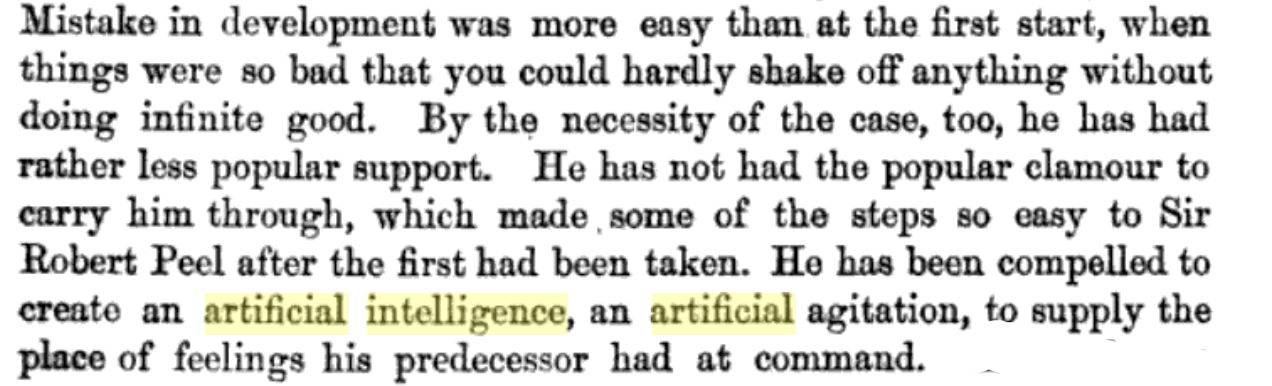

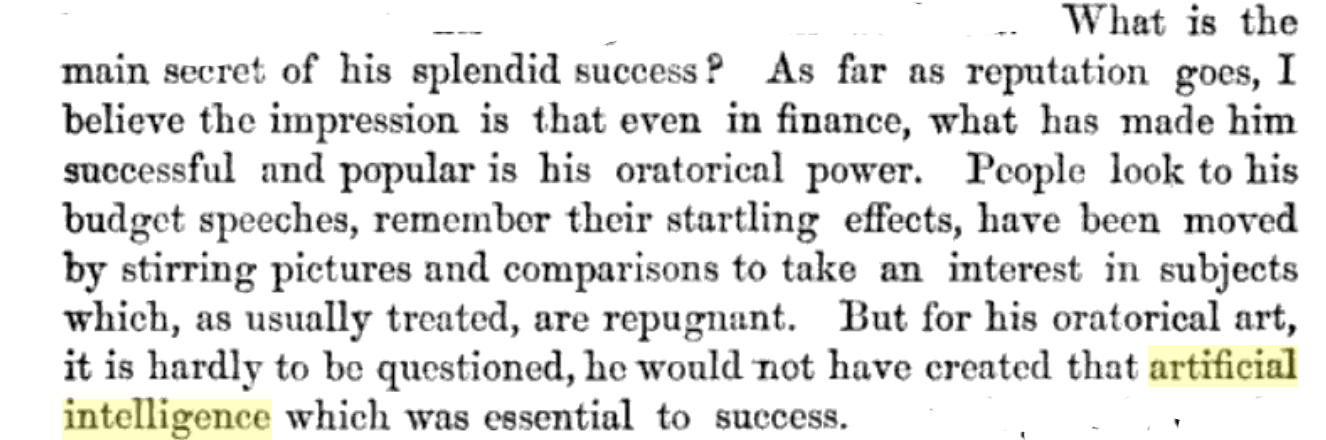

In "Mr. Gladstone's Work in Finance", from The Fortnightly Review in 1869, we find a couple of examples:

I'll leave it to the reader to decide exactly what these uses of artificial and intelligence mean — though it seems clear that we're still in the space where artificial has positive connotations.

On p. 232 of Zhuangzi, Chuang Tzŭ: Mystic, Moralist, and Social Reformer, 1889, things seem different:

Here intelligence again means something like "understanding", but artificial now means something like "tricky" or maybe "unnatural".

In the 1891 third edition of the second volume of Robert Kerr's "History of the Modern Styles of Architecture", we find

And here artificial again means something like "unnatural", and intelligence means something like "(ways of) thinking".

And in William Allen Sturdy's 1907 The Degeneracy of Aristocracy, there are no fewer than 8 uses of the phrase, e.g.

Again, artificial is opposed to Nature, and artificial intelligence is some kind of unnatural trickery.

DJL said,

November 13, 2023 @ 12:17 pm

Never ceases to amuse to see that McCarthy et al thought real advances could be accomplished in one summer.

Philip Taylor said,

November 13, 2023 @ 12:46 pm

But would it have equally amused you at the time that the proposal was written, or might you then have considered the timescale not unreasonable ?

Philip Taylor said,

November 13, 2023 @ 1:19 pm

Mark, re "(though it's not clear to me who the proposed funder was)": the Rockefeller Foundation. "The Rockefeller Foundation is being asked to provide financial support for the project on the following basis:". See p. 3, para. 10 (l. -10 et seq.).

Jerry Packard said,

November 13, 2023 @ 1:49 pm

On the Zhuangzi citation – the artificial knowledge would seem to be subjective knowledge; objective knowledge would seem to be ‘natural’ or non-subjective. Knowledge here therefore seems to be something like ‘reality’, which I guess can be seen as an interesting trope for ‘intelligence.’

Jonathan Smith said,

November 13, 2023 @ 2:29 pm

Re: Zhuangzi the English sentence seems to approximate "不開人之天,而開天之天,開天者德生,開人者賊生"… so no words corresponding very well to 'artificial' or to 'intelligence'… the contrast instead seems to be between developing/actualizing the capacities/endowments of "heaven" (desirable) vs. those of "man/people" (less desirable), with the Zhuangzian twist being that "heaven" and "endowments" are the same word tian1 天 (cf. Mand. tian1fu4 天賦 tian1cai2 天才 etc.)

Mark Liberman said,

November 13, 2023 @ 2:34 pm

@DJL: "Never ceases to amuse to see that McCarthy et al thought real advances could be accomplished in one summer."

It didn't stop. See Seymour Papert, "The Summer Vision Project", 1966.

Bill Benzon said,

November 13, 2023 @ 5:41 pm

This is very interesting, Mark. I took a look at that 1955 proposal, which is very interesting indeed. I wonder how many of the proposed seven areas were actually investigated in the conference. Of course they are all so general and it would have been easy enough to make a claim about having taken them up. I notice that self-improvement is number 5 on the list. That’s very much a gleam in the eye of many a current AI researcher today, especially when prefixed with “recursive,” yielding recursive self-improvement. It’s also much feared by the prophets of AI Doom, who believe that once an autonomous AI becomes capable of self-improvement, it’s only a matter of months, weeks, days, or even hours before it becomes a superintelligence and takes over the world, generally to our detriment.

Language was second on the list. As far as I know, artificial intelligence didn’t take up the study of language in earnest until the late 1960s. But a different group of researchers did so at roughly the same time AI was getting itself organized. My teacher, David Hays, was one of them. As you know, he headed the RAND Corporation’s work on the subject.

This was a much more highly focused research area, and one with a practical goal. In America, that goal was to translate Russian technical documents into English. While much interesting work was done, by the early 60s there seemed little immediate prospect of achieving the practical goal. The Federal Government commissioned a study, in which Hays participated, which reached two conclusions: 1) No practical work was forthcoming, but 2) the creation of new conceptual tools makes theoretical work very promising. The government paid attention to the first, ignored the second, and the field lost its major source of funding. In consequence it proceeded to rebrand itself as computational linguistics.

Hays left RAND in, I believe, 1969 to become the founding chair of the Department of Linguistics at SUNY Buffalo. I undertook Ph.D. study in the English Department there in the Fall of 1973 and a year later joined Hays’s research group in computational linguistics. That was a tremendous experience for me.

All of that was done under the rubric of what is now called symbolic AI, or, as John Haugeland put it, Good Old-Fashioned AI (GOFAI). GOFAI began giving way to machine learning in the 1980s and 1990s and, with the development of deep learning (that is, many-layered artificial neural nets) in the first decade of this century…. Well, we all know that story, to date. Just where things go from here is not at all clear.

Bill Benzon said,

November 13, 2023 @ 5:42 pm

P.S. McCarthy, Newell, Simon and them all thought that chess was the key to everything. Some years later McCarthy wrote a paper entitled, “Chess as the Drosophila of AI.” I’ve been told that someone once quipped that if geneticists had dealt with Drosophila the way AI researchers dealt with chess, we’d have a lot of fast fruit flies but not much genetics.

Sean said,

November 14, 2023 @ 1:36 am

The Historical Dictionary of Science Fiction has a citation by E. Hamilton from Startling Stories in 1951: "Grag, the towering manlike giant who bore in his metal frame the strength of an army and an artificial intelligence equal to the human, rumbled a question in his deep booming voice." https://sfdictionary.com/view/440/artificial-intelligence

Benjamin Geer said,

November 14, 2023 @ 2:54 am

Some more information on this workshop: https://en.wikipedia.org/wiki/Dartmouth_workshop

Jerry Packard said,

November 14, 2023 @ 5:22 am

Jonathan, thanks for finding the original citation. I read 天 here more as ‘reality’ than as heaven or endowments.

Bill Benzon said,

November 14, 2023 @ 5:43 am

From that Wikipedia entry (thanks for the link, Benjamin Geer): "It was not a directed group research project; discussions covered many topics, but several directions are considered to have been initiated or encouraged by the Workshop: the rise of symbolic methods, systems focused on limited domains (early expert systems), and deductive systems versus inductive systems." There you have it, GOFAI in a nutshell.

J.W. Brewer said,

November 14, 2023 @ 8:33 am

The peak in the Google n-gram trendline is around 1986, which was the year I took an "Artificial Intelligence" class – in my university's Computer Science department but targeted for non-majors and maybe I got to count it for credit toward the Linguistics major. Maybe that was right at the very end of the "we're only a few years away from the big breakthrough" era w/r/t computers being able to handle natural language that had been going on for 20+ years at that point? I don't know if final disenchantment with that hope is what caused the subsequent decline in usage of the bigram, but would be interested in alternative theories of why the phrase became less common for a while.

Seth said,

November 14, 2023 @ 9:33 am

@ J.W. Brewer – There have been a few cycles of AI hype over the last few decades. Not necessarily in terms of science advances, but in terms of the tech business press and venture capital fads. That may be part of the reason for the boom-bust trend on the graph.

Bill Benzon said,

November 15, 2023 @ 6:09 am

I'm in the process of reading a fascinating article by Richard Hughes Gibson, Language Machinery: Who will attend to the machines’ writing? It seems that Claude Shannon conducted a simulation of a training session for a large language model (aka LLM) long before such things were a gleam in anyone's eye:

After some elaboration and discussion:

Next thing you know, someone will demonstrate that the idea was there in Plato, and that he got it from watching some monkeys gesticulating wildly in the agora.

DJL said,

November 15, 2023 @ 3:02 pm

Didn't know about the Papert summer project – thanks for sharing. I only wish I could be so productive in one summer (or even, simply, so optimistic!).

Bill Benzon said,

November 15, 2023 @ 4:56 pm

BTW, looking at the graph in the OP, I strongly suspect that the extension of "artificial intelligence" is different at the right-hand side than it is on the left, up through 1985 and on to, I don't know, let's say 1995. On the left it refers to symbolic AI, aka GOFAI (good old-fashioned artificial intelligence), while on the right it probably refers to some kind of machine learning or neural networks. Symbolic AI hasn't disappeared completely, but it's a relatively small part of the mix.

I've just written a rather long post, A dialectical view of the history of AI: We’re only in the antithesis phase. [A synthesis is in the future.], that tells the story of how one set of concepts and techniques gave way to another from the mid-1950s up through the present. In it I have a graph with three curves: artificial intelligence, neural networks, and machine learning. Neural networks starts moving up at around 1985 (when artificial intelligence peaks), crosses over AI at about 1990, and hits its peak at about 1995. Machine learning moves up slowly from 1985 through 2014 or so and then jets sharply up to cross both AI and neural nets at the end.