Bad AI performance


It's clear that text-to-speech programs have gotten better and better over the past 60 years, technical details aside. The best current systems rarely make phrasing or letter-to-sound mistakes, and generally produce speech that sounds pretty natural on a phrase-by-phrase basis. (Though there's a lot of variation in quality, with some shockingly bad systems in common use.)

But even the best current systems still act like they don't get George Carlin's point about "Rhetoric as music". Their problem is not that they can't produce verbal "music", but that they don't (even try to) understand the rhetorical structure of the text. The biggest pain point is thus what linguists these days call "information structure", related also to what the Prague School linguists called "communicative dynamism".

Before we get to the fancy stuff, let's illustrate some old-fashioned problems that still surprisingly plague systems in common use. If I deploy the default way to "Have your Mac speak text that’s on the screen", I get "Samantha", who makes many elementary text-analysis mistakes as well as sounding unnaturally choppy.

For example, the system's performance of the start of this Wikipedia page divides phrases very badly, and mispronounces the word "multicoloured":

A rainbow is an optical phenomenon that can occur under certain meteorological conditions. It is caused by refraction, internal reflection and dispersion of light in water droplets resulting in a continuous spectrum of light appearing in the sky. The rainbow takes the form of a multicoloured circular arc. Rainbows caused by sunlight always appear in the section of sky directly opposite the Sun.

Google Cloud's synthesis console, using the voice en-US-neural2-A, does a much better job on the same passage — though it still sounds a bit robotic to me, a string of decontextualized sentences with too many of the words given equal and excessive weight:

(I'm sure that Apple's developers have systems of comparable quality — I'm just surprised that what they deploy as the default in macOS 13.6 is so bad…)

It's easy to find examples where the Google Cloud system's information-structure blindness creates more strikingly unnatural speech. For example, here's a short passage from Iris Crawford's 2012 novel A catered St. Patrick's Day: a mystery with recipes (retrieved from the COCA corpus):

In the first place, Brandon had sounded really tense. In the second place, he never turned off his phone. And in the third place, Brandon was never one to ask her for help if there was any other possibility.

And it's instructive to compare and contrast how a human being deals with analogous issues. Here's a sentence spoken by Richard Knox (from "Your Stories Of Being Sick Inside The U.S. Health Care System", NPR Morning Edition 5/21/2012):

And now she regrets even taking her father to the hospital in the first place.

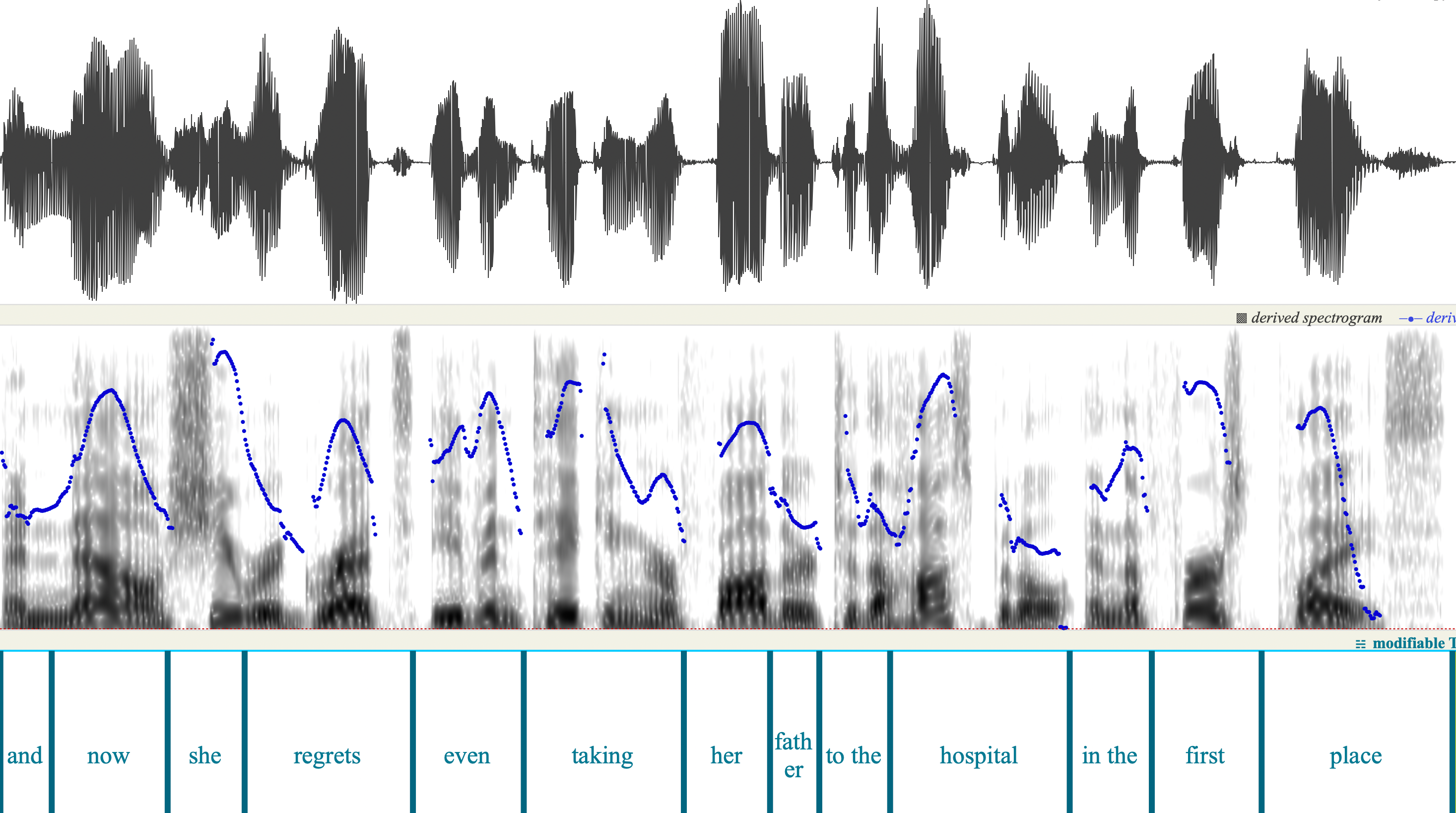

The Google Cloud version of course has the same robotic lack of de-accentuation on "place", as well as the excessive and tiresome prosodic modulation of other words:

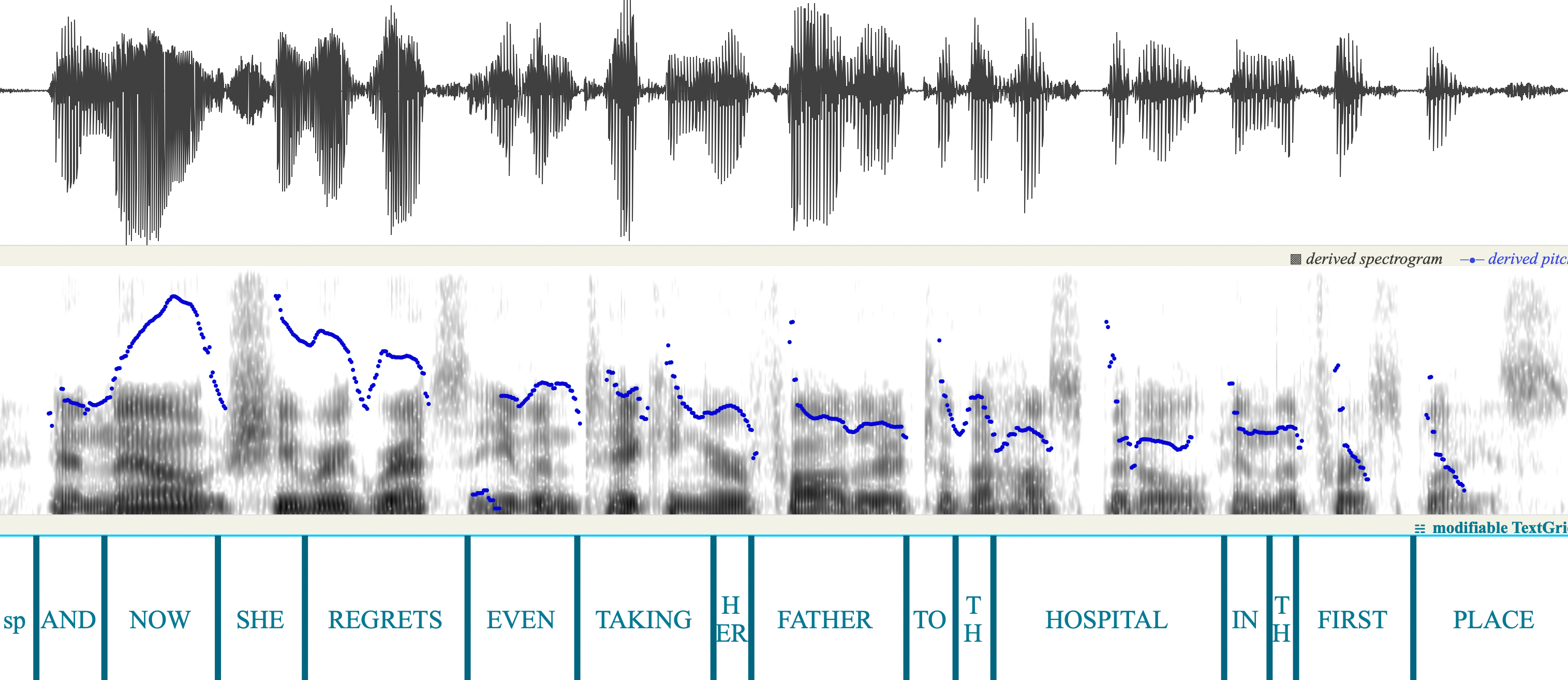

The spectrograms and F0 tracks show us a visual version of what we hear. First Knox:

And then Google:

I believe that such failures of prosodic rhetoric are connected to failures of discourse analysis. Or more properly, connected to the lack of any real attempt at discourse analysis, much less understanding. Of course, others believe that ever-larger "deep" models of surface letter-string co-occurrences will eventually solve everything. The specific error highlighted in this post could easily be fixed by triggering on strings like "in the Nth place" — though that won't get us very far into the universe of explicitly and implicitly contrastive phrases, the effects of anaphoric reference, etc., etc. …
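To make the "trigger on strings" idea concrete, here is a minimal Python sketch, under stated assumptions, of such a patch. It finds "in the Nth place" and wraps "place" in an SSML `<emphasis level="reduced">` element, so that the accent falls on the ordinal. The `reduced` level is defined in the W3C SSML 1.1 specification; whether a particular engine (Google Cloud or any other) actually honors it is an assumption to check. The function name and the ordinal list are hypothetical choices for illustration. As noted above, a pattern like this handles one fixed phrase and does nothing for contrast or anaphora in general.

```python
import re
from xml.sax.saxutils import escape

# Ordinals that can fill the "in the Nth place" frame (illustrative, not exhaustive).
ORDINALS = r"(?:first|second|third|fourth|fifth|last|\d+(?:st|nd|rd|th))"

# Group 1: "in the <ordinal> " (keeps its accent); group 2: "place" (to be de-accented).
PATTERN = re.compile(rf"\b(in the {ORDINALS} )(place)\b", re.IGNORECASE)


def deaccent_nth_place(text: str) -> str:
    """Return an SSML document in which "place" inside "in the Nth place"
    is wrapped in <emphasis level="reduced">; all other text passes through."""
    escaped = escape(text)  # escape &, <, > so the result is well-formed XML
    marked = PATTERN.sub(r'\1<emphasis level="reduced">\2</emphasis>', escaped)
    return f"<speak>{marked}</speak>"


if __name__ == "__main__":
    passage = ("In the first place, Brandon had sounded really tense. "
               "In the second place, he never turned off his phone.")
    print(deaccent_nth_place(passage))
```

Note that the race-commentary reading "in first place" (no "the") is deliberately left alone, since there the accent on "place" is not the problem.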

[Note that I've ignored excellent text-to-speech systems from Amazon, IBM, Microsoft, and others — this post aims to focus attention on a currently unsolved problem, not to present a comparative evaluation.]

John Swindle said,

September 24, 2023 @ 7:17 am

The emphasis on "in the first place" might be more appropriate for "in first place."

They're reaching the finish line! In first place—Brandon, who sounds really tense! In second place—Brandon's phone, which he never turned off! And now, in third place, it's Brandon again, never one to ask for help unless he has to!

Cervantes said,

September 24, 2023 @ 7:20 am

Well sure. Humans understand what they are saying. Machines are a long way from understanding anything. That's the simple way to put it.

Philip Taylor said,

September 24, 2023 @ 7:43 am

"Machines are a long way from understanding anything" — indeed so. From which an obvious question is "and will they ever do so", which in turn invites the question "Do we (Homo sapiens, that is) really understand what "to understand" involves, and if not, will we ever do so ?".

Cervantes said,

September 24, 2023 @ 8:00 am

PT — people have been asking this question at least since the Greeks. John Locke wrote a whole book about it, from which I quote:

"All that can fall within the compass of human understanding, being either, first, the nature of things, as they are in themselves, their relations, and their manner of operation: or, secondly, that which man himself ought to do, as a rational and voluntary agent, for the attainment of any end, especially happiness: or, thirdly, the ways and means whereby the knowledge of both the one and the other of these is attained and communicated; I think science may be divided properly into these three sorts."

The key here is that signs in some way map onto reality. Locke's division here is similar to Habermas's in The Theory of Communicative Action, or Searle's and Austin's Speech Act Theory. There's been a whole lot written about this, but I'm not saying it yields answers, the problem being that even to consider the question is to enter an infinite recursion.

Philip Taylor said,

September 24, 2023 @ 8:22 am

"the problem being that even to consider the question is to enter an infinite recursion" — that very thought did occur to me as I was composing my earlier comment …

Gregory Kusnick said,

September 24, 2023 @ 11:25 am

It's worth noting that physics has made enormous progress in understanding the world by jettisoning any notion of "the nature of things, as they are in themselves" and focusing entirely on "their relations, and their manner of operation". This strongly suggests that ideas of essentialism and intrinsic natures are an impediment rather than an aid to understanding.

If this view is correct — if all understanding is relational — it offers hope that "machine understanding" is as feasible as human understanding (though whether LLMs actually have it is of course a different question).

Tim Leonard said,

September 24, 2023 @ 12:28 pm

"Have your Mac speak text that’s on the screen" uses a program that comes for free with a Mac, and that can run locally on a small and slow Mac.

Google Cloud's synthesis is not free, and has access to orders of magnitude more compute resources.

It would be quite disappointing if the cloud synthesis were not vastly superior.

Jonathan Smith said,

September 24, 2023 @ 1:58 pm

FWIW my suspicion is that the capabilities already achieved via god-tier DL are better than is currently publicly available — Google's Chinese text-to-speech through e.g. the Translate interface was dialed back glaringly-obviously on the order of a yearish ago.

/df said,

September 24, 2023 @ 4:57 pm

"Humans understand what they are saying." Sometimes you really do wonder, though obviously my personal understanding is beyond criticism, and probably that of LL contributors too.

"Machines are a long way from understanding anything." I may have suggested this before, but whenever someone says something like this, or, as rather often recently, "the software is only predicting the next word based on what it has seen before and does not **understand** what it is producing", I hear Searle's Chinese Room chuckling to itself.

More on topic, according to an Amazon product manager interviewed on BBC this year, text-to-speech on their loss-leading domestic spyware product (he didn't actually use those words) is now implemented by a neural network system directly from the text input whereas last decade's implementation merged recorded words.

Nikita said,

September 24, 2023 @ 11:35 pm

I might be wrong, but I think some of these prosodic features are already on display in the speech synthesis solution offered by ElevenLabs. It's varied and often unpredictable. But there's evidence of a response to the text on a discourse level.

https://elevenlabs.io/speech-synthesis

Jarek Weckwerth said,

September 25, 2023 @ 2:34 am

This is very interesting. I have been commercially involved with TTS, on and off (and in admittedly superficial and limited capacities) since 2012, and the quality of the leading systems was Rather Good already back then. But I keep seeing people unaware of that even today.

Siri has been around since 2010. That is an example of what a reasonably good TTS system sounds like.

@Tim Leonard: "run locally on a small and slow Mac". Mark was genuinely disappointed because today there are scores of voices that are more than excellent and can happily run on a local system, even a phone. It's usually a download of a couple of hundred megabytes, and can be plugged into the local TTS framework. Try https://tinyurl.com/2p8em5ef (Microsoft) for example.

@/df: "last decade's implementation merged recorded words". I don't think there ever was a version of Alexa doing that. That approach today is limited to legacy systems e.g. on public transport. Your local railway station or tram may have announcements made this way. But I'm 100% confident that Alexa, like Siri, was simply TTS (i.e. "synthesis" straight from text) right from the very beginning. What the Amazon manager was saying, probably, was that the "synthesis" was "concatenative". But that means concatenating diphones or triphones, not words.
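To illustrate the difference between concatenating words and concatenating diphones, here is a minimal Python sketch, assuming a phone string is already available. A diphone runs from the middle of one phone to the middle of the next, so a word like "cat" (/k ae t/) becomes four units padded with silence. The ARPAbet-style phone labels, the `sil` symbol, and the `inventory` dictionary of placeholder sample lists are hypothetical; real concatenative systems also smooth the joins and adjust pitch and duration, which this sketch omits.

```python
def to_diphones(phones: list[str], pause: str = "sil") -> list[str]:
    """Split a phone sequence into diphone unit names, padding with a pause at both ends.

    Each unit spans the second half of one phone and the first half of the next,
    so the cut points fall in (relatively stable) phone centers, not at transitions.
    """
    padded = [pause] + phones + [pause]
    return [f"{a}-{b}" for a, b in zip(padded, padded[1:])]


def concatenate(units: list[str], inventory: dict[str, list[float]]) -> list[float]:
    """Look up each diphone in a hypothetical recorded inventory and splice the
    waveforms end to end (no join smoothing or prosody modification)."""
    samples: list[float] = []
    for unit in units:
        samples.extend(inventory[unit])  # raises KeyError if a unit was never recorded
    return samples


if __name__ == "__main__":
    print(to_diphones(["k", "ae", "t"]))
```

One consequence of this design is that a few thousand recorded units can cover any word, including words never recorded, which a word-splicing system cannot do.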

Jarek Weckwerth said,

September 25, 2023 @ 2:37 am

Pff, comments and links don't go together, do they? If the link in my post above doesn't work, then it's here: Microsoft voices for Immersive Reader.

/df said,

September 25, 2023 @ 7:24 pm

Yes, di/tri/phones: https://www.wired.com/story/how-amazon-made-alexa-smarter/. Probably the speaker on BBC was avoiding using the actual word "concatenative" for a lay audience.

Jonathan Smith said,

September 28, 2023 @ 2:31 pm

This was in the news and I guess belongs here

chatgpt voice

see e.g. under "voice samples"

vastly better than the OP samples but again in my (false?) memory google had already achieved similar

ktschwarz said,

October 1, 2023 @ 2:30 pm

I've been studying Hungarian using Duolingo, which uses machine-generated speech. I find it more tolerable than the examples in the post, though it's still a bit choppy and missing information structure, and I think that's why it's tiring to listen to over the long term (I can tell the difference in recordings of actual native speakers). But the really serious flaw is that Duolingo doesn't even try to represent the distinctive Hungarian question intonation, which is generally the only difference between yes-no questions and statements — there's no change in syntax. If I hadn't checked out some other sources, I wouldn't even know I was missing something, and I wouldn't be able to recognize when someone was asking me a question. Caveat emptor.