COURTHOUHAING TOGET T ROCESS.WHE


HE HAS ALL THE SOU OF COURSE

0:05 AND LOADED, READTOO.K

0:11 TING

0:16 A TVERY CONFIDENT.CONWAY

0:21 COURTHOUHAING TOGET T ROCESS.WHE

0:28 COIDATE'

0:30 TTACUTION'S CATHATE'

0:36 SE.

0:36 CHCEN'T KNHA

0:37 TAER OFURDI

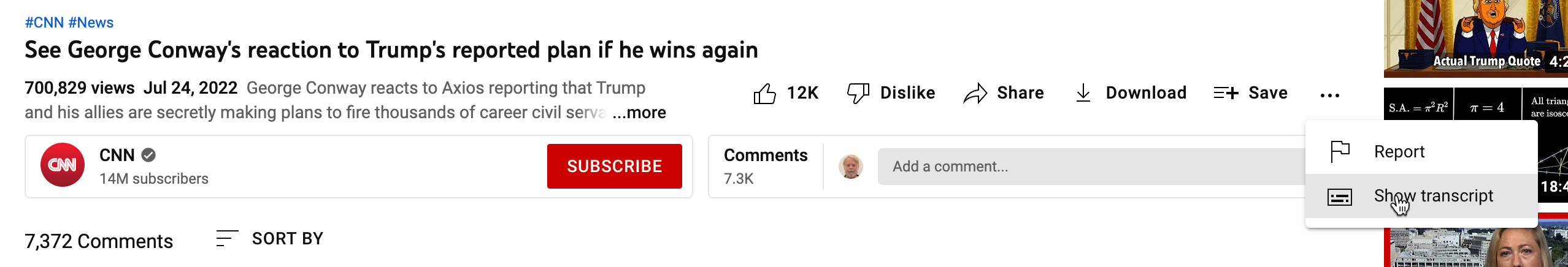

That's the start of the automatically-generated transcript on YouTube for "See George Conway's reaction to Trump's reported plan if he wins again", CNN 7/24/2022.

This appears to be an instance of an end-to-end speech-to-text system going seriously off the rails. (Of course I don't know what has really gone wrong, and other explanations are possible — in addition to being hopelessly garbled, that transcript is also puzzlingly sparse…)

In any speech-to-text system, the input is samples of the audio waveform (numbers representing local variation in air pressure, 8000 to 48000 samples per second) and the output is a stream of characters representing text, in English perhaps 10 to 30 characters per second.
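Dividing the sampling rate by the character rate gives a feel for how lopsided this mapping is: it is the average number of input numbers per output character. Here is a quick Python check over the extremes of the ranges just quoted. The function name is purely illustrative.

```python
def samples_per_char(sample_rate_hz: float, chars_per_sec: float) -> float:
    """Average number of waveform samples consumed per output character."""
    return sample_rate_hz / chars_per_sec

# Extremes of the ranges quoted above (8000-48000 samples/s, 10-30 chars/s).
low = samples_per_char(8000, 30)    # slowest sampling, fastest speech
high = samples_per_char(48000, 10)  # fastest sampling, slowest speech
print(f"{low:.0f} to {high:.0f} samples per character")
```

So each output character has to be distilled from somewhere between a few hundred and a few thousand waveform samples.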

Until recently, this process involved several intermediate stages based on traditional science and engineering: local perceptually-weighted amplitude spectra, the dictionary pronunciation of words rendered in terms of "phones", probability distributions over spectra of phones in context, the probability of alternative word sequences, and so on. When that kind of system is wrong, the output is still going to be a series of actual word-forms, usually in a somewhat-plausible (if incorrect) sequence.
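Here is a minimal sketch of the decoding step in such a pipeline. It assumes the classic "noisy channel" formulation, in which the recognizer picks the word sequence W that maximizes log P(audio | W) + log P(W). The lexicon, the per-segment acoustic scores, and the bigram probabilities are all invented for illustration. The brute-force search stands in for the beam or Viterbi search a real system would use. Because every hypothesis is assembled from lexicon entries, even a wrong answer comes out as a string of real word-forms.

```python
import itertools
import math

# Hypothetical lexicon: every hypothesis is built only from these word-forms.
LEXICON = ["court", "caught", "house", "how's"]

# Hypothetical acoustic log-likelihoods log P(audio segment | word),
# one dict per segment of audio. The numbers are invented.
ACOUSTIC = [
    {"court": -1.0, "caught": -1.2, "house": -5.0, "how's": -5.5},
    {"court": -6.0, "caught": -6.5, "house": -1.5, "how's": -1.3},
]

# Hypothetical bigram language model log P(word | previous word); "<s>" = start.
BIGRAM = {
    ("<s>", "court"): math.log(0.4),
    ("<s>", "caught"): math.log(0.3),
    ("court", "house"): math.log(0.5),
    ("caught", "how's"): math.log(0.05),
}
FLOOR = math.log(1e-4)  # crude back-off score for unseen bigrams


def lm_score(words):
    """Sum of bigram log-probabilities for a word sequence."""
    prev, total = "<s>", 0.0
    for w in words:
        total += BIGRAM.get((prev, w), FLOOR)
        prev = w
    return total


def decode(acoustic):
    """Return argmax over word sequences W of log P(audio|W) + log P(W)."""
    best, best_score = None, -math.inf
    for words in itertools.product(LEXICON, repeat=len(acoustic)):
        ac = sum(seg[w] for seg, w in zip(acoustic, words))
        score = ac + lm_score(words)
        if score > best_score:
            best, best_score = words, score
    return " ".join(best)


print(decode(ACOUSTIC))  # court house
```

Here the acoustic scores alone slightly prefer "how's" for the second segment, but the language model pulls the decision toward the more plausible "court house". Either way, the output can only be made of dictionary words.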

"End-to-end" systems discard the traditional intermediate representations in favor of a complex multi-layered network of matrix multiplications interspersed with point non-linearities. This network maps acoustic waveform samples directly to character sequences, or at least without any intermediate representations other than those implicit in the layers of numbers in such "deep nets". Given large amounts of training data, these systems often work well, although no one really knows why they succeed or when they are likely to fail, as such a system apparently did in this case.
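The following toy sketch in Python makes the contrast concrete. It assumes one possible design: fixed-size frames of raw samples pass through two matrix multiplications with a ReLU non-linearity between them, and the output is decoded with greedy CTC (connectionist temporal classification), a common decoding scheme for such networks. Nothing here is claimed to be what YouTube or CNN actually uses. The weights are random and untrained, and the names (`end_to_end`, `greedy_ctc_decode`, the 160-sample frame size) are hypothetical. The point is structural: nothing in the output stage restricts the result to dictionary words, so any string of symbols can come out.

```python
import math
import random

# Output symbols: a CTC-style "blank" (index 0) plus letters, space, apostrophe, period.
BLANK = "_"
ALPHABET = [BLANK] + list("ABCDEFGHIJKLMNOPQRSTUVWXYZ '.")


def matmul_vec(W, x):
    """Matrix-vector product with plain lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    """Pointwise non-linearity."""
    return [max(0.0, a) for a in v]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def frames(samples, size):
    """Chop the waveform into non-overlapping frames of `size` samples."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]


def greedy_ctc_decode(frame_scores):
    """Pick the best symbol per frame, merge repeats, then drop blanks."""
    best = [max(range(len(s)), key=s.__getitem__) for s in frame_scores]
    out, prev = [], None
    for idx in best:
        if idx != prev and ALPHABET[idx] != BLANK:
            out.append(ALPHABET[idx])
        prev = idx
    return "".join(out)


def end_to_end(samples, frame_size=160, hidden=32, seed=0):
    """Untrained toy network: waveform samples in, character string out."""
    rng = random.Random(seed)
    W1 = random_matrix(hidden, frame_size, rng)     # hidden-layer weights
    W2 = random_matrix(len(ALPHABET), hidden, rng)  # output-layer weights
    # Argmax ignores softmax normalization, so raw output scores suffice here.
    scores = [matmul_vec(W2, relu(matmul_vec(W1, f))) for f in frames(samples, frame_size)]
    return greedy_ctc_decode(scores)


# One second of a 220 Hz tone at 16 kHz as a stand-in for speech.
rate = 16000
wave = [math.sin(2 * math.pi * 220 * t / rate) for t in range(rate)]
print(end_to_end(wave))  # untrained weights: an arbitrary string of symbols
```

With trained weights the same machinery can produce excellent transcripts. When it goes wrong, though, it fails the way the CNN transcript does, in fragments and character soup rather than wrong-but-real words.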

For some other (more amusing) examples, see the posts in our "Elephant semifics" category. The category name was taken from some end-to-end Google Translate weirdness, courtesy of Smut Clyde.

Google Translate has somewhat cleaned up its end-to-end act, so that character repetitions and random character strings are much less likely to generate surrealist poetry. And whatever system is screwing up the cited CNN transcript will probably also improve over time. You can check it by turning on closed captioning:

…or asking YouTube for the whole transcript:

Other recent CNN stories on YouTube sometimes suffer from similar problems (the transcript for that one is similarly garbled but not so sparse) and sometimes don't (that one's transcript is pretty good), which is puzzling…

Update 7/26 — I had totally forgotten that I noticed something similar a couple of years ago, and documented it in "Crazy Captions", 11/13/2020. The consensus of the commenters was that the garbled captions are from CNN, not from YouTube, and (less certainly) that they probably result from some glitch in the operation or output processing of an old-fashioned stenotyping keyboard.

Cervantes said,

July 25, 2022 @ 8:15 am

My best guess is this is an input problem — it doesn't have a good quality link to the audio. That would account for the sparseness.

[(myl) That would be a plausible explanation, except for the fact that the audio quality is fine — about 27 dB signal-to-noise ratio, typical for YouTube broadcast news links, and about the same as the other two cited CNN stories, one of which has a garbled but not sparse transcript, while the other one has a pretty good transcript (and a slightly lower SNR, in fact…).]

Rick Rubenstein said,

July 25, 2022 @ 2:54 pm

I suspect what happened is that sh e.eg mdep's c ccc cl.unagent stringg.

[(myl) I agree.]

Cervantes said,

July 26, 2022 @ 2:12 pm

Just because the audio is fine when you watch it on YouTube doesn't mean that the speech recognition app necessarily had a good connection.