"Significance", in 1885 and today


There's an ongoing argument about the interpretation of Katherine Baicker et al., "The Oregon Experiment — Effects of Medicaid on Clinical Outcomes", NEJM 5/2/2013, and one aspect of this debate has focused on the technical meaning of the word significant. Thus Kevin Drum, "A Small Rant About the Meaning of Significant vs. 'Significant'", Mother Jones 5/13/2013:

Many of the results of the Oregon study failed to meet the 95 percent standard, and I think it's wrong to describe this as showing that "Medicaid coverage generated no significant improvements in measured physical health outcomes in the first 2 years."

To be clear: it's fine for the authors of the study to describe it that way. They're writing for fellow professionals in an academic journal. But when you're writing for a lay audience, it's seriously misleading. Most lay readers will interpret "significant" in its ordinary English sense, not as a term of art used by statisticians, and therefore conclude that the study positively demonstrated that there were no results large enough to care about.

Many past LL posts have dealt with various aspects of the rhetoric of significance. Here are a few:

"The secret sins of academics", 9/16/2004

"The 'Happiness Gap' and the rhetoric of statistics", 9/26/2007

"Gender-role resentment and the Rorschach-blot news reports", 9/27/2007

"The 'Gender Happiness Gap': Statistical, practical and rhetorical significance", 10/4/2007

"Listening to Prozac, hearing effect sizes", 3/1/2008

"Localization of emotion perception in the brain of fish", 9/18/2009

"Bonferroni rules", 4/6/2011

"Response to Jasmin and Casasanto's response to me", 3/17/2012

"Texting and language skills", 8/2/2012

But Kevin Drum's rant led me to take another look at the lexicographic history of the word significant, and this in turn led me back to the Oregon Experiment — via a famous economist's work on the statistics of telepathy.

The OED's first sense for significant has citations back to 1566, and in this sense, being significant is a big deal: something that's significant is "Highly expressive or suggestive; loaded with meaning". This is the ordinary-language sense that makes the statistical usage so misleading, because a "statistically significant" result is often not really expressive or suggestive at all, much less "loaded with meaning".

The OED gives a second sense, almost as old, that is much weaker, and is probably the source of the later statistical usage: "That has or conveys a particular meaning; that signifies or indicates something". Not necessarily something important, mind you, just something — say, in the modern statistical sense, that a result shouldn't be attributed to sampling error. A couple of the OED's more general illustrative examples:

1608 E. Topsell Hist. Serpents 48 Their voyce was not a significant voyce, but a kinde of scrietching.

1936 A. J. Ayer Lang., Truth & Logic iii. 71 Two symbols are said to be of the same type when it is always possible to substitute one for the other without changing a significant sentence into a piece of nonsense.

And then there's an early mathematical sense (attested from 1614):

Math. Of a digit: giving meaningful information about the precision of the number in which it is contained, rather than simply filling vacant places at the beginning or end. Esp. in significant figure, significant digit. The more precisely a number is known, the more significant figures it has.

The OED gives a few additional senses that are not strikingly different from the first two: "Expressive or indicative of something"; "Sufficiently great or important to be worthy of attention; noteworthy; consequential, influential"; "In weakened sense: noticeable, substantial, considerable, large".

And then we get to (statistically) significant:

5. Statistics. Of an observed numerical result: having a low probability of occurrence if the null hypothesis is true; unlikely to have occurred by chance alone. More fully statistically significant. A result is said to be significant at a specified level of probability (typically five per cent) if it will be obtained or exceeded with not more than that probability when the null hypothesis is true.

In an earlier post, I characterized sense 5. as "[a]mong R.A. Fisher's several works of public-relations genius". However, I gave Sir Ronald too much credit, as the OED's list of citations shows:

1885 Jrnl. Statist. Soc. (Jubilee Vol.) 187 In order to determine whether the observed difference between the mean stature of 2,315 criminals and the mean stature of 8,585 British adult males belonging to the general population is significant [etc.].

1907 Biometrika 5 318 Relative local differences falling beyond + 2 and − 2 may be regarded as probably significant since the number of asylums is small (22).

1925 R. A. Fisher Statist. Methods iii. 47 Deviations exceeding twice the standard deviation are thus formally regarded as significant.

1931 L. H. C. Tippett Methods Statistics iii. 48 It is conventional to regard all deviations greater than those with probabilities of 0·05 as real, or statistically significant.

In 1885, Sir Ronald's birth was still five years in the future. So who came up with this miracle of mathematical marketing? It seems that the credit is due to Francis Ysidro Edgeworth, better known for his contributions to economics. The OED's 1885 citation for (statistically) significant is to his paper "Methods of Statistics", Journal of the Statistical Society of London, Jubilee Volume (Jun. 22 – 24, 1885), pp. 181-217:

The science of Means comprises two main problems: 1. To find how far the difference between any proposed Means is accidental or indicative of a law? 2. To find what is the best kind of Mean; whether for the purpose contemplated by the first problem, the elimination of chance, or other purposes? An example of the first problem is afforded by some recent experiments in so-called "psychical research." One person chooses a suit of cards. Another person makes a guess as to what the choice has been. Many hundred such choices and guesses having been recorded, it has been found that the proportion of successful guesses considerably exceeds the figure which would have been the most probable supposing chance to be the only agency at work, namely 1/4. E.g., in 1,833 trials the number of successful guesses exceeds 458, the quarter of the total number, by 52. The first problem investigates how far the difference between the average above stated and the results usually obtained in similar experience where pure chance reigns is a significant difference; indicative of the working of a law other than chance, or merely accidental.

So the first use in print of "(statistically) significant" was in reference to an argument for telepathy!

Edgeworth gives no detailed analysis of the "Psychical Research" data in this article, though he notes that

we have several experiments analogous to the one above described, all or many of them indicating some agency other than chance.

But he went over the issue in great detail in a paper published in the same year ("The calculus of probabilities applied to psychical research", Proceedings of the Society for Psychical Research, Vol. 3, pp. 190-199, 1885), which begins with an inspiring (though untranslated) quote from Laplace ("Théorie Analytique des probabilités", 1812):

"Nous sommes si éloignés de connaître tous les agents de la nature qu'il serait peu philosophique de nier l'existence de phenomènes, uniquement parcequ'ils sont inexplicables dans l'état actuel de nos connaissances. Seulement nous devons les examiner avec une attention d'autant plus scrupuleuse, qu'il parait plus difficile de les admettre; et c'est ici que l'analyse des probabilités devient indispensable, pour determiner jusqu'à quel point il faut multiplier les observations ou les expériences, pour avoir, en faveur de l'existence des agents qu'elles semblent indiquer, une probabilité supérieure à toutes les raisons que l'on peut avoir d'ailleurs, de la réjéter."

"We are so far from knowing all the agencies of nature that it would hardly be philosophical to deny the existence of phenomena merely because they are inexplicable in the current state of our knowledge. We must simply examine them with more careful attention, to the extent that it seems more difficult to explain them; and it's here that the analysis of probabilities becomes essential, in order to determine to what point we must multiply observations or experiments, in order to have, in favor of the existence of the agencies that they seem to indicate, a higher probability than all the reasons that one can otherwise have to reject them."

Edgeworth then undertakes to show that telepathy is real:

It is proposed here to appreciate by means of the calculus of probabilities the evidence in favour of some extraordinary agency which is afforded by experiences of the following type: One person chooses a suit of cards, or a letter of the alphabet. Another person makes a guess as to what the choice has been.

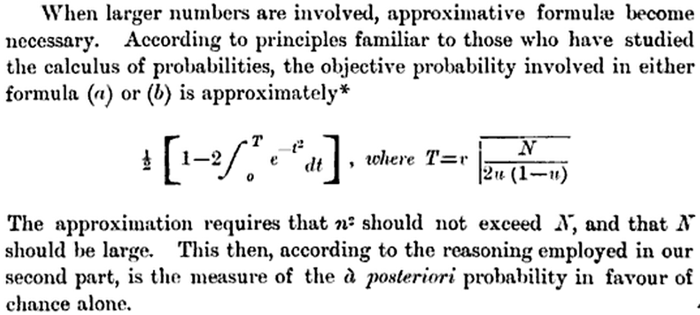

After considerable application of somewhat complex mathematical reasoning, e.g. the passage below, Edgeworth concludes that the probability of obtaining the cited results by chance — 510 correct card-suit guesses out of 1833 tries — is 0.00004.

Even if we grant his premises, Edgeworth's estimate seems to have been quantitatively over-enthusiastic: R tells us that the probability of obtaining the cited result by chance, given his model, should be a bit greater than 0.003:

    binom.test(458+52,1833,p=0.25,alternative="greater")

    Exact binomial test
    data: 458 + 52 and 1833
    number of successes = 510, number of trials = 1833, p-value = 0.003106
    alternative hypothesis: true probability of success is greater than 0.25
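As a cross-check on the R result, the same one-sided tail probability can be recomputed directly from the binomial formula. The Python sketch below is not part of the original analysis. The helper name `binom_upper_tail` is made up for illustration, and it assumes Edgeworth's model of independent guesses, each succeeding with probability 1/4.

```python
from math import exp, lgamma, log

def binom_upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p), with 0 < p < 1.

    Each term is computed in log space, because the binomial
    coefficients for n = 1833 are too large to convert to a float.
    """
    total = 0.0
    for i in range(k, n + 1):
        log_pmf = (lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1)
                   + i * log(p) + (n - i) * log(1 - p))
        total += exp(log_pmf)
    return total

# Edgeworth's figures: 458 + 52 = 510 hits in 1,833 trials, chance rate 1/4.
p_value = binom_upper_tail(458 + 52, 1833, 0.25)
print(f"P(at least 510 hits by chance) = {p_value:.6f}")
```

The result should land close to the 0.0031 that `binom.test` reports, and far above Edgeworth's 0.00004.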

But the p-value calculated according to Edgeworth's model — whether it's .00004 or .003 — is not an accurate estimate of the probability of getting the cited number of correct guesses by chance, in an experiment of the cited type. That's because his model might well be wrong, and there are plausible alternatives in which successful guesses are much more likely.

Recall his description of the experiment:

One person chooses a suit of cards, or a letter of the alphabet. Another person makes a guess as to what the choice has been.

He assumes that the chooser picks among the four suits of cards with equal a priori probability; and that the guesser, if guessing by chance, must do the same. But suppose that they both prefer one of the four suits, say hearts? If the chooser always chooses hearts, and the guesser always guesses hearts, then perfect psychical communication will appear to have taken place.

More subtly, we only need to assume a slight shared bias for better-than-chance results to emerge. And the bias need not be shared in advance of the experiment — since the guesser learns the true choice after each guess, he or she has plenty of opportunity to estimate the chooser's bias, and to start to imitate it. In other words, this is really not a telepathy experiment, it's the world's first Probability Learning experiment! (See "Rats beat Yalies", 12/11/2005, for a description of this experimental paradigm.)

If the result is equivalent to a shared uneven distribution over the four suits, then things are very different. With shared probabilities of 0.34, 0.33, 0.17, 0.16, for example, the probability of getting at least 510 correct guesses in 1833 trials is about 55%. And I assert without demonstration that such an outcome could easily emerge from a probability-learning process, without any initial shared bias.
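The arithmetic behind that figure can be sketched in a few lines of Python. This is only an illustration under a stated assumption: chooser and guesser draw independently from the same four-way distribution on every trial, so the per-trial hit rate is the sum of the squared probabilities. The helper names `match_probability` and `binom_upper_tail` are invented for this sketch.

```python
from math import exp, lgamma, log

def match_probability(shared_probs):
    """Chance that two independent draws from the same distribution agree."""
    return sum(q * q for q in shared_probs)

def binom_upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p), computed in log space (0 < p < 1)."""
    total = 0.0
    for i in range(k, n + 1):
        log_pmf = (lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1)
                   + i * log(p) + (n - i) * log(1 - p))
        total += exp(log_pmf)
    return total

# Example bias from the text; independence of the two people is assumed.
shared = [0.34, 0.33, 0.17, 0.16]
hit_rate = match_probability(shared)   # about 0.279 rather than 0.25
tail = binom_upper_tail(510, 1833, hit_rate)
print(f"per-trial hit rate = {hit_rate:.3f}")
print(f"P(at least 510 hits in 1833 trials) = {tail:.2f}")
```

Under this assumption the expected number of hits is about 511, so 510 or more hits is roughly a coin flip rather than a rare event.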

My point here is not to debunk psychical research, but to observe that here as elsewhere, it's important to pay attention to the details of the model, the data, and the outcome, rather than just looking at the p value.

And this brings us back to the contested paper, Katherine Baicker et al., "The Oregon Experiment — Effects of Medicaid on Clinical Outcomes", NEJM 5/2/2013.

Since this post has already gone on too long, I'll try to make this fast (for the two of you who are still reading…) Here's the background:

In 2008, Oregon initiated a limited expansion of its Medicaid program for low-income adults through a lottery drawing of approximately 30,000 names from a waiting list of almost 90,000 persons. Selected adults won the opportunity to apply for Medicaid and to enroll if they met eligibility requirements. This lottery presented an opportunity to study the effects of Medicaid with the use of random assignment.

Specifically

Our study population included 20,745 people: 10,405 selected in the lottery (the lottery winners) and 10,340 not selected (the control group).

They were able to interview 12,229 people, 6387 lottery winners and 5842 among the controls, about two years after the lottery.

But it's not quite as simple as that:

Adults randomly selected in the lottery were given the option to apply for Medicaid, but not all persons selected by the lottery enrolled in Medicaid (either because they did not apply or because they were deemed ineligible).

A more detailed picture of the lottery process is given in the paper's Supplementary Appendix:

In total, 35,169 individuals—representing 29,664 households—were selected by lottery. If individuals in a selected household submitted the appropriate paperwork within 45 days after the state mailed them an application and demonstrated that they met the eligibility requirements, they were enrolled in OHP Standard. About 30% of selected individuals successfully enrolled. There were two main sources of slippage: only about 60% of those selected sent back applications, and about half of those who sent back applications were deemed ineligible, primarily due to failure to meet the requirement of income in the last quarter corresponding to annual income below the poverty level, which in 2008 was $10,400 for a single person and $21,200 for a family of four.

In other words, we would expect that only about 30% of the lottery winners in this paper's sample were actually enrolled in the insurance program. Thus the lottery selection was random, but the enrollment step for lottery winners was not: and the various reasons for failing to get insurance are presumably not neutral with respect to health status and outcomes.

When I first began reading this paper, this seemed to me to constitute a huge source of non-sampling error, since the lottery winners who actually enrolled may constitute a very different group from the lottery participants as a whole.

However, it turns out that this doesn't matter. Although the paper's title announces itself as a study of the "Effects of Medicaid on Clinical Outcomes", the authors did not compare the outcomes of those who were actually enrolled against the outcomes of those who were not. Instead, they compared the outcomes of those who won the lottery, and thus were given the opportunity to try to enroll, against the outcomes of those who didn't win the lottery (even though some of these did have health insurance anyhow). So realistically, it's a study of the "Effects of an Extra Opportunity to Try to Enroll in Medicaid on Clinical Outcomes".

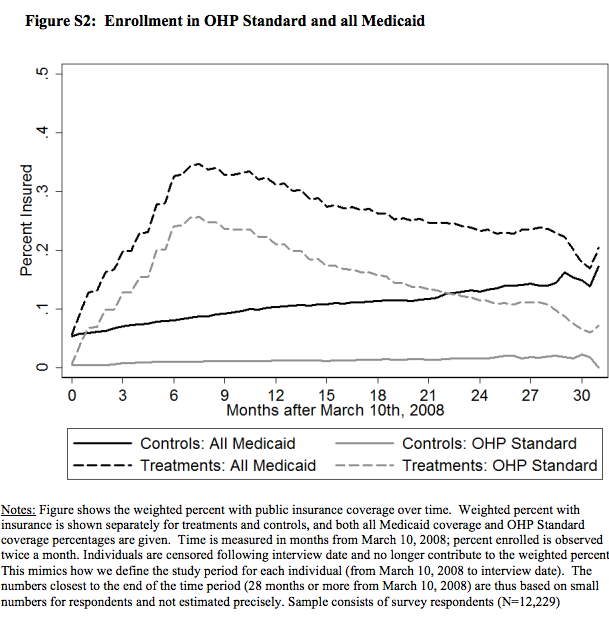

Of the 6,387 survey responders who were lottery winners, 1,903 (or 29.8%) actually enrolled in Medicaid at some point. A reasonable number of the control group had OHP or Medicaid insurance as well, and by the end of the period of the study, the differences between the two groups in enrolled in proportion enrolled in public insurance had nearly vanished (we're given no information about how many might have had employer-provided insurance, but presumably the proportion was small):

Of course, the study's authors set up their model to compensate for this situation:

The subgroup of lottery winners who ultimately enrolled in Medicaid was not comparable to the overall group of persons who did not win the lottery. We therefore used a standard instrumental-variable approach (in which lottery selection was the instrument for Medicaid coverage) to estimate the causal effect of enrollment in Medicaid. Intuitively, since the lottery increased the chance of being enrolled in Medicaid by about 25 percentage points, and we assumed that the lottery affected outcomes only by changing Medicaid enrollment, the effect of being enrolled in Medicaid was simply about 4 times (i.e., 1 divided by 0.25) as high as the effect of being able to apply for Medicaid. This yielded a causal estimate of the effect of insurance coverage.
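The "about 4 times" intuition in that passage amounts to dividing the winners-versus-controls difference in an outcome by the winners-versus-controls difference in enrollment. Here is a minimal Python sketch of that ratio. The function name `wald_estimate` and the example numbers are hypothetical, and the paper's actual instrumental-variable regression is presumably more elaborate than this.

```python
def wald_estimate(itt_effect, first_stage):
    """Scale an intent-to-treat effect by the enrollment change behind it.

    itt_effect:  difference in mean outcome, lottery winners minus controls
    first_stage: difference in Medicaid enrollment rate, winners minus controls
    """
    if first_stage == 0:
        raise ValueError("the lottery must change enrollment for this to work")
    return itt_effect / first_stage

# Hypothetical numbers for illustration only, not results from the paper:
# winning the lottery shifts some outcome by 0.5 units and raises
# enrollment by 25 percentage points.
effect_of_coverage = wald_estimate(itt_effect=0.5, first_stage=0.25)
print(effect_of_coverage)  # 2.0, i.e. 4 times the intent-to-treat effect
```

The scaling is only as good as the assumption quoted above, that the lottery affects outcomes solely by changing Medicaid enrollment.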

And there was indeed a period of differential enrollment proportions between the lottery winners and losers, as well as a period of different enrollment-opportunity proportions, and so it's plausible to look for effects across the sets of winners and losers as a whole.

But the main physical health outcomes that the study examined (blood pressure, cholesterol, glycated hemoglobin, etc.) are age- and lifestyle-related measures that are not very likely to be seriously influenced by a short period of differential access to insurance. The things that were (both statistically and materially) influenced — self-reported health-related quality of life, out-of-pocket medical spending, rate of depression — are much more plausible candidates to show the impact of having a brief opportunity to get insurance access.

And as in F. Y. Edgeworth's analysis of card-suit guessing, the p values in the regression are not as important as the details of what the observations were, and what forces plausibly shaped them.

[Note: For more on the subsequent history of psychic statistics, see Jessica Utts, "Replication and Meta-Analysis in Parapsychology", Statistical Science 1991.]

MonkeyBoy said,

May 17, 2013 @ 8:51 am

You left out the whole issue where many take "significant" to mean "meaningful". For example, if I give an IQ test to 10,000 people with brown eyes and 10,000 with blue, I might find a "significant" difference in their scores, but one so small (say, .05 IQ points) as to make it meaningless in any application – say, hiring only those with a particular eye color.

Some discussion of this and pointers to the literature can be found at the post "Fetishizing p-Values".

[(myl) I didn't leave it out — I referred you to a list of previous posts where it's discussed at length, e.g. here ("statistical significance without a loss function"), here ("my objection was never about statistical significance, but rather about effect sizes and practical significance"), here (difference between statistical and clinical significance in drug studies), or here ("There's a special place in purgatory reserved for scientists who make bold claims based on tiny [though statistically significant] effects of uncertain origin").

But in this case, the critical issue is neither the p values nor the loss functions nor the effect sizes, but the details of the experiment.]

Mr Punch said,

May 17, 2013 @ 10:14 am

There was a somewhat similar controversy in the '70s over "bias" in intelligence tests. To charges that the tests were racially biased, defenders such as Arthur Jensen of Berkeley responded that there was no bias – which was clearly true in the technical terminology of their field.

Eric P Smith said,

May 17, 2013 @ 1:13 pm

I think that Kevin Drum is unduly lenient on the researchers.

The researchers say, “We found no significant effect of Medicaid coverage…”, and “We observed no significant effect on…”. In both those cases the full (untruncated) statement is true, with the reasonable interpretation of “significant” as “statistically significant”. But the researchers go on to say, “This randomized, controlled study showed that Medicaid coverage generated no significant improvements…” Kevin Drum remarks, “It's fine for the authors of the study to describe it that way”, but it's not fine at all. The full (untruncated) statement is false, and no amount of hiding behind statistical language makes it true. When an experiment is done and the result is not statistically significant, it does not show anything.

Rubrick said,

May 17, 2013 @ 1:20 pm

Is there any really sound argument against making the standard "magic percentage" smaller, say 3%? As an outsider it seems as though the main "drawback" would be that researchers would be able to publish far fewer studies, especially ones of dubious value, but perhaps I'm being overly cynical. A 1-in-20 chance of a result being total bunk, even if the experiment is perfectly designed (which it isn't) has always struck me as absurdly high.

Deuterium Oxide said,

May 17, 2013 @ 1:39 pm

@Rubrick. It's unrealistic to require a research paper to establish the Holy Truth. And it is not a problem if some published research is bunk. The real goal is to move our understanding forward. If the method of doing it sometimes misfires it's OK. Imagine, for example, that you have a method that moves you a step forward 9 times out of 10 and backwards 1 out of 10 and another that guarantees the forward motion, but works at only half speed. You are definitely better off under the first method.

The real problem is how to bring forward research with more substantial results, not merely crossing the threshold of statistical significance (and maybe not crossing it at all), but something that has a potential to really improve our understanding of the things.

A propos, Mr. Edgeworth apparently lost √ π in his equation.

P.S. My old pen name, D.O., is apparently consigned to spam bin.

Eric P Smith said,

May 17, 2013 @ 3:06 pm

@Rubrick

No, there is no overriding argument against making the required p-value smaller. The required p-value is (in principle) chosen by the experimenters and it may take several factors into account. These may include: How inherently unlikely is the effect tested for generally perceived to be? How much larger and more expensive would the experiment need to be for it to have much hope of meeting a more stringent p-value? How serious would the consequences be of getting a false positive? And, not least, what is the tradition in the field? In most life sciences, 5% is quite usual. In controversial fields like parapsychology, 1% is more usual (reflecting a general perception that the effects tested for are inherently unlikely). In particle physics, the standard is 5 sigma, ie p=0.0000005 approximately, reflecting (amongst other things) the relative ease of meeting such a stringent p-value in that field.

Incidentally, passing a statistical test at the 5% level does not mean that there is a 5% probability that the effect is not real. It means that, if the effect is not real, there was a 5% probability of passing the statistical test. This point is often misunderstood but it is important.

Rubrick said,

May 17, 2013 @ 4:14 pm

@Eric

"How much larger and more expensive would the experiment need to be for it to have much hope of meeting a more stringent p-value?"

I suspect this is a big factor; requiring a more stringent p-value in, say, behavioral psychology would seem to spell the end of "Ask 20 grad students and write it up". I'm not entirely sure this would be a bad thing, given the frequency with which flimsy results are A) trumpeted in the media, and B) built upon without giving enough thought to whether they might be entirely bogus.

Your point about the meaning of the 5% value is important, but in terms of the weight one should give to the results of a study, I think the important interpretation is that there's a 5% chance that we don't actually know any more than if the study hadn't been run at all.

Dan Hemmens said,

May 18, 2013 @ 8:14 am

Your point about the meaning of the 5% value is important, but in terms of the weight one should give to the results of a study, I think the important interpretation is that there's a 5% chance that we don't actually know any more than if the study hadn't been run at all.

I'm not sure that's strictly true either. It's not that we have a 5% chance of knowing no more than we did before the test was run. We know more, but part of what we know is that there is a chance that our conclusion is wrong (and this chance is not necessarily 5%, it could be higher or lower).

We would only know *no more* than we did before the test was run if there really was no correlation whatsoever between reality and the outcome of the test. If we were, for example, to try to "test" a particular hypothesis by tossing a coin and reading heads as "yes" and tails as "no" we would actually get a "correct" result half of the time, but such a test would clearly tell us no more information than we had before the test was run, because a false positive is exactly as probable as a true positive.

Yuval said,

May 18, 2013 @ 4:58 pm

Got a little typo there – "the authors did not compares".

Jonathan D said,

May 19, 2013 @ 8:27 pm

I agree with Eric P Smith that 'significant' was misused in the quote. However useful it may be as PR, in the statistical sense we don't say that the effects (of Medicaid or whatever else) are or aren't significant. It's the details in the data that are or aren't significant, that is, suggest there may be an effect.

And Rubrick, the interpretation of a 5% p-value given by Eric is correct. There may be many other interpretations that would be more important in the sense that could be more useful, but they are incorrect.
