Farther on beyond the IPA


In "On beyond the (International Phonetic) Alphabet", 4/19/2018, I discussed the gradual lenition of /t/ in /sts/ clusters, as in the ending of words like "motorists" and "artists". At one end of the spectrum we have a clear, fully-articulated [t] sound separating two clear [s] sounds, and at the other end we have something that's indistinguishable from a single [s] in the same context. I ended that post with these thoughts:

My own guess is that the /sts/ variation discussed above, like most forms of allophonic variation, is not symbolically mediated, and therefore should not be treated by inventing new phonetic symbols (or adapting old ones). Rather, it's part of the process of phonetic interpretation, whereby symbolic (i.e. digital) phonological representations are related to (continuous, analog) patterns of articulation and sound.

It would be a mistake to think of such variation as the result of universal physiological and physical processes: though the effects are generally in some sense natural, there remain considerable differences across languages, language varieties, and speaking styles. And of course the results tend to become "lexicalized" and/or "phonologized" over time — this is one of the key drivers of linguistic change.

Similar phenomena are seriously understudied, even in well-documented languages like English. Examine a few tens of seconds of (even relatively careful and formal) speech, and you'll come across some examples. To introduce another case, listen to these eight audio clips, and ask yourself what sequences of phonetic segments they represent:

In IPA-ese, I hear the first two of these as something like

- ˈgɪɾɚ

- ˈilɚ

roughly as if they had been spelled "gitter" and "eeler" — and the rest are variations on those themes. Listen for yourself and see what you think.

In fact, the first seven clips present the final two syllables of the word "regular" and the first syllable of the word "attendance", in the seven available performances of TIMIT sentence SX64 "Regular attendance is seldom required". The eighth clip is the final two syllables of the Wiktionary pronunciation of the word "regular". You can listen to the full contexts below:

These examples illustrate three general features of American English phonetics:

- Intervocalic post-stress consonants are often lenited; in this case /g/ sometimes becomes so weak that (if played in initial position) it sounds like a glide.

- The reduced vowel in the unstressed second syllable of "regular" assimilates with the /j/ onset to form a high front vowel [i] or [ɪ] — this can happen in other similar contexts.

- A word-initial unstressed vowel can often assimilate to a preceding word-final unstressed vowel — as here with the first syllable of "attendance" and the last syllable of "regular" — so that the residue of the original pair of syllables is just a (marginally longer) unmodulated merger.

Thus when the TIMIT developers gave the first four syllables of MRLD0_SX64

the ARPAbet transcription

r eh g y ix l axr ix

even though what they transcribe as

g y ix l axr ix

sounds like this

they were surely affected by the phonemic restoration effect.
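For readers who don't read ARPAbet, here is a small lookup sketch that turns the TIMIT transcription quoted above into IPA. The table is a hand-written assumption covering only the seven symbols that occur here (rendering TIMIT `ix` as [ɨ] and `axr` as [ɚ]); published conversion tables differ in details such as [ɹ] vs. [r], and `arpabet_to_ipa` is a hypothetical helper, not part of any TIMIT tool.

```python
# Partial, hand-written ARPAbet (TIMIT-style) to IPA table covering only the
# symbols in the MRLD0_SX64 transcription. Other conventions exist.
ARPABET_TO_IPA = {
    "r": "ɹ",
    "eh": "ɛ",
    "g": "g",
    "y": "j",
    "ix": "ɨ",
    "l": "l",
    "axr": "ɚ",
}


def arpabet_to_ipa(transcription: str) -> str:
    """Convert a space-separated ARPAbet string to IPA, one symbol per phone."""
    phones = transcription.split()
    missing = [p for p in phones if p not in ARPABET_TO_IPA]
    if missing:
        raise KeyError(f"no IPA mapping for: {missing}")
    return "".join(ARPABET_TO_IPA[p] for p in phones)


print(arpabet_to_ipa("r eh g y ix l axr ix"))  # ɹɛgjɨlɚɨ
```

Applied to the portion `g y ix l axr ix`, it gives gjɨlɚɨ: a full /g/, a separate /j/, and two distinct reduced vowels, which is the level of detail the clips above call into question.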

In this context, the following paper is not only plausible but also overdue — Jialu Li and Mark Hasegawa-Johnson, "A Comparable Phone Set for the TIMIT Dataset Discovered in Clustering of Listen, Attend and Spell", NIPS 2018:

Listen, Attend and Spell (LAS) maps a sequence of acoustic spectra directly to a sequence of graphemes, with no explicit internal representation of phones. This paper asks whether LAS can be used as a scientific tool, to discover the phone set of a language whose phone set may be controversial or unknown. Phonemes have a precise linguistic definition, but phones may be defined in any manner that is convenient for speech technology: we propose that a practical phone set is one that can be inferred from speech following certain procedures, but that is also highly predictive of the word sequence. We demonstrate that such a phone set can be inferred by clustering the hidden nodes activation vectors of an LAS model during training, thus encouraging the model to learn a hidden representation characterized by acoustically compact clusters that are nevertheless predictive of the word sequence. We further define a metric for the quality of a phone set (the sum of conditional entropy of the graphemes given the phone set and the phones given the acoustics), and demonstrate that according to this metric, the clustered LAS phone set is comparable to the original TIMIT phone set. Specifically, the clustered-LAS phone set is closer to the acoustics; the original TIMIT phone set is closer to the text.

As exemplified above, the TIMIT phonetic transcriptions often reflect expectations from the formal dictionary-based pronunciation standard, which is influenced by the spelling even before any continuous-speech reductions set in — so matching TIMIT's performance on this paper's "metric for the quality of a phone set (the sum of conditional entropy of the graphemes given the phone set and the phones given the acoustics)" should not be all that difficult. Still, no one has ever done it before, so this research is an important contribution.
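To make the quoted metric concrete, here is a toy sketch that estimates H(G | P) + H(P | A) from counts. It assumes, purely for illustration, that graphemes, phones and acoustics are already aligned one-to-one and that the acoustics have been discretized into labels (`a1`, `a2`, `a3` are hypothetical); the paper works with continuous acoustics and clustered LAS hidden activations, and its actual estimation procedure is not reproduced here.

```python
from collections import Counter
from math import log2


def conditional_entropy(pairs):
    """Empirical H(X | Y) in bits, estimated from a list of (x, y) pairs."""
    joint = Counter(pairs)
    count_y = Counter(y for _, y in pairs)
    n = len(pairs)
    h = 0.0
    for (x, y), c in joint.items():
        # subtract p(x, y) * log2 p(x | y), both estimated from raw counts
        h -= (c / n) * log2(c / count_y[y])
    return h


def phone_set_score(graphemes, phones, acoustics):
    """H(G | P) + H(P | A); lower is better. Assumes the three sequences are
    aligned one-to-one (a simplification made only for this sketch)."""
    return (conditional_entropy(list(zip(graphemes, phones)))
            + conditional_entropy(list(zip(phones, acoustics))))


# Hypothetical toy data: four intervocalic tokens spelled t, d, t, d, where
# the first two are acoustically identical flaps (label "a1").
graphemes = ["t", "d", "t", "d"]
acoustics = ["a1", "a1", "a2", "a3"]
acoustic_like = ["ɾ", "ɾ", "t", "d"]  # phone set that follows the acoustics
text_like = ["t", "d", "t", "d"]      # phone set that follows the spelling

print(phone_set_score(graphemes, acoustic_like, acoustics))  # 0.5
print(phone_set_score(graphemes, text_like, acoustics))      # 0.5
```

In this toy case the two phone sets tie on the total, but the acoustically oriented set carries all of its uncertainty in H(G | P) while the spelling-oriented set carries it in H(P | A), loosely echoing the abstract's remark that one set is closer to the acoustics and the other closer to the text.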

The relationship between phonetic variation and lexically-stable phonological categories remains an open theoretical question, in my opinion, but work like this is one very useful direction of inquiry.

Rose Eneri said,

January 18, 2020 @ 10:35 am

Among the full-context examples, aren't the last recording in the first column and the third recording in the second column identical (same speaker, same instance)?

[(myl) Oops — slip of the mouse (or brain) — good catch, thanks!]

Philip Taylor said,

January 18, 2020 @ 10:43 am

"These examples illustrate three general features of American English phonetics": for me, example eight illustrates what I think is an unrelated phenomenon, namely that the brain (well, my brain at least) "hears" something quite different when a sound occurs in context and when it occurs out of context. Were it not for the fact that I trust the author implicitly, I could not believe that example 8a (the brief excerpt) forms any part of example 8b (the full word "regular"). I hear a clear /gj/ in 8b, whereas in 8a I hear only /gɪ/.

[(myl) That's certainly a well-known phenomenon. In some cases, starting or stopping an audio sample in midstream can create genuine acoustic artefacts, e.g. due to artificially abrupt onsets or offsets — in any sound sample, not just in speech. In this case, the starting and stopping points are zero-crossings in silent or low-amplitude regions. So the gating effects are perceptual ones, based on the absence of contextual cues.

I could make the same point by analyzing spectrograms rather than asking for perceptual judgments; the point here is that "phonetic transcription" is often strongly influenced by top-down lexical expectations rather than by purely bottom-up acoustic (or articulatory) facts. It's true that there are gestalt effects in auditory perception (e.g. auditory streaming) that do not depend on lexical or other linguistic influences, but I think it's the lexical influences that dominate here.]
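The editing practice mentioned in this reply, cutting clips at zero-crossings so that they don't begin or end with an abrupt jump in amplitude, can be sketched as a helper that snaps a requested cut point to the nearest sign change. This is an illustrative assumption, not the tool actually used to prepare the clips; the function names are hypothetical, and the sketch omits the further check that the region around the cut is silent or low in amplitude.

```python
def nearest_zero_crossing(samples, target):
    """Index i nearest to `target` where the signal changes sign between
    samples[i - 1] and samples[i] (or samples[i] is exactly zero)."""
    best = None
    for i in range(1, len(samples)):
        prev, cur = samples[i - 1], samples[i]
        if cur == 0 or (prev < 0) != (cur < 0):
            if best is None or abs(i - target) < abs(best - target):
                best = i
    if best is None:
        raise ValueError("signal has no zero crossing")
    return best


def clip_at_zero_crossings(samples, start, end):
    """Return samples[a:b], with both cut points snapped to zero crossings."""
    a = nearest_zero_crossing(samples, start)
    b = nearest_zero_crossing(samples, end)
    return samples[a:b]


signal = [0.5, 0.3, -0.2, -0.4, 0.1, 0.6, 0.2, -0.3]
print(nearest_zero_crossing(signal, 0))       # 2
print(clip_at_zero_crossings(signal, 1, 6))   # [-0.2, -0.4, 0.1, 0.6, 0.2]
```

Because such a cut introduces no step discontinuity, any difference between hearing a stretch in isolation and hearing it in context is, as the reply says, perceptual rather than an editing artifact.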

Christian DiCanio said,

January 18, 2020 @ 11:47 am

I'd like to draw your attention to an interesting paper by Bouavichith and Davidson (2013), in which they investigate post-tonic lenition of voiced obstruents in English in depth. https://www.karger.com/Article/Abstract/355635

Linguists are more familiar with lenition patterns like the ones you observe when they appear as discrete allophonic rules, like Spanish /g/ > [ɣ]/V_V. English seems to have the same process but it's conditioned by stress and the representation of the pattern is rather more complex and probabilistic (see Bouavichith & Davidson 2013). Since phonologists have focused so heavily on one particular speech style (careful, elicited speech), they have missed a whole slew of probabilistic phonetic processes occurring all around them. Your statement "Similar phenomena are seriously understudied, even in well-documented languages like English." is absolutely true and it means that, for most languages, we have vastly under-estimated the range of variation.

[(myl) Indeed — and it's not just voiced obstruents. Intervocalic post-stress /k/, as in "taking" or "making", is more often than not realized as a fricative, and often a voiced fricative. Here are two (literally) random examples from my personal Fresh Air archive, from a phrase spoken by Daniel Torday, starting at 25:35 of this interview —

Here's "taking" by itself, and "making" by itself:

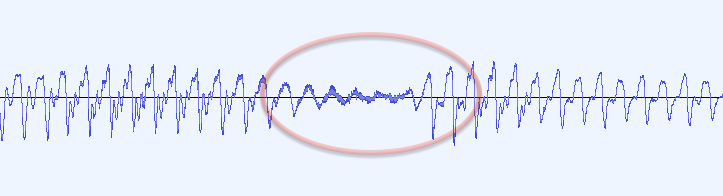

Here's the audio waveform for "taking":

And for "making":

(In both cases with the underlying /k/ circled in red…)

Here's "kicking", in a phrase spoken by Stephen King, from 31:33 of this interview, followed by an image of the waveform showing the striking difference between the initial /k/ (green oval) and the medial one (red oval):

]
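The waveform contrast described above, a stop closure before the initial /k/ versus continuous energy through the medial one, can be approximated crudely by computing short-time RMS energy and looking for a run of near-silent frames. This is an assumption-laden sketch, not how the figures were made: the 16 kHz rate, 10 ms frames, 0.02 threshold and the synthetic signals (with a sine standing in for frication noise, to keep the example deterministic) are all hypothetical choices.

```python
import math

RATE = 16000          # hypothetical sampling rate (Hz)
FRAME = RATE // 100   # 10 ms frames


def frame_rms(samples, frame_len=FRAME):
    """RMS amplitude of consecutive, non-overlapping frames."""
    return [
        math.sqrt(sum(s * s for s in samples[i:i + frame_len]) / frame_len)
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def longest_quiet_run(samples, threshold=0.02, frame_len=FRAME):
    """Longest run of consecutive frames whose RMS falls below `threshold`.
    A stop closure tends to show up as such a run; a fricative usually does not."""
    longest = current = 0
    for rms in frame_rms(samples, frame_len):
        current = current + 1 if rms < threshold else 0
        longest = max(longest, current)
    return longest


def tone(freq, amp, n):
    """n samples of a sine wave, a stand-in for real speech."""
    return [amp * math.sin(2 * math.pi * freq * k / RATE) for k in range(n)]


vowel = tone(200, 0.5, 10 * FRAME)      # 100 ms "vowel"
closure = [0.0] * (6 * FRAME)           # 60 ms of silence, as in a stop
frication = tone(2000, 0.1, 6 * FRAME)  # 60 ms of weak but continuous energy

print(longest_quiet_run(vowel + closure + vowel))    # 6
print(longest_quiet_run(vowel + frication + vowel))  # 0
```

Real recordings would need a threshold tuned to the recording level, and a voiced fricative can be quite weak, so this is at best a rough screening measure alongside visual inspection of the waveform and spectrogram.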

Andrew Usher said,

January 18, 2020 @ 8:32 pm

I remember noticing this effect long ago, as I posted in detail when we discussed Trump's 'big league' vs. 'bigly'. And they say English doesn't have velar fricatives! (Yes, the voiced fricatives are often realised as approximants, a universal tendency.)

I am the original discoverer of this; I first noticed it in my pronunciations of

'league' /lig/

'leagues' /liɣz/ (ignoring length, barely different from lees/Lee's)

Of course I _can_ say /ligz/ and no doubt sometimes do.

I understood immediately on listening that all the clips were renditions of the same word(s), even before reading on. I wonder how much of the variation is due to the choice of where to 'clip' the starting /g/. And the flapping of the /l/ in the first seems to be a genuine speech error, as if she were reading 'regutar' (or is it a feature of her accent?).

k_over_hbarc at yahoo.com

Antonio L. Banderas said,

January 19, 2020 @ 5:18 am

In hip hop music, pairs like "say – stay" are only getting harder and harder to distinguish.

[(myl) I haven't noticed that — can you give an example? ]

Andrew Usher said,

January 19, 2020 @ 6:59 pm

That would be a different thing – if say/stay seem to be less distinguishable, it would be because of over-articulation of the /s/.

Y said,

January 19, 2020 @ 10:44 pm

For an example from a supposedly simple phonology, here's Comstock et al. (2017), He nui nā ala e hiki aku ai: Factors influencing phonetic variation in the Hawaiian word kēia, Language Documentation & Conservation Special Publication no. 13, pp. 65-93:

https://scholarspace.manoa.hawaii.edu/handle/10125/24749

The appendix lists the following variations in pronunciation of the word for 'this', normatively kēia, as transcribed from a corpus of Hawaiian spoken by native speakers: ke, keː, keʻi, keʻia, keʻe, keːia, keːʻia, kea, kaʻi, kei, keia, keʻea, kaʻia, ki, kia, kai, keʻɪ, keo, keːi, keu, ēia, gʰe, gu, ka, keːa, keʻa, keʻiː, kuja, te, keːʻi, teːia.

Andrew Usher said,

January 20, 2020 @ 10:01 pm

But that is a grammatical word, and they are often subject to extreme reduction in any language.

'Regular' would not fall in that category – and, by the way, I am now even more convinced that the first woman was saying 'regutar' in contrast to all the others, making it a little biased to choose that one to present first.

Leo said,

January 21, 2020 @ 5:29 am

Clip #3 (first in the second row) may have been the model for Daniel Craig's performance as a Louisiana gentleman in Knives Out…