AI is brittle


Following up "Shelties On Alki Story Forest" (11/26/2019) and "The right boot of the warner of the baron" (12/6/2019), here's some recent testimony from engineers at Google about the brittleness of contemporary speech-to-text systems: Arun Narayanan et al., "Recognizing Long-Form Speech Using Streaming End-To-End Models", arXiv 10/24/2019.

The goal of that paper is to document some methods for making things better. But I want to underline the fact that considerable headroom remains, even with the massive amounts of training material and computational resources available to a company like Google.

Modern AI (almost) works because of machine learning techniques that find patterns in training data, rather than relying on human programming of explicit rules. A weakness of this approach has always been that generalization to material different in any way from the training set can be unpredictably poor. (Though of course rule- or constraint-based approaches to AI generally never even got off the ground at all.) "End-to-end" techniques, which eliminate human-defined layers like words, so that speech-to-text systems learn to map directly between sound waveforms and letter strings, are especially brittle.

The paper's authors put the problem this way:

The acoustic and linguistic characteristics of speech vary widely across domains. Ideally, we would like to train a single ASR system that performs equally well across all domains. Building an E2E ["end-to-end"] ASR ["Automatic Speech Recognition"] system that generalizes well to multiple domains is particularly challenging since E2E models learn all system components directly from the training data. In this work, we investigate the generalization ability of streaming E2E ASR systems – specifically, the recurrent neural network transducer (RNN-T) – to determine its robustness to domain mismatches between training and testing. We find that the tight coupling results in a large performance degradation due to such a domain mismatch.

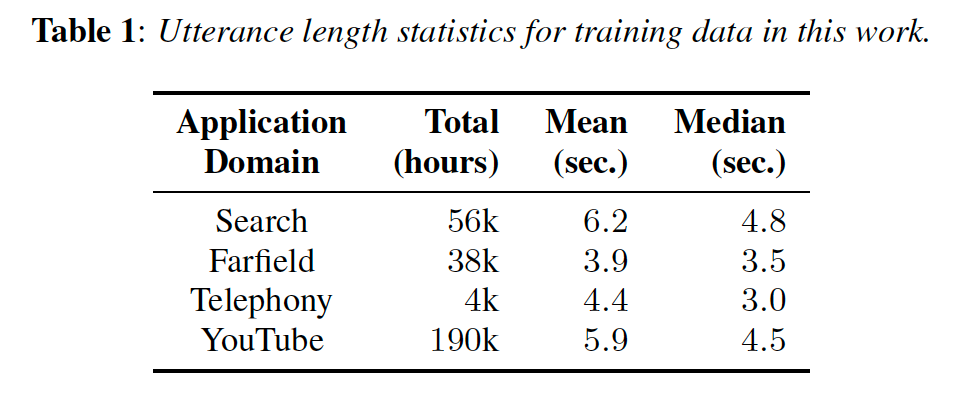

Narayanan et al. consider four "domains" of training data:

All of these sources combined (which they call the "Multidomain" set) thus amount to 288,000 hours. Let's note in passing that a human being who hears four hours of speech each day is exposed to 4*365*10 = 14,600 hours of speech per decade. 288,000 hours amounts to approximately 197.3 years of such experience.
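For anyone who wants to check that back-of-the-envelope figure, here is the arithmetic as a few lines of Python. The four-hours-a-day listening rate is the assumption stated above, not a measured quantity, and the variable names are mine.

```python
# Assumption from the text: a listener hears 4 hours of speech per day.
HOURS_PER_DAY = 4
DAYS_PER_YEAR = 365
MULTIDOMAIN_HOURS = 288_000  # size of the combined training set

hours_per_year = HOURS_PER_DAY * DAYS_PER_YEAR    # 1,460
hours_per_decade = hours_per_year * 10            # 14,600
years_of_listening = MULTIDOMAIN_HOURS / hours_per_year

print(f"{hours_per_decade:,} hours per decade")
print(f"{years_of_listening:.1f} years to hear {MULTIDOMAIN_HOURS:,} hours")
```

Changing `HOURS_PER_DAY` shows how sensitive the comparison is to that assumption: at eight hours a day the figure is halved.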

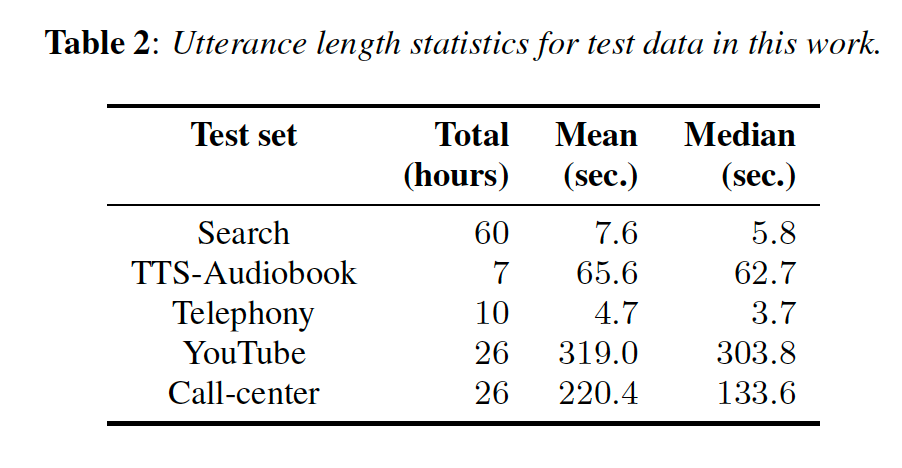

The authors use test data from five domains:

They don't tell us much about what any of these datasets are like, or give us any samples — it's worth noting that categories like "YouTube" or "Call-center" (or for that matter "Search" and "Telephony") could span a very wide range of topics, formats, styles, recording qualities, and so on, raising issues of generalization within as well as across such "domains".

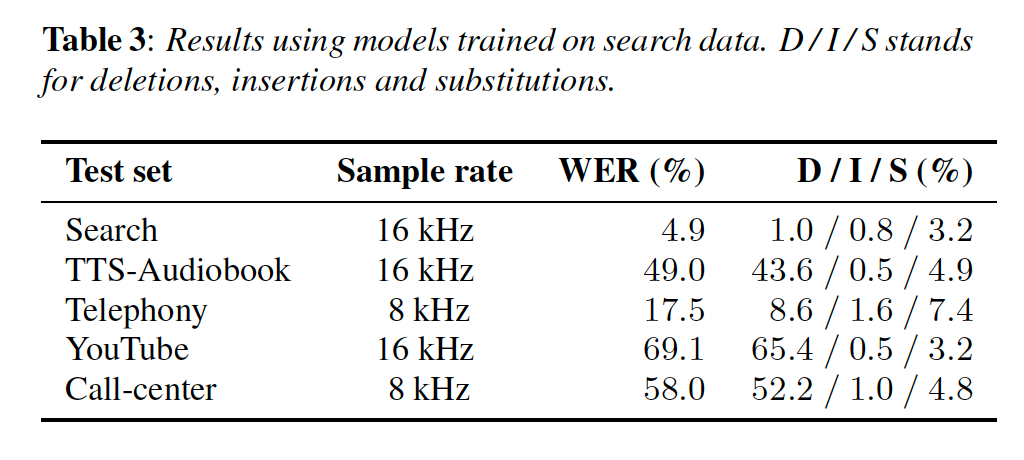

The results, for a system trained on 60,000 hours of data from the "Search" domain, exemplify lack of cross-domain generalization to an extreme degree:

Their discussion of these basic results:

As can be seen, the model performs well on the in-domain search test set, obtaining a WER of 4.9%. The model is trained with a mix of 8 kHz and 16 kHz data, which helps with the performance on the telephony test set, which is at 17.5%, but performance on the other sets is poor. On the YouTube set, this model achieves a WER of 69.1%. On the acoustically simpler TTSAudiobook set, the model obtains a WER of 49.0%. These results clearly indicate that the model trained only on a single domain with unique acoustic characteristics, fails to generalize to new conditions.

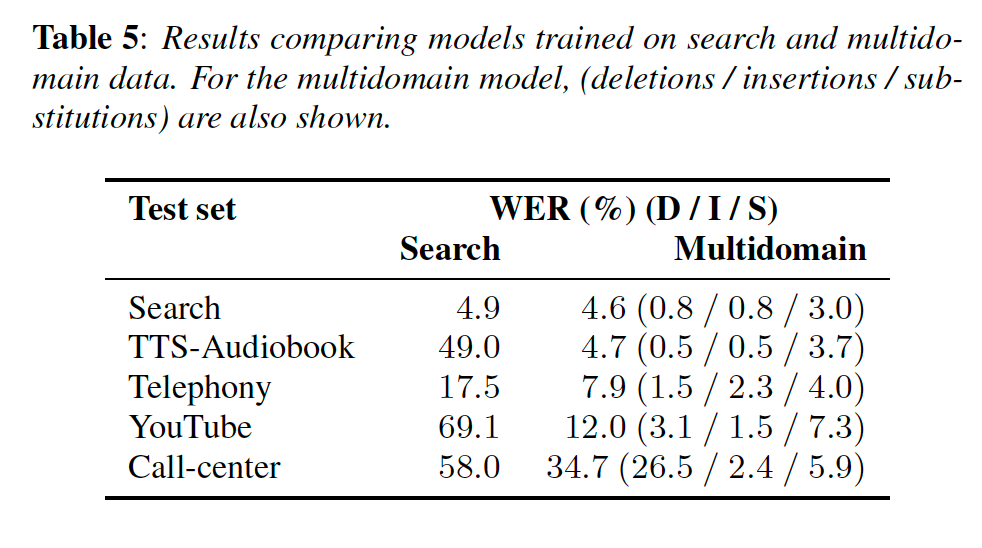

Their solution is to train a system on all 288,000 hours from the "Multidomain" set — which indeed does make things better:

But the "Call-center" data still has a 34.75 WER — this is relative to transcriptions produced by ordinary literate humans. Again, the authors don't tell us anything much about the nature of these datasets, but in general, independent human transcriptions of such material should yield WER numbers in the low single digits. And of course the patterns of human errors will be very different from the patterns of system errors.
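For readers who haven't met the metric: WER ("word error rate") is conventionally the minimum number of word substitutions, deletions, and insertions needed to turn the system output into the reference transcript, divided by the number of words in the reference. The following is a minimal sketch of that textbook definition, not the scoring tool the paper's authors used; real scoring pipelines also normalize case, punctuation, and spelling variants, which this sketch does not attempt. The function name is my own.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # substitution or exact match
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


# One substitution plus one insertion against a three-word reference.
print(word_error_rate("a b c", "a x c d"))
```

Because insertions count as errors but the denominator is the reference length, WER can exceed 100% when a system emits a lot of spurious words.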

"Human Language Technology" has made great advances over the past few decades, and many applications are in effective everyday use. But serious issues remain — and lack of reliable generalization is one of them. This applies not only to ASR, but also to every other area, including machine translation and (notably) autonomous vehicle control.

[h/t Neville Ryant]

Philip Taylor said,

December 11, 2019 @ 5:28 am

"Search", "Telephony", "Youtube", etc., all made perfect sense, but what on earth is "Farfield" ?

[(myl) "Farfield" is a standard way to refer to audio recorded using a microphone at a distance from the sound source, which will therefore have higher-level background noise as well as various sorts of multipath effects due to room acoustics. See e.g. here.]

KeithB said,

December 11, 2019 @ 9:39 am

The neural net in my brain does not seem all that brittle. Is there any research as to why not? Is it a combination of rules and learning?

[(myl) Biological neural nets have their own ways of going wrong. But the systems of matrix multiplications and point non-linearities that we sometimes call "neural nets" share only a deeply misleading metaphor with what's in your head. The Greeks thought the brain was like a hydraulic system. Descartes thought the brain was like a clockwork machine. Logic circuits and sets of pattern-action rules called "expert systems" had their day. In a few centuries, "deep learning" systems will seem just as quaint a metaphor as plumbing and clockwork seem to us now. ]

KWillets said,

December 11, 2019 @ 7:58 pm

Youtube Music (owned by Google) has some of the weirdest speech-to-text I've ever seen. I listen to a lot of Korean music, and it attempts to caption the lyrics in a mixture of Korean and English, but 99% of the time it makes no sense in either language.

I collected a few screenshots, which I'll transcribe here:

(from a song with no English:)

[음악] cow to do good to go to a go where we we

5w 보짓 1 to woo woo

(a different song entirely in Korean:)

we r who we r wh we r here we

(a third in Korean:)

사람이 noon 50 to be 아 아아아 각하며

아 아 oh 오 오 오 오 오 오 오

으 ceo lee hoo ooh ooh

It seems to be a mixture of Korean and English phonetic transcription, without ever converging on the original speech. After watching many of these I've come to see a hint of a pattern where a common Korean verb form might sound like "50", and there's the more obvious 사랑/사람 substitution, but there's no reason for these effects to survive even a rudimentary word-frequency check.

Don said,

December 12, 2019 @ 8:57 am

@KWillets – out of curiosity, what’s the verb form that sounds like 50?

KWillets said,

December 12, 2019 @ 11:52 am

When I wrote that I thought it was something like the middle of 하고싶다 being interpreted as …오십…, but I just relistened and found it's from 아쉬워, which isn't even the same vowels.

The phrase is 사랑이 너무 아쉬워, so it got the first syllable and worked its way downhill from there.

The song in this case is 사랑참 by 장윤정; correct lyrics are easy to find, but I believe you have to play it in the Youtube music application to see the speech-to-gibberish.

Brett Reynolds said,

December 13, 2019 @ 8:24 am

Wouldn't they be able to build a bunch of these systems, specialized for various domains, and then build one system to rule them all that identifies the data and sends it to the system best suited to handle it?

[(myl) Variations on this theme have been developed in many areas for many years. The problem is that the space of variation has many dimensions — in the case of speech, there are many aspects of recording characteristics, many aspects of language variety, many aspects of individual variation, many aspects of style and genre, many aspects of communicative interaction, many aspects of topic, and so on. So adaptation would be better than preparation, though application-specific training is almost always necessary for good performance.

The thing about human listeners is that they do a pretty good job of adapting across situations without much or even any specific training or even experience. Our tendency to anthropomorphize machines leads us to assume similar flexibility for AI systems — and this is often a big mistake.]