Eigenfeet, eigenfaces, eigenlinguistics, …

« previous post | next post »

The latest xkcd:

(As usual, click on the image for a larger version.)

I think that this is the first reference to eigenanalysis in a widely-read comic strip. (It's not a first for popular culture: Dudley Eigenvalue, DDS, was an important secondary character in Thomas Pynchon's novel V. But that's another story.)

As the wikipedia page on Eigenvalues and Eigenvectors explains,

The eigenvectors of a square matrix are the non-zero vectors which, after being multiplied by the matrix, remain proportional to the original vector (i.e. change only in magnitude, not in direction). For each eigenvector, the corresponding eigenvalue is the factor by which the eigenvector changes when multiplied by the matrix. The prefix eigen- is adopted from the German word "eigen" for "innate", "own". The eigenvectors are sometimes also called proper vectors, or characteristic vectors. Similarly, the eigenvalues are also known as proper values, or characteristic values.

So why might the prince use eigenvectors to match shoes to their owners? This is a reference, I think, to a well-known pattern-recognition technique, in which eigenanalysis is used to create a lower-dimensional representation of the members of a set of high-dimensional objects like pictures of faces.

In the eigenface technique, we start with relatively high-resolution pictures of faces — say 320×240 = 76,800 pixels. We accumulate a collection of such pictures. If these are grey-scale pictures, we can think of each picture as a weighted sum of 76,800 "basis pictures", each of which has a zero value everywhere except at one of the pixel locations. The coefficients of this weighted sum are then just the picture's original pixel values, and the sequence of coefficients for a given picture is, by trivial definition, a vector in a 76,800-dimensional space. (This seems like a lot of work for no purpose, but the general approach turns out to be extraordinarily useful.)

We'd like to find a way to project these vectors into a much lower-dimensional subspace, such that as much as possible of the differences among the faces in our collection is preserved. With a bit of linear algebra, we can show that we can do this by choosing, as our new "basis pictures", the first few eigenvectors of the covariance matrix of our picture collection. Each of these new basis vectors will be a 76,800-dimensional vector, i.e. in this application a sort of a picture, and one of the pictures in our collection (or more important, a new picture) can now be represented as a linear combination (a weighted sum) of these basis vectors.

But these new basis vectors, instead of being blank everywhere but at one pixel location, as our original basis vectors were, have some distribution of non-zero values over the whole picture area, in some cases looking somewhat like fuzzy faces — hence the term "eigenfaces".

Furthermore, if we order the eigenvectors by the magnitude of the associated eigenvalues, and keep just the first few of them, we can get a perhaps-surprisingly good approximation to the original pictures by adding up a weighted combination of a surprisingly small number of these "eigenfaces" — here say something like 32 or 64 or 128. The weights involved are then a radically smaller representation of each picture — a vector with 128 elements rather than 76,800 elements.

In this new space, it seems that simple measures of similarity between vectors — perhaps as simple as euclidean distance, i.e. the square root of the sum of squared element-wise differences — give us a metric that does a rather good job of identifying faces. We take a new picture, calculate its representation in the "eigenface" space, and then compare it (in that space) to each of the pictures in our collection.

So the idea behind the first xkcd panel is that the prince would create, or have access to, some sort of biometric database of foot representations, and would use it to create a space of "eigenfeet" in which newly-encountered foot shapes could be compared against existing entries, as a suitably dream-nerdy translation of trying the abandoned slipper on every female foot in the kingdom.

What does this have to do with linguistics?

Well, the number of connections may be surprising to those who are not used to the unreasonable effectiveness of mathematics. I'll mention just three of the many ways that people have applied eigenanalysis (or closely related ideas) to speech and language.

The first one goes under the name of "latent semantic indexing" or "latent semantic analysis". In this approach, rather than finding a useful low-dimensional approximation to pictures, we find a useful low-dimensional approximation to documents (and also to words, which are, after all, just one-word documents). The details are somewhat different, involving singular value decomposition, which is a generalization of eigendecomposition to non-square matrices, but the basic idea is the same. You can follow the links to learn more.

The second one is the application of "functional principal components analysis" to tonal analysis. A number of people have tried a number of different ways to approach this in recent years. One interesting example is John A. D. Aston et al., "Linguistic pitch analysis using functional principal component mixed effect models", J. Royal Stat. Soc.: C, 59 (2): 297–317, 2010.

I've given some examples of how to apply these ideas to a database of Chinese sentences (taken from Jiahong Yuan's thesis data), in the background of a problem set found here. You can access the details — include data and code — in the linked page, but I'll give a taste of the results here, in the form of some fragments of Octave (or Matlab) code and a couple of graphs.

Despite enormous variation by speaker, by phrasal position, by tonal context, and by stress or focus status, the mean contours by tone-class are surprisingly well behaved. (In the code below, the variable f0data1 has 7101 rows (different syllables) and 30 columns (the time-normalized pitch values in each syllable); the variable f0inds1 has the same 7101 rows and its columns give the values of various features of each syllable, with the 10th column being the tone class.)

tonemeans=zeros(4, 30);

for tone=1:4

tonemeans(tone,:) = mean(f0data1(f0inds1(:,10)==tone,:));

end

plot(tonemeans')

legend(' 1',' 2',' 3',' 4','location','northwest')

The first few eigenvectors of the covariance matrix look very much like orthogonal polynomials:

[V L] = eig(cov(f0data1));

% Note that eigenvectors are in columns of V

% with eigenvalues ordered from smallest to largest

basis5 = V(:,30:-1:26)';

figure(1); plot(basis5'); title('Five eigenvectors')

We will find that these five eigenvectors do quite a good job of approximating (a sample of) the original contours:

for n=1:6 coeffs = basis5'\f0sample(n,:)'; synth = basis5'*coeffs; subplot(2,3,n); plot(1:30,f0sample(n,:),'b',1:30,synth,'r'); end

Four components do nearly as good a job; three is still OK, and a two-eigentone representation is marginal. A representation in terms of the just the first eigentone would be just a slightly bent line (the first eigenvector, which is the roughly horizonal blue line in the picture above) moved up and down according to its coefficient. Adding the second eigentone (the falling dark-green line in that picture) lets us represent different degrees of overall rise fall; and so on.

The last of my three samples of eigenlinguistics takes us into the area of spectral graph theory, which as wikipedia tells us is not the latest development from Ghostbusters' Laboratories, but rather

… is the study of properties of a graph in relationship to the characteristic polynomial, eigenvalues, and eigenvectors of matrices associated to the graph, such as its adjacency matrix or Laplacian matrix.

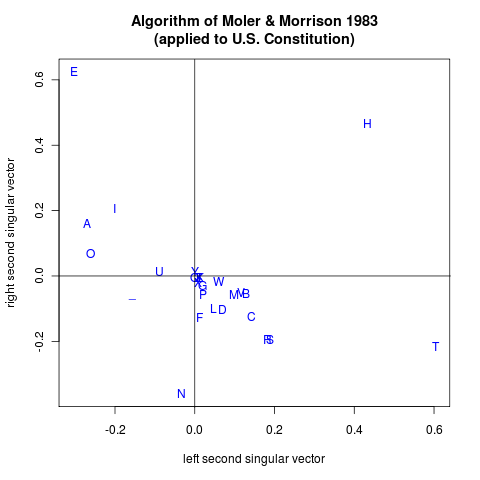

Most of what little I know about this area I learned from Partha Niyogi, who used such ideas extensively in various areas of machine learning, including in an effort to add some mathematical substance to an idea of mine about the development of lexical consensus in a speech community. But the particular case that I'm going to cite here comes from Cleve Moler and Donald Morrison, "Singular Value Decomposition of Cryptograms", American Mathematical Monthly, 1983.

In this case, we're using singular value decomposition to find some simple approximations to the bigram statistics of the letters in (a collection of) documents, with the goal of figuring out which letters are vowels and which are consonants. The first singular vectors tell us something about the overall frequency of individual letters, in somewhat the same way as the first eigenvector of the syllabic pitch contours told us something about the overall pitch level. Because vowels and consonants tend to alternate, in a statistical sense, Moler and Morrison explain that

where "the cryptanalyst's problem" here is just to distinguish vowels from consonants in a simple substitution cipher (a really easy problem for a cryptanalyst to have, but still…).

A sample application of this idea to the text of the U.S. constitution works reasonably well. For simplicity, we map all alphabetic characters to uppercase, and all punctuation and white-space characters to space, yielding this 27×27 bigram frequency table. Then a few lines of R are sufficient to yield this diagnosis, which does indeed separate vowels from consonants:

Vowels:

[1,] "-0.27" "0.161" "A"

[2,] "-0.304" "0.624" "E"

[3,] "-0.201" "0.207" "I"

[4,] "-0.263" "0.07" "O"

[5,] "-0.09" "0.013" "U"

Consonants:

[1,] "0.129" "-0.053" "B"

[2,] "0.143" "-0.124" "C"

[3,] "0.071" "-0.103" "D"

[4,] "0.011" "-0.126" "F"

[5,] "0.018" "-0.03" "G"

[6,] "0.007" "-0.005" "J"

[7,] "0.014" "-0.005" "K"

[8,] "0.046" "-0.1" "L"

[9,] "0.099" "-0.057" "M"

[10,] "0.02" "-0.056" "P"

[11,] "0" "-0.006" "Q"

[12,] "0.183" "-0.194" "R"

[13,] "0.188" "-0.195" "S"

[14,] "0.603" "-0.216" "T"

[15,] "0.116" "-0.05" "V"

[16,] "0.061" "-0.018" "W"

[17,] "0.008" "-0.019" "X"

[18,] "0.008" "-0.003" "Z"

Neutrals:

[1,] "0.432" "0.466" "H"

[2,] "-0.032" "-0.36" "N"

[3,] "0.002" "0.014" "Y"

[4,] "-0.155" "-0.052" "_"

For a more extensive, more accessible (and more linguistic) discussion of graph partioning and spectral graph theory as applied to quasi-phonological analysis, see John Goldsmith and Aris Xanthos, "Three methods for learning phonological categories", University of Chicago Department of Computer Science TR-2008-08.

Jeff Parker said,

March 15, 2011 @ 7:57 am

If I may return to issue eigenvectors and glass slippers, assume a spherical foot, and then measure the stretch needed to resize this to an ellipsoid of the right shape: think length, width, and height.

While you may feel that 3 numbers are not enough to capture the details of your foot, note that 3 measurements is one more than the 2 measurements that are routinely taken in the shoe store.

Of course the shoemaker does not start with a sphere, but with a standard model of a human foot.

MattF said,

March 15, 2011 @ 8:47 am

There's also Google's "Page Rank" algorithm, also known as "the $25,000,000,000 eigenvector."

[(myl) Right — the crucial bit is the role of the eigenvectors of the link graph of web pages. I considered adding that one, but decided not to because (a) the post is already way too long, and (b) the connection to speech and language is less strong than in the other cases.]

Chris said,

March 15, 2011 @ 9:16 am

Here's another eigen-reference in a popular online comic strip:

http://www.qwantz.com/index.php?comic=359

John Cowan said,

March 15, 2011 @ 9:37 am

… and those of us who have deep feet have endless trouble getting shoes that fit, precisely because of this lack of the third dimension in the system. Sometimes a reduction in dimensionality is the Wrong Thing.

[(myl) Well, excessive reduction in dimensionality is *always* the wrong thing.]

Jerry Friedman said,

March 15, 2011 @ 9:39 am

On a peripherally linguistic note, my professor for linear algebra, the late Gilbert Hunt, said "characteristic vector" and "characteristic value" because he couldn't stand combining the German eigen with the Latin vector or the French value. He didn't tell us students what to say, though.

empty said,

March 15, 2011 @ 9:44 am

I think that The Brannock Device would be a good title for a Dan Brown novel.

Dennis Paul Himes said,

March 15, 2011 @ 10:09 am

When I first read the xkcd I thought your post was going to be about subject/verb agreement ("people who falls asleep" vs. "people who fall asleep").

richard said,

March 15, 2011 @ 10:14 am

And in other news, academics in all sorts of disciplines do exactly this: I've woken up while allegedly reading a Winnie the Pooh story, but actually talking about reforestation practices, to be greeted by my daughter's stunned look.

More than once.

(Sigh.)

jmk said,

March 15, 2011 @ 10:19 am

@Jerry: If your professor maintained that vector is Latin (as opposed to English), how could he stand combining it with English characteristic? Shouldn't he have gone all Latin with sua vector or vector proprium?

Or, all German with Eigenvektor.

Dan S said,

March 15, 2011 @ 10:44 am

Note to future antedaters: as of now, the only ghits on "eigenlinguistics" are for this posting, and the reposts and references thereof.

Nightstallion said,

March 15, 2011 @ 12:18 pm

»Jerry: If your professor maintained that vector is Latin (as opposed to English), how could he stand combining it with English characteristic?«

Don't you mean »Ancient Greek characteristic«? ;)

Jerry Friedman said,

March 15, 2011 @ 1:15 pm

@jmk and Nightstallion: I wondered that too, but the sort of person I was couldn't have asked the sort of person he was that sort of question, and nobody else asked either.

Two theories: the interword space was enough, or characteristic felt sufficiently normal and domesticated while eigen- was too obviously German.

@empty: No doubt many of us are waiting for The Eigensanction.

Jerry Friedman said,

March 15, 2011 @ 1:19 pm

@MYL: "If I can't have too much reduction in dimensionality, I'll do without reduction in dimensionality."

Somehow it works better with "too many truffles".

Yerushalmi said,

March 15, 2011 @ 4:55 pm

In response to Chris, here's yet another eigenreference:

http://irregularwebcomic.net/2011.html

Jason said,

March 15, 2011 @ 6:21 pm

So, like, I was deluded to contemplate a major in linguistics because I knew full well I was good at language and terrible at maths? Just as well I never got very far with it.

[(myl) Nah, it's only cartooning where you really need serious math skillz.]

R. Wright said,

March 16, 2011 @ 12:47 am

I'm delighted to hear of others who experience this phenomenon. It amazes me sometimes when I catch myself going astray while reading a book to one of my kids while half-awake. Some of the connections my brain makes in that state are quite honestly eery, and often eye-opening.

D.O. said,

March 16, 2011 @ 1:01 am

For those familiar with Russian and especially Russian fairy tales here's a classic. I am not familiar with any English translation. The title goes something like How three vectors put a determinant to zero.

Scotler said,

March 16, 2011 @ 1:55 am

"It's not a first for popular culture: Dudley Eigenvalue, DDS, was an important secondary character in Thomas Pynchon's novel V."

Works like The DaVinci Code and The Simpsons are popular culture. The output of Thomas Pynchon, although he is said to be a Simpsons fan, is not popular culture. Its inherent difficulty and denseness makes it a priori un-popular culture.

[(myl) In these days of brow inflation, where "a Spiderman sequel, and a 21 Jump Street remake, and a ride at Disneyland are defined as 'highbrow'", it's not clear that the distinction matters very much. And The Crying of Lot 49 is #5,369 in books at amazon.com today, while The Da Vinci Code is #8,559. So the difference in "popularity" is not as crystal clear as you might a priori like it to be.]

Bobbie said,

March 16, 2011 @ 4:17 pm

~ those of us who have deep feet have endless trouble getting shoes that fit~

I have never heard of deep feet before! In which case, I have shallow feet (otherwise known as flat arches).

Hermann Burchard said,

March 16, 2011 @ 5:18 pm

English mathematician could say own values, as in the matrix [[0,-1],[1,0]] has no real own values.

Often told my students the French would NEVER say valeur eigen.

Singular vectors, values were invented by Hermann Weyl, born Elmshorn near Hamburg, and furthered by Gene Golub. He visited GM Res Labs when I just had rediscovered them. Not sure anymore why, but it had to do with data smoothing of automobile surfaces, much to the amusement of my office neighbor, Carl de Boor, who succeeded much better using bicubic splines.

Steve Harris said,

March 16, 2011 @ 6:30 pm

As a mathematician of 30 years, I have never heard a reference to "own values"; it is almost universally "eigenvalues" (with a very rare reference to "characteristic values") in any English-language mathematics I have come across.

(Dream-commentary on "a point on the manifold that's not a 3-sphere" is likely a reference to the fairly recent proof by Perelman of the Poincare' conjecture, stating that a compact simply-connected manifold–what we might dreamily suppose a grasshopper to emulate in abstraction–is necessarily a hyper-sphere.)

I have also found myself continuing to read aloud while falling asleep. I wonder what the state of my linguistic functionality is in that condition–and what that might tell us, if anything.

Spell Me Jeff said,

March 16, 2011 @ 7:37 pm

It took a little squinting, but when I finally figured out the Little Pigs joke, I really laughed my ass off. (Okay, I haven't finally figured it out . . .)

Hermann Burchard said,

March 16, 2011 @ 9:32 pm

@Steve Harris:

It was meant as a suggestion.

Randy E said,

March 16, 2011 @ 11:36 pm

Holy crap. My main research interest is in spectral graph theory. I've taken an interest in linguistics for a long time now. I never that I'd see a connection between linguistics and spectral graph theory. New research directions? Hmmm….

cameron said,

March 17, 2011 @ 9:27 am

French uses propre rather than the German eigen-. Hence in French mathematical contexts you see terms like "valeurs propres" and "vecteurs propres" and so forth. I suppose English mathematicians could have use proper in a similar manner. But "proper" in English is more often used in one of the many figurative senses it has acquired, rather than in the literal sense it still carries in French.

jmk said,

March 17, 2011 @ 12:19 pm

Proper is used in English in quite a few mathematical idioms, most often to indicate something that excludes a trivial case; for example

* a proper subset of a set X is a subset other than the trivial case of X itself;

* a proper divisor of a number n is a divisor other than n itself (1 might also be excluded)

(Somewhat similarly, a strict inequality "<" excludes the possibility of equality, while ≤ does not and is not "strict")

Because of this established usage, it would not be a good idea to overload proper to refer to eigenvalues and eigenvectors.

Robert Furber said,

March 17, 2011 @ 1:54 pm

Presumably the term "latent" in latent semantic indexing refers to an old term for an eigenvalue, a "latent root". I first encountered this terminology in reading an oldish book by Dudley Littlewood, where he refers to eigenvalues and eigenvectors as "latent roots" and "poles" of matrices (with no reference to the more general setting of linear operators).

Eigenvalues are of course important in quantum mechanics, where an eigenvalue generalizes the value of a function, and where the corresponding eigenvector is the state that the "wavefunction" is in when the function has that value.

Hermann Burchard said,

March 17, 2011 @ 7:45 pm

This avant guarde set! Not one who will consider a novelty term, like "own vector?"

BTW, always liked latent root. Not sure it translates into German.

My point about the French liking their valeurs propres was having a little joke on them when teaching my class about eigenvalues.

Jose Lopez said,

March 17, 2011 @ 10:41 pm

This has inspired me to do my linear algebra homework. Thanks!

rgove said,

March 18, 2011 @ 6:05 am

Given how often LL flames against writers who engage in baseless linguistic speculation, you'd better have the evidence to back that up.

David Nash said,

March 19, 2011 @ 7:28 am

Related to Mark's second example (principal components analysis) is multidimensional scaling (MDS), which is also based on eigenanalysis. The speech analysis software Praat includes MDS, but I hadn't known how phoneticians would normally use it. MDS has been used in lexical categorization, language classification and dialectology, and recently in linguistic typology (eg Croft & Poole 2008). I see a nice overview lecture has slides here.

ENKI-2 said,

March 24, 2011 @ 3:17 pm

I had assumed, when I read this, that the joke was about QM, rather than pure mathematics (I've only heard the terms 'eigenvector' and 'eigenvalue' in the context of whether or not waveforms are collapsed). If a shoe came in contact with a foot, the particles would be entangled, meaning that the collapse of particles on one would affect the way that the particles on the other collapse — so you could, in fact, use the eigenvalues to match [it would be very farfetched, of course — your feet entangle with all sorts of other things too].

Walking Randomly » Carnival of Math #76 said,

April 10, 2011 @ 4:13 am

[…] Every now and then I get asked the question 'Eigenvectors….so what are they good for?' I've got a few stock answers but Language Log's Mark Liberman goes the extra mile when he considers how they might have been used in Cinderella and goes on to discuss how they are used in linguistics. Are you suitably intrigued? Check it out in Eigenfeet, eigenfaces, eigenlinguistics, … […]

Weekend miscellany — The Endeavour said,

April 15, 2011 @ 8:06 pm

[…] Eigenfeet, eigenfaces, eigenlinguistics ATLAS: Automatically tuned linear algebra software Data hand tools […]