Brain Wars: Tobermory


Joe Pater's Brain Wars project "is intended to support the preparation of a book directed at the general public, and also as a resource for other scholars":

The title is intentionally ambiguous: “wars about the brain” and “wars between brains”. As well as conveying some of the ideas being debated, their history, and their importance, I plan to talk a bit about the nature of intellectual wars: Why do they happen? What are their costs and benefits? I hope that I’ll finish it by 2021, the 50th anniversary of Frank Rosenblatt’s death.

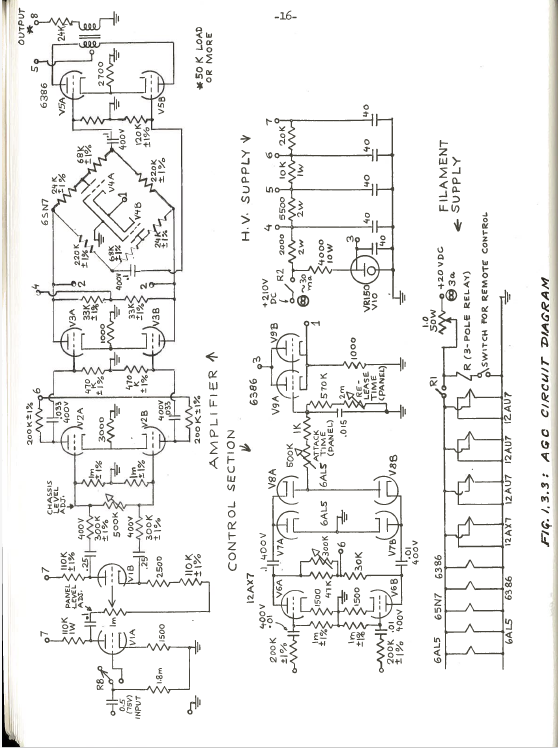

The planned book is about debates in cognitive science, and Joe is currently focusing on Frank Rosenblatt. The most recent addition describes Tobermory, a 1963 hardware multi-layer neural network for speech recognition:

Nagy, George. 1963. System and circuit designs for the Tobermory perceptron. Technical report number 5, Cognitive Systems Research Program, Cornell University, Ithaca, New York.

If you're not familiar with Frank Rosenblatt, you could check his Wikipedia page — or read this Biographical Note from his 1958 paper "Two theorems of statistical separability in the perceptron":

Frank Rosenblatt, born in New Rochelle, New York, U.S.A., July 11, 1928, graduated from Cornell University in 1950, and received a PhD degree in psychology, from the same university, in 1956. He was engaged in research on schizophrenia, as a Fellow of the U.S. Public Health Service, 1951-1953. He has made contributions to techniques of multivariate analysis, psychopathology, information processing and control systems, and physiological brain models. He is currently a Research Psychologist at the Cornell Aeronautical Laboratory, Inc., in Buffalo, New York, where he is Project Engineer responsible for Project PARA (Perceiving and Recognizing Automaton).

Rosenblatt opens that paper with a quote from John von Neumann's Silliman Lectures:

Logics and mathematics in the central nervous system…must structurally be essentially different from those languages to which our common experience refers … When we talk mathematics, we may be discussing a secondary language, built on the primary language truly used by the central nervous system. Thus the outward forms of our mathematics are not absolutely relevant from the point of view of evaluating what the mathematical or logical language truly used by the central nervous system is… Whatever the system is, it cannot fail to differ considerably from what we consciously and explicitly consider as mathematics.

Rosenblatt builds on von Neumann's quote in this way:

What von Neumann is saying here deserves careful consideration. The mathematical field of symbolic logic, or Boolean algebra, has been eminently successful in producing our modern control systems and digital computing machines. Nevertheless, the attempts to account for the operation of the human brain by similar principles have always broken down under close scrutiny. The models which conceive of the brain as a strictly digital, Boolean algebra device, always involve either an impossibly large number of discrete elements, or else a precision in the "wiring diagram" and synchronization of the system which is quite unlike the conditions observed in a biological nervous system. I will not belabor this point here, as the arguments have been presented in considerable detail in the original report on the perceptron. The important consideration is that in dealing with the brain, a different kind of mathematics, primarily statistical in nature, seems to be involved. The brain seems to arrive at many results directly, or "intuitively", rather than analytically. As von Neumann has pointed out, there is typically much less "logical depth" in the operations of the central nervous system than in the programs performed by a digital computer, which may require hundreds, thousands, or even millions of successive logical steps in order to arrive at an analytically programmed result.

Viewed from the perspective of the recent evolution of these ideas, this is partly prescient and partly wrong or even obtuse.

It's certainly true that the dominant current understanding of Artificial Intelligence is that it's properly considered to be applied statistics, as Rosenblatt argued, rather than applied logic, as Minsky believed. But given the dominant metaphor of "depth" in "deep learning", and the racks full of GPUs churning away on current problems in this paradigm, it's ironic that part of the original argument for statistics over logic was based on the argument that whatever brains are doing, it can't involve very many steps. This argument is explicit in von Neumann as well as Rosenblatt — here's more from the Silliman Lectures:

Just as languages like Greek or Sanskrit are historical facts and not absolute logical necessities, it is only reasonable to assume that logics and mathematics are similarly historical, accidental forms of expression. They may have essential variants, i.e. they may exist in other forms than the ones to which we are accustomed. Indeed, the nature of the central nervous system and of the message systems that it transmits indicate positively that this is so. We have now accumulated sufficient evidence to see that whatever language the central nervous system is using, it is characterized by less logical and arithmetical depth than what we are normally used to. The following is an obvious example of this: the retina of the human eye performs a considerable reorganization of the visual image as perceived by the eye. Now this reorganization is effected on the retina, or to be more precise, at the point of entry of the optic nerve by means of three successive synapses only, i.e. in terms of three consecutive logical steps. The statistical character of the message system used in the arithmetics of the central nervous system and its low precision also indicate that the degeneration of precision, described earlier, cannot proceed very far in the message systems involved.
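Von Neumann's "depth" (three successive synapses, i.e. three consecutive logical steps) can be read as the longest chain of connections between input and output in a wiring diagram. A minimal sketch, assuming a small hypothetical diagram with made-up unit names; it is not an anatomical model of the retina:

```python
import math
from functools import lru_cache

# Hypothetical wiring diagram: each unit lists the units it synapses onto.
# Illustrative only; this is not an anatomical model of the retina.
WIRING = {
    "input": ["a1", "a2"],
    "a1": ["b1"],
    "a2": ["b1", "output"],
    "b1": ["output"],
    "output": [],
}

def synaptic_depth(graph, source, sink):
    """Length of the longest chain of synapses from source to sink (graph must be acyclic)."""
    @lru_cache(maxsize=None)
    def longest(node):
        if node == sink:
            return 0
        best = -math.inf  # stays -inf if this node has no route to the sink
        for nxt in graph.get(node, []):
            best = max(best, 1 + longest(nxt))
        return best
    return longest(source)

print(synaptic_depth(WIRING, "input", "output"))  # → 3
```

Because the count follows the longest route, the direct shortcut from `a2` to `output` does not lower the depth.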

It's true that the depth of even "deep" nets these days is limited to one- or two-digit integers, typically 5-10 layers. But the learning process in such systems — and the update of nodes in recursive networks — depends for its success on the residue of millions or even billions of previous steps.
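A toy illustration of the "residue" point for recursive networks, assuming a single scalar recurrent unit h_t = tanh(w*h_{t-1} + u*x_t) with made-up weights (a deliberately minimal stand-in, not an RNN or LSTM from the literature). Each update is one shallow step, yet the first input still leaves a trace in the final state, and it can reach that state only through T sequential updates:

```python
import math

def run_rnn(inputs, w=0.9, u=0.5):
    """Toy scalar recurrent unit (hypothetical weights): h_t = tanh(w*h_{t-1} + u*x_t)."""
    h = 0.0
    for x in inputs:
        # Each update is shallow: one weighted sum followed by a squashing function.
        h = math.tanh(w * h + u * x)
    return h

T = 50
base = [0.0] * T
perturbed = [1.0] + [0.0] * (T - 1)  # change only the very first input

# The difference in the final state is small but nonzero: x_1 influences
# the output only through a chain of T sequential updates.
diff = abs(run_rnn(perturbed) - run_rnn(base))
print(f"influence of x_1 after {T} steps: {diff:.3e}")
```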

By the way, if you're wondering what Nagy's 1963 device has to do with the Isle of Mull, the answer is "nothing". From the cited document's summary:

Tobermory, named after Saki's eavesdropping talking cat, is a general purpose pattern recognition machine roughly modelled on biological prototypes.

That would be this talking cat, who is introduced in a discussion around Lady Blemley's tea-table:

"And do you really ask us to believe," Sir Wilfrid was saying, "that you have discovered a means for instructing animals in the art of human speech, and that dear old Tobermory has proved your first successful pupil?"

"It is a problem at which I have worked for the last seventeen years," said Mr. Appin, "but only during the last eight or nine months have I been rewarded with glimmerings of success. Of course I have experimented with thousands of animals, but latterly only with cats, those wonderful creatures which have assimilated themselves so marvellously with our civilization while retaining all their highly developed feral instincts. Here and there among cats one comes across an outstanding superior intellect, just as one does among the ruck of human beings, and when I made the acquaintance of Tobermory a week ago I saw at once that I was in contact with a "Beyond-cat" of extraordinary intelligence. I had gone far along the road to success in recent experiments; with Tobermory, as you call him, I have reached the goal."

What happens in that story might give you pause for thought if you think about Siri, Google Voice, Cortana, and Amazon Echo listening quietly in the background of your everyday life:

"What do you think of human intelligence?" asked Mavis Pellington lamely.

"Of whose intelligence in particular?" asked Tobermory coldly.

"Oh, well, mine for instance," said Mavis with a feeble laugh.

"You put me in an embarrassing position," said Tobermory, whose tone and attitude certainly did not suggest a shred of embarrassment. "When your inclusion in this house-party was suggested Sir Wilfrid protested that you were the most brainless woman of his acquaintance, and that there was a wide distinction between hospitality and the care of the feeble-minded. Lady Blemley replied that your lack of brain-power was the precise quality which had earned you your invitation, as you were the only person she could think of who might be idiotic enough to buy their old car. You know, the one they call 'The Envy of Sisyphus,' because it goes quite nicely up-hill if you push it."

cameron said,

March 31, 2016 @ 11:32 am

Very interesting. I must say, I really like the word "perceptron".

Gregory Kusnick said,

March 31, 2016 @ 12:28 pm

Perhaps I'm misunderstanding von Neumann, but his claim that "logics and mathematics are similarly historical, accidental forms of expression" sounds like what Dan Dennett calls a deepity: trivially true in a shallow sense, but profoundly wrong in a deeper sense.

In this case it's trivially true that the surface language of mathematics — the particular notation we use for expressing mathematical theorems — is the result of an accumulation of historical accidents. But the theorems themselves (if the word "theorem" is to mean anything) must be independently verifiable by any reasoning being using any (sufficiently capable) system of notation. So the implication that the substance of mathematics is the result of historical accident is profoundly wrong, and smacks of the sort of obtuse postmodernism that insists there are no actual facts, just culturally-laden narratives.

I have to say I'm surprised to hear this sort of nonsense coming from von Neumann, if this is indeed what he's trying to say.

Brett said,

March 31, 2016 @ 12:46 pm

@Gregory Kusnick: I don't think what he's saying is so trivially, obviously true on a shallow level. I have often wondered what kinds of mathematics extraterrestrials might use to represent basic physics, and it seems to me that there is an awful lot of room for differences between how we think of things and how others might. At some level, the representations have to be mathematically equivalent, but there could be profoundly different viewpoints. For example, what if alien mathematicians discovered logarithms before exponents? It may not seem likely to happen, but if it did it would lead to a very different way of thinking about many concepts in algebra and analysis. Yet many people seem to miss these possibilities.

I was at a talk by a famous physicist about searches for extraterrestrial intelligence. Somebody asked him a thoughtful question about how we would communicate with very alien life forms, and he blew it off, saying we would surely share a common scientific language to start with. Specifically, he asserted that they would have to talk about electrodynamics in terms of electric and magnetic fields. Yet it is certainly possible to formulate all of electrodynamics without those fields. There are reasons, historically, why humans invented E and B, but physicists know now that they do not give the most fundamental way of thinking about the subject. Maybe alien scientists might bypass them entirely and use a very different, but equivalent formulation of their theory of electrodynamics.

Gregory Kusnick said,

March 31, 2016 @ 1:00 pm

Brett: "what if alien mathematicians discovered logarithms before exponents?"

But even this way of putting it assumes that logarithms and exponents are facts awaiting discovery, not accidental artifacts of human culture.

Matt Goldrick said,

March 31, 2016 @ 2:04 pm

"But the learning process in such systems — and the update of nodes in recursive networks — depends for its success on the residue of millions or even billions of previous steps."

It seems to me that these are necessary features of many systems. If learning is gradual, and requires the accumulation of many instances of experience (certainly true in many, but not all domains), then it will necessarily involve many steps. Viewed from a discrete-time perspective, any continuous system (as the networks are intended to emulate) is going to consist of many steps (or it can be seen as a smooth path of evolution).

[(myl) Right. But von Neumann and Rosenblatt are echoing an important lesson of 1950-era telecommunications and information processing, namely that the noise in analog systems inevitably accumulates as the number of process steps increases. This was a key driver of the development of digital signal transmission and digital computation. But it seems that there are other kinds of processing where errors don't accumulate, and in some cases where the "noise" in the system is actually crucial to success. As far as I know, the real theory behind this class of computations remains to be written.]
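The accumulation of noise across analog processing steps, and the way digital regeneration avoids it, can be simulated in a few lines. A sketch under simple assumptions (Gaussian noise added at every stage, and a digital stage that restores the signal by thresholding at 0.5); the numbers are illustrative, not measurements of any real system:

```python
import random

def analog_chain(value, stages, noise_sd, rng):
    """Pass a value through analog stages, each adding Gaussian noise that is never removed."""
    for _ in range(stages):
        value += rng.gauss(0.0, noise_sd)
    return value

def digital_chain(bit, stages, noise_sd, rng):
    """Same noise per stage, but each stage regenerates the signal by thresholding to 0 or 1."""
    level = float(bit)
    for _ in range(stages):
        level += rng.gauss(0.0, noise_sd)
        level = 1.0 if level >= 0.5 else 0.0
    return level

rng = random.Random(0)  # fixed seed so runs are repeatable
trials, noise_sd = 2000, 0.05
for stages in (1, 10, 100):
    analog_err = sum(abs(analog_chain(1.0, stages, noise_sd, rng) - 1.0) for _ in range(trials)) / trials
    digital_err = sum(abs(digital_chain(1, stages, noise_sd, rng) - 1.0) for _ in range(trials)) / trials
    print(f"{stages:>3} stages: analog mean error {analog_err:.3f}, digital mean error {digital_err:.3f}")
```

With these settings the analog error grows roughly with the square root of the number of stages, while the thresholded chain essentially never flips, since a flip would need a noise excursion of ten standard deviations.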

Jerry Friedman said,

March 31, 2016 @ 3:00 pm

Gregory Kusnick: I think you are misunderstanding von Neumann because his comparison to Greek and Sanskrit is a little misleading. As far as I can tell from here (https://books.google.com/books?id=k0xjK0iaMz4C&pg=PA237; did you find a more complete text?), he's not saying that there are different truths of mathematics, or anything related to postmodern theory or contingency on human history. He's saying that what our brains do when we do math isn't like what our math comes out as on paper or like what computers do when they compute. It's based on imprecise interactions among nerve cells that we can describe statistically but not algorithmically. Therefore there's an imprecise, non-algorithmic way to do math that's different from our way of doing it. This is what I understand by Prof. Liberman's "other kinds of processing" above.

As Greek and Sanskrit can express the same facts (up to your favorite level of Whorfianism), neuronal math and math on paper can calculate the same results and prove the same theorems—but the difference between the two ways of doing math isn't the result of human history the way the difference between Greek and Sanskrit is.

The paradox, of course, is that math on paper is the result of that quite different operation of neurons.

(I don't know enough about neurobiology to endorse von Neumann's picture of it, though.)

Jerry Friedman said,

March 31, 2016 @ 3:01 pm

Darn it! I read an excerpt from v. N.'s lecture here.

Y said,

March 31, 2016 @ 3:25 pm

Not to give away the ending to Tobermory, but it involves a very unkind disparagement of German verb inflections.

Gregory Kusnick said,

March 31, 2016 @ 4:48 pm

Jerry: OK, I grant that the charge of postmodernism may be unwarranted. But I don't take von Neumann to be saying anything in particular about "what our brains do when we do math" (if by that you mean how mathematical reasoning is represented in the brain). Rather, he's talking about how the kind of signal processing that neurons do (e.g. in the retina) differs from symbolic logic. That much I have no quarrel with, though I'm puzzled why he thinks that somehow reduces symbolic logic to an accident of history, or why he (apparently) finds it surprising that analog signal processing can, in its domain of applicability, accomplish more in fewer steps than Boolean algebra applied to the same problem.

He almost seems to be suggesting (and here again I may be reading too much into it) that there's some alternate universe of computation accessible to neurons but not to formal mathematical systems. Penrose has championed this view, but this is the first I've heard of it from von Neumann.

On a side note, I'd argue that "what our brains do when we do math" is, at least for software engineers, a conscious and deliberate emulation of the formal systems we're trying to manipulate. Our neurons may be doing something quite different at the physical level, but then so are the transistors in a digital computer.

AntC said,

March 31, 2016 @ 5:05 pm

@myl the "noise" in the system is actually crucial to success

Yes. Take so-called 'junk DNA'. 10 ~ 15 years ago, we were being told that humans are 90% earthworms or some such. Now it turns out all that 'junk' is crucial to gene expression. Somehow the genome encodes millions of years of evolution to keep embryo development on track.

So the (apparent) redundancy in human languages is partly historical hangover, but partly there for good signal-processing reasons.

Keith M Ellis said,

March 31, 2016 @ 7:39 pm

@Gregory Kusnick, at the very least, read the Philosophy of Mathematics entry from the Stanford Encyclopedia of Philosophy site. And maybe just resist the habit to invoke "postmodernism" because reasons.

Scott said,

March 31, 2016 @ 8:04 pm

Didn't we discover logarithms before exponentiation? I seem to recall reading that logarithms were studied for a while without being connected to exponents.

Rubrick said,

March 31, 2016 @ 9:22 pm

it's ironic that part of the original argument for statistics over logic was based on the argument that whatever brains are doing, it can't involve very many steps.

By my reading, this involves a misunderstanding. I take Von Neumann's central point to be that what brains are doing can't involve many steps simply because in a fundamental sense analog, statistical processes don't involve any "steps" at all. Asking how many steps the brain takes to reach a conclusion is akin to asking what bitrate a 45rpm vinyl record was recorded at.

[(myl) But von Neumann and Rosenblatt identify a simple analog of the number of logical steps in a digital calculation, namely the number of synaptic connections between the input and the output of a neural calculation — that's what they mean by "depth".]

Regarding "the racks full of GPUs churning away on current problems in this paradigm", I think that's a bit of a red herring. We've just gotten so good at manipulating discrete bits that approximating fundamentally analog systems (in this case networks of neuron-like objects) using highly optimized digital hardware is more effective for us at this point in history than building suitable analog hardware to do the same thing. I think Von Neumann is essentially right. When AlphaGo decides on a move, the trillions of floating point operations needed to drive its networks don't really correspond to anything going on in a biological brain. Only what's happening above that level echoes what's going on in human thought (very excitingly!)

[(myl) But training an analog DNN computer would still involve millions or billions of pseudo-synaptic transmissions in determining the resulting state of any individual pseudo-neuron in the system. And even after training, any recursive architecture (RNN, LSTM etc.) would continue using the residue of thousands of earlier pseudo-neuron values, propagated through the processing of a time series in millions of pseudo-synaptic transmissions.

In fact, a simple version of this issue comes up in training even the simplest early linear perceptrons — while it's true that the final calculation is just a thresholded inner product of inputs and weights, representing a (highly over-simplified) model of a neuron's integration of its synaptic inputs, the weights are the result of many stages of training, each of which involves a "step" in Rosenblatt's sense.]
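The perceptron case can be sketched directly. This is a minimal version of the classic error-correction training rule on a toy problem (logical OR, chosen here for illustration); the learning rate, epoch count, and data are assumptions. Classification is a single thresholded inner product, but the weights it uses are the product of a sequence of training updates:

```python
def predict(weights, bias, x):
    """The 'depth one' computation: a thresholded inner product of inputs and weights."""
    s = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if s > 0 else 0

def train_perceptron(data, n_features, epochs=20, lr=1.0):
    """Error-correction training; returns weights, bias, and the number of updates made."""
    weights = [0.0] * n_features
    bias = 0.0
    updates = 0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)
            if error != 0:
                # Each correction is one sequential training step that builds on all earlier ones.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                updates += 1
    return weights, bias, updates

# Toy linearly separable problem: logical OR of two inputs.
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b, n_updates = train_perceptron(OR_DATA, n_features=2)
print(w, b, n_updates)                      # → [1.0, 1.0] 0.0 4
print([predict(w, b, x) for x, _ in OR_DATA])  # → [0, 1, 1, 1]
```

On this tiny problem only four corrections are needed; on realistic data the number of updates, each depending on all the earlier ones, is far larger.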

flow said,

April 1, 2016 @ 3:49 am

Reading Von Neumann I think he might be hinting at the difference between a digital and a mechanical (analog or otherwise) computer doing some kind of calculation. When you take a mathematical problem and have both a mechanical and an electronic 'brain' solve it, you will find that the steps involved and the concepts that are applied are very different between the two; at least that will be true for a comparison between a digital and an analog computer. But the abstract maths behind those two modes of operation must needs be the same, or at least that's what we believe. On a simpler level, compare multiplying using pen and paper vs using a slide rule.
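The slide-rule comparison can be made concrete: a slide rule multiplies by adding lengths proportional to logarithms, using the identity a*b = 10^(log10 a + log10 b). A sketch, assuming that rounding the summed length to three decimal places stands in for the precision of reading a physical scale:

```python
import math

def slide_rule_multiply(a, b, digits=3):
    """Multiply two positive numbers the way a slide rule does.

    The scale lengths are log10(a) and log10(b); placing them end to end adds them.
    Rounding the total to `digits` decimal places is a stand-in (an assumption)
    for the limited precision of reading a physical scale.
    """
    length = round(math.log10(a) + math.log10(b), digits)
    return 10 ** length

print(slide_rule_multiply(2.0, 3.0))  # close to, but not exactly, 6
print(2.0 * 3.0)                      # exact digital product for comparison
```

The procedure differs from long multiplication, and its answer is only approximate, but the product it approximates is the same.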

Incidentally, research into mechanical integration devices used as ballistic calculators aboard vessels and aircraft led to the term 'dithering' that we are still using today. So as for @myl's remark, "it seems that there are other kinds of processing where errors don't accumulate, and in some cases where the "noise" in the system is actually crucial to success", that's for reals, you can read about it in https://en.wikipedia.org/wiki/Dither#Etymology
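The dithering idea is easy to demonstrate: a coarse quantizer maps a steady input of 0.3 to 0 every time, but adding uniform noise spanning one quantization step before quantizing makes the average of many readings converge to 0.3. A minimal sketch, with the signal value, noise distribution, and sample count chosen for illustration; it is not a model of the historical fire-control mechanisms:

```python
import math
import random

def quantize(v):
    """Coarse quantizer with step 1: round to the nearest integer (halves round up)."""
    return math.floor(v + 0.5)

def average_reading(signal, n, dither, rng):
    """Average of n quantized readings, optionally with dither added before quantizing."""
    total = 0
    for _ in range(n):
        # Uniform noise one quantization step wide, centred on zero.
        d = rng.uniform(-0.5, 0.5) if dither else 0.0
        total += quantize(signal + d)
    return total / n

rng = random.Random(42)
x = 0.3
print(average_reading(x, 10_000, dither=False, rng=rng))  # → 0.0
print(average_reading(x, 10_000, dither=True, rng=rng))   # close to 0.3
```

With this dither a reading rounds up exactly when the added noise is at least 0.2, which happens with probability 0.3, so the noise carries the information the quantizer would otherwise discard.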

Some links for the curious about mid-century (19th and 20th, actually) computing:

https://www.youtube.com/watch?v=NAsM30MAHLg (Introducing a 100-year-old mechanical computer)

https://www.youtube.com/watch?v=s1i-dnAH9Y4 (Mechanical – Basic Mechanisms In Fire Control Computers)

https://www.youtube.com/watch?v=FlfChYGv3Z4 (about Babbage's Analytical Engine)

https://www.youtube.com/watch?v=hIinz4fKGpo (UCLA's Mechanical Brain: 1948)

bks said,

April 1, 2016 @ 7:40 am

An amoeba has zero synapses, yet is quite capable of complex goal directed behavior.

[(myl) Indeed. Similarly a kudzu vine or a fungus spore or an ice crystal.]

KeithB said,

April 1, 2016 @ 9:36 am

AntC:

*Some* of the junk is used that way. Most is still junk. If you were referring to ENCODE, they used an invalid means of judging whether DNA was useful or not.

If you want all the gritty details, refer to Larry Moran's Sandwalk blog.

David L said,

April 1, 2016 @ 12:25 pm

Ice crystals display goal-directed behavior? Whoa, that's heavy, dude.

[(myl) Dig it:

]

AntC said,

April 1, 2016 @ 11:42 pm

cool video ;-)

How does each arm of the flake keep symmetrical with the others? (well, close enough)

Do we posit a neural connection? Same mechanism as arms of a starfish or octopus? Or legs of a spider or a mammal?

AntC said,

April 2, 2016 @ 12:39 am

Thanks @KeithB. I'm no creationist, but it does show how hard it is for the general reader to keep up.

So nowadays how much DNA is reckoned to be junk? Was ENCODE in 2012 over-reaching any worse than Ohno was under-reaching in the 1970s?

How sure can I be that more science won't discover more subtlety in the pattern of DNA? At risk of sounding too teleological/ID-ist why is there all that much complexity if it serves no purpose? I'm more inclined to a narrative in terms of the monkey-brains of 'scientists' than the wastefulness of random mutations and natural selection over millions of years, and in which humans could very easily never have happened.

Talking of which … I see in another Sandwalk piece that "the DNA of humans and bonobos is 98.6% identical in the areas that can be aligned." Why that qualification? What's the significance of areas that can't "be aligned"? Is this as much weasel-words as ENCODE's "biochemical function"? Nevertheless the difference is of the order of 22 million mutations.

The meme that humans are (insert large %) earthworm is now as entrenched in the pop science culture as snowclones or that human communication is (insert other large %) non-verbal or that brains are (ditto) unused [thanks William James].

So what % earthworm is my DNA? And can I get an authoritative answer from somebody who is better-brained than a monkey by significantly more than 1.4%?

David L said,

April 2, 2016 @ 10:50 am

@myl: your definition of goal-directed is clearly different from mine.

Brett said,

April 2, 2016 @ 11:02 am

@Scott: Integer exponents are older than logarithms, and Napier must have understood the logarithm as an inverse of exponentiation when he invented the former in the early seventeenth century. The logarithm as a continuous function on the positive reals was well established a few decades later with the development of calculus in the 1660s and 1670s, and it may actually predate the notion of the continuous exponential function.

This sounds like a topic for a lunch discussion.

Douglas Bagnall said,

April 2, 2016 @ 6:22 pm

This is now months out of date: http://arxiv.org/abs/1512.03385

People have estimated the depth of various neural pathways using this kind of formula: if a well defined neurological reaction takes n milliseconds, and a neuron can't react faster than m milliseconds, then the maximum processing depth is n/m. From memory the answer is often 10 or less. This is of course for things like catching a ball at close range, not complex tasks like composing a sentence or trying to remember where you read a particular claim before deciding to ascribe it to “people”.
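The timing argument is a single division. A sketch with hypothetical figures (the 100 ms and 10 ms values below are placeholders, not measurements):

```python
def max_processing_depth(reaction_ms, min_step_ms):
    """Upper bound on sequential neural stages: total reaction time / fastest per-stage time."""
    return int(reaction_ms // min_step_ms)

# Hypothetical figures, for illustration only: a 100 ms reaction, 10 ms per stage.
print(max_processing_depth(100, 10))  # → 10
```

Since each stage takes at least `min_step_ms`, any chain longer than the bound could not finish within the observed reaction time.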

[(myl) I should have said "is usually limited to…"

But that's really not the point I wanted to make, which is that once you include recursive calculations of various kinds — or the lengthy iterations involved in training — the effective "depth" of processing, in terms of the number of sequential pseudo-synaptic transitions involved in computing the answer, becomes very large indeed. And even though "neural nets" are very different from real brains, this property is apparently shared by both kinds of computation. Wherefore von Neumann was wrong, at least with respect to the general idea that all "neural" computation must involve only a few synaptic transitions.]

Fernando Pereira said,

April 3, 2016 @ 11:31 am

I'm late to the von Neumann circuit-depth party, but I think that what he was saying was both true and subtle, and certainly non-obvious even today (I have a conversation like this every week, it seems). Recall that the notion of a universal computing machine was just 20 years old then, general-purpose digital computers even newer, and that a lot of the debates and papers then were trying to understand the relationship between neural and Boolean computations. JvN was a founder of modern functional analysis, of general digital computation, and of Monte Carlo computation methods, so he brought to the discussion insights that even now might be missed. On to circuit depth. While the MLP universal approximation theorem (https://en.wikipedia.org/wiki/Universal_approximation_theorem) was only formally proved in print in 1989, it's the kind of result that JvN would have figured out and not bothered to write up between two bites of his breakfast, given everything he had been involved in. It's easy to find functions {0,1}^n -> {0,1} that can be approximated pretty well in the l^2 norm, as functions R^n -> R, by an MLP, but would require a very deep Boolean circuit to represent (in practical machine-learning terms, compare MLPs or polynomial kernel machines with decision trees). Of course the MLP implementation would in general be an approximation of the target function, but that's exactly the point of the contrast with "logical" computation. There's an additional point, which I have no idea whether JvN would have considered (but FR might have, by virtue of his perceptron work): there are information-theoretic and computational limits to what kind of function can be learned from a finite sample, and those limits are representation-dependent. Les Valiant's wonderful "Probably Approximately Correct" (http://www.probablyapproximatelycorrect.com/) is what you want to read to flesh out those points.
The fact that practical digital implementations of MLPs, with GPUs, say, have much greater unfolded Boolean circuit depth (because of the iterative implementation of multiplication, among other factors) is irrelevant to this argument, because the lack of availability of linear threshold circuits in silicon is not a fundamental question, but a matter of applied physics and economics. Actual neurons have no such limitation.
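A standard textbook illustration of the shallow-versus-deep contrast (exact rather than approximate, and not necessarily the kind of example Pereira had in mind) is n-bit parity. Two layers of linear threshold units compute it exactly with n hidden units, whereas a circuit of two-input Boolean gates needs depth that grows with n (at least log2 n). A minimal sketch of that well-known construction; the value of n is arbitrary:

```python
from itertools import product

def threshold_unit(weights, inputs, theta):
    """Linear threshold unit: outputs 1 when the weighted input sum reaches theta."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) >= theta else 0

def parity_depth2(bits):
    """n-bit parity from two layers of threshold units (n hidden units, one output)."""
    n = len(bits)
    # Hidden unit k (k = 1..n) fires when at least k inputs are on.
    hidden = [threshold_unit([1] * n, bits, k) for k in range(1, n + 1)]
    # Alternating +1/-1 output weights: the sum is 1 for an odd count of inputs, 0 for even.
    out_weights = [1 if k % 2 == 1 else -1 for k in range(1, n + 1)]
    return threshold_unit(out_weights, hidden, 0.5)

n = 6
ok = all(parity_depth2(bits) == sum(bits) % 2 for bits in product((0, 1), repeat=n))
print(f"matches {n}-bit parity on all {2 ** n} inputs: {ok}")  # → matches 6-bit parity on all 64 inputs: True
```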

[(myl) This is all true and important, but it doesn't change the fact that every bit of the output of a modern "deep learning" system depends on a VERY large number of prior pseudo-synaptic transitions, certainly those involved in training and also those involved in recursive steps for RNNs, LSTMs, etc. And this is not a fact about the implementations but about the algorithms.]

Fernando Pereira said,

April 3, 2016 @ 2:39 pm

@myl: We should distinguish (1) combinatorial circuit depth, which is what is relevant for *inference* and the universal approximation theorem, and what I think JvN and FR were talking about, from (2) unfolded depth of a learning system, which can't be any less than O(N) where N is the number of training instances. (2) is a somewhat bogus construct because it relies on some form of persistent memory (eg. LSTM state vector) as a "channel" from the past to the future in inference and from the future to the past in training. The combinatorial depth needed for training from a single training instance (eg. in backprop) is comparable to the depth needed for inference; that is, the hardware inventories needed for the forward computation (inference) and the backward computation (learning) are comparable in size.
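The claim that training on a single instance needs roughly the same depth as inference can be seen in a hand-written backpropagation pass. A sketch, assuming a chain of scalar tanh units with made-up weights (not a realistic network): the backward pass visits the same layers as the forward pass, in reverse, one step per layer, and a finite-difference check confirms the gradient.

```python
import math

def forward(weights, x):
    """Forward pass through a chain of scalar tanh units, keeping every activation."""
    activations = [x]
    for w in weights:
        activations.append(math.tanh(w * activations[-1]))
    return activations

def backward(weights, activations, grad_out=1.0):
    """Backward pass: revisit the same units in reverse order, one step per layer."""
    grads = [0.0] * len(weights)
    g = grad_out  # gradient of the output with respect to the current activation
    for i in reversed(range(len(weights))):
        a_in, a_out = activations[i], activations[i + 1]
        local = g * (1.0 - a_out ** 2)  # chain rule through tanh
        grads[i] = local * a_in         # gradient with respect to weight i
        g = local * weights[i]          # gradient passed back to the previous activation
    return grads

weights = [0.5, -1.2, 0.8, 1.5]  # hypothetical weights
acts = forward(weights, 0.7)
grads = backward(weights, acts)

# Finite-difference check of one weight's gradient.
eps = 1e-6
bumped = list(weights)
bumped[2] += eps
numeric = (forward(bumped, 0.7)[-1] - acts[-1]) / eps
print(f"{len(weights)} forward steps, {len(weights)} backward steps")
print(f"analytic {grads[2]:.6f} vs numeric {numeric:.6f}")
```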

KeithB said,

April 4, 2016 @ 8:27 am

AntC:

Read Larry Moran for the details – I am hardly an expert, but the ENCODE guys had a very strange definition of functionality: If a piece of DNA could code for a protein it was functional, never mind if the protein was useful or not.

AFAIK, very little of the previously identified junk is useful for expression, I think they have only changed about 10% from junk to non-junk.

Dan Curtin said,

April 4, 2016 @ 12:00 pm

Nicole Oresme studied the compounding of ratios in the late 14th century. We would now view some of this as equivalent to calculating with fractional exponents. A careful study of his work might shed some light on the issue of whether or not (or to what extent) the mathematics is independent of its setting. (A good brief (1.5 pp) intro can be found in Victor Katz, "A History of Mathematics: An Introduction"; look in the index under Oresme. I don't have the current edition at hand.)

Andrew said,

April 7, 2016 @ 3:41 pm

"Deep learning" is still much more "wide" than deep. It's deep by comparison with the one- and two- layer networks that dominated before techniques were developed that enabled the successful training of deeper networks. Those millions and millions of operations are spent exposing the network to a large variety of data, and exposing it to data repetitively, but the operations that it does on a single example, in training or in actual use, are relatively few. The reason GPUs have been so effective at making machine learning faster is because they allow relatively simple operations to be carried out in parallel on thousands of pieces of data at once. The kinds of "recursive" things that are going on are either relatively limited in depth (convolutional nets processing images in ways reminiscent of the visual cortex), or they're of unlimited depth, but that depth is *temporal* — like forming an impression of a passage of text by having it presented a word at a time. The amount of work done goes up with the number of words, but the amount of work *per word* is circumscribed. A trend has been away from using complicated, designed logic to extract features from input before presenting it to learning algorithms, and towards presenting raw data and letting the computer figure out which features are important, which is another decrease in logical depth in the sense that Rosenblatt means. While not perfect, 50 years out, I'd say he was actually pretty on the ball.