Um, there's timing information in Switchboard?

We start with a psycholinguistic controversy. On one side, there's Herbert Clark and Jean Fox Tree, "Using uh and um in spontaneous speaking", Cognition 2002:

The proposal examined here is that speakers use uh and um to announce that they are initiating what they expect to be a minor (uh), or major (um), delay in speaking. Speakers can use these announcements in turn to implicate, for example, that they are searching for a word, are deciding what to say next, want to keep the floor, or want to cede the floor. Evidence for the proposal comes from several large corpora of spontaneous speech. The evidence shows that speakers monitor their speech plans for upcoming delays worthy of comment. When they discover such a delay, they formulate where and how to suspend speaking, which item to produce (uh or um), whether to attach it as a clitic onto the previous word (as in “and-uh”), and whether to prolong it. The argument is that uh and um are conventional English words, and speakers plan for, formulate, and produce them just as they would any word.

And on the other side, there's Daniel C. O'Connell and Sabine Kowal, "Uh and Um Revisited: Are They Interjections for Signaling Delay?", Journal of Psycholinguistic Research 2005:

Clark and Fox Tree (2002) have presented empirical evidence, based primarily on the London–Lund corpus (LL; Svartvik & Quirk, 1980), that the fillers uh and um are conventional English words that signal a speaker’s intention to initiate a minor and a major delay, respectively. We present here empirical analyses of uh and um and of silent pauses (delays) immediately following them in six media interviews of Hillary Clinton. Our evidence indicates that uh and um cannot serve as signals of upcoming delay, let alone signal it differentially: In most cases, both uh and um were not followed by a silent pause, that is, there was no delay at all; the silent pauses that did occur after um were too short to be counted as major delays; finally, the distributions of durations of silent pauses after uh and um were almost entirely overlapping and could therefore not have served as reliable predictors for a listener. The discrepancies between Clark and Fox Tree’s findings and ours are largely a consequence of the fact that their LL analyses reflect the perceptions of professional coders, whereas our data were analyzed by means of acoustic measurements with the PRAAT software (www.praat.org). […] Clark and Fox Tree’s analyses were embedded within a theory of ideal delivery that we find inappropriate for the explication of these phenomena.

I haven't seen any recent defenses of the Clark & Fox Tree position on this issue, which I think is too bad, since the core of their position (that filled pauses are part of the linguistic signaling system, rather than simply symptoms of its malfunction) seems worth preserving. But the debate is apparently still alive, since there are recent publications like Ian Finlayson and Martin Corley, "Disfluency in dialogue: An intentional signal from the speaker?", Psychonomic Bulletin and Review 2012:

[P]articipants were no more disfluent in dialogue than in monologue situations, and the distribution of types of disfluency used remained constant. Our evidence rules out at least a straightforward interpretation of the view that disfluencies are an intentional signal in dialogue.

So I thought I'd report, FWIW, on a Breakfast Experiment™ that looks at the duration distribution of um, uh, and adjacent silences in the Switchboard corpus. This exploration is connected to our recent flurry of posts on UM and UH (see here for some links), but it also underlines the curious disconnect between speech science and speech technology, in ways that I'll point out as they emerge.

Here's what Clark and Fox Tree say about their data:

The primary evidence for our proposal comes from the London–Lund corpus (hereafter LL corpus). It consists of 170,000 words from 50 face-to-face conversations (numbered S.1.1 through S.3.6) from the Svartvik and Quirk (1980) corpus of English conversations. […]

Brief pauses “of one light foot” are marked with periods (.), and unit pauses “of one stress unit” with dashes (-). When we need a measure of pause length, we treat the unit pause as 1 unit long, and the brief pause as 0.5 units long, so “. -” is a 1.5 unit pause, and “- - -” is a 3 unit pause. […] Prolonged syllables are marked with colons (:), as in “u:m”. Uh and um were sometimes pronounced in brief or normal form, which we will write “uh” and “um”, and other times in prolonged form, which we will write “u:h” and “u:m”. The surreptitiously recorded speakers produced 3904 fillers (“uh” 898, “u:h” 1213, “um” 530, “u:m” 1263).

They add a few words about some other data sources.

For auxiliary analyses, we draw on an answering machine corpus (AM corpus), the switchboard corpus (SW corpus), and the Pear stories (Pear corpus). The AM corpus consists of 5000 words in 63 calls to telephone answering machines, section S.9.3 in the full computerized version of the LL corpus. It contains only 319 fillers (“uh” 69, “u:h” 166, “um” 6, “u:m” 78). The SW corpus is a 2.7 million word corpus of telephone conversations (Godfrey, Holliman, & McDaniel, 1992). It marks uh, um, and sentence boundaries, but not prolongations or pauses; it contains 79,623 fillers (uh 67,065 and um 12,558).

This is strange. As Clark and Fox Tree note, the Switchboard corpus is about 2700000/170000 = 16 times bigger than the portion of the London/Lund corpus that they used. And while it doesn't have symbolic notations like "unit pause" and "prolongation" and so on, even the original 1993 publication had word-level time alignments, which offer a detailed phonetic account of pauses and prolongations. An improved version of these alignments was prepared for the WS'97 workshop at Johns Hopkins, and the alignments were checked and corrected at Mississippi State in 2001-2003. The 1997 version of the alignments was certainly available when Clark and Fox Tree were preparing the Cognition paper.

So it puzzles me that Clark and Fox Tree didn't use this information. I'll ask them, but perhaps the reason is that the cultural gap between the world of speech technology (from which Switchboard comes) and the world of speech science (including psycholinguistics) was even larger in 2002 than it is now. They should have been aware that word-level time-marking existed for Switchboard, since Godfrey et al. 1992, which they cite, has a section titled "Time-aligned Transcription", which states that

The SWITCHBOARD conversations are also time aligned at the word level. The time alignment is accomplished using supervised phone-based recognition, as described in a companion paper by Wheatley. This process produces phone by phone time marking, which are then reduced to a word by word format for publication with the transcripts.

And it's even more puzzling that O'Connell & Kowal based their 2005 critique only on duration measurements from "six media interviews of Hillary Clinton". It's an interesting collection, but it's fairly small (about 100 times smaller than Switchboard, by the metric of the number of UMs and UHs) and limited to 7 speakers, with Clinton providing about 90% of the UMs and UHs. By 2005, the Mississippi State hand-checked alignments had been available for several years, so no painful acoustic measurements in Praat were necessary. So why not use Switchboard, in addition to if not in place of the interview dataset?

Based on the creation times of the files involved, my work on this material was spread over two periods, one of 34 minutes and another of 7 minutes. This included more than a few minutes of tending to a miserable chest cold. I say this not to boast, but to underline the fact that once you know where the files are (and they can be freely downloaded from the ISIP site), it doesn't take a lot of work to extract the counts and distributions shown below. And given that the issue raised by the cited papers is still a live one, as the Finlayson & Corley 2012 paper suggests, it's surprising that in the dozen years since Clark and Fox Tree 2002, no one (as far as I can tell) took the necessary 41 minutes.
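To give a sense of how little code is involved, here is a minimal sketch of the context classification. It assumes each line of a word-alignment file holds an utterance ID, a start time, an end time, and a token, and that silences appear as a `[silence]` token; both are assumptions about the file layout that should be checked against the actual files. The toy records are made up for illustration, and treating the file edges as silence is a simplification.

```python
from collections import Counter

SILENCE = "[silence]"      # assumed silence token; check against the real files
FILLERS = {"um", "uh"}

def parse_line(line):
    """Split one assumed 'utt_id start end token' record."""
    utt_id, start, end, token = line.split()
    return utt_id, float(start), float(end), token.lower()

def context_counts(lines):
    """Count each filler by whether silence or speech precedes and follows it."""
    records = [parse_line(line) for line in lines if line.strip()]
    counts = Counter()
    for i, (_, _, _, token) in enumerate(records):
        if token not in FILLERS:
            continue
        # Simplification: the start and end of the file count as silence.
        before = records[i - 1][3] if i > 0 else SILENCE
        after = records[i + 1][3] if i + 1 < len(records) else SILENCE
        left = "SILENCE" if before == SILENCE else "SPEECH"
        right = "SILENCE" if after == SILENCE else "SPEECH"
        counts[(left, token.upper(), right)] += 1
    return counts

# Made-up records in the assumed layout, not real Switchboard data.
toy = [
    "utt1 0.00 0.50 [silence]",
    "utt1 0.50 0.90 um",
    "utt1 0.90 1.40 [silence]",
    "utt1 1.40 1.60 i",
    "utt1 1.60 1.85 uh",
    "utt1 1.85 2.10 think",
]
counts = context_counts(toy)
for context, n in sorted(counts.items()):
    print(" ".join(context), n)
```

The durations of the fillers and of the adjacent silences fall out of the same records as `end - start`.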

Here are the counts of UM and UH in Switchboard (from the ISIP transcriptions), with a breakdown of how many are preceded or followed by a silent pause:

| Context | Count | Share |
| --- | --- | --- |
| all UM | 21076 | |
| SILENCE UM SILENCE | 8251 | 39% |
| SPEECH UM SILENCE | 7358 | 35% |
| SILENCE UM SPEECH | 2938 | 14% |
| SPEECH UM SPEECH | 2521 | 12% |
| all UH | 68991 | |
| SILENCE UH SILENCE | 9231 | 13% |
| SPEECH UH SILENCE | 25150 | 36% |
| SILENCE UH SPEECH | 12681 | 18% |
| SPEECH UH SPEECH | 21696 | 31% |

UM is followed by a silent pause 74% of the time, whereas UH is followed by a silent pause only 49% of the time.

In the other direction, UH is embedded in speech without silence on either side 31% of the time, whereas UM is in that situation only 12% of the time.
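For readers who want to check the arithmetic, the two summary figures can be recomputed from the raw counts in the table. The sketch below uses only those counts; the function and variable names are mine. Computed from the raw counts, the followed-by-silence shares come out at about 74.1% for UM and 49.8% for UH, so the 49% figure corresponds to adding the table's rounded shares (13% + 36%).

```python
# Counts copied from the table above; keys are (before, after) contexts.
TOTALS = {"UM": 21076, "UH": 68991}
CONTEXTS = {
    "UM": {("SILENCE", "SILENCE"): 8251, ("SPEECH", "SILENCE"): 7358,
           ("SILENCE", "SPEECH"): 2938, ("SPEECH", "SPEECH"): 2521},
    "UH": {("SILENCE", "SILENCE"): 9231, ("SPEECH", "SILENCE"): 25150,
           ("SILENCE", "SPEECH"): 12681, ("SPEECH", "SPEECH"): 21696},
}

def followed_by_silence(filler):
    """Return (count, share) of fillers with a silent pause right after."""
    n = sum(c for (_, after), c in CONTEXTS[filler].items() if after == "SILENCE")
    return n, n / TOTALS[filler]

def embedded_in_speech(filler):
    """Return (count, share) of fillers with speech on both sides."""
    n = CONTEXTS[filler][("SPEECH", "SPEECH")]
    return n, n / TOTALS[filler]

for filler in ("UM", "UH"):
    n_sil, share_sil = followed_by_silence(filler)
    n_emb, share_emb = embedded_in_speech(filler)
    print(f"{filler}: {n_sil} followed by silence ({share_sil:.1%}), "
          f"{n_emb} embedded in speech ({share_emb:.1%})")
```

The followed-by-silence counts (15609 for UM, 34381 for UH) are the same totals quoted for the pause-duration histogram.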

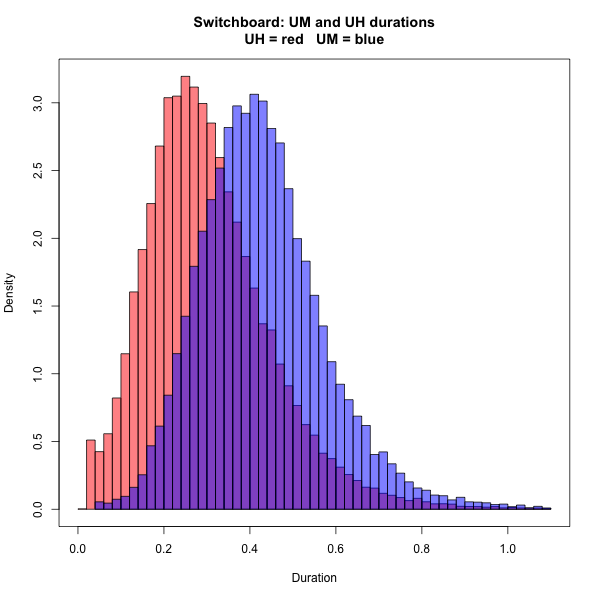

Here's the distribution of durations for the UM and UH themselves. UM is a bit longer — median of 417 msec. vs. 286 msec. for UH, or 131 msec longer, which is about what's expected for a word-final nasal murmur. But there's quite a bit of overlap:

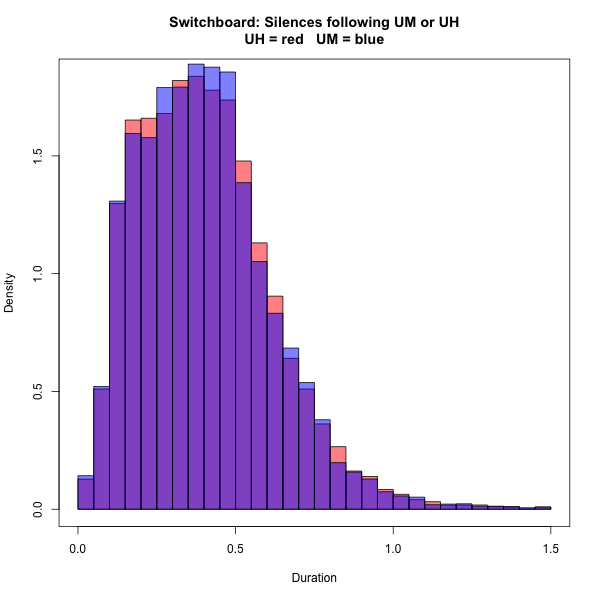

Here's the distribution of durations for silent pauses following UM or UH (15609 silent pauses after UM, 34381 silent pauses after UH):

Again, pauses after UH are in red, and pauses after UM are in blue, but purple, which is the region of the histogram where the two distributions overlap, covers nearly all of the plot. I was surprised to see that the distributions are so nearly identical, and wasted a few minutes looking for a bug in my code.
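One way to put a number on how much of the plot is purple is the histogram overlap coefficient: normalize each histogram so it sums to 1, then add up the smaller of the two values in each bin. A value of 1 means the histograms coincide and 0 means they share no bins. This is a sketch of that idea, not the calculation behind the plot; the bin width, the number of bins, and the example durations (in seconds) are arbitrary choices of mine.

```python
def histogram(values, bin_width, n_bins):
    """Normalized histogram: fraction of values in each fixed-width bin."""
    counts = [0] * n_bins
    for v in values:
        # Values beyond the last bin edge are clamped into the last bin.
        idx = min(int(v // bin_width), n_bins - 1)
        counts[idx] += 1
    total = len(values)
    return [c / total for c in counts]

def overlap(a, b, bin_width=0.1, n_bins=30):
    """Overlap coefficient: sum over bins of the smaller normalized height."""
    hist_a = histogram(a, bin_width, n_bins)
    hist_b = histogram(b, bin_width, n_bins)
    return sum(min(x, y) for x, y in zip(hist_a, hist_b))

# Made-up pause durations in seconds, for illustration only.
after_um = [0.05, 0.15, 0.25, 0.45]
after_uh = [0.05, 0.15, 0.35, 0.45]
print(overlap(after_um, after_uh))
```

Because both histograms are normalized, the measure is not affected by there being many more pauses after UH than after UM.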

O'Connell and Kowal argued that in their corpus of Hillary Clinton interviews,

the distributions of durations of silent pauses after uh and um were almost entirely overlapping and could therefore not have served as reliable predictors for a listener.

As we've just seen, in the Switchboard corpus, which is 100 times larger and much more representative, the distribution of durations of silent pauses after uh and um is about as close to identical as real-world distributions ever are. So why in the world would O'Connell and Kowal have failed to make use of this freely-available and easy-to-analyze dataset? It can't quite be true that they didn't know of its existence, because Clark and Fox Tree mention it, though falsely implying that timing information wasn't available for it.

I don't mean to criticize these four psycholinguists in particular. Clark, Fox Tree, O'Connell, and Kowal are all major figures in the field, who have made important contributions. But my point is that the field of psycholinguistics has been culturally estranged from research in speech technology for several decades. So it's not a surprise that this disciplinary blind spot afflicts even these four major researchers.

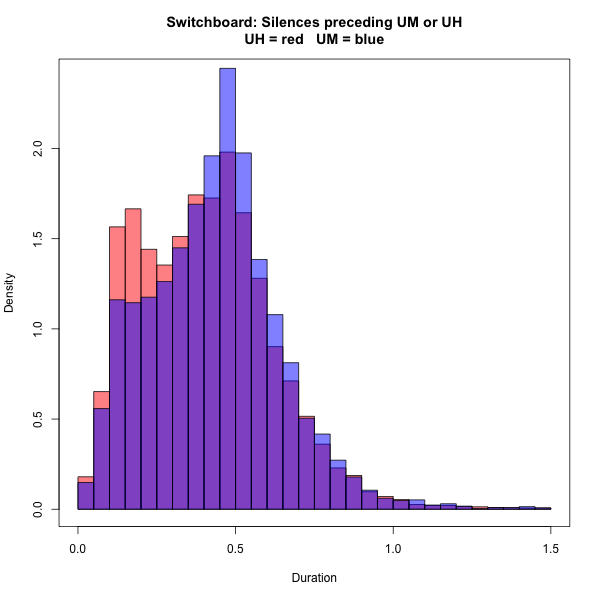

The distribution of durations of pre-filled-pause silences in Switchboard is also nearly identical for UM and UH. This time, the distribution is clearly bimodal, and there's a small shift from the shorter to the longer mode in UMs compared to UHs. But the durations of the pauses preceding UM and UH still clearly come from the same distribution:

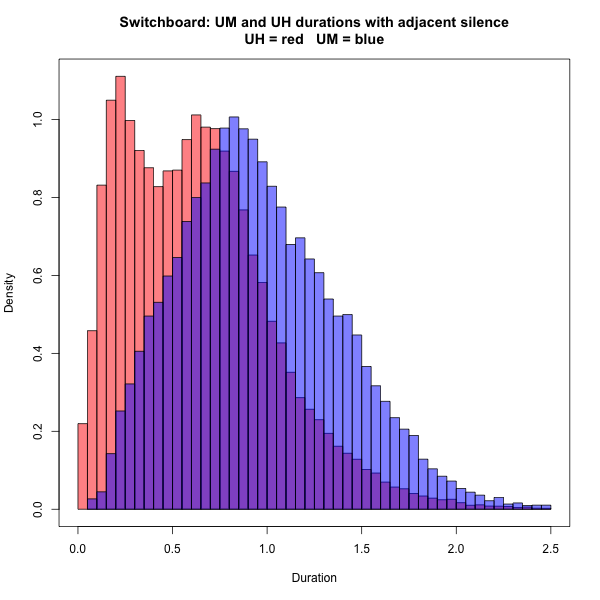

Finally, here's the distribution of summed durations for preceding silence (if any), UM or UH, and following silence (if any). UH is clearly bimodal, with a distribution of short interventions representing UH without silence, and a distribution of longer periods corresponding to UH plus one or two silences. In the case of UM, the distribution is clearly "fattened" by a similar effect, but there's not such a clear multimodal structure:

Overall, these results echo the argument that O'Connell & Kowal made, but add the authority of a 100-times-larger and much more representative dataset. I'm not convinced that their conclusion thereby follows, at least in its strongest form. It seems likely to me that UM and UH have somewhat different (though perhaps overlapping) communicative functions. But I AM convinced that psycholinguists need to learn about the tools and resources available in the technological world.

Dre said,

October 5, 2014 @ 9:19 am

I haven't been following UH-UM too closely, I admit, but — has anyone considered whether there may be a mechanical / articulatory component? Just as "a" versus "an" arises in part due to the difficulty of articulating two vowels consecutively, maybe the choice of "uh" vs "um" is determined, in part, by whether "uh" + [phoneme] or "um" + [phoneme] is easier to say. This isn't to discount the notion that "short" versus "long" delay plays a part in the choice, just that there may be a phonetic-context dimension as well.

Presumably this analysis could be partially performed using automatic examination of a corpus, but it might require a human to determine the phoneme after the uh/um utterance if the pronunciation is ambiguous in print.

[(myl) This is a good idea, though it seems unlikely to explain the sex and age effects, and of course it doesn't apply to the 74% of UMs and 49% of UHs followed by silent pauses. For the rest, on the face of it there's a small but real effect. For UM, there are 5467 instances not followed by silence, and of these, 2039 (37%) precede a word that starts with a vowel. For UH, there are 34520 instances not followed by silence, and of these, 10526 (30%) precede a word that starts with a vowel.

To get a glimpse of how discourse-level and phonetic-level factors may interact, here are counts for the ten commonest post-UH words in Switchboard (restricting attention to cases where there is no silent pause intervening), with normalized frequency per thousand words in the middle column:

And the same thing for UM:

]

Bessel Dekker said,

October 5, 2014 @ 9:59 am

Not to spawn a red herring, but I'm not convinced that the form "a" is due to any difficulty of articulating two consecutive vowels. It seems to be the other way around: "an" was weakened to "a", except where the "n" was protected by a following vowel. This protection is now failing, esp. in AmE, it seems — that variety at least has no problem with two consecutive vowels.

Likewise, is there really any evidence that "uh" is easier to pronounce than "um", or conversely, if followed by a phoneme? And if so, how is this case different in that the ensuing phoneme might be either a vowel or a consonant?

Dre said,

October 5, 2014 @ 10:45 am

What I was trying to get at with the a/an example was that there's a phonetic-based distinction between the two that has to do with ease of articulating the next sound — can't say I'm actually familiar with the evolution of "a" and "an," so it could well be that the evolution happened the other way around from how I expressed it in my first comment.

As for uh and um, I guess to be clear, I'm not trying to suggest that the choice of "um" is the cause of a following pause — I definitely think there's credence, in a plurality of cases, for "um" to be a pragmatic marker for "hey, longer pause coming up". But in the cases where there is no/minimal pause after the uh/um utterance, maybe the choice of uh or um is related to the following sound, in a similar way to the selection of a/an.

This seems plausible inasmuch as it's easy to turn an "uh" into an "um" by just doing "uh"+[m], so if the person were preparing to utter the next sound, maybe the [m] gets inserted as a way to smooth out the transition. The pattern that I think we would want to look for is whether there's some class (or classes) of phoneme that are more likely to follow "uh" than to follow "um", or vice versa — specifically in the cases where the pause after um/uh is minimal.

From MYL's response, the palette of subsequent words is interesting and pretty disjoint (I like how the fifth most common utterance after "uh" is "uh"), but my undergrad-level linguistic chops are not robust enough to really see a pattern.

Nor would I expect such a distinction to account for the other interesting patterns that have been pointed out — gender, age, etc. But I think there's a well-defined class of uh/um utterances we could look at where the subsequent phoneme might play a role in the choice between uh and um.

OK, so, refined research question: in the space of utterances of "um + (no pause)" and "uh + (no pause)", is there a class/classes of phoneme more likely to follow "uh" than "um" or vice versa?

Bessel Dekker said,

October 5, 2014 @ 11:49 am

Dre, your "What I was trying to get at with the a/an example was that there's a phonetic-based distinction between the two that has to do with ease of articulating the next sound" seems to me unfounded, as I indicated (even disregarding such strings as four-syllable "maaari" in Tagalog). So I still disagree. But no matter. This is rather tangential.

Dre said,

October 5, 2014 @ 11:53 am

Oh, I'm definitely not arguing that it's impossible or even "hard" in any meaningful way to make multiple, distinct, consecutive vowel sounds. Just that there are certain phoneme patterns that are more preferred (at least in English) which the "a/an" dichotomy serves to facilitate.

D.O. said,

October 5, 2014 @ 7:23 pm

I guess we have a new story. "Um" lasts significantly longer than "uh" (obvious), more often is preceded by silence (53% vs. 31%) and difference is even more dramatic if "um/uh" are embedded in silence and when preceded by silence "um" tends to follow a slightly longer silence. "Um" is also significantly rarer.

Michael Watts said,

October 5, 2014 @ 9:31 pm

Our evidence rules out at least a straightforward interpretation of the view that disfluencies are an intentional signal in dialogue.

I have a problem with that conclusion. Here's a snatch of my own conversation with another person:

—

How is it? Someone else just complained to me that they were learning to drive and they hated it

Maybe driver's ed in China is more, um, intensive than it is in America :/

—

This took place over wechat, a textual medium, so the use of "um" can't signal a pause, since pauses don't exist. Rather, the "um" is there because I felt it was necessary for other purposes – in this case, signaling euphemism or understatement.

Haamu said,

October 5, 2014 @ 9:48 pm

A pause "of one light foot" would be a brief pause indeed.

Rubrick said,

October 6, 2014 @ 6:25 pm

A tangent on the topic of "um", "uh", and speech technology: I often use speech-to-text on my iPhone, and I like to use "um", "uh", "huh", "ah" and the like in text messages. It seems impossible to convince my phone that I really actually do want to say something like "Uh, are you sure that's a good idea?" It always tries to replace "Uh" with a not-especially-similar-sounding word, or leaves it out entirely.

GeorgeW said,

October 7, 2014 @ 4:15 pm

I use various speech features in emails and text messages like hmm, ah, huh, wow, oops and the like. However, I am confident I have not used uh and I don't think I have used um. But I feel like um would be more likely than uh.

JS said,

October 7, 2014 @ 11:01 pm

I use English "ummm"/"uhhh" to teach learners of Mandarin Chinese to produce the first/level tone — if these are "words" of English, they are two of the very few that feature something we might call lexical tone. Incidentally, questioning "huh?" > 2nd tone, (some varieties of) cute baby "awwww" > 3rd tone, "huh!" of recognition/discovery > 4th tone. And you can create some tonal minimal pairs/sets with various possible contours of "hmm", etc…

JS said,

October 7, 2014 @ 11:14 pm

@Michael Watts

The possibility that "um" could relate in some way to the length or nature of a pause surely isn't refuted by its use in text(ual representations of speech)… yours is even surrounded by commas, also useful orthographic pointers to the same feature.

Tangentially, I am very fond of the Chinese texting convention (no idea how widespread?) of setting off by space exhortatory (= "ya hear?", as opposed to exclamatory, etc.) sentence final "a" — thus 听话 啊 and the like.

Michael Watts said,

October 8, 2014 @ 4:27 am

@JS

The fact that "um" and related words appear in textual communications disproves the idea that they're unintentional; everything appearing in text is intentional (or a typo — different question).

Note that what I took issue with was the conclusion "our evidence rules out […] the view that disfluencies are […] intentional". Saying you've ruled out the idea that disfluencies such as "uh" are intentional signals is ludicrous on its face, since it's trivial to demonstrate that people use them on purpose and, as Rubrick shows, are distressed when they can't use them, even in non-spoken contexts. That's sufficient to prove immediately that, while they may be produced inadvertently on occasion, they are intentional signals.

Michael Watts said,

October 8, 2014 @ 4:40 am

Expanding further: since it takes less than one second of armchair rumination to conclusively prove that "uh" and "um" are produced intentionally, it disturbs me to see a published paper conclude that they've ruled out the theory that "uh" and "um" are produced intentionally. It reminds me of when I read an economics blogger refer, by name, to a "theorem" stating that coins and bills of the same denomination can't circulate together (I can't find this now, but if a reader happens to recognize this theorem and can let me know the name, I'd appreciate it). The problem with the theorem is that it's easy to observe real-world economies where bills and coins of the same value are both in common circulation. I don't understand the process where something with easily-observable counterexamples gets published as a "theorem", but I don't like it, and I don't like Finlayson and Corley's conclusion here.

Breffni said,

October 8, 2014 @ 3:44 pm

Michael Watts: what you're describing is not what these posts are talking about. 'Uh/um', as markers of irony, only mimic the genuine hesitation markers that Finlayson and Corley (etc.) are talking about, but they're functionally distinct. To take your written example, "Maybe driver's ed in China is more, um, intensive than it is in America", that's roughly paraphrasable as "how shall I put this": it mimics a word search, as though you're having a hard time finding the most diplomatic phrasing. You could have used it that way in speech too. But a genuine hesitation in speech might have occurred elsewhere with no ironic intent: "Maybe, um, driver's ed in China is more intensive than it is in America". And you clearly wouldn't write that.

You can make an analogy with throat-clearing. You can make a throat-clearing noise to attract attention or express disapproval in speech, or use "ahem" for some analogous effect in writing, but that's not to say it's the same phenomenon as actually clearing a congested throat.

Michael Watts said,

October 8, 2014 @ 8:43 pm

Breffni:

You're too generous to Finlayson and Corley. They make no distinction between intentional and involuntary "um" and "uh"; they even cite, in their introduction, a paper suggesting that "um" and "uh" are not like other disfluencies. They devote much of their introduction to pointing out that, while disfluencies do fulfill communicative purposes, that doesn't mean that their production is voluntary, any more than fire chooses to produce the smoke that signals its presence (that example is theirs). They continue the analogy to fire:

Moreover, although disfluencies affect listeners, both immediately and in the longer term (e.g., Arnold, Tanenhaus, Altmann, & Fagnano, 2004; Corley et al., 2007; Fox Tree, 2001; Swerts & Krahmer, 2005), one cannot conclude from this that speakers use them to communicate, any more than the fact that a hand is withdrawn from the flame proves that the fire uses pain to affect behaviour. Although evidence is consistent with the view that disfluencies are uttered with communicative intent, it remains possible that they are simply a consequence of delays to the speech plan.

You know what you can use to conclude that speakers use pause fillers to communicate? The explicit insertion of pause fillers into written dialogue. There is literally zero possibility that written "um"s are a mere consequence of hiccups in the speech plan. F&C take no note of this, although it would have been quite common at the time they wrote their 2012 paper.

Their experiment sets up a situation where disfluencies overwhelmingly arise from the speaker's actual difficulty in coming up with a word. They investigate whether, in such a circumstance, disfluencies are more common in attempting to name difficult-to-name objects such as "llama" (yes) and whether the presence of a second person in the room affects the number or kind of disfluencies produced (no). Since the second person doesn't affect the disfluencies produced in the experiment, they reject the hypothesis mentioned in their intro that disfluencies may be intentionally produced for communicative purposes.

But this is an artifact of their experiment. If, instead of naming innocent but esoteric objects, they had their subjects e.g. read aloud from sex scenes in romance novels, I bet they'd find that disfluencies increased in the presence of an observer. I also bet they'd need to add another category of disfluency — laughter — to their experiment, which in itself tells us that they're not providing an adequate treatment of the subject (the five categories of disfluency they recognize are silent pause, um, uh, prolongation, and repetition; false starts are mentioned in their introduction but apparently, like laughter, do not occur in their experiments).