31% more meaningless: Because algorithms

In "Whom loves ya?", 2/12/2014, Geoff Pullum riffed on one of the outcomes of "a large-scale statistical study of what correlates with numbers of responses to online dating ads", namely that "men who use 'whom' get 31% more contacts from opposite-sex respondents".

It suited Geoff's rhetorical purposes to take this number at face value, but I wondered about where it really comes from, and what if anything it means.

That "large-scale statistical study" was reported in just one place: Caitlin Roper, "How to Create the Perfect Online Dating Profile, in 25 Infographics", Wired 2/3/14. Here's what we learn about the design of the study:

Here at WIRED, we couldn’t help but think there might be a better way to optimize your chances, so we pulled massive amounts of data from OkCupid and Match.com, searching for tips that might help you master Internet dating and find someone awesome.

Call it the algorithm method: Working with data crunchers at the dating sites, we put together 25 tips for writing the perfect profile, selecting the right photo, and really understanding your audience. We analyzed the 1,000 most popular words on both men and women’s profiles, tabulated the most popular movies and TV shows, and crunched stats on what people consider their best feature vs. what features their potential dates are attracted to.

The "algorithm method"? Even Science magazine wouldn't accept that description as an appropriate Methods section. Ms. Roper does tell us more:

We couldn’t have done any of this without the help of the data maestros at Match and OkCupid: Christian Rudder, cofounder and president of OkCupid, and Jim Talbott, director of consumer insights at Match.com. These guys and their data teams ran queries of all kinds and pulled spreadsheet after spreadsheet of information to try and answer our strange questions. We also needed OkCupid to get permission from their users to enable us to publish those popular profile pics. In short, we couldn’t have scraped all this data and derived this advice without the help of these talented data crunchers who are as dedicated to data analysis as we are.

But this is equally empty. Here's the infographic illustrating what Roper calls "One of the clearest findings: Higher-brow preferences make you sexier":

We don't learn the overall size of the samples of profiles and raters; or how the "attractiveness ratings" were calculated; or what the relationship was between the number of profiles mentioning a movie or series and the (average?) attractiveness rating of those profiles; or what the error bars on the attractiveness ratings were. The oddest thing about this "result" is the interpretation in terms of brow height: In what universe is Homeland highbrow and Dr. Who lowbrow?

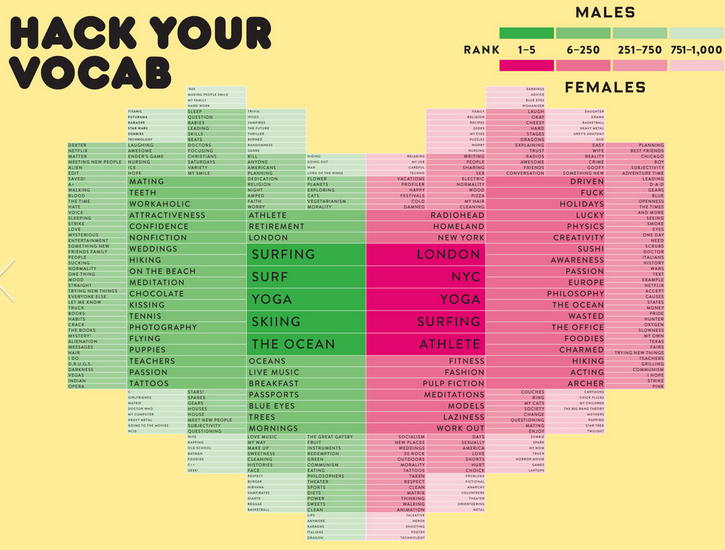

The core numerical analysis is much more puzzling — the lead infographic is a chart that "shows 380 of the top 1000 most commonly used words in profiles on OkCupid.", where "the color-coding shows the average attractiveness rating of the people using those words":

But the choice can't have been simply "the top 1000 most commonly used words in profiles on OkCupid", since that list would have been dominated by things like "the" and "of". And in fact this is not a list of "words" at all, since it includes phrases like "the ocean", "on the beach", "work out", and "trying new things". So maybe they have a method for finding significant collocations? Or maybe someone just skimmed a bunch of profiles and wrote down a list of words and phrases that caught their eye? And then maybe combined item frequency with subject's attractiveness ratings, or with some quantity derived from number of responses, or …?

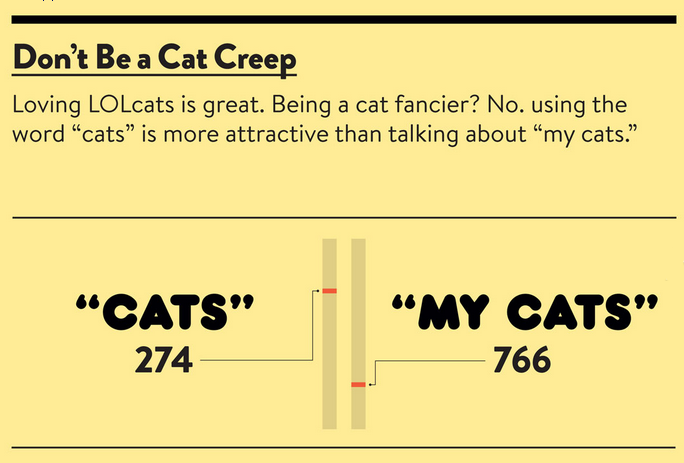

One of the other infographics adds to the uncertainty:

Were profiles that include the string "my cats" excluded from the attractiveness averaging of profiles that included the string "cats"? Is this the male list, or the female list, or both combined? And was the ranking of features actually determined by the average attractiveness rating of profiles containing the feature in question, or was some sort of regression technique used to try to sort out the differential effects of correlated features?

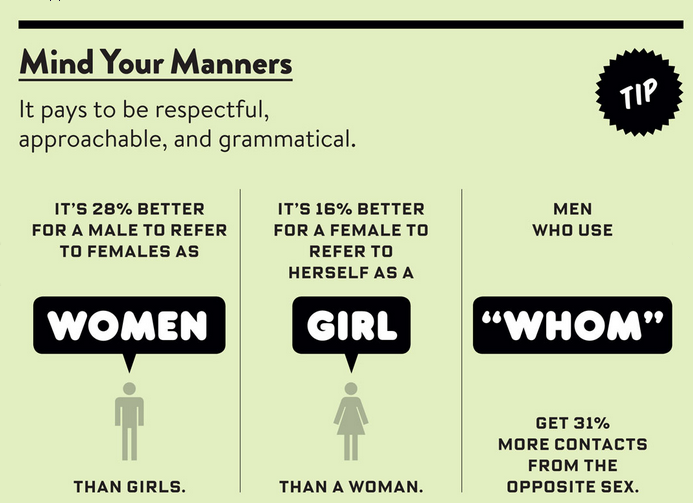

The infographic that Geoff reacted to is the most puzzling of all:

In what sense is it "28% better for a male to refer to females as women than girls"? Does this mean that women's rating of profiles containing such references is 28% higher, on average? Does it mean that profiles containing such references get an average of 28% more responses?

And then there's the critical "whom" factoid: What does it actually mean that "Men who use 'whom' get 31% more contacts from the opposite sex"?

At this point, we really need to pay attention to those silly little questions about what is being compared to what, and what the counts were, and what the confidence intervals are.

For some perspective, let's take a look at a case where we actually know what's going on.

The Internet Archive's collection of forum posts contains 409,411 items, of which 32,937 contain the word who, and 870 contain the word whom. There were 134 items containing both the word who and the word cats, while the word whom and the word cats co-occurred in 3 items.

So simple arithmetic shows us that people who use who are 18% more likely to talk about cats than people who use whom, right?

(134/32937)/(3/870) = 1.18

Well, maybe. Suppose there had been one more post containing both whom and cats — then arithmetic tells us a rather different story:

(134/32937)/(4/870) = 0.88

Now it seems that who-ers are 12% LESS likely to talk about cats than whom-ers. (And never mind that I didn't check for co-occurrence of who and whom…)

Or what if we compare posts that use whom to the whole body of forum posts? Cats are mentioned in only 670 of 409,411 indexed forum posts, so it seems that whom-ers are 111% more likely to talk about cats than the average forum participant is:

(3/870)/(670/409411) = 2.11

But if there had been only 1 whom+cats forum post rather than 3, whom-ers would have seemed 30% LESS cattish:

(1/870)/(670/409411) = 0.70
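
The four ratios above are easy to reproduce, and doing so makes the fragility plain: moving one or two posts flips the direction of the "effect". Here is a minimal Python sketch that uses only the counts quoted in this post; the function and variable names are made up for illustration.

```python
def rate_ratio(hits_a, total_a, hits_b, total_b):
    """Return (hits_a / total_a) / (hits_b / total_b)."""
    return (hits_a / total_a) / (hits_b / total_b)

# Counts quoted above from the forum-post collection.
ALL_POSTS, WHO_POSTS, WHOM_POSTS = 409411, 32937, 870
ALL_CATS, WHO_CATS = 670, 134

# who-ers vs. whom-ers: the observed 3 whom+cats posts, then a hypothetical 4.
who_vs_whom = {k: rate_ratio(WHO_CATS, WHO_POSTS, k, WHOM_POSTS) for k in (3, 4)}

# whom-ers vs. all posts: the observed 3 whom+cats posts, then a hypothetical 1.
whom_vs_all = {k: rate_ratio(k, WHOM_POSTS, ALL_CATS, ALL_POSTS) for k in (3, 1)}

for k, r in who_vs_whom.items():
    print(f"who vs. whom, {k} whom+cats posts: {r:.2f}")
for k, r in whom_vs_all.items():
    print(f"whom vs. all, {k} whom+cats posts: {r:.2f}")
```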

How likely are these various outcomes? Well, a binomial test tells us that with 3 successes (i.e. mentions of cats) in 870 trials (i.e. forum posts containing whom), the 95% confidence interval for the underlying probability of success is 0.0007116827 to 0.0100439721 (corresponding to between 0 and 8 mentions of cats). Thus to make a long story short, these counts tell us (almost) nothing about whether the use of whom predicts a propensity to mention cats.
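
The interval quoted above is consistent with an exact (Clopper-Pearson) binomial interval. That this was the method used is an assumption, since the software isn't named here. The sketch below recomputes such an interval with nothing but the Python standard library, using bisection on the binomial tail probabilities; all the names in it are hypothetical helpers.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p); fine for small k, as here."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))

def solve_increasing(f, target, lo=0.0, hi=1.0, iters=100):
    """Bisection: find p in [lo, hi] where the increasing function f hits target."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def exact_ci(k, n, conf=0.95):
    """Clopper-Pearson interval for k successes in n trials."""
    alpha = 1 - conf
    # Lower bound: the p at which P(X >= k) equals alpha/2.
    lower = 0.0 if k == 0 else solve_increasing(
        lambda p: 1 - binom_cdf(k - 1, n, p), alpha / 2)
    # Upper bound: the p at which P(X <= k) equals alpha/2,
    # i.e. P(X > k) equals 1 - alpha/2.
    upper = 1.0 if k == n else solve_increasing(
        lambda p: 1 - binom_cdf(k, n, p), 1 - alpha / 2)
    return lower, upper

lower, upper = exact_ci(3, 870)   # 3 cat mentions in 870 whom posts
baseline = 670 / 409411           # cat rate across all posts, treated as exact
print(f"95% CI for the whom+cats rate: {lower:.10f} to {upper:.10f}")
print(f"as multiples of the all-posts rate: "
      f"{lower / baseline:.2f}x to {upper / baseline:.2f}x")
```

Treating the all-posts rate as exact (a simplification), the interval runs from less than half of that rate to roughly six times it, which is another way of saying that the observed "111% more" could as easily have been a deficit.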

In fact, the situation is much worse than this. A 95% confidence level just means that, when there is no real effect, random statistical fluctuations will still generate an apparently significant set of counts about one time in 20. But suppose we check 1,000 words? 1/20 of 1,000 is 50, and therefore we expect about 50 words to show a "statistically significant effect" by chance alone, unless we correct for multiple comparisons. The Wired article tells us that the "data teams ran queries of all kinds and pulled spreadsheet after spreadsheet of information" in the course of their "algorithm method", which is a clue that some Bonferroni correction would have been appropriately added to the statistical significance tests that they didn't run.
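
A small sketch of the multiple-comparisons arithmetic, for anyone who wants to see it run. It assumes, purely for illustration, 1,000 independent tests in which no word has any real effect, so that each p-value is uniformly distributed between 0 and 1. The seed and the variable names are arbitrary.

```python
import random

N_WORDS = 1000   # number of words checked, as in the example above
ALPHA = 0.05     # per-test significance level

expected_false_hits = N_WORDS * ALPHA          # about 50
p_at_least_one = 1 - (1 - ALPHA) ** N_WORDS    # assumes independent tests
bonferroni_alpha = ALPHA / N_WORDS             # per-test threshold after correction

# Simulate one "study" in which nothing is going on:
# under the null hypothesis, each p-value is uniform on [0, 1].
rng = random.Random(2014)  # arbitrary seed, for repeatability
p_values = [rng.random() for _ in range(N_WORDS)]
naive_hits = sum(p < ALPHA for p in p_values)
corrected_hits = sum(p < bonferroni_alpha for p in p_values)

print(f"expected chance 'findings' at alpha={ALPHA}: {expected_false_hits:.0f}")
print(f"probability of at least one chance 'finding': {p_at_least_one:.6f}")
print(f"Bonferroni per-test threshold: {bonferroni_alpha:.5f}")
print(f"simulated chance 'findings', uncorrected: {naive_hits}")
print(f"simulated chance 'findings', Bonferroni-corrected: {corrected_hits}")
```

Bonferroni is the bluntest available correction, and real word-usage features are far from independent, so this is only a picture of the scale of the problem, not a recipe for the analysis that should have been done.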

And then there's the problem of inferring causation from correlation, even when the correlation is a meaningful one.

So what about whom as a chick magnet? There are several layers of reasons to suspect that the cited factoid ("Men who use whom get 31% more contacts from the opposite sex") is worthless, other than as a way to attract eyeballs to Wired. Like, maybe there were 6 guys who used whom in their profile, and they got an average of 6.9 responses, compared to an average of 5.27 responses for the whole site. Or maybe there were tens of thousands of whom-ers, who mostly said that they were looking for someone with whom to share their lottery winnings or their villa in Ibiza.
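
To put a rough number on the first of those made-up scenarios: suppose, strictly as an illustrative assumption, that each man's response count is an independent Poisson draw with the site-wide mean of 5.27, and that whom has no effect whatsoever. The sketch below asks how often six such men would average 6.9 or more by luck alone. The Poisson model and all the names are assumptions of the sketch, not anything reported by Wired.

```python
from math import ceil, exp

def poisson_sf(k_min, lam):
    """P(X >= k_min) for X ~ Poisson(lam)."""
    term = exp(-lam)   # P(X = 0)
    cdf = 0.0
    for i in range(k_min):
        cdf += term            # add P(X = i)
        term *= lam / (i + 1)  # step to P(X = i + 1)
    return 1 - cdf

N_USERS = 6        # hypothetical number of whom-users
SITE_MEAN = 5.27   # hypothetical site-wide mean responses
WHOM_MEAN = 6.9    # hypothetical mean responses for the whom-users

lift = WHOM_MEAN / SITE_MEAN - 1          # the headline "31% more"
total_needed = ceil(N_USERS * WHOM_MEAN)  # smallest whole total with mean >= 6.9
# The sum of independent Poisson counts is itself Poisson.
p_by_chance = poisson_sf(total_needed, N_USERS * SITE_MEAN)

print(f"apparent lift: {lift:.0%}")
print(f"chance of a lift at least this big with no real effect: {p_by_chance:.3f}")
```

Under these assumptions the probability comes out at a few percent, which is small for a single pre-specified test but not for one item picked out of hundreds of candidates.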

Or maybe the evidence that "it pays to be grammatical" was more robust and believable — but when a journalist or PR person uses the "algorithm method" to create a sexy number, without giving any supporting details, we should suspect the worst.

glasserc said,

February 16, 2014 @ 10:49 am

If it's from OkCupid, maybe they're using some of the same techniques from http://blog.okcupid.com/ . In particular, I think the posts "The Mathematics of Beauty" and "Exactly What To Say In A First Message" might be relevant (although every post is really interesting). "Attractiveness" probably refers to the "rating" users on the site get. I think they have a 5-point rating but I've never used the site. Another salient approach they've used in the past has been to measure reply rates — if person X writes a bunch of people messages saying "Hello, let's go out", what percentage of them write back (thereby presumably indicating interest)?

Of course, none of that addresses the other methodological issues in the WIRED piece.

Ken MacDougall said,

February 16, 2014 @ 11:09 am

This is really interesting, but Prof Pullum's post was stonkingly fabulous.

[(myl) Yes, and as a result, the whom statistic ascended into the category of Factoids that are Too Good To Check. But here at Language Log, we've been Checking the Too Good To Check for more than a decade.]

Fred Moore said,

February 16, 2014 @ 11:09 am

I'm fairly certain "the algorithm method" was a play on "the rhythm method." So, a sex (well, family planning) joke.

Y said,

February 16, 2014 @ 12:11 pm

So "Cats whom surf" is irresistible, right? Married women, avert your eyes!

GH said,

February 16, 2014 @ 12:32 pm

Of course, as Prof. Pullum is no doubt aware, even if we take the finding at face value it does not imply what he presents it as implying. Wired reports no data on correct/incorrect usage of "whom" in the profiles, and it could easily be the case that correct "whom"-use accounts for all of the positive effect. Incorrect use might have no effect, a small negative effect that gets swamped, or even a large negative effect (if it makes up only a small percentage of the examples).

The finding is quite probably bunk because of the methodological issues outlined in this post, but if it were true, wouldn't it be an interesting example of the social signaling purpose of language snobbery actually working?

Y said,

February 16, 2014 @ 1:23 pm

Correction: I should have said, "I am one whom cats surf." More grammatical and more realistic, though algorithmically equally fetching.

Victor Mair said,

February 16, 2014 @ 1:30 pm

MYL: "…here at Language Log, we've been Checking the Too Good To Check for more than a decade."

Here are a few that I just dug up:

"Factlets"

http://languagelog.ldc.upenn.edu/nll/?p=3997

Bible Science stories

http://itre.cis.upenn.edu/~myl/languagelog/archives/003847.html

The "million word" hoax rolls along

http://languagelog.ldc.upenn.edu/nll/?p=972

986,120 words for snow job

http://itre.cis.upenn.edu/~myl/languagelog/archives/002809.html

On "Cronkiters" and "Kronkiters"

http://languagelog.ldc.upenn.edu/nll/?p=1604

< Too Good to Check http://www.tvnewsinsider.com/4152

D.O. said,

February 16, 2014 @ 1:33 pm

Doing even semi-serious statistics research in connection to that Wired article is like trying to validate "men are from Mars, women are from Venus" by consulting a census of birthplaces. Nevertheless, there are tens of millions of people subscribed to online dating sites and presumably millions who wrote a profile. Not seeing even a single one of them, I will hazard a guess that on average they consist of 10 words or more. Google n-grams informs us that in contemporary American Book English whom has 1/1000 word frequency. Of course, on-line romantic ads are not exactly like books, so let's discount its frequency by a factor of 10. It still leaves us with thousands of ads with whom. And I didn't read anything about attempts to correlate whom with cats. Or am I missing something?

[(myl) We aren't told whether the data maestros looked at word usage in tens of millions of profiles or just at a sample, perhaps a small one. We also aren't told how many features they scanned in order to pick out the effect of whom as something worth featuring. Nor are we told what "get 31% more contacts" means — 31% more than what control group? And 31% more than what expected number of contacts? We aren't told what the standard error of contact numbers for the whom-users was. And we aren't told anything about the distribution of whom contexts — maybe, as I suggested, a meaningful percentage of whom uses were in phrases of the general form "looking for someone with whom to X", where X is something nice, so that what's being tested is not the use of whom but the expressed desire to do nice things together.

If you think these are silly questions, just take a look at some other quantitative "studies" cooked up by PR people and journalists. A good example is the one that "proved" that email and texting "lower the IQ more than twice as much as smoking marijuana" (discussed here and in some links therein). The experimental design involved asking a few people to solve matrix equations while being pestered by ringing cellphones and flashing email messages, along with a cursory 1000-person survey of digital media usage. But this was described (e.g. by the Guardian and the Times and Bloomberg Wire) as a "study of 1,000 adults" in "80 clinical trials", showing that email and texting "are a greater threat to IQ and concentration than taking cannabis".

As for whom and cats, you're free to pick your own illustrative example. But in general, my experience has been that it's hard to invent satirical versions of such reports that are nearly as preposterous as the truth…]

Rubrick said,

February 16, 2014 @ 4:50 pm

This explains why my profile which begins "Whom whom whom whom whom…" hasn't netted me many dates. It scored pretty well with Hemingway, though….

D.O. said,

February 16, 2014 @ 5:00 pm

I've never suggested that Wired or "data maestros" did a sound statistical job. Obviously, we have no way of knowing and the whole exercise is simply a fluff Valentine piece, wired-flavored. I just pointed out that they probably have enough data to do it right. BTW, the original "research" never suggested that whom should be used ungrammatically. It's Prof. Pullum's interpretation. As for the desire to do nice things together, why not? After all we also do not know whether cats means that someone likes them or hates them or wants to meet somebody who likes or hates or has or doesn't have them.

richardelguru said,

February 16, 2014 @ 8:02 pm

"algorithm method"

Birth control for Catholics who care about global warming?

Either that or it's pretty close to tautology.

Neal Goldfarb said,

February 16, 2014 @ 10:53 pm

I have it on good authority that it's not uncommon for women on OKCupid to list "good grammar" as one of the characteristics they're looking for in a man, and to mention their (the women's) facility with the Oxford comma as one of their desirable characteristics (along with being good at parallel parking—go figure).

This is perhaps better evidence than the Wired story of a link between grammar (or at least garrmra) and sexual success. And if so, it could provide additional evidence for the view that language evolved as a result of natural selection, despite the Chomskyans' claim to the contrary. On this issue, see Sex and Syntax: Subjacency Revisited by Ljiljana Progovac.

Mar Rojo said,

February 17, 2014 @ 9:05 am

Kinda dampened the fun of Pullum's post.

Hitchcock said,

February 17, 2014 @ 3:54 pm

Prof. Pullum has updated his original post to address (in a manner of speaking) Prof. Liberman's concerns. Liberman appears to be in the unfortunate position of doing Zeppo opposite Pullum's Groucho.

Graeme said,

February 21, 2014 @ 6:00 am

Did Bo Diddley (or Jim Morrison) luck out when they sang 'Who Do You Love?'?