Ask a baboon


Sindya N. Bhanoo, "Real Words or Gibberish? Just Ask a Baboon", NYT 4/16/2012:


While baboons can’t read, they can tell the difference between real English words and nonsensical ones, a new study reports.

“They are using information about letters and the relation between letters to perform the task without any kind of linguistic training,” said Jonathan Grainger, a psychologist at the French Center for National Research and at Aix-Marseille University in France who was the study’s first author.

Some other media coverage: Seth Borenstein, "If you're reading this, you might be a baboon", AP (reprinted in Christian Science Monitor, 4/13/2012); Sharon Begley, "This is Dan. Dan is a Baboon. Read, Dan, Read", Reuters (reprinted in the Chicago Tribune, 4/12/2012); "See Dan read: Baboons can learn to spot real words", Fox News, 4/12/2012; "Baboons leave scientists spell-bound", ABC Science; "Baboons 'trained to read English'", ITN, 4/13/2012; "Baboon Word Skills Cause Linguistics Rethink"; "Reading time at the zoo: the baboons that excel at English"; "Baboons have a way with words"; "Baboons can recognize written words, study finds"; "Baboons touch on evolution of reading"; etc., etc.

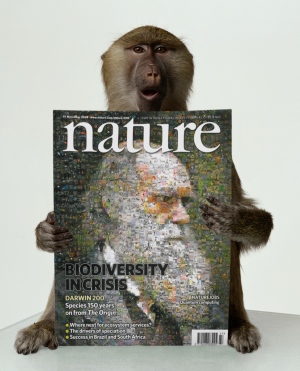

Nature covered this story in its news section under the headline "Baboons can learn to recognize words", illustrated with a picture of a baboon holding up an issue of their journal, over the caption "Baboons can learn how to work out when a four-letter word is real English, and when it's nonsense".

The paper behind all the buzz is Jonathan Grainger, Stéphane Dufau, Marie Montant, Johannes C. Ziegler, and Joël Fagot, "Orthographic Processing in Baboons (Papio papio)", Science 336 (6078) pp. 245-248, 4/13/2012. The press release was published in Science Daily as "Baboons Display 'Reading' Skills, Study Suggests; Monkeys Identify Specific Combinations of Letters in Words", 4/16/2012.

There are two sad facts about this situation. The first sad fact — no less sad because it's completely predictable — is that the study itself is far more circumspect than the press coverage:

Over a period of a month and a half, baboons learned to discriminate dozens of words (the counts ranged from 81 words for baboon VIO to 308 words for baboon DAN) from among a total of 7832 nonwords at nearly 75% accuracy (Fig. 2 and table S1). This in itself is a remarkable result, given the level of orthographic similarity between the word and nonword stimuli. More detailed analyses revealed that baboons were not simply memorizing the word stimuli but had learned to discriminate words from nonwords on the basis of differences in the frequency of letter combinations in the two categories of stimuli (i.e., statistical learning). Indeed, there was a significant correlation between mean bigram frequency and word accuracy [correlation coefficients (r) ranged from 0.51 for baboon VIO to 0.80 for baboon DAN, all P values < 0.05; see supplementary materials]. More importantly, words that were seen for the first time triggered significantly fewer “nonword” responses than did the nonword stimuli (Fig. 3). This implies that the baboons had extracted knowledge about what statistical properties characterize words and nonwords and used this information to make their word versus nonword decision without having seen the specific examples before. In the absence of such knowledge, words seen for the first time should have been processed like nonwords.

That is, the study's key claim is not that baboons can learn to read or to spell or to distinguish English words from non-words in a general sort of way, or even that they necessarily can memorize the spelling of 80-300 specific English words, but rather that the baboons in this study learned something like differences in bigram (letter-pair) frequencies, or perhaps other differences in "the frequency of letter combinations", and used this knowledge to distinguish a smallish set of English words from a larger set of non-words. Here "distinguish" means forced-choice discrimination at about 75% correct, with chance at 50%.

The second sad fact — and this one is also, alas, predictable for a paper related to language published in Science — is that the study's (relatively circumspect) conclusions are in fact not supported by the evidence provided. The problem should be obvious to anyone with general scientific abilities who reads the paper somewhat carefully — and it takes just a few minutes to establish this point quantitatively.

In particular, when we discussed this paper yesterday in a small class on mathematical modeling of linguistic phenomena, the students immediately asked whether bigrams were really needed. Perhaps only the first letter would be enough? Or maybe the words and non-words could be distinguished purely on the basis of their unigram frequencies (that is, the frequencies of single letters, regardless of context)? Such questions were obvious ones given the paper's description of how the two sets were chosen:

A set of 2,235 English four-letter words and their printed frequencies was extracted from the CELEX word-frequency corpus (23). Bigram frequencies were calculated for each of the three contiguous bigrams of a word (letters 1&2, letters 2&3, letters 3&4) counting the number of times these bigrams appeared in the corpus at the same position. Mean bigram frequency was calculated for each word by averaging the three positional bigram frequencies. The 500 words with the highest mean bigram frequency were selected as “word” stimuli. A set of 10,091 four-letter nonwords was created using all the bigrams that appeared at a particular position (initial, medial, terminal) in the CELEX English four-letter word list. All nonwords were composed of one vowel letter and three consonants, and the vowel could be at any position. Each nonword was associated with a mean bigram frequency that was calculated using position-specific bigram frequencies as with the word stimuli. We then selected 7,832 nonwords that had a mean bigram frequency that was less than the lowest mean bigram frequency of the word stimuli (mean bigram frequency for words, 3.60×10⁻⁴; mean bigram frequency for nonwords, 5.96×10⁻⁵).
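For readers who want to see the selection procedure concretely, here is a rough sketch of it in Python. It is a simplified reconstruction, not the authors' code: normalizing by the total (optionally frequency-weighted) word count is an assumption, the `weights` argument is a hypothetical hook for CELEX token counts, and the one-vowel-three-consonants constraint on nonwords is left out.

```python
from collections import Counter

def positional_bigram_freqs(words, weights=None):
    """Relative frequency of each bigram at positions 1&2, 2&3 and 3&4.

    `weights` is a hypothetical hook for corpus token counts; by default each
    word counts once. Dividing by the total weight is an assumption."""
    if weights is None:
        weights = [1] * len(words)
    counts = [Counter() for _ in range(3)]
    for w, wt in zip(words, weights):
        for i in range(3):
            counts[i][w[i:i + 2]] += wt
    total = sum(weights)
    return [{bg: c / total for bg, c in pos.items()} for pos in counts]

def mean_bigram_freq(s, freqs):
    """Average of the three position-specific bigram frequencies of s."""
    return sum(freqs[i].get(s[i:i + 2], 0.0) for i in range(3)) / 3

def select_stimuli(lexicon, candidates, n_words, weights=None):
    """Top-n lexicon words by mean bigram frequency, plus every candidate whose
    mean bigram frequency is strictly below the lowest-scoring selected word."""
    freqs = positional_bigram_freqs(lexicon, weights)
    ranked = sorted(lexicon, key=lambda w: mean_bigram_freq(w, freqs), reverse=True)
    words = ranked[:n_words]
    floor = min(mean_bigram_freq(w, freqs) for w in words)
    nonwords = [s for s in candidates if mean_bigram_freq(s, freqs) < floor]
    return words, nonwords

# Toy illustration with a made-up five-word lexicon.
lexicon = ["that", "than", "then", "them", "acme"]
words, nonwords = select_stimuli(lexicon, ["thae", "qzqz", "then"], n_words=2)
```

Note that, by construction, every selected nonword scores below every selected word, so a single threshold on mean bigram frequency separates the two lists perfectly.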

According to the list of words and non-words in the paper's Table S2, these were the overall single-letter percentages in the data presented to Dan (the best-performing baboon),

| Letter | In word list | In non-word list |
|---|---|---|
| a | 7.0% | 5.5% |
| b | 2.4% | 3.2% |
| c | 2.5% | 4.4% |
| d | 3.4% | 3.1% |
| e | 15.6% | 5.3% |
| f | 1.4% | 3.7% |
| g | 1.5% | 3.8% |
| h | 5.2% | 2.8% |
| i | 5.5% | 4.9% |
| j | 0.2% | 0.9% |
| k | 3.0% | 4.0% |
| l | 4.0% | 7.5% |
| m | 4.4% | 3.5% |
| n | 4.9% | 5.8% |
| o | 8.6% | 4.9% |
| p | 2.9% | 4.1% |
| q | 0.0% | 0.2% |
| r | 6.3% | 8.1% |
| s | 6.4% | 8.2% |
| t | 7.5% | 5.3% |
| u | 1.3% | 4.4% |
| v | 0.8% | 1.4% |
| w | 3.6% | 2.8% |
| x | 0.2% | 0.8% |
| y | 1.3% | 0.0% |
| z | 0.2% | 1.4% |

These unigram percentages are certainly different — but are they different enough?

In the context of the Enigma cryptanalysis during WWII, Alan Turing suggested a way to combine multiple pieces of individually weak evidence in order to decide between two hypotheses: for each piece of evidence, take the log of the ratio of its estimated probability given hypothesis X to its estimated probability given hypothesis Y, and then add up these log likelihood ratios over all the available evidence. A positive sum indicates that the evidence favors hypothesis X; a negative sum favors hypothesis Y.

This idea is now a commonplace one — it's a feature of undergraduate computer science or statistics courses. And Joshua Gold and Michael Shadlen ("Banburismus and the Brain", Neuron 36:299-308, 2002) have presented neurological evidence that monkeys use a similar method in performing a direction-discrimination task (see also "Bletchley Park in the Lateral Interparietal Cortex", 1/9/2004).
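Turing's rule fits in a few lines of Python. This is a generic sketch: the pieces of evidence are treated as independent (which is what justifies adding the logs), natural logs are used so that exp(total) is the combined likelihood ratio, and the biased-coin example is purely hypothetical.

```python
import math

def weight_of_evidence(observations, p_given_x, p_given_y):
    """Sum over observations e of log(P(e|X) / P(e|Y)).

    Assumes the observations are independent given each hypothesis.
    Positive totals favor X, negative totals favor Y."""
    return sum(math.log(p_given_x[e] / p_given_y[e]) for e in observations)

def decide(observations, p_given_x, p_given_y):
    """Return the favored hypothesis and the summed log likelihood ratio."""
    total = weight_of_evidence(observations, p_given_x, p_given_y)
    return ("X" if total > 0 else "Y"), total

# Hypothetical example: is a coin biased toward heads (X) or fair (Y)?
biased = {"H": 0.6, "T": 0.4}
fair = {"H": 0.5, "T": 0.5}
label, total = decide("HHT", biased, fair)
```

Two heads each contribute log(0.6/0.5), and the tail contributes log(0.4/0.5); the positive sum narrowly favors the biased coin.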

We can apply the same method to Dan's overall performance record. The log likelihood ratios implied by the table above are

| Letter | log(P(L\|Word)/P(L\|NonWord)) |
|---|---|
| a | 0.24397 |
| b | -0.31128 |
| c | -0.56538 |
| d | 0.10260 |
| e | 1.07683 |
| f | -0.97082 |
| g | -0.96058 |
| h | 0.63644 |
| i | 0.12105 |
| j | -1.33214 |
| k | -0.28564 |
| l | -0.63208 |
| m | 0.23143 |
| n | -0.17147 |
| o | 0.55914 |
| p | -0.35151 |
| q | -7.62213 |
| r | -0.25265 |
| s | -0.25893 |
| t | 0.34875 |
| u | -1.21085 |
| v | -0.51273 |
| w | 0.25023 |
| x | -1.62841 |
| y | 9.47496 |
| z | -1.78036 |

(In order to avoid infinities, I've substituted 1/1000000 for the zeros in the probability tables, corresponding in this case to q for words and y for nonwords.)
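The log likelihood ratio table can be recomputed from a percentage table along these lines. This is a sketch that uses only three rows of the percentage table above. Because those percentages are rounded to one decimal place, the recomputed values only approximately match the listed ones (for example, about 1.0796 for e rather than 1.07683), so the listed values were presumably computed from unrounded counts.

```python
import math

FLOOR = 1e-6  # stands in for a zero probability, as described above

def letter_llrs(p_word, p_nonword, letters="abcdefghijklmnopqrstuvwxyz"):
    """Per-letter natural-log ratio log(P(L|Word) / P(L|NonWord)).

    Probabilities are fractions (7.0% -> 0.070). A zero or missing entry is
    replaced by FLOOR so that no ratio becomes infinite."""
    return {
        L: math.log((p_word.get(L, 0.0) or FLOOR) / (p_nonword.get(L, 0.0) or FLOOR))
        for L in letters
    }

# Three rows of the percentage table, converted to fractions.
p_word = {"e": 0.156, "y": 0.013, "q": 0.0}
p_nonword = {"e": 0.053, "y": 0.0, "q": 0.002}
llr = letter_llrs(p_word, p_nonword, letters="eyq")
```

The floor substitution is what produces the two extreme entries: y is absent from the non-word list and q from the word list.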

The first word on the list supplied from Dan's data was ACME: the log likelihood ratios of its letters are

0.24397 -0.56538 0.23143 1.07683

with a sum of 0.98684, indicating a (correct) guess of "word" with estimated odds of exp(0.98684) = 2.7 to 1. (This is the "first" word in collating order — we aren't given the actual order of presentation.)

The first non-word on Dan's list is ABBS, with unigram log likelihood ratios of

0.24397 -0.31128 -0.31128 -0.25893

and a sum of -0.63753, indicating a (correct) guess of "not a word" with estimated odds of 1.9 to 1.
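The arithmetic for ACME and ABBS can be checked directly from the table. The dictionary below copies only the six letters these two strings need. Since the tabulated ratios are themselves rounded, the sums may differ from the figures quoted above in the fifth decimal place.

```python
import math

# Per-letter log likelihood ratios copied from the table above
# (only the letters needed for ACME and ABBS).
LLR = {"a": 0.24397, "b": -0.31128, "c": -0.56538,
       "e": 1.07683, "m": 0.23143, "s": -0.25893}

def score(s):
    """Summed log likelihood ratio of the letters in s."""
    return sum(LLR[ch] for ch in s.lower())

for s in ("ACME", "ABBS"):
    total = score(s)
    guess = "word" if total > 0 else "not a word"
    odds = math.exp(abs(total))  # odds in favor of the guessed category
    print(f"{s}: sum={total:.5f}, guess={guess}, odds={odds:.1f} to 1")
```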

If we apply this method to all of Dan's data, the resulting guesses would be correct 75% of the time for words, and 76% of the time for non-words, which is roughly how the baboons performed.
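A sketch of that whole-list calculation, assuming the word and non-word lists are available as Python lists of strings (Table S2 itself is not reproduced here). Like the calculation described above, it estimates both unigram distributions from the same lists it then classifies, so the results are resubstitution accuracies, not held-out ones. The 75%/76% figures come from the post; this sketch has not been re-run on Dan's lists.

```python
import math
from collections import Counter

FLOOR = 1e-6  # substitute for zero probabilities

def unigram_probs(strings):
    """Relative frequency of each letter, pooled over all strings."""
    counts = Counter(ch for s in strings for ch in s.lower())
    total = sum(counts.values())
    return {ch: n / total for ch, n in counts.items()}

def unigram_accuracy(words, nonwords):
    """Fraction of words scored > 0 and of non-words scored <= 0."""
    p_w, p_n = unigram_probs(words), unigram_probs(nonwords)

    def score(s):
        # Missing letters get FLOOR (dict.get returns None, and None or FLOOR == FLOOR).
        return sum(math.log((p_w.get(ch) or FLOOR) / (p_n.get(ch) or FLOOR))
                   for ch in s.lower())

    word_acc = sum(score(s) > 0 for s in words) / len(words)
    nonword_acc = sum(score(s) <= 0 for s in nonwords) / len(nonwords)
    return word_acc, nonword_acc
```

Ties (a score of exactly zero) are counted as "not a word" here. That choice is arbitrary and matters only for strings whose letters are equally likely under both distributions.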

Of course, this is not a very plausible model of Dan the baboon's learning process, since at the start, he doesn't know anything about any properties of word vs. non-words, including their relative unigram frequencies. But in fact, his actual task was an easier one, not a harder one. He's not being asked to decide between arbitrary words and arbitrary non-words — he only needs to distinguish between the specific list of words that he's learned up to a given point in the experiment, and everything that's not on this (initially small) list.

Thus in the first phase, all he has to do is to distinguish between ACME and a bunch of things that aren't ACME (SHOC, FETT, PLUD, NURT, KNIG, HULP, NAMB, WOTS, JARF, ONTT, etc.). Then he has to distinguish e.g. ACME and HALL from a similar list of non-words; and so on.

As a result, in the earlier stages of the experiment, estimated unigram frequencies are a much better cue than they are later on — and Dan would be able to do much better than 75% if unigram frequencies were the only cue he was using.
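One way to explore this is to simulate a learner that classifies each trial using only the letter counts it has seen so far, then updates those counts with the correct answer. Below is a minimal sketch. The trial sequence is hypothetical, because the actual presentation order isn't available. The add-one smoothing is also an assumption made for illustration; it replaces the fixed 1/1000000 substitution used above.

```python
import math
from collections import Counter

ALPHABET = "abcdefghijklmnopqrstuvwxyz"

class OnlineUnigramClassifier:
    """Keeps running letter counts per class and labels a string by the sign
    of its summed log likelihood ratio (add-one smoothed)."""

    def __init__(self):
        self.counts = {"word": Counter(), "nonword": Counter()}

    def _logp(self, label, ch):
        c = self.counts[label]
        return math.log((c[ch] + 1) / (sum(c.values()) + len(ALPHABET)))

    def predict(self, s):
        score = sum(self._logp("word", ch) - self._logp("nonword", ch)
                    for ch in s.lower())
        return "word" if score > 0 else "nonword"

    def update(self, s, label):
        self.counts[label].update(s.lower())  # counts each letter of s

def run_online(trials):
    """trials: sequence of (string, true_label). Predict first, then learn.
    Returns a list of booleans, one per trial, marking correct predictions."""
    clf = OnlineUnigramClassifier()
    correct = []
    for s, label in trials:
        correct.append(clf.predict(s) == label)
        clf.update(s, label)
    return correct
```

Whether a learner like this would actually beat 75% on Dan's real sequence, where a few memorized words are contrasted with many non-words, could only be checked against the trial-by-trial records.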

That was the point we arrived at in a few minutes of class discussion yesterday afternoon. Last night, I got email from Fernando Pereira, pointing me to some notes by Yoav Goldberg ("Do Baboons really care about letter-pairs?") describing some more elaborate experiments on imitating the incremental performance of one of the other baboons using a simple on-line learning algorithm. Yoav starts his note this way:

… the claim is that baboons learn patterns based on the frequency of a letter in a certain position within a word, and maybe about the frequency of different pairs of adjacent letters (bigrams).

Is that really the case? maybe. But in my view it is very hard to tell: while frequency probably had something to do with it, I am not sure the positional information is used. Why do I think so? because you can learn to predict with the same performance of the monkeys, without looking at position information.

The algorithm that he used is known as the "linear perceptron". It was invented by Frank Rosenblatt in 1957, and it's a simple on-line method for finding the weights of a linear classifier. It's trivial to implement, and most undergraduate computer science majors (I think) know about it. Yoav provides his Python code, if you want to replicate his experiments or try some other ones.
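To give a sense of how little machinery is involved, here is a textbook perceptron over sparse binary features. It is not Yoav's code. The `featurize` argument (a function from a string to a list of feature names) and the scoring convention are choices made for this sketch. The accuracy it returns counts each prediction made before the corresponding update, which is one natural way to score an on-line learner.

```python
def predict(w, bias, s, featurize):
    """+1 (word) if the weighted feature sum is positive, else -1 (non-word)."""
    act = bias + sum(w.get(f, 0.0) for f in featurize(s))
    return 1 if act > 0 else -1

def train_perceptron(examples, featurize, epochs=5):
    """Rosenblatt-style perceptron.

    examples: list of (string, label) pairs with label +1 or -1.
    Returns (weights, bias, on-line accuracy over all epochs)."""
    w = {}
    bias = 0.0
    correct = 0
    for _ in range(epochs):
        for s, y in examples:
            if predict(w, bias, s, featurize) == y:
                correct += 1
            else:
                # Mistake-driven update: nudge each active feature toward y.
                for f in featurize(s):
                    w[f] = w.get(f, 0.0) + y
                bias += y
    return w, bias, correct / (epochs * len(examples))

# Toy run: each letter occurrence is a feature.
w, b, acc = train_perceptron([("aa", 1), ("bb", -1)], list, epochs=5)
```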

Yoav started with a more complex feature set:

If we assume that the linear-model and the perceptron learning method are simple enough to be a lower bound on what monkeys can do (meaning that the monkeys’ representation and learning process are more elaborate than those used by the perceptron algorithm — not an unreasonable assumption in my view considering what we know about brain structure), we can see what kind of information is needed to perform the task at a certain level by looking at what the perceptron algorithm is capable of under different word representations. Maybe it’s sufficient to look at the first letter of each word and ignore the rest? Or maybe we need something much more elaborate?

Just the first letter was not quite good enough:

Maybe we could get good prediction by looking only at the first letter of each word? Under this representation, the word “TALK” is represented as a single property “T” while the word “ARCK” is represented as “A”. This is clearly a dumb representation. Still, the algorithm can get an accuracy of 60% using this representation, and of almost 62% by looking only at the third letter. The baboons probably looked at more than one letter.

However, the first two letters were too much information:

What if we look at the pair of the first two letters? Under this representation, the word “TALK” will be represented as a single property “TA” and the word “ARCK” as “AR”. The algorithm learns to predict with 80% accuracy. Better than the apes.

The middle two letters were even better (and thus even worse as a model of the baboons):

How about the middle letter-pair? Under this representation, the word “TALK” will be represented as “AL” and the word “ARCK” as “RC”. Now the algorithm learns to predict with 85% accuracy — much better than the apes.

Full bigram information is way too good:

What if we look at all the consecutive letter pairs? Here “TALK” is represented as the 3 properties “TA, AL, LK”. The algorithms learns to predict with a striking 97% of accuracy.

But the decontextualized unigram distributions are just about right:

Another representation would be the set of letters in a word, without the position information. Under this representation, both “TALK” and “KTAL” would be represented as the four properties “T, A, K, L”. We look at the letters that compose each word, but not at the relative order between them. Accuracy is 76%, very similar to what the monkeys did.

(As Yoav observed, this is a kind of lower bound on performance, since a linear perceptron applied to this problem is not an especially fast-learning algorithm. Other choices would do better for a given feature set.)
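The representations quoted from Yoav's note can be written as small feature extractors, each mapping a string to a list of feature names that a sparse linear classifier can consume. These are illustrative re-implementations, not code from his note. In particular, whether his letter-set representation collapses repeated letters is an assumption; the sketch uses a set, so ABBS yields A, B, S.

```python
def first_letter(s):
    return [s[0]]            # "TALK" -> ["T"]

def third_letter(s):
    return [s[2]]            # "TALK" -> ["L"]

def first_pair(s):
    return [s[:2]]           # "TALK" -> ["TA"]

def middle_pair(s):
    return [s[1:3]]          # "TALK" -> ["AL"]

def all_bigrams(s):
    return [s[i:i + 2] for i in range(len(s) - 1)]  # "TALK" -> ["TA", "AL", "LK"]

def letter_set(s):
    return sorted(set(s))    # order-free: "TALK" and "KTAL" give the same features
```

The accuracy figures quoted above (60%, 62%, 80%, 85%, 97%, 76%) are Yoav's and are not reproduced by this sketch.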

His conclusion:

I have shown that a simple algorithm can learn to do just as good as the baboon by looking at only at the different letters in a word, without regarding their order. Can we conclusively say that baboons don’t consider letter position when “reading”? Not really. Perhaps the baboons are not as smart as our algorithm. Maybe they do look letter-pairs and letter positions. But what we can conclusively say is that in the specific data presented to the monkeys, the letter frequencies are sufficiently informative to perform on their level, using a simple learning method. This means that while the baboons certainly learned to “read”, it is not clear what kinds of information they used to do so. Every dataset contain patterns to be discovered. Some patterns are informative, others are less so. Some make sense, some less so. These patterns can be picked up by either monkeys or machines, and it is very difficult, by just looking at the performance, to tell which kinds of patterns are being learned.

The main claim of the paper is interesting and valid: one does not need to know a language in order to distinguish real words in that language from some other letter sequences. It can be done by statistical algorithms, and it can be done by monkeys. But the secondary claim, that this is achieved by the monkeys looking at letter-positions and letter-combinations, is far less convincing.

I have a different impression about the relative importance of the paper's claims. I don't think there would have been nearly as much buzz if the only result had been the suggestion that baboons can learn to recognize letters, and the demonstration that they can distinguish letter textures with one unigram distribution from letter textures with a rather different unigram distribution. And in terms of the conclusions advanced by the authors, their assertion that this tells us something interesting about the evolutionary substrate for reading would be much less plausible:

Our findings have two important theoretical implications. First, they suggest that statistical learning is a powerful universal (i.e., cross-species) mechanism that might well be the basis for learning higher-order (linguistic) categories that facilitate the evolution of natural language. Second, our results suggest that orthographic processing may, at least partly, be constrained by general principles of visual object processing shared by monkeys and humans. One such principle most likely concerns the use of feature combinations to identify visual objects, which would be analogous to the use of letter combinations in recent accounts of orthographic processing. Given the evidence that baboons process individual features or their combinations in order to discriminate visual objects, we suggest that similar mechanisms were used to distinguish words from nonwords in the current study. Our study may therefore help explain the success of the human cultural choice of visually representing words using combinations of aligned, spatially compact, ordered sequences of symbols. The primate brain might therefore be better prepared than previously thought to process printed words, hence facilitating the initial steps toward mastering one of the most complex of human skills: reading.

Martyn Cornell said,

April 19, 2012 @ 9:29 am

Two questions: has anyone tried this experiment using (eg) American undergraduates and (eg) Korean, to see if and how well they can tell words from non-words in an alphabet they don't understand; and if baboons have this ability, how does it relate to skills they need in the real world?

[(myl) The literature on "artificial language learning" (or, as I prefer to call it, "pseudo-linguistic texture perception") includes many experiments somewhat similar to what you're asking about. But I don't think that there is anything exactly comparable.

One barrier to doing precisely-comparable experiments is the sheer scale of individual participation. The touch-screen training stations (which provided food rewards) were available to the baboons 24/7 for a month and a half, and the Materials and Methods section explains that "the baboons regularly perform between 1000 and 3000 trials/day [ … or] about 50,000 trials/baboon".

After 50,000 trials over a period of 6 weeks, I suspect that undergraduates, American or Korean, would become quite well familiarized with an alphabet of 26 novel glyphs (at least if you could find a way to motivate them to pay attention over that period of time…) In fact, I suspect that any reward system adequate to motivate humans to participate in 50,000 trials would also motivate them to apply some conscious analysis to the problem.]

Jerry Friedman said,

April 19, 2012 @ 12:33 pm

You might be able to get a lot of undergrads to do this by telling them it's the first step to learning magic spells and they can get experience points for doing it. (Or maybe I just said that because I'm reading REAMDE by Neal Stephenson.)

cclarinet said,

April 19, 2012 @ 1:25 pm

Could you explain further why:

"But in fact, his actual task was an easier one, not a harder one. … in the earlier stages of the experiment, estimated unigram frequencies are a much better cue than they are later on"?

I'm asking because the unigram perceptron model used by Goldberg, even though it's exposed to each stimulus serially, learned with 76% accuracy, about as well as your simpler unigram model. Perhaps it's due to the different datasets used (Goldberg used words from VIO's dataset, not DAN's)?

[(myl) A linear perceptron is far from an optimal way to learn this particular discriminant function. An algorithm that just kept track of unigram frequencies seen so far in each class should make a much better-performing classifier in early stages; an algorithm that used somewhat more clever unigram-frequency estimation techniques could do even better.

As Yoav observes, the performance of a linear perceptron is a lower bound, not an upper bound or even a representative number for appropriately-deployed machine learning techniques.]

Steve Kass said,

April 19, 2012 @ 6:55 pm

Did any of the monkeys know how to type?

Because believe it or not, all six baboons performed much better at discriminating words from non-words when the letters in the stimulus all came from the right-hand side of the typewriter keyboard!

This QWERTY BABOON effect was especially pronounced for non-words. For each and every monkey, the percentage of non-words correctly identified as such was a strictly increasing function of RSA (QWERTY right side advantage). The difference in success rate between RSA = -4 words and RSA = +4 words was astonishing and ranged from 14% (Cauet) to a mindblowing 31% (Arielle).

Monkey Dan identified non-words with an RSA of 4 (such as hilm) as non-words in 88.7% of the trials. He did much less well with RSA = -4 non-words, comprising only left-side letters (like grga).

I hope someone will check my work. Ironically, I did this in a hurry because I have yet to finish writing the statistics exam I'm giving my class tomorrow, and because I could hardly wait to say something about it.
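For anyone who wants to check, RSA is easy to compute. The comment doesn't spell out the definition, so the sketch assumes RSA = (number of letters typed with the right hand) minus (number typed with the left hand), under the usual touch-typing split of the QWERTY keyboard. That reading gives +4 for hilm and -4 for grga, matching the examples above.

```python
# Standard touch-typing split of the QWERTY letter keys (assumed).
LEFT = set("qwertasdfgzxcvb")
RIGHT = set("yuiophjklnm")

def rsa(s):
    """QWERTY right-side advantage: (# right-hand letters) - (# left-hand letters)."""
    s = s.lower()
    return sum(ch in RIGHT for ch in s) - sum(ch in LEFT for ch in s)
```

For four-letter strings this ranges from -4 to +4; for example, ACME scores -2 and TALK scores 0.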

[(myl) Wow.]

Just another Peter said,

April 19, 2012 @ 8:10 pm

@Steve: That difference could possibly be accounted for by the fact that the right-hand side letters appear more distinct than left-hand side letters. On the left we have E-F, C-G-Q, A-R, S-Z (assuming the words were provided in capital letters).

Mark Liberman said,

April 19, 2012 @ 10:20 pm

A bit earlier, a commenter asked what the purpose of this study was. I answered, roughly as below, but then hit "delete" rather than "save" through a slip of the mouse (the buttons are adjacent, not that this is a good excuse). So first, apologies for the deletion.

As for the study's motivation, a Google Scholar search for the first author's name will let you know that he's interested in theories of reading and especially in models of visual word recognition, e.g. this paper or this one or this one or this one.

In that context, it makes sense to ask about "visual word recognition" in a species whose visual system is similar to humans' visual system, but which has no linguistic substrate (whether phonological or orthographic) involved in the process.

Since positional letter coding, letter n-grams, etc., are known to be involved in human visual word recognition, it would also make sense to check for the value of such things in explaining baboon "word recognition". Unfortunately, in this case neither the authors nor the reviewers seem to have asked a fairly obvious question about the efficacy of even simpler models.

Richard Futrell said,

April 19, 2012 @ 10:54 pm

Interestingly Baayen et al. (2010, link to a draft) model a surprising range of effects in lexical decision time using what is essentially just a perceptron that learns to discriminate atomic "meanings" for words given only letter unigrams and bigrams, with no intermediate representation at all.

While this does include bigrams, it suggests that what we humans are doing might not be that different from what the baboons are doing.

D.O. said,

April 19, 2012 @ 11:10 pm

Prof. Liberman, thank you for your reply. It was my question that got deleted. Unfortunately, I still don't understand the purpose of making the word/non-word categories in the baboon training experiment coincide with what are words and non-words in the English language. It seems to be meant to study some implication which I completely miss.

[(myl) In a way, this is like asking "Why do people hide decorated hen's eggs for children to find at Easter time?" There is some relevant logic (spring/new life/resurrection) but mostly the answer has to be "that's part of the culture". Psychologists have been studying "lexical decision" tasks (where the subject is asked to decide whether or not a given stimulus is a word), and "visual word recognition" tasks, since the 1950s at least, and there are tens of thousands of related experiments and papers, and dozens of models of aspects of the results.

If you have some theory about linguistic processing, such experiments are one of the standard tools you can use to try to figure out what's going on. There are some easy-to-specify dependent measures (accuracy, reaction time), you can manipulate properties of the choice of stimuli and their presentation in innumerable ways, etc. There are lots of ways to decorate the eggs, and lots of places to hide them.

Here the experimenters substituted for "word versus non-word" a different distinction: "letter sequence (of the type that) the animals are trained to put in category X" versus "letter sequence (of the type that) the animals are trained to put in category Y". To make the analogy as complete as possible, since "non-word" just means "any letter string that's not a word", they made the training highly asymmetrical. But once they'd done that, they were on familiar ground, or as familiar as you can be in trying to teach baboons to "read".]

Chris Cox said,

April 20, 2012 @ 9:21 am

This is a really great article—the possibility that the baboons were tracking unigrams (rather than bigrams) is something I hadn't considered but makes a great deal of sense given the simulations you report. I wonder, though, if the baboon's performance (and whatever strategy they utilized to achieve that performance) actually reflects the extent of the baboon's ability for tracking statistical regularities. I think again the simulations you report might hold insight: how do the learning curves look on the unigram vs. the bigram perceptron? If it is the case that the unigram model performs better *earlier* than the bigram model, this might be telling. The baboon has no idea what these stimuli are—the fact that they are words is irrelevant. They were not trained to appreciate the function of letters, or that words are "constructed". Therefore, there might be a strategic aspect to these results. The baboons tracked the most accessible regularities, and because that won them their reward more often than not, and because exploring a superior but more complex strategy may have deprived them of reward for some period of time (that they wouldn't know would have an end and would bear fruit), they stuck where they were. I realize there is a lot of anthropomorphism in this suggestion. However, I don't think it is fair either to argue "between strategies X and Y, Y is superior for this task. Baboons do not have results consistent with using Y, therefore baboons are incapable of Y." I don't feel like that is what you are arguing, but I feel like it bears mentioning.

Just a thought. Thanks for the post!

[(myl) I would be very surprised if baboons were not sensitive to distributions of pairs or triples of local features, under appropriate circumstances. The point here is just that the data presented in the paper doesn't clearly support the hypothesis that they were paying attention to such distributions in this case.

It might be that careful modeling of the more detailed experimental records might change this. As Yoav notes, the paper's supplementary materials don't actually give us the details of what order things were presented in, and what the responses to individual presentations were.]

Steve Kass said,

April 20, 2012 @ 1:17 pm

I think one should be careful interpreting Yoav’s perceptron results. His results do indicate that there’s sufficient information in the unigram frequencies from which to construct an algorithm that achieves the baboon’s success rate, and that near-perfect success is (mathematically) achievable given the bigram frequencies. This latter finding, though, is not “striking”; it’s by design. The word/nonword lists were constructed specifically so that a linear classifier based on bigram frequencies could succeed perfectly. Strings were only put into the nonword list if their mean bigram frequency was less than the mean bigram frequency of any string in the word list.

The fact that the bigram frequencies are sufficient to determine the type of string (word or nonword) with near-100% success along with the fact that the baboons did not reach near-100% success – these facts together don’t invalidate the possibility that the baboons were in fact assessing digrams and making decisions based on their frequency.

Without suggesting this was Yoav’s point, it would in any case be wrong to say that “if the baboons were processing the digram frequencies, they should have done better, so they weren’t processing the digram frequencies.” Nevertheless, he seems to come close to making this point when he says

I wouldn’t agree that the linear perceptron should provide a lower bound on what the baboons can do. (If primates could do anything describable by logical processes simpler than our brains perform, I would be a much better backgammon player, for example.)

Unlike trained linear classifiers, learned baboons may not give consistent responses to identical stimuli – they may remain imperfect in their ability to apply things they’ve learned. Baboon Dan, for example, might never learn to consistently classify “farm” as a word and “furm” as a nonword; he might get to the point where he deems “farm” a word 80% of the time and “furm” a word 30% of the time. A trained classifier, on the other hand, will assess some stimuli correctly 100% of the time and some incorrectly 100% of the time.

(I am guessing here, because as Yoav noted, the time sequence of baboon stimuli is absent from the article’s supplemental data, and this makes it impossible to find out how they performed once they had “learned” as much as they were able to learn.)

Steve Kass said,

April 20, 2012 @ 1:24 pm

I hadn't read Chris Cox's comment when I wrote and posted mine, and I see that Chris already raised the same concern I did about interpreting the perceptron results. I might have saved some time paying attention and just saying "I also agree with Chris Cox," which I do.

David Fried said,

April 20, 2012 @ 3:52 pm

I don't understand the statistical arguments at all, unfortunately, but with some trepidation I'd like to ask a question anyway. What would be the effect of including nonwords that conform to English morphology, say "tord" or "mard" or "cowel"? At a trivial level, this would make plain that the baboons are not distinguishing English words from 'nonwords' or 'nonsense,' but doing shape/pattern recognition, showing that this experiment has absolutely nothing to do with the language or reading capabilities of baboons.

In fact, the inclusion of such 'nonwords' should help the baboons learn faster, by not limiting the sample field to letter combinations that happen to exist while excluding others that are equally likely.

But then you might vary the acceptable "nonwords" by introducing systematic small variations from acceptability, such as a single impossible consonant cluster like "tlowel." In effect, you would be opposing impossible "tlowel" to real or possible words like "towel" "cowl," "cowel", "rowel," "towel," "dowl," "dowel," "blowel", etc. How long would it take the baboons to recognize that certain combinations, like initial "tl" are impermissible?

I think this is going somewhere, but I'm not sure where . . .

Sparky said,

April 21, 2012 @ 1:02 am

Oh, dear lord, how sweet it would be, at my advanced age, and with my lack of leisure, to attend a "small class on mathematical modeling of linguistic phenomena" . . . .

un malpaso said,

April 21, 2012 @ 9:26 pm

At risk of jumping heedlessly into the explosive primate language debate without a professional ax to grind (how's that for a bizarre metaphor?), I just wanted to ask…

– in what way is "shape/pattern recognition" not relevant to an investigation of language's neural substrates?

– And is it actually such a radical thing, post-Chomsky, to claim that human and nonhuman mammals do exhibit certain similar cognitive processes, some of which may be relevant to the study of the origin of language in a biological continuum?

(I know this kind of precision isn't the issue in mass media accounts, but we are beyond that point here, surely)

Soon they’ll be blogging | Stats Chat said,

April 22, 2012 @ 12:10 am

[…] at which two-letter pairs appeared more frequently in the real words. In fact, as Mark Liberman describes at Language Log, it was probably even simpler than that. If the baboons just recognised the shapes of […]

/df said,

April 25, 2012 @ 12:04 pm

What evidence is there that any individual letters were separately perceived by the baboons? Perhaps they perceived the stimulus words just as combinations of lines: horizontal, vertical, diagonal, curved. Shouldn't one expect to have demonstrated the subjects' knowledge of the character set before testing them for lexical tasks, and before testing hypotheses based on recognising character combinations?

Jess Tauber said,

April 25, 2012 @ 1:28 pm

I've thought for a long time now that it would be fascinating to examine the ability of animals to deal with phonosemantically transparent formations, such as ideophones, from languages that have very many of them. I even tried to get the Rumbaughs to try this some years ago. Would the animals 'get' the mappings? In most cases ideophonic meanings lack the higher levels of abstractness present in many normal lexical class items. They deal with types of sensation, motion, material distribution, emotions and other things that at least higher animals will be familiar with, even if they have neither need nor ability to express them communicatively. The big question I have is whether they build their concepts up from relatively discrete primitives the way we often do, and, if so, whether they can be trained to associate them with particular representational devices.

A.M. said,

April 25, 2012 @ 8:29 pm

I'd just add another point: even assuming that the baboons learned to recognize individual letters and were sensitive to letter position, there is no particular reason to assume that they attended to adjacent letter pairings (bigrams), as opposed to other combinations, such as the endmost letters (first/last). (E.g., is M _ _ T a word? What about X _ _ W?)
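A.M.'s alternative can be sketched in a few lines of Python. This is a hypothetical "frame" model, not anything the study tested. It looks only at the first and last letters and ignores the interior entirely. The training items are made up for illustration.

```python
from collections import Counter

def frame(s):
    # The outer letters only: "meat" -> ("m", "t").
    return (s[0], s[-1])

def train_frames(words, nonwords):
    return Counter(map(frame, words)), Counter(map(frame, nonwords))

def classify_by_frame(s, word_frames, nonword_frames):
    # "Word" if this first/last pair was seen more often on words;
    # ties, including never-seen pairs, default to "nonword".
    f = frame(s)
    return word_frames[f] > nonword_frames[f]

# Made-up training items, for illustration only.
words = ["meat", "most", "mist", "that", "this"]
nonwords = ["xzqw", "xolw", "tqpz"]
wf, nf = train_frames(words, nonwords)
print(classify_by_frame("malt", wf, nf))  # True:  M _ _ T
print(classify_by_frame("xapw", wf, nf))  # False: X _ _ W
print(classify_by_frame("mzqt", wf, nf))  # True, though no interior bigram is English-like
```

The last line shows how such a strategy would pass an implausible interior as long as the outer letters look familiar. A bigram-based account would predict the opposite for items like that.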

Also, as an additional point to frustrate the statisticians, the fact that all 6 baboons learned to distinguish familiar and quasi-familiar letter patterns from unfamiliar ones does not mean that each of the 6 relied on the same mental process for ascertaining salience of features. In fact, the differential performance of the individual baboons could easily be attributable to the top performer (Dan) employing a more effective strategy for feature recognition than the weakest performer.

In any case, it's quite likely that the primates were relying on a combination of salience and feature-recognition strategies. For example, the bigram TH is fairly common at the beginning and end of English words, so its presence would be a useful cue. But that is not true of all bigrams. In some cases, single letters would be a more significant cue to nonword status; for example, most 4-letter sequences containing the letter Q in any position will be nonwords. To the baboons, the ability to identify nonword status was as significant as identifying words: their task was to match a letter sequence to a disc or a cross, and either way they got a treat.

In fact, some baboons might have employed a different sort of statistical analysis. The baboons were presented with stimuli in blocks of 100 trials, evenly divided between words and nonwords ("Words and nonwords were presented randomly in blocks of 100 trials. The 100-trial sessions were composed of 25 presentations of a novel word to learn, 25 presentations of words randomly selected from already learned words, and 50 nonword trials."). So within each block, treat-producing presses are split equally between the cross and disc symbols. This is similar to coin-toss probability analysis, with the constraint that at the end of the series the results will be evenly divided.
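The commenter's point can be checked with a short simulation. This Python sketch models a hypothetical strategy that the paper does not report. It ignores the stimulus entirely and uses the reward feedback to track how many word and nonword trials remain in a 50/50 block. On each trial it guesses the category with more trials left.

```python
import random

def play_block(rng, n_words=50, n_nonwords=50):
    # One shuffled block with a fixed number of word and nonword trials.
    trials = ["word"] * n_words + ["nonword"] * n_nonwords
    rng.shuffle(trials)
    left = {"word": n_words, "nonword": n_nonwords}
    correct = 0
    for answer in trials:
        # Guess whichever category has more trials remaining (ties -> "word").
        guess = "word" if left["word"] >= left["nonword"] else "nonword"
        correct += guess == answer
        left[answer] -= 1  # the reward (or its absence) reveals the answer
    return correct / len(trials)

rng = random.Random(1)
mean_acc = sum(play_block(rng) for _ in range(2000)) / 2000
print(f"mean accuracy over 2000 blocks: {mean_acc:.3f}")
```

Because the block composition is fixed, this guesser averages somewhat above 50% without knowing anything about letters. The edge is largest near the end of a block. Whether any baboon actually did this is an open question.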

Dom Massaro said,

April 27, 2012 @ 4:41 pm

The reanalysis of the baboon study reveals a situation endemic to behavioral inquiry: manipulating one variable inevitably produces variation in other variables. In the baboon study, manipulating positional bigram frequency changed overall single-letter frequency. To overcome this limitation in this type of study, we have carried out individual item analyses to determine how several variables predict performance on the individual items. With human readers, we have found that position-sensitive log bigram frequency is a much better predictor than single-letter frequency. This would be a telling analysis to carry out on the baboon data. Hopefully the authors have the performance measures for both the “word” and “nonword” individual items and, if they do, they will provide us with these analyses.
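The two predictors Massaro contrasts can be computed per item roughly as follows. This Python sketch is only illustrative. The toy lexicon is made up, and the add-one smoothing and the summed single-letter count are assumptions. A real analysis would use a large, frequency-weighted lexicon together with the per-item accuracies, if the authors have them.

```python
import math
from collections import Counter

def positional_bigram_counts(lexicon):
    # Count each bigram together with its start position, e.g. ("th", 0).
    counts = Counter()
    for w in lexicon:
        for i in range(len(w) - 1):
            counts[(w[i:i+2], i)] += 1
    return counts

def letter_counts(lexicon):
    # Position-free single-letter counts.
    return Counter(ch for w in lexicon for ch in w)

def log_positional_bigram_freq(item, counts):
    # Sum of log(count + 1) over the item's positional bigrams;
    # add-one smoothing keeps unseen bigrams at log(1) = 0.
    return sum(math.log(counts[(item[i:i+2], i)] + 1) for i in range(len(item) - 1))

def summed_letter_freq(item, counts):
    return sum(counts[ch] for ch in item)

lexicon = ["that", "then", "this", "than"]  # toy lexicon, illustration only
bigrams, letters = positional_bigram_counts(lexicon), letter_counts(lexicon)
for item in ["that", "hatt"]:
    print(item, round(log_positional_bigram_freq(item, bigrams), 2),
          summed_letter_freq(item, letters))
```

"that" and "hatt" contain the same letters, so their summed letter frequency is identical, while only "that" gets a nonzero positional bigram score. With per-item accuracies, each predictor could then be correlated with performance, for example using `statistics.correlation` (Python 3.10+).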

On a more positive note, regardless of what variable best predicts the baboon behavior, the results show that the baboons processed the items in terms of their individual letters. From my vantage point, this result is a new and strong finding.

Massaro, D.W., Taylor, G.A., Venezky, R.L., Jastrzembski, J.E., & Lucas, P.A. (1980). Letter and Word Perception: The Role of Visual Information and Orthographic Structure in Reading. Amsterdam: North-Holland.

Massaro, D.W., & Cohen, M.M. (1994). Visual, Orthographic, Phonological, and Lexical Influences in Reading. Journal of Experimental Psychology: Human Perception and Performance, 20, 1107-1128. http://mambo.ucsc.edu/publications/all-papers.html#1994

Massaro, D.W., & Jesse, A. (2005). The Magic of Reading: Too Many Influences for Quick and Easy Explanations. In T. Trabasso, J. Sabatini, D.W. Massaro, & R.C. Calfee (Eds.), From Orthography to Pedagogy: Essays in Honor of Richard L. Venezky (pp. 37-61). Mahwah, NJ: Lawrence Erlbaum Associates. http://mambo.ucsc.edu/publications/all-papers.html#2005

Garrett Wollman said,

April 27, 2012 @ 9:09 pm

Several days late, I know, but I just wanted to point out that my undergraduate computer science education, two decades ago, didn't get anywhere near machine-learning algorithms (unless that was discussed in one of the algorithms prof's 8 AM lectures that I missed). If modern CS undergrads actually do get into that sort of material, without taking any AI electives, the field has really made substantial progress.

Dan Scherlis said,

May 4, 2012 @ 1:50 pm

For the record, here's another example of some over-interpreted and overly-credulous reporting on this work, from National Geographic.

A 2-minute video shows the experiment in action.

The narrator tells us:

Of course, Mark gives us plenty of alternate debunkings for that "long-held notion", and those "ancient abilities" might include vision, pattern-recognition, and frequency-estimation.

From: http://video.nationalgeographic.com/video/news/animals-news/baboons-learn-words-vin/

Mike Jones said,

November 22, 2013 @ 6:59 pm

Does the following have any relevance to the experiment?

Only first letter and last letter matter in spelling.

("I cnduo't bvleiee taht I culod aulaclty uesdtannrd waht I was rdnaieg.")

http://www.ecenglish.com/learnenglish/lessons/can-you-read
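The transformation in that example is easy to reproduce. This is a minimal Python sketch. It assumes words are separated by spaces and carry no attached punctuation. It keeps each word's first and last letters and shuffles the rest. For four-letter items like the baboon stimuli, only the two middle letters can move, so "farm" can only become "fram".

```python
import random

def scramble_interior(word, rng):
    # Keep the first and last letters; shuffle the letters in between.
    if len(word) <= 3:
        return word
    middle = list(word[1:-1])
    rng.shuffle(middle)
    return word[0] + "".join(middle) + word[-1]

def scramble_text(text, rng):
    # Assumes plain space-separated words with no attached punctuation.
    return " ".join(scramble_interior(w, rng) for w in text.split())

rng = random.Random(7)
print(scramble_text("I could not believe that I could actually understand", rng))
```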