Replicate vs. reproduce (or vice versa?)


Lorena Barba, "Terminologies for Reproducible Research", arXiv.org 2/9/2018:

Reproducible research—by its many names—has come to be regarded as a key concern across disciplines and stakeholder groups. Funding agencies and journals, professional societies and even mass media are paying attention, often focusing on the so-called "crisis" of reproducibility. One big problem keeps coming up among those seeking to tackle the issue: different groups are using terminologies in utter contradiction with each other. Looking at a broad sample of publications in different fields, we can classify their terminology via decision tree: they either, A—make no distinction between the words reproduce and replicate, or B—use them distinctly. If B, then they are commonly divided in two camps. In a spectrum of concerns that starts at a minimum standard of "same data+same methods=same results," to "new data and/or new methods in an independent study=same findings," group 1 calls the minimum standard reproduce, while group 2 calls it replicate. This direct swap of the two terms aggravates an already weighty issue. By attempting to inventory the terminologies across disciplines, I hope that some patterns will emerge to help us resolve the contradictions.

This is a careful, exhaustive, and detailed survey of an unfortunate development that has introduced considerable confusion into a crucial area of science and engineering.

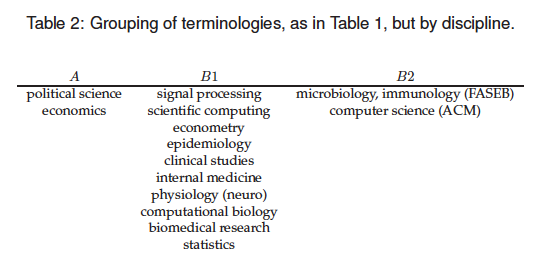

Barba notes that the current confusion divides up by discipline this way:

Her summary of the historical events that led to this debacle:

The Claerbout/Donoho/Peng terminology [(myl) her B1] is broadly disseminated across disciplines (see Table 2). But the recent adoption of an opposing terminology by two large professional groups—ACM and FASEB—make standardization awkward. The ACM publicizes its rationale for adoption as based on the International Vocabulary of Metrology, but a close reading of the sources makes this justification tenuous. The source of the FASEB adoption is unclear, but there’s a chance that Casadevall and Fang (2010) had an influence there. They, in turn, based their definitions on the emphatic but essentially flawed work of Drummond (2009).

And her recommendations for the future:

It seems that any attempt to unify terminologies can only start by conversations with ACM and FASEB to explore how slim the chance might be that they backtrack their adoption to align with the predominant usage. Conversations with leaders in the fields of political science and economics, where ‘replication’ is predominant, should follow. Use of the term here often applies to a new study that aims to confirm findings reported in a publication (and thus aligns with Peng’s), but it also spills over to calling the research compendia ‘replication files.’ This custom in the American Journal of Political Science is unlikely to be reversed, but it can be absorbed into the Claerbout/Donoho/Peng convention, as reproducible research is also necessary for successful replication. Whereas economics and political science are predominantly empirical disciplines, using software for statistical analysis is ubiquitous. A feasible way out would be if these fields accept calling ‘reproducible research’ that which openly archives full sets of ‘replication files.’

Some past LLOG coverage of the general issue of reproducibility:

"Reproducible research", 11/14/2008 (see also here)

"Reproducible Science at AAAS 2011", 2/18/2011 (see also here and here)

"Literate programming and reproducible research", 2/22/2014

"Reliability", 2/28/2015

And a note about the terminological mess that Prof. Barba documents and explains in depth and detail:

"Replicability vs. reproducibility — or is it the other way around?", 10/31/2015

John Spevacek said,

February 15, 2018 @ 6:55 am

Interesting work. I hope that a third party will be able to re____________ the results.

(Sorry, couldn't help myself.)

mgh said,

February 15, 2018 @ 12:26 pm

This passage from the linked arxiv article is not consistent with placing biomedical research in B1 (rather than A):

"The National Institutes of Health (NIH) maintains a website titled “Rigor and Reproducibility,” but does not provide terminology.4 It set up requirements for strengthening reproducibility of biomedical research in January 2016, providing instructions for grant proposals. NIH focuses on rigor (concerning methods and materials) and transparency (concerning publication). Their defini- tion of reproducibility is the straight combination of those two notions, while making no particular distinction with the word ‘replication’"

It also does not seem factually correct, as the NIH does seem to be clear that it is using reproducibility to mean different scientists following the same experimental design:

"Two of the cornerstones of science advancement are rigor in designing and performing scientific research and the ability to reproduce biomedical research findings. The application of rigor ensures robust and unbiased experimental design, methodology, analysis, interpretation, and reporting of results. When a result can be reproduced by multiple scientists, it validates the original results and readiness to progress to the next phase of research."

"Replicates" are usually used (at least in biomedical research) to mean the same scientists performing the same experiments with the same equipment (ie, technical replicates). As reflected in the NIH standards, "reproducibility" usually refers to different scientists performing the same experiments with different equipment.

Ethan said,

February 15, 2018 @ 1:18 pm

As a biomedical scientist, I agree with the comment of mgh. However, I think there are actually two distinct uses of "reproduce" that are relevant in this field and are generally clear in context. One refers to recalculation or reevaluation of conclusions from the same original data, for example when a referee notes "I cannot reproduce the claimed level of significance from the values reported in table 3". This is a case of "same data, same method, different scientist" (B1). The second use, as noted by mgh, is an attempt by different scientists to re-perform the original experiment and analyze the new data using the original method (B2). I would not expect the word "replicate" to be used for either of these cases.

KevinM said,

February 15, 2018 @ 3:22 pm

And don't forget the Journal of Irreproducible Results.

http://www.jir.com/

For what it's worth in the usage survey above, this scientific humor magazine was founded in 1955 by a virologist and a physicist.

Ned said,

February 15, 2018 @ 3:42 pm

For biomedical scientists there is another definition of replicates, as opposed to repeats. Suppose you extract something (RNA, protein, blood sample, whatever) from a mouse, and then run some analysis on this material in triplicate. The assay in triplicate can tell you something about the reliability of the assay, for example if you made a pipetting error with one of the three. However, as far as *biological* variability is concerned you still have n = 1 mouse, and you need to analyze more mice to provide evidence for the reproducibility of the results. For statistical analysis, 5 mice assayed in triplicate is n = 5, not n = 15.

As someone who deals with a lot of manuscripts I still find authors who are not clear on this. I generally suggest that they read this paper from David Vaux: Vaux DL, Fidler F, Cumming G. Replicates and repeats–what is the difference and is it significant? A brief discussion of statistics and experimental design. EMBO Rep. 2012 Apr 2;13(4):291-6. doi: 10.1038/embor.2012.36. PubMed PMID: 22421999; PubMed Central PMCID: PMC3321166.

Vaux would call the results of the triplicate assays replicates and those with different mice repeats.
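The arithmetic in the comment above (5 mice assayed in triplicate is n = 5, not n = 15) can be made concrete with a small sketch. The readings below are invented for illustration, and the function names `per_mouse_means` and `summarize` are hypothetical. Averaging the technical replicates within each mouse before summarizing is one common way to handle this, not the only one.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical readings (made-up numbers): 5 mice, each sample assayed in triplicate.
READINGS = {
    "mouse_1": [10.1, 10.3, 9.9],
    "mouse_2": [12.0, 11.8, 12.2],
    "mouse_3": [9.5, 9.7, 9.6],
    "mouse_4": [11.1, 11.0, 11.2],
    "mouse_5": [10.6, 10.4, 10.8],
}


def per_mouse_means(readings):
    """Collapse each mouse's technical replicates into a single value."""
    return {mouse: mean(values) for mouse, values in readings.items()}


def summarize(readings):
    """Summarize across mice; n counts mice, not individual assay runs."""
    means = list(per_mouse_means(readings).values())
    n = len(means)
    sd = stdev(means)  # spread between mice (biological variability)
    return {"n": n, "mean": mean(means), "sd": sd, "sem": sd / sqrt(n)}


summary = summarize(READINGS)
total_assays = sum(len(values) for values in READINGS.values())
print(f"assay runs: {total_assays}, biological n: {summary['n']}")
```

The spread within each triplicate speaks to the reliability of the assay, while the spread between the per-mouse means is what any statistical comparison across animals should rest on.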

DCA said,

February 16, 2018 @ 5:42 pm

Perhaps (off the cuff without yet reading the paper), the distinction can be clarified by the noun used? I'd say "reproducible research" as opposed to "replicable results" or "replicable findings". So far as I know "replicate" as used (for a very long time) by philosophers of science means "do the whole thing over from scratch and get the same answer", where "the thing" they have in mind is a lab experiment: so, different data because you collected the results anew.

I'm certainly biased by having read Claerbout's paper soon after it came out, and thinking, I should aim to make this possible for myself: his focus was on, can you reproduce what you yourself did a few years back?