Are "heavy media multitaskers" really heavy media multitaskers?

A few days ago, I asked for help in tracking down some of the scientific support for Matt Richtel's claims about the bad effects of "digital overload" ("More factoid tracking", 9/1/2010). One of the more trackable factoids was the "study conducted at Stanford University, which showed that heavy multimedia users have trouble filtering out irrelevant information — and trouble focusing on tasks". And sure enough, The Neurocritic quickly came up with a reference that fits: Eyal Ophir, Clifford Nass, and Anthony Wagner, "Cognitive control in media multitaskers", PNAS, Published online before print 8/24/2009.

I believe that the full paper is freely available at the link given above (please let me know if this is wrong), and if you're interested in this topic, I urge you to read it. As in the case of the last paper by a Stanford psychologist that was discussed here, you should start by asking "Never mind the conclusions, what's the evidence?". And again, you may conclude that the descriptions of this research — in the popular press and even in the original paper — lead readers pretty far beyond the interpretations warranted by the research itself.

Here's the paper's abstract:

Chronic media multitasking is quickly becoming ubiquitous, although processing multiple incoming streams of information is considered a challenge for human cognition. A series of experiments addressed whether there are systematic differences in information processing styles between chronically heavy and light media multitaskers. A trait media multitasking index was developed to identify groups of heavy and light media multitaskers. These two groups were then compared along established cognitive control dimensions. Results showed that heavy media multitaskers are more susceptible to interference from irrelevant environmental stimuli and from irrelevant representations in memory. This led to the surprising result that heavy media multitaskers performed worse on a test of task-switching ability, likely due to reduced ability to filter out interference from the irrelevant task set. These results demonstrate that media multitasking, a rapidly growing societal trend, is associated with a distinct approach to fundamental information processing.

Note, by the way, that the authors (unlike Matt Richtel) carefully avoid any suggestion of causation, instead writing about "systematic differences in information processing styles" and "a distinct approach to fundamental information processing". This way of putting it is consistent with media multitasking causing cognitive disabilities (in this experiment's sample), or cognitive disabilities causing more media multitasking, or some third variable influencing both. But as we'll see, it's not completely clear that the claimed association (between media multitasking and certain information-processing deficits) even exists.

What's the evidence? It starts, of course, with that "trait media multitasking index". They administered a 20-minute online questionnaire to 262 Stanford undergraduates, presumably from the Psych Department's subject pool.

The questionnaire addressed 12 different media forms: print media, television, computer-based video (such as YouTube or online television episodes), music, nonmusic audio, video or computer games, telephone and mobile phone voice calls, instant messaging, SMS (text messaging), email, web surfing, and other computer-based applications (such as word processing). For each medium, respondents reported the total number of hours per week they spend using the medium. In addition, they filled out a media-multitasking matrix, indicating whether, while using this primary medium, they concurrently used each of the other media “Most of the time,” “Some of the time,” “A little of the time,” or “Never.” As text messaging could not accurately be described by hours of use, this medium was discarded from the analysis as a primary medium, although it still appeared as an option in the matrix (meaning respondents could still report text messaging while being engaged in other media).

They then created a "Media Multitasking Index" (MMI).

Step 1 was to translate verbal responses about concurrent media usage into numbers, on a scale from "Never" = 0 to "Most of the time" = 1.

Step 2 was to create the sum $m_i$ of the numeric responses about concurrent media use for each of the 11 primary media. Thus a student who indicated that while web surfing (the 10th medium in the list), she listened to music "Most of the time", engaged in voice phone calls "Some of the time", checked email "Some of the time", engaged in IM conversations "Some of the time", and responded to SMS messages "Some of the time", would get $m_{10}$ = 1+0.67+0.67+0.67+0.67 = 3.68.

Step 3 created the individual's MMI as a weighted sum of the $m_i$ values,

$$\mathrm{MMI} = \sum_{i=1}^{11} \frac{m_i \times h_i}{h_{\mathrm{total}}}$$

where $h_i$ is the number of hours per week that the student estimated she spent using primary medium $i$, and $h_{\mathrm{total}}$ is the sum of all media hours. Note that multimedia hours are counted multiple times, so that a respondent's $h_{\mathrm{total}}$ may be larger than her number of waking hours. Unsurprisingly, therefore,

Media multitasking was correlated with total hours of media use, r (260) = 0.46, P < 0.001.
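The three steps above can be sketched in a few lines of Python. This is only an illustration, not the authors' code: the value 0.33 for "A little of the time" is an assumption (the text gives only the endpoints and the 0.67 used in the example), the weighted sum is assumed to be the sum of m_i × h_i divided by h_total, and the two-medium respondent is made up.

```python
# Step 1: assumed numeric coding of the four verbal responses.
RESPONSE_SCORE = {
    "Never": 0.0,
    "A little of the time": 0.33,  # assumption: not stated in the text
    "Some of the time": 0.67,
    "Most of the time": 1.0,
}

def concurrent_use_score(responses):
    """Step 2: sum the coded responses for one primary medium (m_i)."""
    return sum(RESPONSE_SCORE[r] for r in responses)

def media_multitasking_index(m, h):
    """Step 3: hours-weighted sum of the m_i values, divided by total hours.

    m and h map each primary medium to its m_i and its reported hours h_i.
    """
    h_total = sum(h.values())
    return sum(m[medium] * h[medium] for medium in m) / h_total

# The web-surfing example from the text.
m_web = concurrent_use_score([
    "Most of the time",  # music
    "Some of the time",  # voice calls
    "Some of the time",  # email
    "Some of the time",  # IM
    "Some of the time",  # SMS
])
print(round(m_web, 2))  # 3.68

# Hypothetical respondent with only two primary media, for illustration.
m = {"web surfing": m_web, "print media": 0.33}
h = {"web surfing": 20.0, "print media": 5.0}
print(round(media_multitasking_index(m, h), 2))  # 3.01
```

Because each m_i is weighted by the share of media hours spent on that medium, a respondent's index is dominated by whatever she reports doing alongside her most-used media.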

The authors observe that

The MMI produced a relatively normal distribution, with a mean of 4.38 and standard deviation of 1.52. This suggests that there is not a bimodal distribution of “heavy multitaskers” and “nonmultitaskers.” Nonetheless, we can identify individuals who very frequently use multiple media and those who tend to limit their use of multiple media. […]

Based on the questionnaire, those students with an MMI less than one standard deviation below the mean (LMMs) or an MMI greater than one standard deviation above the mean (HMMs) were invited to participate. The invitation yielded 22 LMMs and 19 HMMs who gave informed consent and participated in the study for course credit.

(In fact, the number actually participating in the various subexperiments varied, down to 15 "Low Media Multitaskers" (LMMs) and 15 "High Media Multitaskers" (HMMs).)

Before going on to the behavioral experiments and their results, it's worth saying a few things about this Media Multitasking Index. First, high MMI values may correspond to many different patterns of life experience. You might be someone who has music coming into at least one earbud during many other life activities, media-related or otherwise; you might be someone who likes to keep a baseball or basketball game going in the background while studying or websurfing; you might be someone who's often talking on the phone or IMing friends while surfing the web. Each of these patterns could contribute the same amount to the MMI, but it's plausible that their psychological causes and effects (and their associations with other relevant lifestyle variables) are quite different.

Given this likely diversity of HMM experience (and we'll see later that the HMM group tends to have more variable performance on the behavioral tests), the rather small number of subjects is a reason for concern. Given that the study aimed to understand the effects of interactions among 12 different forms of "media", it's troubling that there were only 15-19 "High Media Multitaskers" tested, and that we don't know what sorts of media multitasking they actually reported engaging in. (And while we're focusing on this issue, it's worth noting that the pool of survey responders with MMIs at least one sd above or below the mean should have been (1/3)*262 ≅ 87, raising as usual the possibility that the transition from 87 possibilities to 19+22=41 volunteers might have introduced some sort of selection bias.)
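The size of the eligible pool can be checked with a short calculation. The sketch below assumes that the MMI values are exactly normally distributed, which the paper describes only as "relatively normal", so the second figure is an idealization rather than a count from the data.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

n_respondents = 262
n_volunteers = 19 + 22

# Share of a normal distribution lying more than 1 SD from the mean.
tail_share = 2.0 * (1.0 - normal_cdf(1.0))

rough_pool = n_respondents / 3           # the one-third approximation
exact_pool = n_respondents * tail_share  # if MMI were exactly normal

print(f"tail share: {tail_share:.3f}")
print(f"eligible pool: about {rough_pool:.0f} (rough) or {exact_pool:.0f} (exact normal)")
print(f"share of the pool that took part: {n_volunteers / rough_pool:.0%}")
```

Either way, fewer than half of the students who qualified ended up in the experiments.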

This is Yet Another Reason to publish raw data — there's no reason that the authors couldn't have published (in a convenient digital form) all their media-use matrices, keyed to the performance of individual subjects on the various behavioral tests. This might make it possible to consider various alternative theories about which aspects of the media-use responses explained how much of the observed differences in performance (to the extent that the small number of subjects permits).

But this brings up a second — and much more important — issue with the MMI: it's entirely based on self-report. It's well known that self-reported measures of life patterns are not very reliable. Specifically, according to Bradley Greenberg et al., "Comparing survey and diary measures of Internet and traditional media use", Communication Reports 18(1):1–8, 2005:

Between the survey and diary methods, correlations range from .20 for listening to music off-line to .58 for email sent. Internet estimates are correlated .39 and television time estimates are correlated .35.

In general, subjects tend to over-report such activities in survey responses — by about 25% overall, in the just-cited study. But more important, the divergences between facts and self-reports are subject to several forms of recall bias, as a result of which conclusions based on self-reported data always need to be interpreted with great caution.

Let me give a personal example that may be relevant to this case. If you were to ask me to estimate how many stair-steps I encounter per day, the answer I give today would be significantly higher than the answer I would have given before August 3, when I tore some ligaments in my left knee. For someone on crutches, with one leg unable to bear weight, stairs are a lot more salient than they are for someone who can bound up and down them without any problems or concerns. There are a surprising number of stairways around Penn's campus that I never really noticed before, even though I go up and down them almost every day.

So it wouldn't be surprising to find that people with certain physical handicaps tend to give a higher estimate of the number of stairsteps they encounter in daily life, just because climbing stairs is more salient and thus more memorable to them. If we use such self-reported estimates as the basis for an epidemiological study of the effect of chronic stair-climbing on physical agility, we risk being led astray.

Similarly, it's plausible that people who "have trouble filtering out irrelevant information — and trouble focusing on tasks" might find the distractions of everyday multitasking more salient and thus more memorable, and therefore might tend to give somewhat higher estimates of how much of such multitasking they engage in. So given noisy estimates of media multitasking, and even a modest salience effect of this kind, the set of subjects with high MMIs may be significantly enriched in individuals who are more distractable or otherwise cognitively less able to deal with the demands of such multitasking.
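A toy simulation shows how this kind of enrichment could arise. Everything here is a made-up assumption for illustration (the noise level, the size of the salience effect, the sample size); none of it is estimated from the study. Actual multitasking and distractibility are generated independently, so any difference between the groups comes only from the reporting process.

```python
import random
import statistics

def simulate(n=20000, noise_sd=1.0, salience=0.3, seed=0):
    """Return mean distractibility in the high- and low-report groups.

    All parameters are illustrative assumptions, not estimates from the study.
    """
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        true_use = rng.gauss(0, 1)         # actual multitasking (standardized)
        distractibility = rng.gauss(0, 1)  # independent of actual use by construction
        # Self-report = truth + recall noise + a salience bonus for the distractible.
        reported = true_use + rng.gauss(0, noise_sd) + salience * distractibility
        people.append((reported, distractibility))

    reports = [r for r, _ in people]
    mean, sd = statistics.fmean(reports), statistics.pstdev(reports)
    high = [d for r, d in people if r > mean + sd]  # "HMM" by self-report
    low = [d for r, d in people if r < mean - sd]   # "LMM" by self-report
    return statistics.fmean(high), statistics.fmean(low)

high_mean, low_mean = simulate()
print(f"mean distractibility, high reporters: {high_mean:+.2f}")
print(f"mean distractibility, low reporters:  {low_mean:+.2f}")
```

Under these assumptions the high-report group comes out more distractible than the low-report group, even though distractibility has no relation to actual media use in the simulated population. Setting `salience=0.0` makes the gap disappear.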

I'm not going to discuss all of this paper's experimental results in detail, but I'd like to point out three things that seem to apply across all of them: the differences between the LMM and HMM groups are rare (that is, they appear only in a fraction of the experimental conditions where they might have been found), modest in effect size, and associated with higher variance in the HMM sample.

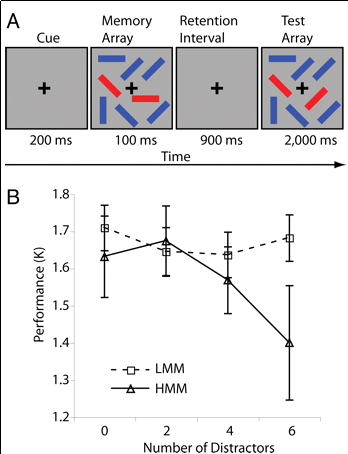

Thus consider their "Filter Task", where "participants viewed two consecutive exposures of an array of rectangles and had to indicate whether or not a target (red) rectangle had changed orientation from the first exposure to the second, while ignoring distractor (blue) rectangles":

Fig. 1. The filter task. (A) A sample trial with a 2-target, 6-distractor array. (B) HMM and LMM filter task performance as a function of the number of distractors (two targets). Error bars, SEM.

The only statistically significant difference between the LMM and HMM groups appears in the 6-distractor case, where there is a difference of about 0.3 (1.4 to 1.7) in the performance measure K. K is a somewhat complex measure, described in Edward Vogel et al., "Neural measures reveal individual differences in controlling access to working memory", Nature 438:500–503, 2005:

We computed visual memory capacity with a standard formula that essentially assumes that if an observer can hold in memory K items from an array of S items, then the item that changed should be one of the items being held in memory on K/S trials, leading to correct performance on K/S of the trials on which an item changed. To correct for guessing, this procedure also takes into account the false alarm rate.
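The quoted description matches the capacity estimate commonly written as K = S × (H − F), where H is the hit rate and F is the false alarm rate. The sketch below assumes that this is the "standard formula" meant, and that S is the number of targets (two in the filter task); the rates are hypothetical values chosen only to land near the group means mentioned above.

```python
def capacity_k(set_size, hit_rate, false_alarm_rate):
    """Capacity estimate K = S * (H - F), correcting hits for guessing."""
    return set_size * (hit_rate - false_alarm_rate)

# Hypothetical rates for a two-target array, chosen only for illustration.
print(round(capacity_k(2, 0.90, 0.05), 2))  # 1.7
print(round(capacity_k(2, 0.80, 0.10), 2))  # 1.4
```

With two targets K cannot exceed 2, so a gap of 0.3 corresponds to a difference of 0.15 in the guessing-corrected hit rate.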

In the crucial six-distractor case, the standard error for the HMM group was about 0.15 items, which corresponds to a standard deviation of about 0.15*sqrt(19) = 0.65, or more than twice the difference in the groups' mean values. The standard error for the LMM group was about a third of that. We don't have the raw data to check, but this is consistent with a pattern in which the lower performance of the HMM group was driven mainly by a few individuals with especially low scores, who are in the HMM group not because they actually engage in a lot of media multitasking, but because they are "multitasking handicapped" and so overestimate their consumption.
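The conversion from standard error to standard deviation can be written out as follows. The inputs are the approximate values read off the figure, not raw data, and n = 19 assumes that all 19 HMMs took part in this task.

```python
import math

def sd_from_sem(sem, n):
    """Recover a sample standard deviation from its standard error of the mean."""
    return sem * math.sqrt(n)

hmm_sd = sd_from_sem(0.15, 19)  # SEM read off the figure, n = 19 HMMs
group_difference = 0.3          # approximate gap in K at six distractors

print(round(hmm_sd, 2))                     # 0.65
print(round(hmm_sd / group_difference, 1))  # 2.2
```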

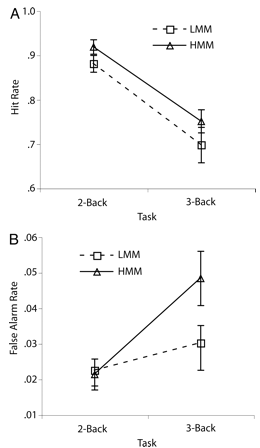

I'll end with their "two- and three-back" task, a version of a classic short-term memory experiment:

[P]articipants were presented a series of individual letters in the middle of the screen. Each letter was presented for 500 ms, followed by a white screen for 3,000 ms. Upon presentation of a letter, participants were to indicate whether or not the present letter was a “target,” meaning that it matched the letter presented two (for the two-back task) or three (for the three-back task) trials ago.

Before seeing the data, you might expect that the HMM group would be more likely to forget the correct letter in all conditions, and even more likely to do so in the three-back condition compared to the two-back condition. But what happened was this:

There was no significant difference in the hit rate for the 2-back or 3-back conditions, or in the false alarm rate for the 2-back condition. The only difference was in the false alarm rate for the 3-back condition. Again, the difference was modest in terms of effect size: about 4.8% vs. 3.2%, or a difference of about 1.6 percentage points in false alarm rate in the 3-back condition, where the HMM group has a standard error of about 0.7, or a standard deviation of 0.7*sqrt(15) = 2.7, corresponding to an effect size of about d=0.6.
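The arithmetic behind that effect size is sketched below. Note that it standardizes by the HMM group's standard deviation alone, as in the text; the more conventional Cohen's d would use a pooled standard deviation, which can't be computed here without the LMM group's values. All inputs are approximate figures read from the paper's plot.

```python
import math

hmm_fa, lmm_fa = 4.8, 3.2  # approximate false alarm rates, in percent
hmm_sem, hmm_n = 0.7, 15   # approximate SEM and group size for HMMs

difference = hmm_fa - lmm_fa         # in percentage points
hmm_sd = hmm_sem * math.sqrt(hmm_n)  # SD recovered from the SEM
d = difference / hmm_sd              # standardized by the HMM SD only

print(round(difference, 1), round(hmm_sd, 1), round(d, 1))  # 1.6 2.7 0.6
```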

The authors also performed a more complicated piece of data analysis:

This [pattern of performance] indicates that the HMMs were more susceptible to interference from items that seemed familiar, and that this problem increased as working memory load increased (from the two- to the three-back task). This problem also became more acute for HMMs as the task progressed, because proactive interference from irrelevant letters accumulated across the experiment. Specifically, a general linear model of the likelihood of a false alarm in the three-back task revealed: (a) no main effect of HMM/LMM status nor of time, but (b) a significant HMM/LMM status*time interaction, such that the number of false alarms increased over time more rapidly for HMMs, B = 0.081, P < 0.001. These data demonstrate that HMMs are not only less capable of filtering out irrelevant stimuli from their environment, but also are less capable of filtering out irrelevant representations in memory.

This seems plausible. But it leaves entirely open the question of causation. Are the members of the HMM group "less capable of filtering out irrelevant stimuli from their environment [… and] less capable of filtering out irrelevant representations in memory" because their brains have been damaged by media multitasking? Or are they in the HMM group, not because they actually do more media multitasking, but because they're less able to deal with it and therefore more likely to remember and report it? Or, perhaps, are they more tolerant of media multitasking because they just don't care as much about the details of what happened where and when?

This paper also leaves open the question of what sorts of media multitasking are really in play here, and what other variables might be associated with MMI differences. The authors did consider the problem of associated variables:

[A] new group of 110 participants filled out the MMI questionnaire as well as their SAT scores, gender, the Need for Cognition index questionnaire, the Big Five Trait Taxonomy (including measures of extraversion, agreeableness, conscientiousness, neuroticism, and openness), and a creativity task derived from the Torrance Tests of Creative Thinking (TTCT). The data from participants with an MMI one standard deviation or more above and below the mean (16 HMMs and 17 LMMs) were compared.

They found no significant differences — but again, it's a very small sample, and differences that would be rejected in this test as plausibly due to sampling error might still have been large enough to affect the experimental results in the earlier sample.

If we had access to the raw media matrix and the associated experimental scores, we might find (say) that their HMM group happened to include a subgroup whose MMI is high because they (report that they) listen to music during all other life activities, and who tend to smoke pot more often than their peers. Alternatively, Ophir et al.'s 15-19 HMM students might happen to include a substantial mix of driven over-achievers who network obsessively while studying and web-surfing, and are also among the substantial percentage of Stanford undergraduates who take Ritalin or Adderall in order to improve their academic performance and decrease the need for sleep.

From a certain point of view, all of this criticism is unfair. You can always think of alternative models to fit and new controls to run, to check and perhaps to challenge the conclusions of any study. And compared to some attempts to enlist science in discussions of social policy, Matt Richtel's (implicit) reference to this paper rates very highly: the research is actually on the topic, and supports the desired conclusion.

But in my opinion, it doesn't support it nearly strongly enough. What's at stake here is a set of major choices about social policy and personal lifestyle. If it's really true that modern digital multitasking causes significant cognitive disability and even brain damage, as Matt Richtel claims, then many very serious social and individual changes are urgently needed. Before starting down this path, we need better evidence that there's a real connection between cognitive disability and media multitasking (as opposed to self-reports of media multitasking). We need some evidence that the connection exists in representative samples of the population, not just a couple of dozen Stanford undergraduates enrolled in introductory psychology. And we need some evidence that this connection, if it exists, has a causal component in the multitasking-to-disability direction.

What does all this have to do with language? Well, most of the types of "media" at issue here involve speech or text. This is also an interesting example of the rhetorical trope "studies show that …". And it's part of my on-going investigation of the interpretation of generic plurals. Of course, all of these connections are minor compared to the possibility that digital media are ruining our brains.

Clifford Nass said,

September 4, 2010 @ 1:26 pm

Thank you for the extremely thoughtful discussion of the paper. I think that your concerns are valid.

To the concerns of sample sizes, I am very happy to say that many other labs are replicating and extending our research. This is a much better way to deal with a small sample than to do internal analyses (although those are certainly a very good thing). We will see what happens.

The issue of causality is hugely important, both theoretically and practically. You are right that we do not make claims about cause and effect because they cannot be supported by the data. We are looking at doing training studies in which we have high multitaskers change their behavior for two weeks. We're also thinking about fMRI and other techniques to triangulate what's happening.

Your point about music at the end of the article is particularly interesting. We are about to start an experiment in which people will perform the tasks described in the paper while listening to music with lyrics, the same music without lyrics, and no music at all.

Elizabeth Zwicky said,

September 4, 2010 @ 1:35 pm

There's another factor here. The author assumes that listening to music is increasing cognitive load. But as anyone who works in a dorm or a cubicle will tell you, many people with headphones on are explicitly attempting to decrease distraction and cognitive load. They are not "multitasking", they are blocking out distractions. Thus, you would expect more distractible people to do more "multitasking", if using a computer while listening to music is considered multitasking.

[(myl) A good point. On airplane trips, I usually listen to music while simultaneously reading, and I almost never do these two things together otherwise. The motivation while traveling is clearly to reduce distraction, not to increase it.

Personal music-reproduction devices didn't exist while I was an undergraduate and lived in crowded circumstances with other active and noisy people. But if it had been possible, I probably would have listened to music while reading or studying or writing, for just the same reason.]

Blake Stacey said,

September 4, 2010 @ 2:06 pm

Ah, injury stories!

When I tore a meniscus in my left knee last year, I discovered all sorts of stair steps I had never even known were there. The same goes for the seating in subway stations — who notices how many benches there are until they're needed?

Good point. And it's not likely that all music is equal for such purposes. Beyond the simple distinction of lyrics/no lyrics, there's lyrics in a known language versus lyrics in a foreign tongue the listener does not understand, familiar lyrics versus unfamiliar, intelligible versus distorted, and so forth.

Blake Stacey said,

September 4, 2010 @ 2:10 pm

I'm able to download it from home, so it appears to be freely available.

Jarek Weckwerth said,

September 4, 2010 @ 4:05 pm

@C. Nass: We are about to start an experiment in which people will perform the tasks described in the paper while listening to music with lyrics, the same music without lyrics, and no music at all.

You could add lyrics in a second language (that is, one that the subjects do speak but not natively, e.g. Spanish). I have a feeling that the distracting (?) power of e.g. television is quite different in your native language and a second language.

Debbie said,

September 4, 2010 @ 4:24 pm

I think that with regard to music there are further considerations: whether or not the music is familiar or unfamiliar and, whether or not you want to focus on it. If it’s unfamiliar but I’m enjoying it, I may focus on it. Conversely, if I find a piece disagreeable, I may have difficulty tuning it out just as I would a piece that I enjoy. Having said that, I may also find it easier to tune out familiar music. Have studies not been done in the past to determine performance with white noise, voices, music, or silence as backgrounds?

John Cowan said,

September 4, 2010 @ 4:28 pm

I don't notice how many benches there are in subways because they are either all empty or all full, depending on the time of day.

I've often wondered how people can listen to music (as opposed to treating it as white noise) and do verbal processing of any sort at the same time. I can't do it at all; there is something about listening to music, especially the more complex kinds like classical music, that "occupies" my verbal mind, though I don't hear notes as words or anything.

Richard Feynman once did a little study of who can mentally count while talking and who can't. He found that he could not, because he was saying the numbers to himself, whereas John Tukey could, because he was reading them off a visualized ticker-tape reeling off the numbers.

Rubrick said,

September 4, 2010 @ 6:08 pm

I feel psychology research is caught (perhaps has always been caught) in an unfortunate cycle: Someone performs an experiment with a level of rigor that would make a physicist cry laughing and draws unwarranted conclusions that sound socially important; the media distorts these conclusions further; other researchers, needing to secure grant money, design more such experiments.

I suspect that anyone who proposed an experiment that might really establish a fact — any fact — about the effects of media multitasking on task-switching performance would be laughed out of school. Such an experiment would, at a minimum, involve first monitoring (ideally secretly, but obviously there are ethics concerns there) the media-consuming habits of hundreds or thousands of participants of diverse demographics to determine their actual media multitasking habits. That's just to try to get sufficiently large pools of light and heavy multitaskers whose usage profiles are sufficiently similar to be worth testing. Expensive? Yes. Good science often is. The reward is that at the end you might, if you're lucky, get a result that's actually worth a damn.

I don't think anything can or should be concluded from the experiment described above beyond "Possible slight inverse correlation between self-reporting of heavy media multitasking and task-switching performance among Stanford psychology undergrads." Any generalization beyond that is unwarranted.

I dunno. So much of what I see reported in the field of psychology strikes me as barely qualifying as science. The emperor, while perhaps not completely naked, would certainly get kicked out of most respectable restaurants. I think the field would benefit enormously from admitting that it hardly knows anything (yet), dropping its "profound implications", and getting down to baby steps. Sure, science journalists will have to transition from wild misinterpretation to outright fabrication for their headline-grabbing stories, but I'm sure they can adjust.

Rubrick said,

September 4, 2010 @ 6:13 pm

Oh, and sorry about the knee.

[(myl) Thanks! But I think you're too hard on psychology as a field — in my opinion there's a lot of solid and interesting psychological science. Perhaps the biggest current weakness, in my opinion, is an occasional tendency to try to answer big questions (about sex differences, for example) on the basis of small samples of undergraduate psychology students. And you're right that doing such studies convincingly would require large-scale research that would be relatively expensive. But note that some work on the needed scale is already being done — by media research firms like Nielsen, by polling organizations, by pharmaceutical companies, and so on. So I don't think that the idea of funding psychological studies that use representative samples would be dismissed as foolish, even though it would certainly now be beyond NSF's normal budget for such things.

It seems likely to me that advances in networked digital hardware and software will make it possible before long to do very-large-scale experiments fairly cheaply, by in effect instrumenting the entire lives of a large number of people. Probably the biggest current barrier to doing this is establishing credible procedures to ensure privacy and confidentiality.]

Evan said,

September 4, 2010 @ 9:21 pm

"studies [of small groups of undergraduate psychology students] show that…"

doesn't quite have the same ring to it

Caelevin said,

September 4, 2010 @ 11:21 pm

I just want to shout in my single, highly subjective data point as regards music and work over the seventeen years of my career.

When programming (almost exclusively with Object-Oriented Programming languages*) the rule is:

When writing new code, music with lyrics is okay.

When debugging, I can only listen to music without lyrics, unless it is in a language I do not understand.

I mention the style of programming because I am pretty sure OOP programmers only have about 40% of our DNA in common with Procedural programmers.

groki said,

September 5, 2010 @ 5:23 pm

myl: very-large-scale experiments … Probably the biggest current barrier to doing this is establishing credible procedures to ensure privacy and confidentiality.

that barrier may collapse long before the procedures are established, though: people–or enough of them, anyway–may simply cease expecting/requiring privacy before participating in the very-large-scale experiments. (assuming, of course, that they are given the choice.)

after the Zuckerberg Facebook privacy kerfuffle earlier this year, PCWorld reported that Pew found evidence suggesting such a shift in attitude.

and it was all the way back in 1999 when Scott McNealy, CEO of Sun Microsystems, scolded: "You have zero privacy anyway."

Rubrick said,

September 5, 2010 @ 5:30 pm

[(myl) Thanks! But I think you're too hard on psychology as a field — in my opinion there's a lot of solid and interesting psychological science. Perhaps the biggest current weakness, in my opinion, is an occasional tendency to try to answer big questions (about sex differences, for example) on the basis of small samples of undergraduate psychology students.]

I'm sure you're right. I was going to insert a caveat about my not really knowing about 99+% of psych research, but I'd already spent long enough on my rant for someone like you to author an entire paper, so I decided to leave my concerns overblown.

[It seems likely to me that advances in networked digital hardware and software will make it possible before long to do very-large-scale experiments fairly cheaply, by in effect instrumenting the entire lives of a large number of people. Probably the biggest current barrier to doing this is establishing credible procedures to ensure privacy and confidentiality.]

While not exactly doing life-instrumentation (at least not yet), my friend Lauren Schmidt (former grad student under Lara Boroditsky) has founded the startup Headlamp Research, aimed specifically at making large-scale online studies easier, more effective, and more rigorous.

John Cowan said,

September 5, 2010 @ 7:16 pm

Caelevin: I've been both over the 30 years of my career: does that make me a hybrid?

Roger Lustig said,

September 5, 2010 @ 8:45 pm

@Cliff:

How've you been? I'm interested in the continuation and replication of these studies, not least because a friend has been volunteering for quite a few of the fMRI studies at the local university, some of which sound much like what you were after here.

And I'll be doubly interested in any music-plus-task studies you do. I can't do anything work-like when the radio's on. Driving, gardening, cooking–no problem, but working with words, images or symbols is right out. If I choose what's to be played it's even worse. Part of me wants to analyze the music–Haydn, Terry Riley, Alla Rakha or Kanye–and most of the rest wants to follow all the associations and memories and analogies that come up. Even boring music (the only bad music, as Rossini said) raises too many questions as it goes by.

Not knowing the literature, I can't say how much has been done on the actual effect of Muzak, radio, Walkman, iPod mixes, etc. But I suspect there are several types of listener, several styles of work-plus-listening interaction, several kinds of differences in the effect of music one likes vs. music one doesn't like.

Mind you, I carry earbuds and an mp3 player/phone, and occasionally listen to something on the train if I've planned it out. But more often than not, I put them in my ears and the cord in my pocket, and people leave me alone to work, work out or whatever.

I look forward to hearing that I'm a member of a very small minority, and that some music can enhance most people's work.

dirk alan said,

September 5, 2010 @ 9:18 pm

elephants are multituskers.

Nanani said,

September 5, 2010 @ 11:47 pm

Very glad to see it stated early in the comments that not all music is equal, cognitively speaking.

As someone whose only waking hours without music are involuntary so, I can't fathom how music counts as a "distraction". If it's a distraction, it must be the wrong music for the task.

For more anecdata:

I work as a translator, and any music with lyrics in a language other than the target language is OK (that is, any lyrics in the language I am writing in can break concentration); music without lyrics is preferred.

When I was a student, I found that nothing got my brain pumping for math and physics assignments better than heavy metal. Speed or Power for preference.

I even sleep with music on.

Waffles said,

September 6, 2010 @ 9:13 pm

One thing that strikes me is that Stanford students probably have different multitasking habits than other groups of people.

I don't study psychology at Stanford, but I have recently started studying animation at CalArts.

Before I started college, I did various menial assembly line jobs. These are jobs without much opportunity to multitask; you have to be able to hear what anybody else on the line says, so no headphones, and obviously you can't text or surf the web. And I have the feeling I didn't multitask much outside of work, because I didn't really have many responsibilities; if I wanted to goof off, I just did it.

Meanwhile, in college I have a huge amount of work, but almost no supervision. So pretty much whenever I animate I bring my laptop along to watch Hulu or listen to music. And college work takes up a gigantic chunk of my time; towards the end of the semester I do nothing but work and sleep, so ANY leisure activity during that time is multitasking.

Not that I have any hard evidence, but given the comment about Stanford students taking Ritalin and Adderall, I suspect a lot of them are in the same boat.

So I wonder if/how multitasking behaviors vary among different demographics.

D.O. said,

September 7, 2010 @ 3:07 am

Great post!

A small point: there is a 2/3 probability to end up within +/- 1 stdev for the normal distribution. So the number of plausibly available LMM/HMM subjects was 262/3 = 87.

As if lots of people would listen. Just look at drinking and smoking.

E said,

September 7, 2010 @ 12:45 pm

Further anecdata:

I find music distracting only if it's unfamiliar to me, or just familiar enough that I find myself trying to remember where I have heard it before. If the music is something I've heard many times before, I can drown it out– even to the point where I forget I'm listening to music, and momentarily wonder if the player has shut off.

What I find most distracting, though, is the spoken word– from radio, TV, or real people.