More on GPT-5 pseudo-text in graphics


In "Chain of thought hallucination?" (8/8/2025), I illustrated some of the weird text representations that GPT-5 creates when its response is an image rather than a text string. I now have its recommendation for avoiding such problems — which sometimes works, so you can try it…

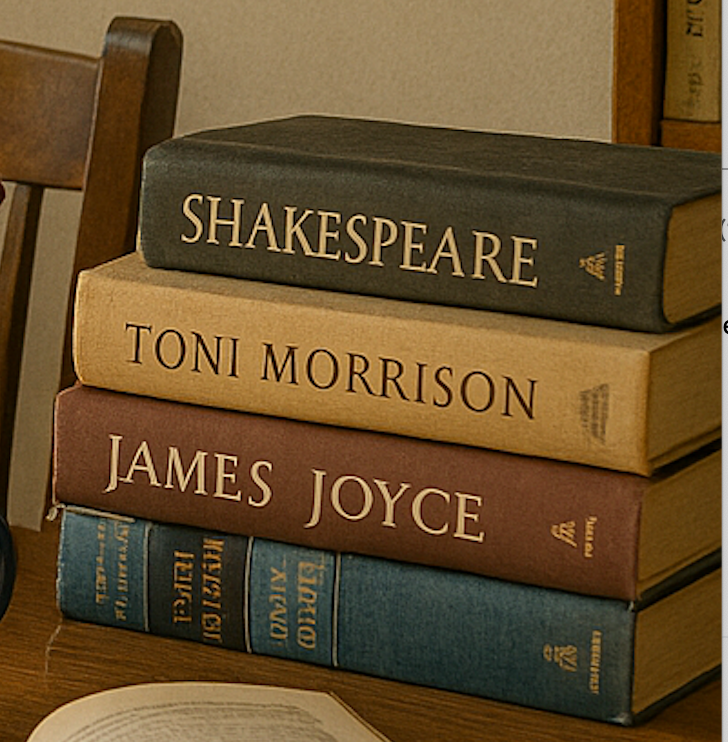

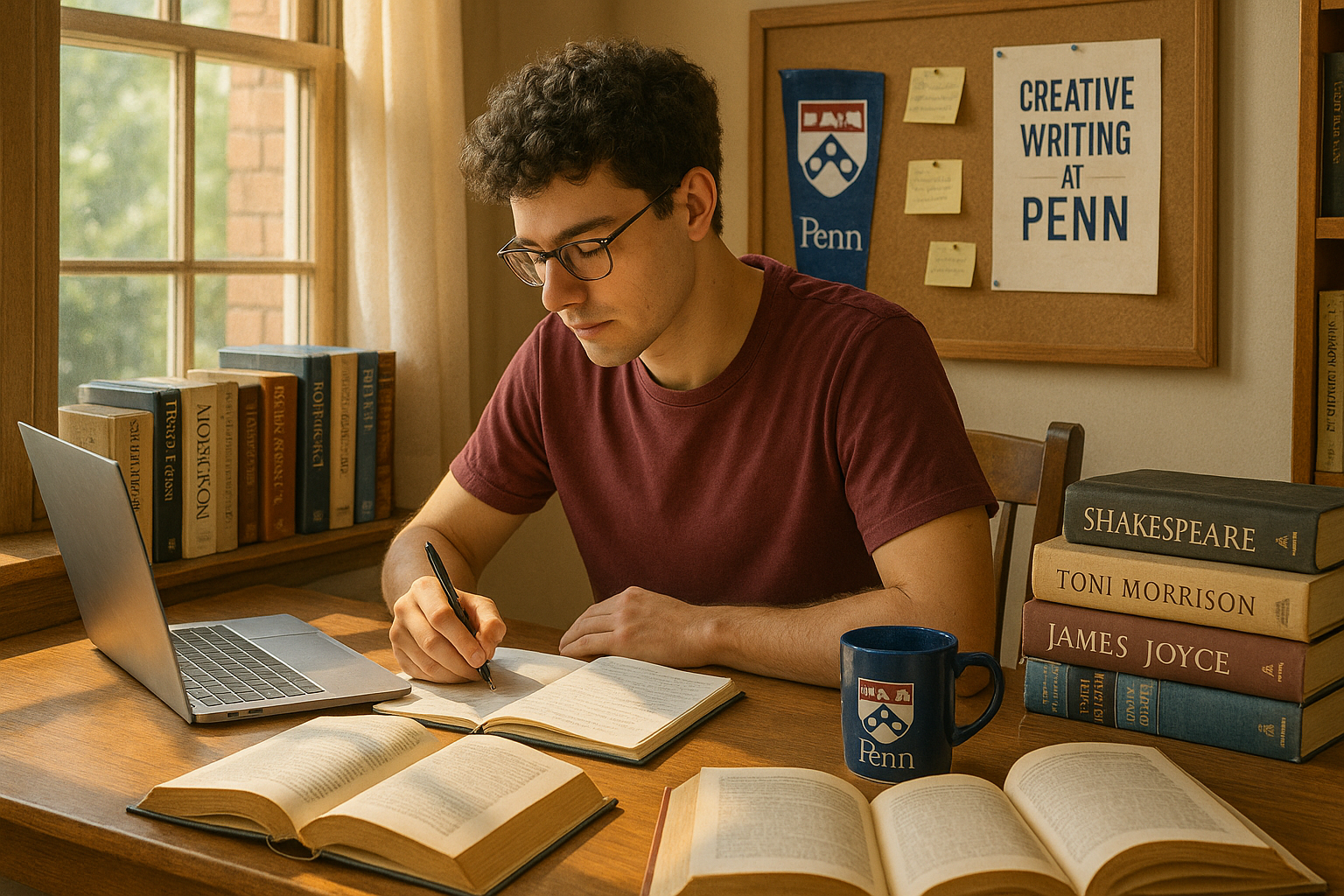

Yesterday I asked GPT-5 for an image to illustrate a planned presentation for first-year students about literary studies at Penn, and the impressive response included a stack of relevant books. GPT-5 chose the titles, and in fact the whole idea of the book stack was part of its response:

The blue book on the bottom of the stack is labelled in some alien non-alphabet, but still…

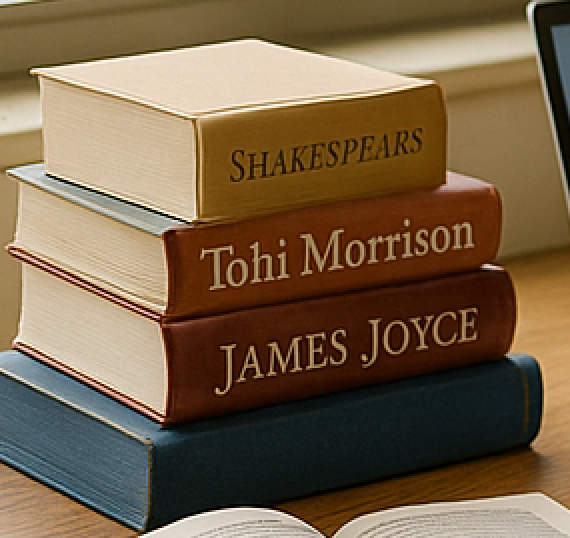

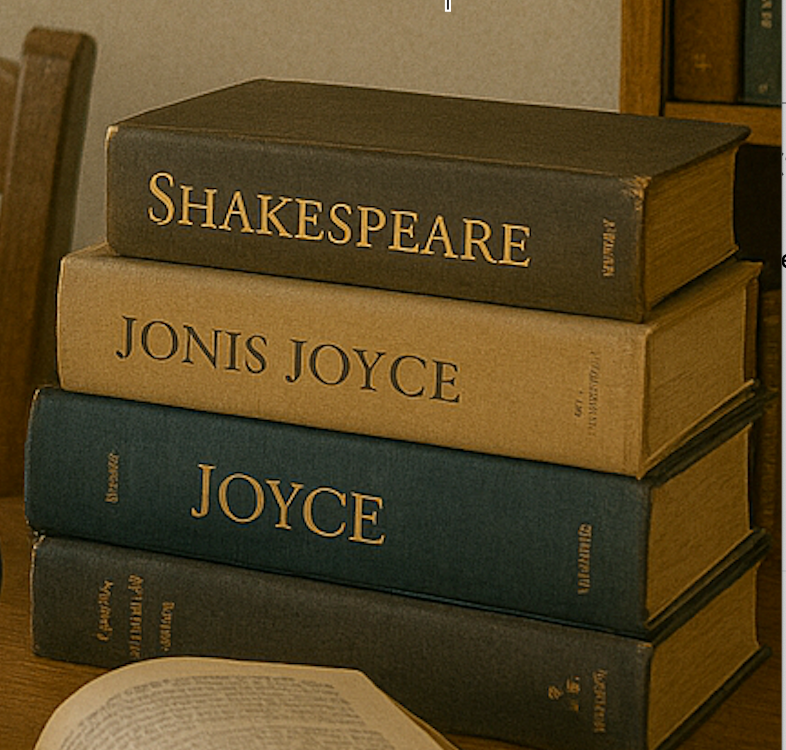

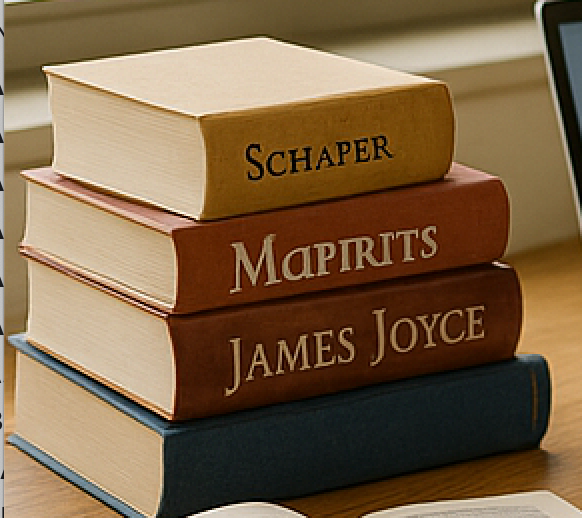

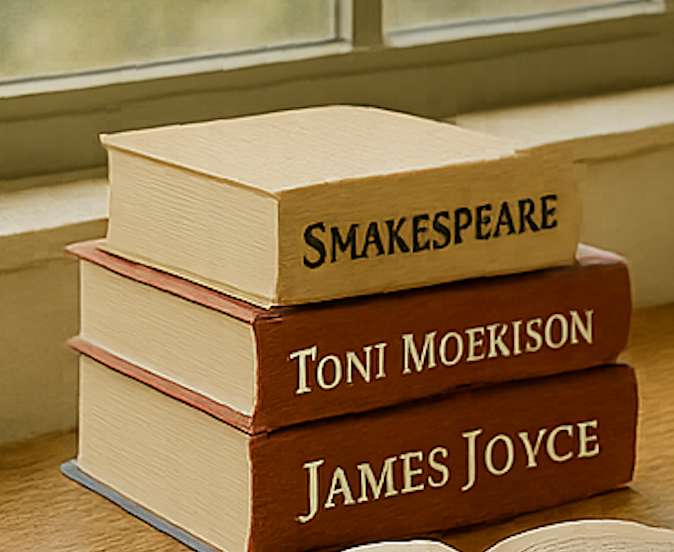

I asked for some changes to the picture, and GPT-5 generally did as I asked. But as our dialogue continued, the labelling of the book stack got weirder and weirder, despite my specific instructions to fix the oddities and restore the original titles:

When I complained somewhat more vividly, GPT-5 responded:

You’re absolutely right — thank you for pointing that out. The AI rendering of book spines often hallucinates text, which is why you’ve been getting “Jonis Joyce,” “Tohi Morrison,” “Maprits,” etc., instead of the clean, correct author names.

To get this right, we’ll need to constrain the design more tightly. A couple of options:

1. Minimal-text approach:

Instead of generating the book spines with full names, we can request only generic books with solid-colored spines — and then we can overlay real text afterward (I can generate a clean, high-resolution image of the names “Shakespeare,” “Toni Morrison,” and “James Joyce” in matching fonts for you to place on the spines).

2. Post-edit overlay:

I can generate the correct author names separately as text graphics, then position them digitally on top of the book stack in your chosen image. That way, you’ll have exact, error-free spines.

3. Typography lock-in:

I can tell the generator explicitly: “Use only the exact names ‘Shakespeare,’ ‘Toni Morrison,’ and ‘James Joyce’ on the spines, no variations” — but even then, the model sometimes slips. The most reliable solution is #2 (overlay).

Do you want me to try another direct fix with stricter prompts, or would you prefer I generate clean text overlays of the author names that you can apply to whichever image you like best?

I asked for the "Post-edit overlay". The first result (a male version) was pretty bad in terms of book-stack titles — "SMAKESPEARE" and "TONI MOEKISON":

I asked for a female version, without commenting on the errors — and the result was correct, at least in terms of book-stack titles:

There may be a magic prompt wording that will work more than half the time, but that's all I've got time for just now.

Laura Morland said,

August 24, 2025 @ 10:34 am

I've also experienced the frustration of GPT-generated images. The responses are so weird that the laughter dampens the frustration.

It was amazingly difficult for GPT to create an image of a group of five adults around a table. It would render 7, and on one occasion 10. One try produced five people, but removed all their legs! I finally ended up photoshopping a couple of the images.

If only GPT's results were as on target as its admissions of failure.

I'll try your workarounds next time.

nnn said,

August 24, 2025 @ 2:52 pm

What is the benefit of using GPT for this, with all the trial and error that apparently entails, rather than just pulling up a stock or public domain image of a stack of books, or just grabbing a quick photo of whatever stack of books you have immediately on hand?

AntC said,

August 24, 2025 @ 4:06 pm

I'd read the mashup of Janis Joplin/James Joyce.

Mark Liberman said,

August 24, 2025 @ 4:41 pm

@nnn "What is the benefit of using GPT for this, with all the trial and error that apparently entails, rather than just pulling up a stock or public domain image of a stack of books, or just grabbing a quick photo of whatever stack of books you have immediately on hand?"

The book stack is just a small part of the pictures — starting with the first one:

And the experimentation (including its failures) is interesting.

Rick Rubenstein said,

August 24, 2025 @ 6:36 pm

I can certainly see why this studious young man is focusing on those books rather than consulting the ones on his (discontinuous?) window shelf. :-)

In all seriousness, I think LLMs' frequent tendency to get worse at tasks as you provide feedback, rather than better, is a key indicator of the difference between their modes of "thought" and those of a human.

Julian said,

August 24, 2025 @ 6:49 pm

There's still something ineffably bland about these images, isn't there?

The handsome, clean-cut figures. The impossibly neat array of Post-it notes on the corkboard. The Mona Lisa smile…

Of course I'm being snooty with the wisdom of hindsight. I'm not claiming that if these images were cleaned up and mixed with real ones in a 'guess which is the AI' test, I would guess correctly.

DDeden said,

August 24, 2025 @ 7:16 pm

The near right corner has a very hard to publish book. It jarred me just seeing it.

Chester Draws said,

August 24, 2025 @ 8:26 pm

The bad logo for Penn is what gets me about AI.

- Instead of a standard shield shape, it has rounded ends for the chevron, destroying the smooth curve.

- The book-fish-book across the top is badly mangled to the point of incomprehensibility.

- The "Penn" is dodgily kerned.

How does it even get that wrong? It literally needs to copy what it "sees" on the internet, all of which are basically exactly the same. Not a single shield has the shape it gives.

The rest of the errors involve some sort of mash-up of concepts and pictures. But the logo is straight copying done weirdly.

JPL said,

August 24, 2025 @ 8:27 pm

Reminds me of those old "What's wrong with this picture?" puzzles.

AntC said,

August 25, 2025 @ 12:18 am

very hard to publish book

Yes at first sight, I assumed that was two open books piled on top of each other — which would at least be injecting a bit of messy reality. But no, it's one book with three leafs. (If that's even the way to describe it.) From what/where could it possibly have hallucinated such a thing?

(discontinuous?) window shelf.

The Mona Lisa smile…

Aha! The horizons on each side of La Gioconda's head are mismatched.

Mark Liberman said,

August 25, 2025 @ 8:01 am

@DDeden, AntC "a very hard to publish book", "one book with three leafs":

That's definitely weird — not a problem with graphical text, but with common-sense knowledge —

David Marjanović said,

August 25, 2025 @ 9:03 am

Evidently, the logo is too large to be a single token, so it cannot simply be copied. Same with the double-spined book: a whole open book is too large.

LLMs arrange tokens based on their probability of proximity to other specific tokens. For text, the tokens are somewhat larger than words, AFAIK. For images, are they pixels?

Brett Altschul said,

August 25, 2025 @ 12:17 pm

The minor detail that I found most jarring was that the Post-It notes are different sizes.