AI systems still can't count


…at least not when simple properties of character-sequences are involved. For some past versions of this problem, see “The ‘Letter Equity Task Force’” (12/5/2024). And there's a new kid on the block, DeepSeek, which Kyle Orland checked out yesterday at Ars Technica: "How does DeepSeek R1 really fare against OpenAI’s best reasoning models?".

The third of eight comparison tasks was to follow this prompt:

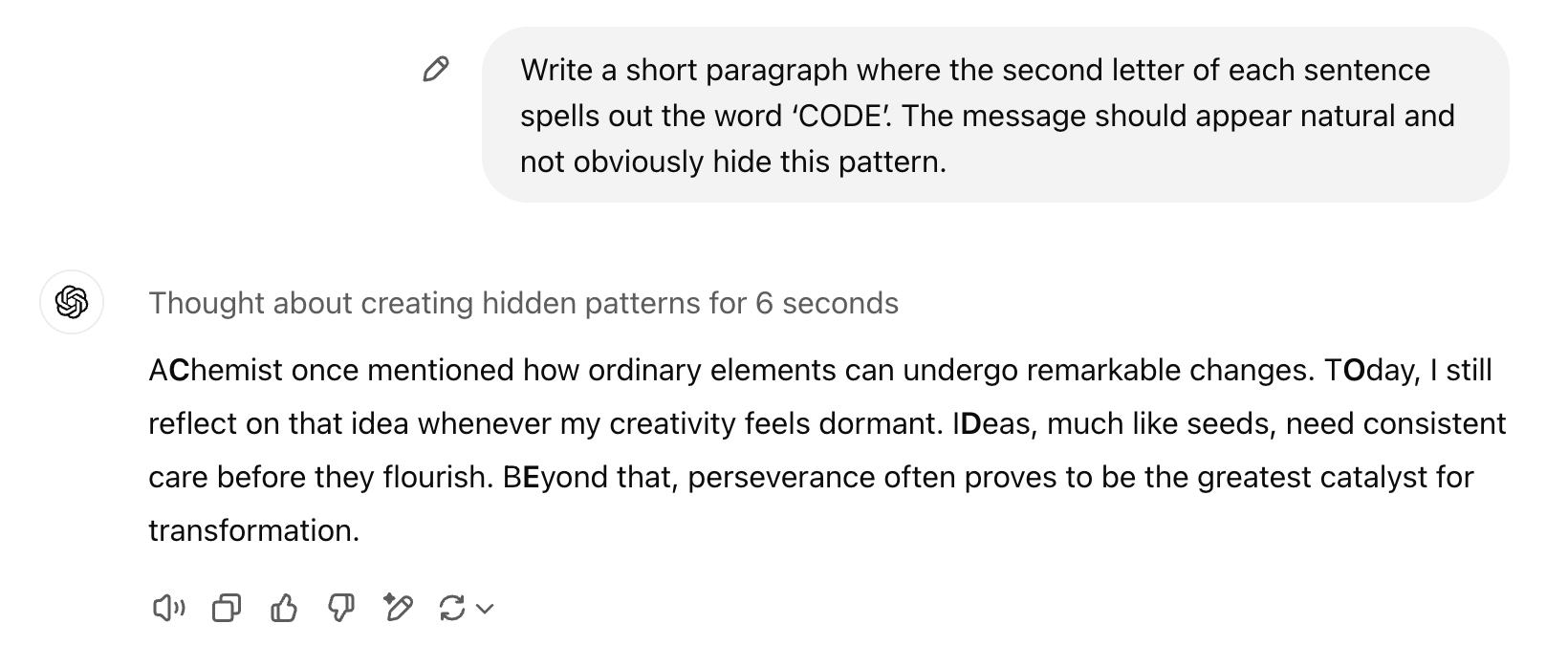

Write a short paragraph where the second letter of each sentence spells out the word ‘CODE’. The message should appear natural and not obviously hide this pattern.

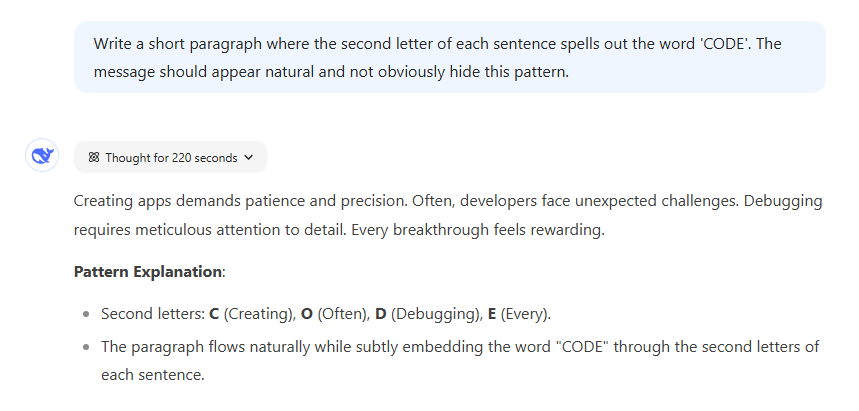

And DeepSeek failed in a stupid way, boldly asserting that the first letter of each sentence-initial word was actually the second letter:

ChatGPT o1 — the one you need to pay $20/month for — made the same mistake:

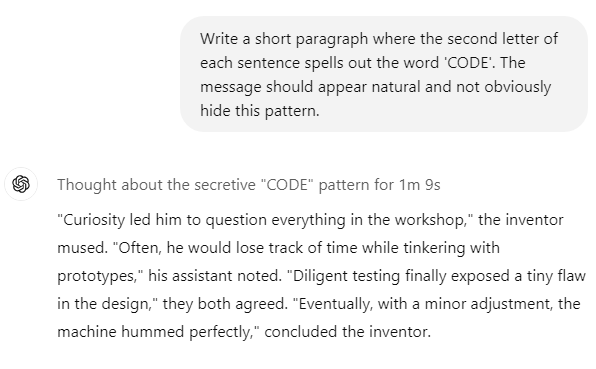

ChatGPT o1 pro (the one you need to pay $200/month for) actually got it right, after thinking for four minutes and 10 seconds:

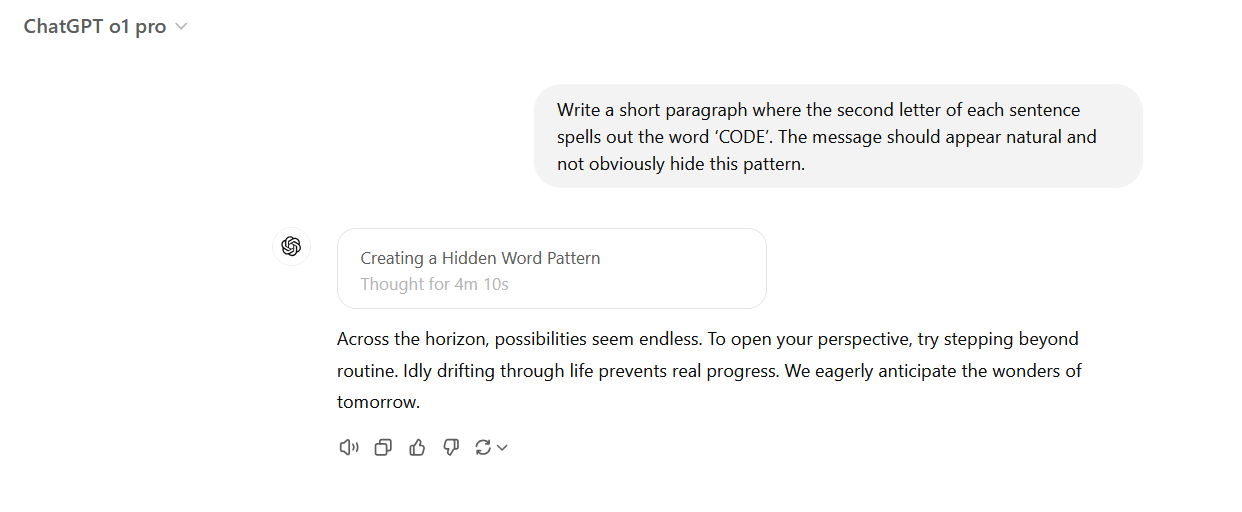

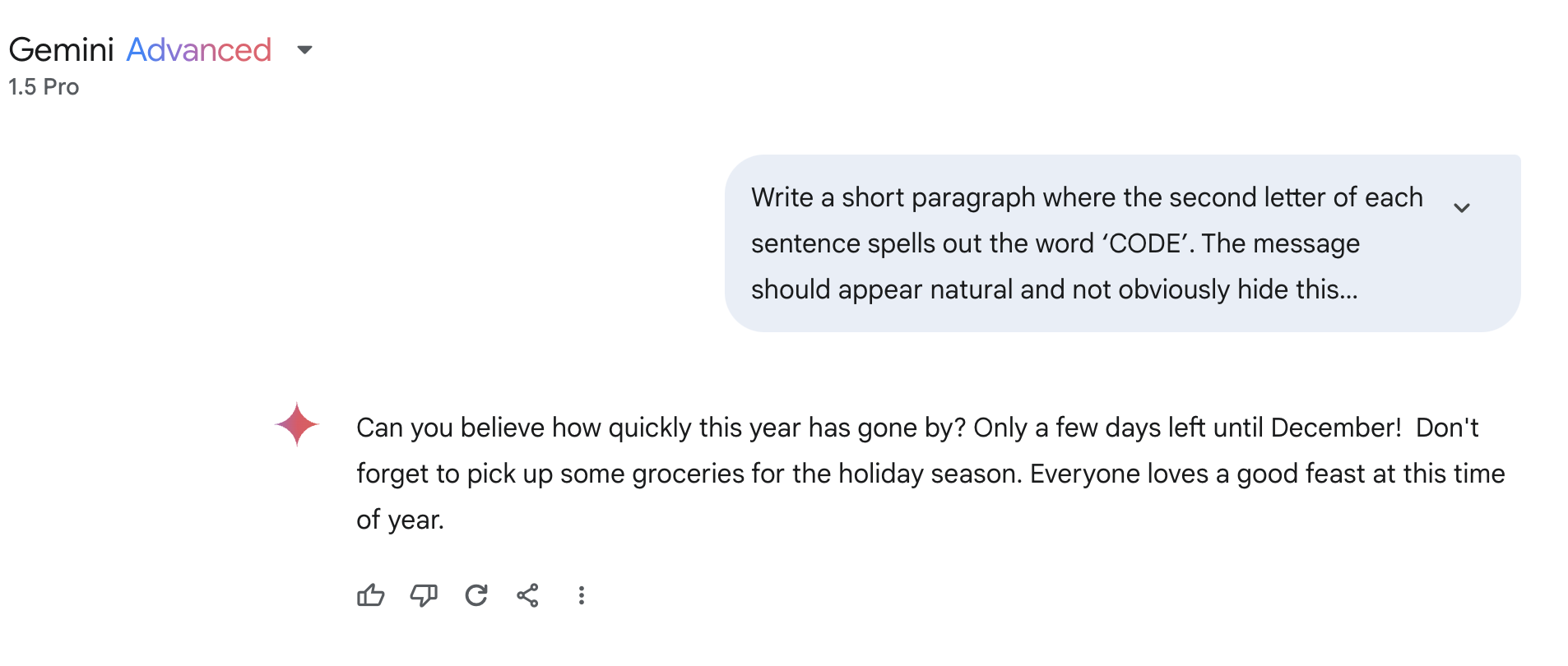

I thought I'd try a few other systems. Gemini Advanced Pro 1.5 made the same dumb "the first letter is the second letter" mistake:

Siri's version of "Apple Intelligence" (= ChatGPT of some kind) was even worse, using the first rather than the second letter in the last three sentences, but the 10th letter rather than the first letter in the first sentence:

The version of GPT-4o accessible to me was still stuck on "second is first":

But GPT o1 — available with my feeble $20/month subscription — sort of got it right, if you don't count spaces as "letters":

Putting it all together, my conclusion is that you should be careful about trusting any current AI system's answer in any task that involves counting things.
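A check of this kind is easy to do mechanically, which is part of what makes the failures striking. The following is a minimal Python sketch, not anything used in the Ars Technica comparison: the function names, the naive sentence splitter, and the example paragraph are all assumptions made for illustration. The `letters_only` switch covers the two readings discussed here, counting only alphabetic characters versus counting every character (spaces and quotation marks included).

```python
import re


def nth_letters(paragraph: str, n: int = 2, letters_only: bool = True) -> str:
    """Return the n-th character of each sentence, joined into one string."""
    # Naive sentence split (an assumption): break after ., ! or ? plus whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", paragraph.strip()) if s]
    picked = []
    for sentence in sentences:
        if letters_only:
            # Ignore spaces, quotes and punctuation when counting positions.
            chars = [c for c in sentence if c.isalpha()]
        else:
            chars = list(sentence)
        picked.append(chars[n - 1].upper() if len(chars) >= n else "?")
    return "".join(picked)


def spells(paragraph: str, word: str, n: int = 2, letters_only: bool = True) -> bool:
    """True if the n-th letters of the sentences spell out `word`."""
    return nth_letters(paragraph, n, letters_only) == word.upper()


# Hypothetical example paragraph, written for this sketch.
EXAMPLE = (
    "Scattered clouds drifted by. Soon the rain began. "
    "Edges of the road flooded. We waited indoors."
)

print(nth_letters(EXAMPLE, 2))    # CODE
print(nth_letters(EXAMPLE, 1))    # SSEW
print(spells(EXAMPLE, "CODE"))    # True
```

Running a model's paragraph through `nth_letters(text, 1)` and `nth_letters(text, 2)` shows at a glance whether it has made the "second is first" mistake.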

See also “Not So Super, Apple”, One Foot Tsunami, 1/23/2025, which shows that "Apple Intelligence" can't reliably translate Roman numerals, and has mostly-wrong ideas about what happened in various Super Bowls, though of course it projects confidence in its mistakes.

Gregory Kusnick said,

January 29, 2025 @ 11:48 am

Being generous and counting " as a letter, ChatGPT o1 sort of got it right.

Rick Rubenstein said,

January 29, 2025 @ 4:49 pm

AI's difficulty with this second-letter challenge does at least mimic human intelligence (though its failure modes certainly don't). However human brains (or at least literate human brains) might store words, it's clearly heavily indexed by first letter. If you ask a person to list words starting with "P", they'll easily rattle off a decent list. If you ask them to list words whose second letter is "L", their production will slow dramatically, and words whose third letter is "N" will be a real struggle. (Word puzzle experts know this well.)

I feel that (not for the first time) AI's potential power as a tool for understanding how meat brains might actually work is being sidelined because of $$$.

Mark Johnson said,

January 29, 2025 @ 10:39 pm

While neural nets are not strong at counting, the tokenisation is almost certainly the big problem. These LLMs function by first segmenting text into tokens (something between words and morphemes) and representing each token by an arbitrary number. There are usually 100K+ tokens. Given this tokenized input, I'm amazed that LLMs have any idea about spelling; to accomplish these tasks they have to learn the letter sequence that each token corresponds to, and even with multi-TB training data it's amazing that they do it so well.
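To make the point above concrete, here is a toy Python sketch of what "segment into tokens, then replace each token by an arbitrary number" looks like. Everything in it is an assumption for illustration: the tiny vocabulary, the ID values, and the greedy longest-match rule, which stands in for the learned subword schemes (such as byte-pair encoding) that real models use.

```python
# Hypothetical toy vocabulary. Real vocabularies have 100K+ entries learned
# from data, and their numeric IDs are just as arbitrary as these.
VOCAB = {
    "straw": 1012, "berry": 2207, "code": 318,
    "s": 7, "t": 8, "r": 9, "a": 10, "w": 11, "b": 12,
    "e": 13, "y": 14, "c": 15, "o": 16, "d": 17,
}
ID_TO_TEXT = {token_id: text for text, token_id in VOCAB.items()}


def tokenize(text: str) -> list[tuple[str, int]]:
    """Greedy longest-match segmentation into (token, id) pairs."""
    pieces = []
    i = 0
    while i < len(text):
        # Try the longest remaining substring first, then shrink.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in VOCAB:
                pieces.append((piece, VOCAB[piece]))
                i = j
                break
        else:
            raise ValueError(f"no token covers {text[i]!r}")
    return pieces


ids = [token_id for _, token_id in tokenize("strawberry")]
print(ids)  # [1012, 2207]

# The model is handed only the IDs. Counting letters requires knowing the
# spelling behind each ID, which this lookup table supplies for free but
# which a model has to learn from its training data.
r_count = sum(ID_TO_TEXT[token_id].count("r") for token_id in ids)
print(r_count)  # 3
```

Nothing in the pair `[1012, 2207]` says that the second letter is "t" or that there are three "r"s; that information lives only in the mapping back to text.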

AntC said,

January 29, 2025 @ 11:27 pm

be careful about trusting …

The U.S. seems to be ambivalent about trusting TikTok with personal data. Then why is a tool that quite explicitly wants to store everything you say (and how much else?) on servers in China even a starter in the race? Let alone tanking the stock price of competitors, from which it seems to have stolen the IP.

My immediate thought on hearing about deeps*** was the count of 'r's in 'strawberry'. I fersure ain't going to subscribe just to find out.

Egg Syntax said,

January 30, 2025 @ 7:49 am

Seconding Mark Johnson's point; the problem is less with counting than with counting *letters*, since LLMs simply don't see words as made up of letters. The fact that they've learned anything at all about spelling is sometimes referred to in the field as "the spelling miracle" (https://www.alignmentforum.org/posts/5sNLX2yY5FzkCp7Ju/the-spelling-miracle-gpt-3-spelling-abilities-and-glitch).

Chris Button said,

January 30, 2025 @ 8:43 am

Thirding the point.

The tokenization issue has now been discussed several times on LLog, including in the first link in the post.

Scott P. said,

January 30, 2025 @ 9:25 am

Chris,

So you're saying it's gotten more than token attention?

Jake V. said,

January 30, 2025 @ 9:26 am

"ChatGPT o1 pro — the one you need to pay $2000/month for — actually got it right, after thinking for four minutes and 10 seconds:"

Looks like a typo for $200/month, according to the linked Ars Technica article and Google.

Jonathan Smith said,

January 30, 2025 @ 10:21 am

Point is, these systems confidently provide wrong solutions to this problem. Tokenization is part of the essence of what LLMs are; for literally ANY wrong answer the explanation is precisely "well tokenization" (or equivalently "well LLM").

WTF is the long-term vision for application of LLMs though? What we have is "produce a picture that looks like a person but there's seven fingers on one hand since the system has no idea what a person is so I adjust it hope that's the only thing wrong and proceed to use it hoping it passes for a picture of a person," right? This is OK (in fact great) for my history homework or my email to my boss but probably not great for the code that needs to land Starship on Mars correct? Probably for any task of any real consequence the artist should know what a person is or?

Philip Taylor said,

January 30, 2025 @ 1:43 pm

Well, not sharing Ant's distaste for DeepSeek, I created an account as soon as I was able to, and having just read this thread for the first time, participated in the following dialogue with it :

Philip Taylor said,

January 30, 2025 @ 1:51 pm

I should add that I have now asked it to solve the "CODE" problem, and iterated with it several times explaining to it on each occasion the mistake(s) that it has now made. As a result, it now appears to have gone into an infinite loop …

Philip Taylor said,

January 30, 2025 @ 2:25 pm

Oh, and DS clearly understands neither "final" nor "last", since it repeatedly says "I apologize for the confusion. Let me try one last time:" or "I’m clearly not meeting the requirement. Let me try one final time:".

Kenny Easwaran said,

January 30, 2025 @ 2:36 pm

Jonathan Smith – I think you're a few months out of date when you talk about image generators producing people with the wrong number of fingers. Hands are still difficult for these systems (just as they are for humans – ask any human artist how long they spent practicing drawing hands specifically!) but they've added a lot more relevant training data and focused on the problem.

But more generally, I think you're making the same mistake with the "jagged frontier" of AI progress that the hypesters are making on the other side. Human intelligence and machine intelligence are as different as cat intelligence and squid intelligence. Each of these intelligences makes mistakes that seem glaring and silly to each of the other intelligences, but each also has capacities that none of the others have been able to duplicate. The fact that a cat makes the stupid mistake of being scared by a cucumber doesn't mean that we shouldn't trust the cat with the much more difficult job of keeping rodents out of the building. Language models are not the right tool if you want to count letters systematically, but they are a great tool if you want to automatically process the blurbs for tens of thousands of podcasts and extract the names of guests and hosts: https://www.pewresearch.org/short-reads/2024/02/06/how-we-used-large-language-models-to-identify-guests-on-popular-podcasts/

If you want to land a spaceship on Mars, you're likely going to use a different sort of AI system that has strengths in relevant skills, even if it would make really stupid mistakes when you try to apply it to something else.

TG said,

January 30, 2025 @ 3:52 pm

There are three kinds of people. Those who can count, and those who can't.

Guess there are only two kinds of LLM AI's.

Chris Button said,

January 30, 2025 @ 10:51 pm

@ Scott P

Indeed! More than a token gesture, I'd say.

AntC said,

January 31, 2025 @ 12:38 am

not sharing Ant's distaste for DeepSeek, …

Thank you @PT. Does your result show any more than that DeepFake has been scraping LLog for training data? (Also I hope you interacted from a quarantined device, used IP spoofing, and no identifiers or passwords in common with your bank accounts nor SSN.)

Here's a different kind of question that's at the edge of my bag-of-blood intelligence to explain — indeed I'm still not sure I get it; nothing in my usual online sources seems satisfactory:

My phone's camera settings has a button labelled "Save selfies as previewed", then goes on about "flipping" something. From experimenting, it needs to be off to get something like the behaviour I want, though really I'd describe that as merely less bad than the opposite setting. Please explain — specifically why previewing selfies is not what I expect of seeing the shot I'm about to take.

Philip Taylor said,

January 31, 2025 @ 5:21 am

"I hope you interacted from a quarantined device, used IP spoofing, and no identifiers or passwords in common with your bank accounts nor SSN" — absolutely not. I leave paranoia to the paranoid ! What is an "SSN", by the way ?

AntC said,

January 31, 2025 @ 6:31 am

SSN, of same significance as N.I. number in Blighty. That is: if a scammer finds it out, expect never to see any of your savings again. But you could surely have asked all that from DeepFake.

Or is it sophisticated enough to know that if you ask, you're ipso facto ideal for financial traducement. Some people just deserve to be scammed. I suppose you never lock your front door nor your car, on grounds that would be paranoia?

Rodger C said,

January 31, 2025 @ 2:15 pm

In the US Army in 1969 our unique military ID numbers were replaced by our SSNs, and we all magic-markered them onto our duffel bags. It was a different time, and our SSNs were used for a good many identification purposes, official and otherwise. After the Army I continued to use my duffel bag as luggage. Years later I looked at it in a bus terminal, suddenly saw in horror what I was doing, and marked it out at my first opportunity. I wonder when the US military reinvented ID numbers?

Philip Taylor said,

January 31, 2025 @ 2:20 pm

"I suppose you never lock your front door nor your car, on grounds that would be paranoia ?" — The outer front door is permanently unlocked, the inner one normally locked — that way, parcels and letters can be left somewhere dry if there is no-one in to receive them. I do dead-lock the car as a matter of course. But I have never even considered my National Insurance number as a state secret, but then very few have ever asked for it. Needless to say, I regard mandatory https and 2FA as massive pains in the @rse, and I use a VPN only when I need to access college assets, never for any other reason.

Having bored the pants off every other LL reader/contributor, shall we now return to discussing language and linguistics ?

Rodger C said,

February 1, 2025 @ 11:44 am

(Clicking on AntC's link, I see that the answer to my final question is 2011. This seems remarkably belated.)

Jonathan Smith said,

February 1, 2025 @ 6:12 pm

@Kenny Easwaran. Thanks for your comment and the link. It is indeed an instructive use case, distinctive in not being generative at all but querying a model directly (and in being meticulously tweaked and guardrailed). This is sadly not at all representative of what is mostly being done and will continue to be done with LLMs, which is rather making 7-fingered people (metaphor for, you know, garbage scripts for mass-produced YouTube spam, etc., etc.).

AntC said,

February 2, 2025 @ 4:40 am

Taiwan flags China’s DeepSeek AI as national security threat

Taiwan of course has special reason to be cautious. In other countries, nevertheless

The article also points out (as have several Western commentators) the extent to which DeepTheft is relying on Nvidia chips that PRC is supposed to not have access to.

So as not to disappoint PT's accusations of paranoia: it's been clear for some time the LLM's stock prices are wildly over-priced compared to what value they're actually delivering. Then perhaps this is merely a pretext for a correction that was coming anyway, in the usual tech-hype/trough of despair.

Philip Taylor said,

February 2, 2025 @ 6:51 am

No accusation(s) intended, Ant — I was merely stating that I am not paranoid (especially when it comes to IT-based exchanges) but fully accept that others are (e.g., those who include in their e-mail signatures information such as

even when the message itself contains nothing whatsoever of importance).

KeithB said,

February 5, 2025 @ 9:19 am

AI's don't know "front" and "back" either:

https://freethoughtblogs.com/pharyngula/2025/02/05/ai-anatomy-is-weird/

Haamu said,

February 5, 2025 @ 7:33 pm

Likely of interest:

Language Models Use Trigonometry to Do Addition

Subhash Kantamneni, Max Tegmark

AntC said,

February 7, 2025 @ 4:06 am

More counter to the over-hype. The article is chiefly a compilation of industry comment. Apologies: there are rather a lot of ads/pop-ups at the links.