AMI not AGI?


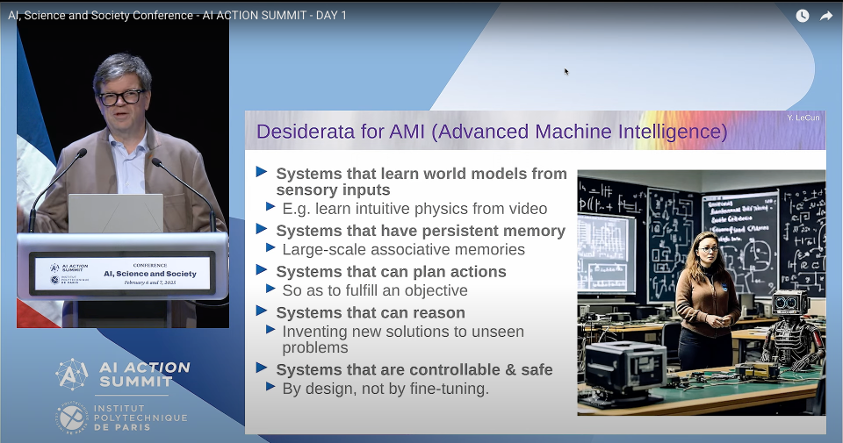

From Yann LeCun's presentation at the AI, Science and Society event in Paris last February:

We call this Advanced Machine Intelligence at Meta.

We don't like the term AGI, Artificial General Intelligence,

the reason being that

human intelligence is actually quite specialized,

and so calling it AGI is kind of a misnomer.

Um so we call this AMI

we actually pronounce it "ami"

which means "friend" in French.

Um so we need systems that um learn world models

from sensory input,

basically mental models of how the world works

so that you can manipulate

in your mind,

learn intuitive physics um from video let's say

systems that have consistent memory

systems that can plan actions

uh possibly hierarchically

so as to fulfill an objective

and systems that can reason.

Um and then systems that are controllable and safe

by design

not by fine-tuning which is the- the case for LLMs.

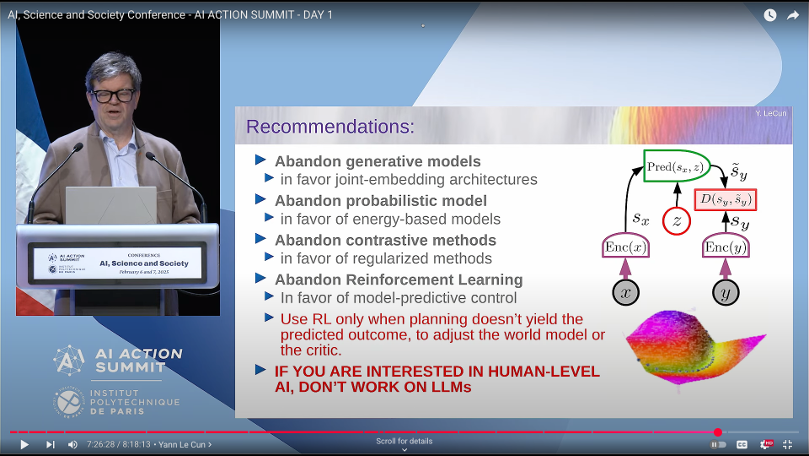

LeCun's whole 45-minute Paris presentation is worth watching, a few of the usual annoying tech issues with slide projection aside. Near the end of the talk, he makes these recommendations to researchers:

He emphasizes the reasons for his "Don't Work on LLMs" advice in his May 2025 interview with Alex Kantrowitz, which starts this way:

We are not going to get to human-level AI

by just scaling up LLMs.

This is just not going to happen.

OK?

LeCun is well known for arguing that LLMs can't reach cat-level intelligence. There's a sketch of what he thinks is the right approach in the body of the Paris talk, or in the essentially identical April 2025 talk at the Flatiron Institute in New York.

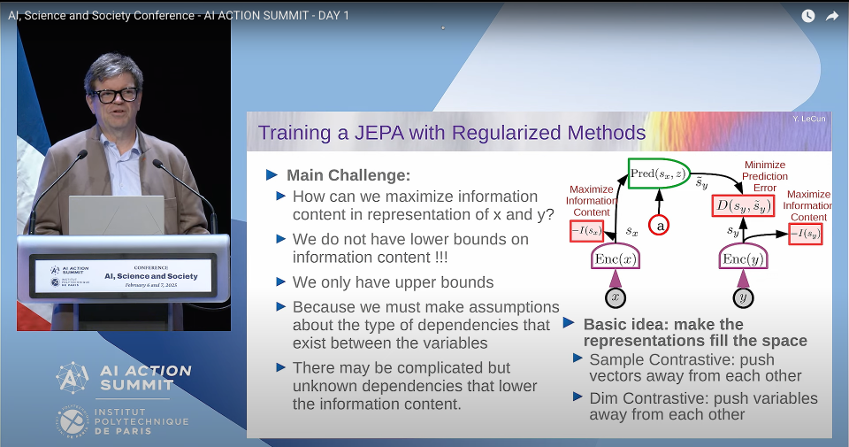

His proposal is a particular version of "SSL" = "Self-Supervised Learning". There's a more technical example of the proposed approach in the June 2025 paper "V-JEPA 2: Self-supervised video models enable understanding, prediction and planning". The "V" part is short for "video"; "JEPA" means "joint embedding predictive architecture", and "2" means it's their second try.
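For readers who want the "joint embedding predictive" idea in concrete form, here is a minimal toy sketch, not the V-JEPA 2 implementation. It assumes linear encoders with a tanh nonlinearity, a linear predictor, and an L1 distance between embeddings. The names `encode`, `jepa_loss`, `W_ctx`, `W_tgt` and `W_pred` are all invented for illustration. The point it tries to show is only that the prediction error is measured between embeddings rather than between raw inputs such as pixels.

```python
import numpy as np

def encode(x, W):
    """Toy encoder (an assumption): a linear map followed by tanh."""
    return np.tanh(x @ W)

def jepa_loss(context, target, W_ctx, W_tgt, W_pred):
    """Predict the target's embedding from the context's embedding.

    The error is computed in embedding space, not in input space.
    """
    s_ctx = encode(context, W_ctx)   # embedding of the visible part
    s_tgt = encode(target, W_tgt)    # embedding of the part to be predicted
    s_hat = s_ctx @ W_pred           # predicted target embedding
    return float(np.mean(np.abs(s_hat - s_tgt)))  # L1 distance (illustrative choice)

rng = np.random.default_rng(0)
d_in, d_emb = 16, 4
W_ctx = rng.normal(size=(d_in, d_emb))
W_tgt = W_ctx.copy()                 # same weights for both encoders in this toy
W_pred = np.eye(d_emb)               # identity predictor

x = rng.normal(size=(8, d_in))
y = rng.normal(size=(8, d_in))

loss_same = jepa_loss(x, x, W_ctx, W_tgt, W_pred)  # target equals context
loss_diff = jepa_loss(x, y, W_ctx, W_tgt, W_pred)  # unrelated target
print(loss_same, loss_diff)
```

With identical encoders and an identity predictor, a target equal to the context is predicted perfectly, while an unrelated target is not. A real system would train the encoder and predictor, and would need some mechanism to keep the embeddings from collapsing to a constant; none of that is shown here.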

LeCun explains the JEPA idea at length in the Paris talk, starting with this:

The V-JEPA 2 paper's abstract:

A major challenge for modern AI is to learn to understand the world and learn to act largely by observation. This paper explores a self-supervised approach that combines internet-scale video data with a small amount of interaction data (robot trajectories), to develop models capable of understanding, predicting, and planning in the physical world. We first pre-train an action-free joint-embedding-predictive architecture, V-JEPA 2, on a video and image dataset comprising over 1 million hours of internet video. V-JEPA 2 achieves strong performance on motion understanding (77.3 top-1 accuracy on Something-Something v2) and state-of-the-art performance on human action anticipation (39.7 recall-at-5 on Epic-Kitchens-100) surpassing previous task-specific models. Additionally, after aligning V-JEPA 2 with a large language model, we demonstrate state-of-the-art performance on multiple video question-answering tasks at the 8 billion parameter scale (e.g., 84.0 on PerceptionTest, 76.9 on TempCompass). Finally, we show how self-supervised learning can be applied to robotic planning tasks by post-training a latent action-conditioned world model, V-JEPA 2-AC, using less than 62 hours of unlabeled robot videos from the Droid dataset. We deploy V-JEPA 2-AC zero-shot on Franka arms in two different labs and enable picking and placing of objects using planning with image goals. Notably, this is achieved without collecting any data from the robots in these environments, and without any task-specific training or reward. This work demonstrates how self-supervised learning from web-scale data and a small amount of robot interaction data can yield a world model capable of planning in the physical world.
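The abstract's "planning with image goals" can be illustrated with a toy sketch, under heavy assumptions. Here the "world model" is a made-up function `predict_next` that just adds the action to a two-dimensional latent state, and the planner is plain random shooting: sample many action sequences, roll each one forward in latent space, and keep the sequence whose predicted final state is closest to the goal embedding. None of these names or choices come from the paper, which uses a learned action-conditioned predictor.

```python
import numpy as np

def predict_next(z, a):
    """Hypothetical stand-in for a learned action-conditioned predictor."""
    return z + a

def plan(z0, z_goal, horizon=3, n_samples=256, rng=None):
    """Random-shooting planner in latent space (illustrative only)."""
    if rng is None:
        rng = np.random.default_rng(0)
    # Candidate action sequences: (n_samples, horizon, latent_dim)
    actions = rng.uniform(-1.0, 1.0, size=(n_samples, horizon, z0.shape[0]))
    z = np.repeat(z0[None, :], n_samples, axis=0)
    for t in range(horizon):
        z = predict_next(z, actions[:, t, :])   # roll the model forward
    cost = np.abs(z - z_goal).sum(axis=1)       # L1 distance to the goal embedding
    best = int(np.argmin(cost))
    return actions[best], float(cost[best])

z0 = np.zeros(2)                    # embedding of the current observation (toy)
z_goal = np.array([1.5, -0.5])      # embedding of the goal image (toy)
best_actions, best_cost = plan(z0, z_goal)
start_cost = float(np.abs(z0 - z_goal).sum())
print(best_actions.shape, best_cost, start_cost)
```

In a receding-horizon setup one would execute only the first action of `best_actions`, observe the result, and plan again. Note that no reward function appears anywhere: the only signal is the distance between the predicted and goal embeddings.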

Kenny Easwaran said,

August 2, 2025 @ 1:08 pm

I find it notable that the teams at OpenAI and Google/DeepMind are very committed to the concept of AGI and have it as their target, while the teams at Anthropic and Meta don't believe that AGI is meaningful (with a further disagreement that the team at Meta doesn't even believe that current directions of development will lead to intelligences comparably powerful to humans, despite being different).

Viseguy said,

August 2, 2025 @ 4:44 pm

It's obvious when you spell it out, but AI that's "safe [b]y design, not by fine-tuning" should be a lodestar for all future research, LLM-based or otherwise. (Though maybe the train has left the station as far as LLMs are concerned.) I'm not steeped in the history of AI, but it seems to me that things went fundamentally wrong early on, when the quest was defined as mimicking (ELIZA-like), then equaling and surpassing, human intelligence, rather than developing systems of machine learning that complement that intelligence without purporting to compete with it. If this is what LeCun is getting at with his AMI — and I'm not qualified to say whether it is — then his theories are amiable indeed.