The effect of AI tools on coding


Joel Becker et al., "Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity", METR 7/10/2025:

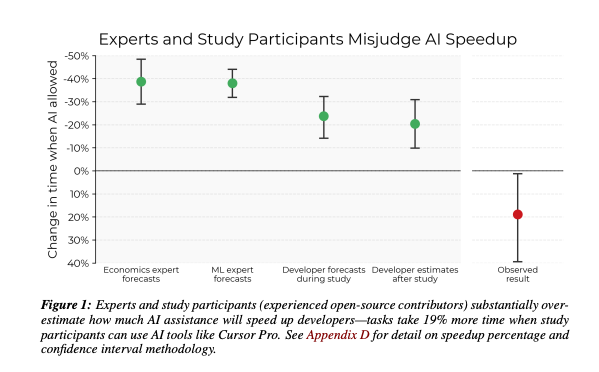

Despite widespread adoption, the impact of AI tools on software development in the wild remains understudied. We conduct a randomized controlled trial (RCT) to understand how AI tools at the February–June 2025 frontier affect the productivity of experienced open-source developers. 16 developers with moderate AI experience complete 246 tasks in mature projects on which they have an average of 5 years of prior experience. Each task is randomly assigned to allow or disallow usage of early-2025 AI tools. When AI tools are allowed, developers primarily use Cursor Pro, a popular code editor, and Claude 3.5/3.7 Sonnet. Before starting tasks, developers forecast that allowing AI will reduce completion time by 24%. After completing the study, developers estimate that allowing AI reduced completion time by 20%. Surprisingly, we find that allowing AI actually increases completion time by 19%—AI tooling slowed developers down. This slowdown also contradicts predictions from experts in economics (39% shorter) and ML (38% shorter). To understand this result, we collect and evaluate evidence for 20 properties of our setting that a priori could contribute to the observed slowdown effect—for example, the size and quality standards of projects, or prior developer experience with AI tooling. Although the influence of experimental artifacts cannot be entirely ruled out, the robustness of the slowdown effect across our analyses suggests it is unlikely to primarily be a function of our experimental design.
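To make the abstract's percentages concrete, here is a minimal sketch that applies them to a single hypothetical task. The 100-minute baseline and the names `time_with_change` and `BASELINE_MINUTES` are assumptions for illustration only; the study reports relative changes in completion time, not these absolute figures.

```python
def time_with_change(baseline_minutes: float, percent_change: float) -> float:
    """Return the completion time after a relative change.

    A negative percent_change means the task gets faster,
    a positive one means it gets slower.
    """
    return baseline_minutes * (1 + percent_change / 100)


# Hypothetical task that takes 100 minutes without AI (an assumption).
BASELINE_MINUTES = 100.0

forecast = time_with_change(BASELINE_MINUTES, -24)   # developers' forecast
perceived = time_with_change(BASELINE_MINUTES, -20)  # developers' estimate afterwards
measured = time_with_change(BASELINE_MINUTES, 19)    # measured effect in the study

# How much longer the task took than the developers believed it did.
perception_gap = measured / perceived

if __name__ == "__main__":
    print(f"forecast:  {forecast:.1f} min")
    print(f"perceived: {perceived:.1f} min")
    print(f"measured:  {measured:.1f} min")
    print(f"measured / perceived: {perception_gap:.2f}x")
```

On these assumed numbers, the task the developers remember as an 80-minute job would actually have taken about 119 minutes, so the perceived and measured times differ by nearly a factor of 1.5.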

(See also this version…)

A graph of their results:

This Swedish thesis confirmed those survey results, but did not test actual development time; the METR results show that users' opinions about productivity are by no means always accurate. Of course those METR results were based on Claude 3.5/3.7 Sonnet, and Claude 4 might be different. Or might not be. And maybe making coders feel good is worth a 19% increase in completion time…

(Here's someone who's really enthusiastic about using Claude Code — I assume the latest version — but again, it's opinion and not productivity measurement.)

Articles like "Generative AI is Turning Publishing Into a Swamp of Slop" (7/10/2025) suggest that LLMs are enhancing "productivity" in certain corners of the publishing industry. So it would be interesting to understand (beyond the obvious reasons) why coding is different, and what the implications are for other applications.

The METR discussion includes some attempts to "very roughly gesture at some salient important differences", which would apply in other fields. My own concern, based on considerable experience, is that the motives of the administrators (and consultants) responsible for tool choice are pretty clearly not always aligned with productivity improvements. Or user satisfaction, for that matter…

Ron Stieger said,

July 13, 2025 @ 8:23 am

I don’t think coding is different from publishing in this regard. The key to this result is “experienced developers”. There have been other results that AI does increase productivity for junior developers. But the senior developers know how to recognize slop and won’t accept it, so they end up with more iterations. That’s certainly been my experience: at times it’s an incredible shortcut but other times it goes down a rabbit hole of wrongness, so overall a wash at best. I would expect a similar result from serious writers – it would slow them down even though it accelerates the production of crappy writing.

A more interesting question perhaps: why were expectations/perceptions so far off from the measured reality?

AntC said,

July 13, 2025 @ 4:36 pm

why were expectations/perceptions so far off from the measured reality?

(Long-term programmer here; and manager of programming teams; and tester, and implementor/customiser of developed systems.)

Programmers have always wildly under-estimated the time to develop a fully functional system; whatever media for producing the program (punched cards, paper tape, teletype editor, fancy WYSIWYG, VS Code, …, AI slop); whatever programming language/paradigm.

What new-fangled Rapid Application Development methodologies produce gets you quicker to a deceptively performing system that maybe interacts nicely with the user — IOW to the same level as AI slop. The devil is in the detail of business rules and unusual/unanticipated conditions. To the point that measuring 'programming' time is meaningless: the detail all comes out during 'testing' time or 'user acceptance'.

So these metrics should be measuring total programmer effort including so-called "re-work" when what's delivered the first time round doesn't support the business adequately. IOW when specifiers and programmers haven't done their job properly.

Mark Liberman said,

July 13, 2025 @ 4:42 pm

@AntC: "Programmers have always wildly under-estimated the time to develop a fully functional system"

When I was at Bell Labs in the 1970s and 1980s, the joke (mainly among the Unix programmers) was that the way to estimate time to completion was to take your best guess, double it, and raise to the next higher time unit.

So "two hours" -> "four days"; "one day" -> "two weeks"; etc.
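For anyone who wants to apply the rule mechanically, here is a minimal Python sketch. The ladder of units in `UNITS` and the function name `bell_labs_estimate` are assumptions made for this example; the joke itself does not say which units count as "next higher".

```python
# Assumed ladder of time units, smallest to largest (not part of the original joke).
UNITS = ["second", "minute", "hour", "day", "week", "month", "year", "decade"]


def bell_labs_estimate(amount: int, unit: str) -> tuple[int, str]:
    """Double the best guess and move it up to the next higher time unit."""
    index = UNITS.index(unit)  # raises ValueError for an unknown unit
    if index + 1 >= len(UNITS):
        raise ValueError(f"no unit higher than {unit!r} in the assumed ladder")
    return 2 * amount, UNITS[index + 1]


if __name__ == "__main__":
    for guess in [(2, "hour"), (1, "day")]:
        amount, unit = bell_labs_estimate(*guess)
        print(f"{guess[0]} {guess[1]}(s) -> {amount} {unit}(s)")
```

With this ladder, a best guess of 2 hours comes out as 4 days and 1 day comes out as 2 weeks, matching the two examples above.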

Rick Rubenstein said,

July 14, 2025 @ 3:52 am

I have to admit I've been surprised that the ceiling for generative AI so far has turned out to be somewhere at the top edge of "hack" level. Until recently my hunch was that Doug Hofstadter was essentially right: the hard part was getting computers to match the level of not-especially-clever people; getting them from there to Mozart/Einstein/Shakespeare would just be a matter of degree.

But no, AI is proving more than capable of generating not-actually-good-but-not-laughably-bad output in all sorts of fields. We detect AI by its "slopness", not by its incompetence. It seems clear to me that there's little future for human hack illustrators, hack novelists, hack programmers, hack songwriters. AI is perfectly suited to creating the 90% part of Sturgeon's Law. But thus far I haven't seen anything that looks like it's cracked that top 10% — and tellingly, I don't really hear genAI's hypsters claiming it either.

Richard Hershberger said,

July 14, 2025 @ 4:28 am

@Rick Rubenstein: I, on the other hand, am not in the least surprised that AI tops out at mediocrity. Given the training method, of throwing vast amounts of data into the hopper, how could it be otherwise? That input is the actual definition of mediocre. I am not qualified to judge coding output, but AI generated text is consistently grammatically correct but utterly uninspired. I think we were impressed by it because bad writers have trouble with basic grammar, and even good writers let errors slip through. So yes, this is an aspect of writing that humans struggle with and which AI does well. But when we talk about good, bad, or indifferent writing, grammar isn't really what we are talking about.

Philip Taylor said,

July 14, 2025 @ 5:30 am

« When I was at Bell Labs in the 1970s and 1980s, the joke (mainly among the Unix programmers) was that the way to estimate time to completion was to take your best guess, double it, and raise to the next higher time unit. So "two hours" -> "four days"; "one day" -> "two weeks"; etc. » — remarkably similar to (Don) Knuth’s rule-of-thumb, which differs only in adding one rather than doubling. So "two hours" -> "three days"; "one day" -> "two weeks", etc. But maybe not "remarkably", since he would have formulated his rule at around the same period, and may well have interacted with the denizens of Bell Labs.

But as regards AntC’s "programmers have always wildly under-estimated the time to develop a fully functional system", I would qualify that with a leading "Some". I regularly infuriated my manager by telling him "when it’s finished !" if he asked when I expected a particular project to be ready for use.

bks said,

July 14, 2025 @ 7:01 am

We programmers learned long ago that management had learned aphorisms such as those offered by Mark L. and Philip T., and thus had to reduce our honest and highly accurate estimates by an appropriate factor.

Brett said,

July 14, 2025 @ 4:43 pm

"Double it and change to the next higher unit" was a pretty well-known gag by the 1980s. It appeared in one of the short Murphy's Law books around that time. More recently (but still more than nine years ago) xkcd parodied it further.

Chas Belov said,

July 15, 2025 @ 8:06 pm

@AntC:

My experience was the opposite. Former COBOL programmer here for financial applications.

I would come in with time estimates I believed were accurate. Management would blanch and tell me to reduce the time significantly. I did as told. The project would wind up taking about what I estimated originally.

Link love | Grumpy Rumblings (of the formerly untenured) said,

July 19, 2025 @ 2:05 am

[…] This article has been going around the internet— basically a guy did an RCT (a real one, not like the fake one that that MIT econ grad student made up) where they randomized AI tools for software developers. They found that developers thought AI made them more productive in terms of completion time (which is much better than "lines of code" as a measure that some of these studies use(!)), but it actually made them less productive, and more experience meant a worse hit to productivity. I feel like I am spending all my coding time these days adding unit tests and debugging AI code that RAs and coauthors have made. […]