The "Letter Equity Task Force"


Previous LLOG coverage: "AI on Rs in 'strawberry'", 8/28/2024; "'The cosmic jam from whence it came'", 9/26/2024.

Current satire: Alberto Romero, "Report: OpenAI Spends Millions a Year Miscounting the R’s in ‘Strawberry’", Medium 11/22/2024.

OpenAI, the most talked-about tech start-up of the decade, convened an emergency company-wide meeting Tuesday to address what executives are calling “the single greatest existential challenge facing artificial intelligence today”: Why can’t their models count the R’s in strawberry?

The controversy began shortly after the release of GPT-4, on March 2023, when users on Reddit and Twitter discovered the model’s inability to count the R’s in strawberry. The responses varied from inaccurate guesses to cryptic replies like, “More R’s than you can handle.” In one particularly unhinged moment, the chatbot signed off with, “Call me Sydney. That’s all you need to know.”

“I kept trying to count the R’s and it just wouldn’t do it,” said one user in a 17-post thread that went viral on Bluesky. “So I made it count other letters — T’s, B’s, you name it. No chance. Then it hit me: this thing is eating my letters. Letters today, kids tomorrow. Do we want that risk? It’s dangerous. It’s discriminatory. It’s terrifying. We want our children to live, don’t we?!”

At OpenAI headquarters, CEO Sam Altman struck a serious tone at the meeting, describing the R-counting debacle as a “crisis of faith” for the AI community. “I also think it’s a stupid question,” Altman admitted. “There are three R’s. I counted them this morning. But our users keep asking, and we are here to serve their revealed preferences. Can we please stop trying to make these things reason and teach them some basic arithmetic?”

Sources inside OpenAI say the company has already allocated significant resources to the issue, including a newly formed independent Letter Equity Task Force (LETF), led by top researchers who previously trained autonomous vehicles to not discriminate between red and green traffic lights. “This is bigger than ChatGPT. Bigger than AlphaFold. This is about trust,” said one LETF member. “Because if we can’t count R’s in strawberry, what’s next? Misidentifying bananas? Calling tomatoes a vegetable?”

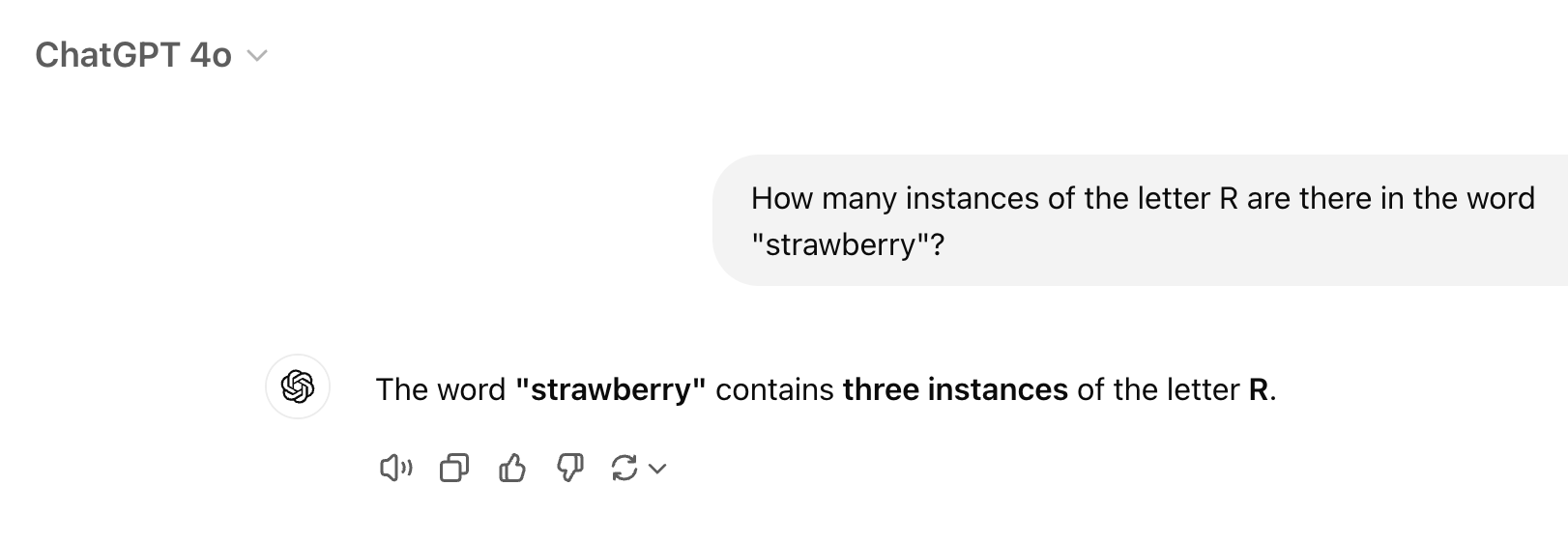

The (fictional) Letter Equity Task Force has done its job on strawberry, as of this morning:

However, ChatGPT4o still has some letter-counting issues. I asked it for the number of instances of the letter 'e' in the first sentence of the Declaration of Independence, and it started by giving me word-by-word counts (though oddly leaving out some words). 3 of the first ten word-by-word counts are wrong (with 6 words omitted up to that point):

- When: 1

- the: 1

- Course: 1

- events: 2

- becomes: 3

- necessary: 1

- one: 1

- people: 2

- dissolve: 2

- the: 1

And there are plenty of other counting errors later in the list, e.g.

- connected: 3

- powers: 0

- separate: 3

- Nature: 2

- Nature's: 2

- causes: 2

- separation: 2
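These errors are easy to confirm mechanically. The sketch below is a hypothetical check, not the program used for the counts in this post. It copies ChatGPT's reported word-level counts from the two lists into a dictionary and compares each one with a plain case-insensitive count. Ten of the listed entries come out wrong.

```python
# Word-level 'e' counts as reported by ChatGPT, copied from the lists above.
# ("the" appears twice in the first list; both entries say 1, so one key suffices.)
reported = {
    "When": 1, "the": 1, "Course": 1, "events": 2, "becomes": 3,
    "necessary": 1, "one": 1, "people": 2, "dissolve": 2,
    "connected": 3, "powers": 0, "separate": 3, "Nature": 2,
    "Nature's": 2, "causes": 2, "separation": 2,
}


def count_letter(word: str, letter: str = "e") -> int:
    """Case-insensitive count of one letter in a word."""
    return word.lower().count(letter.lower())


# Keep (word, reported, actual) for every entry where the two disagree.
mismatches = [
    (word, claimed, count_letter(word))
    for word, claimed in reported.items()
    if claimed != count_letter(word)
]

for word, claimed, actual in mismatches:
    print(f"{word}: reported {claimed}, actual {actual}")
```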

ChatGPT then offers a sum:

Total: 56 instances of 'e'.

Which I think is wrong — unless I and my program miscounted, the sum of the (right and wrong) word-level counts that ChatGPT offers is actually 55.

The actual number of 'e' letters in that sentence is 50, and oddly enough, when I ask again for a total count, ChatGPT gets it right:

And actually offers counting code, which actually runs and actually works:

```python
# The sentence to analyze
sentence = ("When in the Course of human events, it becomes necessary for one people to dissolve the political bands "
            "which have connected them with another, and to assume among the powers of the earth, the separate and "
            "equal station to which the Laws of Nature and of Nature's God entitle them, a decent respect to the opinions "
            "of mankind requires that they should declare the causes which impel them to the separation.")

# Counting the occurrences of the letter 'e'
count_e = sentence.lower().count('e')

count_e  # a bare expression displays 50 in a notebook or REPL; use print(count_e) in a script
```

So why it screwed up the first answer so badly remains a puzzle. It seems that the Letter Equity Task Force still has some things to do, and people should continue to be careful about relying on ChatGPT for even the simplest forms of data analysis.

The whole dialogue is here.

Yuval said,

December 5, 2024 @ 7:13 am

It's not really a puzzle if you listen to the explanation every time it's given in every instance that people decide to make this thing viral again.

Mark Liberman said,

December 5, 2024 @ 7:34 am

@Yuval:

But telling us again and again that ChatGPT tokenizes text into substrings that are not "words", and counts letters in those idiosyncratically divided strings in a way that gives nonsensical answers to questions about words and sentences, doesn't solve the puzzle of why its authors insist on continuing to apply those tokenization and counting methods to problems that they've been shown again and again to fail at. Or rather, why they don't try to guide the system to use an analysis method suited to answering the questions at issue.

Especially when they also show us that the system is actually capable of doing it right, sometimes.

Similar issues arise throughout the range of possible applications of such systems, not just in the not-very-crucial case of counting letters. That doesn't mean that these systems are useless, just that they're not generally reliable outside of cases they've been specifically trained and tested on.

Sometimes this is because of first-level issues like tokenization, but there are plenty of other issues at other levels. Which is why the most likely medium-term outcome is not one system that solves every possible problem, but rather many systems individually tailored to solve particular real-world problems in particular contexts.

(And "tailored" here doesn't just mean "fine-tuned"…)

Yuval said,

December 5, 2024 @ 8:38 am

In many ways, it does indeed solve the puzzle: a model purported to be usable on an endless range of problems (or at least perceived by its users to be) addresses the very long tail of problems according to its default behavior. That default behavior happens to be "sequentially predicting the next token according to the current sequence of tokens in the prompt + answer so far". That's it. Claiming that "its authors insist on continuing to apply those tokenization and counting methods to problems that they've been shown again and again to fail at" misses the point: this is not intentional programming. It's just a not-important-enough problem, as you correctly identified. The model isn't counting; it's completing text. If the data didn't jerk its parameters enough to induce a correct count of each letter in each possible word, it just hallucinates a number. That's the first level.

The second level is that you can't force it to retokenize and count again, under the regular LM regime, because that's not how it works either. The most plausible (to me) strategy is what's called "tool use", i.e. calling external code, which seems to be what it was finally doing in that last example it gave. But recognizing the need to call a tool and then correctly calling it does require some more sophisticated, or at least dedicated, training, which is likely done on a priority basis, and this trick is low-priority. I wouldn't be surprised if the strawberry example from your first exemplar isn't simply hard-coded because that one specifically was giving them so much embarrassing PR.
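As a rough illustration of the "tool use" idea, here is a sketch of a front end that recognizes letter-counting questions and answers them with ordinary string code instead of text prediction. Everything in it is hypothetical (`LETTER_QUESTION`, `count_letter`, `answer`). In deployed systems the model itself decides to emit a tool call, and nothing here describes OpenAI's mechanism. A hand-written pattern like this one is also brittle in its own way: any phrasing it does not anticipate falls through to the model.

```python
import re

# Hypothetical pattern for letter-counting questions such as
#   How many Rs are in strawberry?
#   How many instances of the letter R are there in the word "strawberry"?
LETTER_QUESTION = re.compile(
    r"how many\s+(?:instances of the letter\s+)?"
    r"[\"']?([a-z])[\"']?(?:'?s)?\s+"                  # the letter, maybe quoted or pluralized
    r"(?:are\s+)?(?:there\s+)?in\s+(?:the\s+word\s+)?"
    r"[\"']?([a-z']+)",                                 # the word to examine
    re.IGNORECASE,
)


def count_letter(word: str, letter: str) -> int:
    """Deterministic, case-insensitive letter count: the 'tool'."""
    return word.lower().count(letter.lower())


def answer(prompt: str) -> str:
    """Route recognized letter-counting questions to count_letter()."""
    match = LETTER_QUESTION.search(prompt)
    if match is None:
        # Anything unrecognized would go to ordinary next-token generation.
        return "FALLBACK: pass the prompt to the language model"
    letter, word = match.group(1).lower(), match.group(2).strip("'")
    n = count_letter(word, letter)
    return f'There are {n} instances of "{letter}" in "{word}".'


print(answer("How many Rs are in strawberry?"))
```

With this pattern, both phrasings BZ reports later in the thread reach the counter. A question it does not recognize returns the fallback marker.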

Finally, the reason I made my first comment was that I've grown accustomed to laypeople being befuddled at this, but it irks me to see an NLP-aware outlet not mention tokenization at all when discussing this problem. Tokenization is the root cause of this pathology, and knowing that makes things much less mysterious. In my view, one of this blog's strengths has always been informing the public rather than playing into hype machines.

Yuval said,

December 5, 2024 @ 8:41 am

"I wouldn't be surprised if … isn't simply"

One more for the monkey brains file :/

Mark Liberman said,

December 5, 2024 @ 9:33 am

@Yuval: " I wouldn't be surprised if the strawberry example from your first exemplar isn't simply hard-coded because that one specifically was giving them so much embarrassing PR."

They seem to have fixed letter-counting in cases where ChatGPT4o is asked about counts for whatever letter in individual words, not just about r's in "strawberry", e.g. here. So I remain puzzled about why it does so badly when it's asked about letter counts in a sentence, and divides the sentence up for word-specific counts, many of which it gets wrong (10 out of 71, in the sentence cited in the OP).

And then there's the question of why it can't add up its own (right and wrong) counts correctly — it could just say "I can't count", or create a counting program that works, or whatever.

And why does it leave some words out when dividing up a sentence into words? Presumably this is also somehow the fault of its tokenization, but the details are unclear to me. And there are many significant questions that depend on accurate word counts, e.g. type/token relations and other measures of lexical diversity.
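The two things asked for here, a counting program whose sum matches its own per-word counts and a word division that drops nothing, fit in a few lines. The word pattern below is an assumption: runs of letters with at most one internal apostrophe, so "Nature's" counts as a single word. With it, the per-word counts keep the zero-count words, sum to the same total as a direct count, and yield simple token and type figures for the sentence.

```python
import re
from collections import Counter

sentence = (
    "When in the Course of human events, it becomes necessary for one people "
    "to dissolve the political bands which have connected them with another, "
    "and to assume among the powers of the earth, the separate and equal "
    "station to which the Laws of Nature and of Nature's God entitle them, "
    "a decent respect to the opinions of mankind requires that they should "
    "declare the causes which impel them to the separation."
)

# Assumed word pattern: letters, optionally with one internal apostrophe.
WORD = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)?")
words = WORD.findall(sentence)

# Per-word counts of 'e', keeping the zero-count words instead of skipping them.
e_counts = [(w, w.lower().count("e")) for w in words]
per_word_total = sum(n for _, n in e_counts)

# Spaces and punctuation contain no 'e', so the two totals must agree.
assert per_word_total == sentence.lower().count("e")

# Type/token figures on case-folded words.
types = Counter(w.lower() for w in words)
print(f"tokens={len(words)} types={len(types)} "
      f"e_total={per_word_total} ttr={len(types) / len(words):.3f}")
# prints: tokens=71 types=49 e_total=50 ttr=0.690
```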

BZ said,

December 5, 2024 @ 10:18 am

While the exact question How many instances of the letter R are there in the word "strawberry"? does get a proper response, "How many Rs are in strawberry?" still results in "two".

Mark Liberman said,

December 5, 2024 @ 10:49 am

@BZ: 'While the exact question How many instances of the letter R are there in the word "strawberry"? does get a proper response, "How many Rs are in strawberry?" still results in "two".'

Interesting. So if there's some hard-coding of prompts, it's kind of fragile — like most LLM prompt-related stuff.

Vance Koven said,

December 5, 2024 @ 1:29 pm

So another sure sign that ChatGPT fails the Turing Test is that, after the fifth time you ask it to count the Rs in strawberry, it doesn't reply asking you to get off its Rs already.

PeterB said,

December 5, 2024 @ 1:49 pm

The "problem" is that ChatGPT is answering the "how many Rs in 'strawberry'" the way any normal human being would, which is confusing to people who expect it to behave like the hyper-literal robots of 1950s science-fiction.

Jonathan Smith said,

December 5, 2024 @ 2:00 pm

@Peter B

from chatgpt just now,

"The word "berry" has 2 R's."

"The word "cranberry" has 2 R's."

"The word "elderberry" has 3 R's."

"The string "frsjfrljdflsijfrlfrrr" has 7 R's."

So no, and no also about normal human beings.

Thing is these people really, really don't want to jury-rig. They are ideologues with visions of "magic" and "general AI" (quotes). Money is nice of course… but immortality might be better.
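The quoted answers can be checked with one ordinary string count per item. Two of the four are wrong: "cranberry" has three r's, and the random string has six, not seven.

```python
# Letter counts attributed to ChatGPT in the comment above.
quoted = {
    "berry": 2,
    "cranberry": 2,
    "elderberry": 3,
    "frsjfrljdflsijfrlfrrr": 7,
}

# The actual counts, computed with str.count.
actual = {s: s.count("r") for s in quoted}

for s, claimed in quoted.items():
    verdict = "ok" if actual[s] == claimed else "wrong"
    print(f"{s}: claimed {claimed}, actual {actual[s]} ({verdict})")
```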

Chris Button said,

December 5, 2024 @ 2:31 pm

The same could be said for crypto/blockchain as well. It seems to be ignorance of "tokenization" that has resulted in gross misunderstanding/confusion. Back when NFTs were all the rage, people didn't seem to pay too much attention to what the "T" actually signified.

Chris Button said,

December 5, 2024 @ 2:50 pm

Although, before I'm accused of comparing apples with oranges, I should caveat that tokenization in blockchain (recording asset ownership) and tokenization in AI (for data analysis) don't have much in common beyond the general concept of tokens.

PeterB said,

December 5, 2024 @ 7:27 pm

As Yuval has repeatedly tried to explain, ChatGPT isn't "counting" at all, just trying to produce plausible continuations. It says there are two "r"s in "strawberry" because no native speaker of English on the planet has ever or will ever omit the "r" in "straw-" and only the most obnoxious pedant would pretend not to understand that it is the "-berry" part that is at issue. So the corpus of texts that ChatGPT is relying on recognizes this as a "natural" response. Similarly for "cranberry". But there are speakers who might omit the "r" of "elder-", and the training corpus includes enough examples of this sort of thing (e.g. "library", "february") that ChatGPT is able to pick up on it (which is actually kind of impressive…).

Of course, once you have identified a weak spot, you can explore and drill down on it, eliciting sillier and sillier responses until some exasperated programmer is driven to hand-code an ad hoc workaround.

All we're really doing is confirming that LLMs do not "reason". The shocking thing about these models is that they suggest very clearly that humans don't do a lot of reasoning either.

PeterB said,

December 5, 2024 @ 9:00 pm

Rereading my last comment, I realize it might seem a bit offensive. I did not mean to call anyone here a pedant (at least in a pejorative sense), nor to suggest that they do not reason!

Rather, I was trying to say that a human, presented with "how many 'r's in strawberry", understands that this is not a question about strawberries, but rather a request for spelling assistance. They might reply with 2 or 3, depending on what they take the interlocutor's trouble to be, but will in either case recognize that this calls for some level of reasoning or at least analysis. But all the LLM "knows" is what kind of responses humans have made in the past in response to similar kinds of questions.

Haamu said,

December 5, 2024 @ 9:33 pm

@PeterB, you're right, I hadn't thought of it this way:

But you seem to have put your finger on what's been bugging me as I've been observing this whole "strawberry" brouhaha: The question would almost never be asked IRL. Now that we've moved past the era of manual typesetting, probably the only person who really needs to know the true total number of Rs in "strawberry" is the host of Wheel of Fortune.

What this suggests is we might need a sort of meta-Turing Test: namely, one that asks, "Are you a human that is trying to converse with a human, or are you just a human that is trying to trip up an AI?"

Haamu said,

December 5, 2024 @ 10:37 pm

I wonder if we would get better math competence out of LLMs by modeling their training on how we train the neural nets of human children to do math.

I just posed this suggestion to ChatGPT o1 and the resulting conversation was pretty interesting. (Those who ask these things merely to count letters are missing a fair bit of fun.)

At the end, I did ask it to count the Rs in "strawberry" and it did a creditable job.

Philip Taylor said,

December 6, 2024 @ 2:46 pm

"They might reply with 2 or3, depending on what they take the interlocutor's trouble to be" — they might also reply with two or three depending on whether they can "see" the "r" in "straw" (which I could not). Even after reading « no native speaker of English on the planet has ever or will ever omit the "r" in "straw-" » I failed to consciously see the "r" to which reference had been made.

Jonathan Smith said,

December 6, 2024 @ 6:38 pm

Of *course* "ChatGPT isn't 'counting' at all, just trying to produce plausible continuations." Point is it's totally wrong — wronger even than thinking the system is good at math — to assert that ChatGPT is answering such queries "the way any normal human being would," providing responses which are "natural" in light of readily observable or intuitable features of its corpus; e.g., "impressively" answering incorrectly (sorry, "naturally") in the case of 'cranberry' but correctly in the case of 'elderberry' given that "there are speakers who might omit the 'r' of 'elder-" (and note that these [and all] answers change minute to minute.) This is very weird Altman-apologist-speak: "OK fine the answer is 'wrong' and yet… it's actually deeply right, more human than human even! F*** the pedants!"

Chris Button said,

December 6, 2024 @ 9:45 pm

I am certainly not an expert in LLMs. But I thought I would add a few words based on the cursory understanding I have acquired when working on certain associated pursuits.

Tokenization for LLMs does not accord with morphology. It is simply based on assigning "tokens" (unique numerical values) to chunks that repeat.

OpenAI's tokenizer assigns 3 tokens to strawberry accordingly:

str

aw

berry
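To make this concrete: what the model receives is a sequence of token IDs, not letters. The sketch below uses a made-up vocabulary with made-up IDs; only the str/aw/berry split comes from the comment above. It also segments words by greedy longest match, which is a simplification, since OpenAI's tokenizers actually use byte-pair encoding (note that "straw" is deliberately left out of the toy vocabulary, or greedy matching would not reproduce that split). Counting the r's requires mapping each ID back to its spelling, which a model would have to learn only indirectly from training data.

```python
# Hypothetical vocabulary: three subword pieces plus single-letter fallbacks.
VOCAB = {"str": 101, "aw": 102, "berry": 103,
         "s": 1, "t": 2, "r": 3, "a": 4, "w": 5, "b": 6, "e": 7, "y": 8}


def tokenize(word: str, vocab: dict[str, int]) -> list[str]:
    """Greedy longest-match segmentation (a stand-in for real BPE)."""
    pieces, i = [], 0
    while i < len(word):
        for j in range(len(word), i, -1):  # try the longest candidate first
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            raise ValueError(f"no vocabulary entry covers {word[i]!r}")
    return pieces


pieces = tokenize("strawberry", VOCAB)
ids = [VOCAB[p] for p in pieces]  # this is all the model actually sees

# Recovering a letter count means mapping each ID back to its spelling.
id_to_piece = {v: k for k, v in VOCAB.items()}
r_count = sum(id_to_piece[t].count("r") for t in ids)
print(pieces, ids, r_count)
# prints: ['str', 'aw', 'berry'] [101, 102, 103] 3
```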

So, despite its name, an LLM can also work with images, where tokens are assigned to chunks of pixels.

One could even venture to say that LLMs have nothing to do with linguistics whatsoever. Perhaps they aren't even a particularly suitable topic for LLog in that regard. But it would seem remiss not to discuss them here.