"Emote Portrait Alive"


EMO, by Linrui Tian, Qi Wang, Bang Zhang, and Liefeng Bo from Alibaba's Institute for Intelligent Computing, is "an expressive audio-driven portrait-video generation framework. Input a single reference image and the vocal audio, e.g. talking and singing, our method can generate vocal avatar videos with expressive facial expressions, and various head poses".

As far as I know, there's no interactive demo so far, much less code — just a github demo page and an arXiv.org paper.

Their demo clips are very impressive — a series of X posts from yesterday has gotten 1.1M views already. Here's Leonardo DiCaprio artificially lip-syncing Eminem:

(There are plenty more where that came from — I uploaded that sample to YouTube because I was unable to persuade the html <video> element to modify the display width without distortion.)

I'm always skeptical of hand-selected synthesis demos, since we have no way of knowing how many problematic attempts were discarded, or what sorts of side-information might have been provided. Still, their examples are impressive. The arXiv.org paper explains:

To train our model, we constructed a vast and diverse audio-video dataset, amassing over 250 hours of footage and more than 150 million images. This expansive dataset encompasses a wide range of content, including speeches, film and television clips, and singing performances, and covers multiple languages such as Chinese and English. The rich variety of speaking and singing videos ensures that our training material captures a broad spectrum of human expressions and vocal styles, providing a solid foundation for the development of EMO. We conducted extensive experiments and comparisons on the HDTF dataset, where our approach surpassed current state-of-the-art (SOTA) methods, including DreamTalk, Wav2Lip, and SadTalker, across multiple metrics such as FID, SyncNet, F-SIM, and FVD. In addition to quantitative assessments, we also carried out a comprehensive user study and qualitative evaluations, which revealed that our method is capable of generating highly natural and expressive talking and even singing videos, achieving the best results to date.

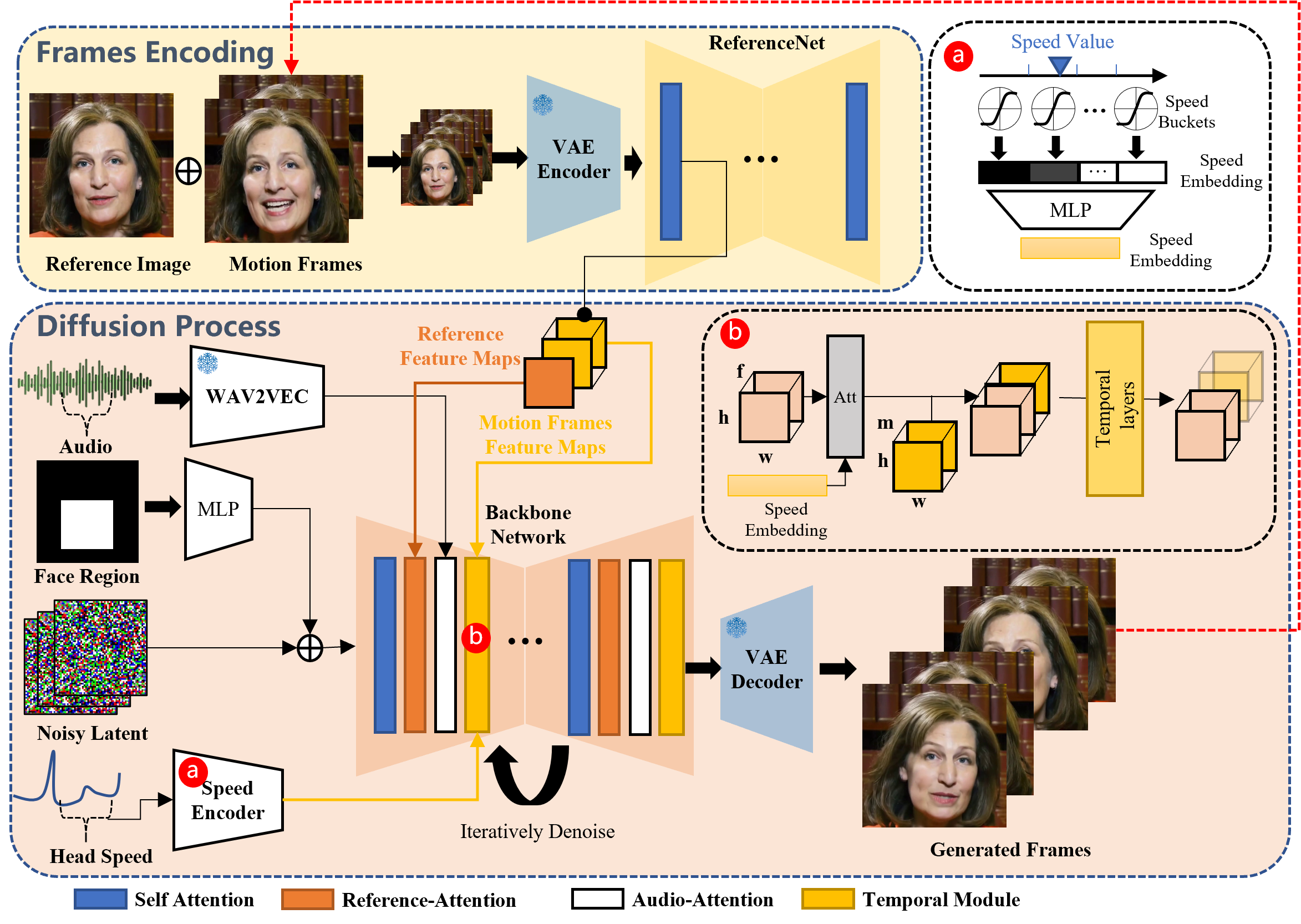

…and provides this graphical overview:

Here's AI Mona Lisa lip-syncing Yuqi's cover of a Miley Cyrus song. Because the syllabic pace is slower, it's easier to visually evaluate the (generally excellent) phonetic lip/face synchrony:

Update — I was a bit puzzled by their account of the training set size: "over 250 hours of footage and more than 150 million images". If the images came from the standard digital video frame rate of 30 fps, that would be 250*60*60*30 = 27 million. The standard movie rate of 24 fps would yield even fewer, 21.6 million. So did they actually use more "footage"? or did they do some interpolation? or did they multiply wrong?
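The arithmetic can be checked with a few lines of Python. This is a rough sketch assuming continuous footage at a constant frame rate with every frame kept; the function names are hypothetical, chosen just for illustration. It also runs the numbers backwards: what frame rate would 250 hours need to reach 150 million frames, and how many hours at 30 fps would it take?

```python
# Back-of-the-envelope check of "250 hours of footage" vs. "150 million images".
# Assumption: constant frame rate, every frame kept, no interpolation.
SECONDS_PER_HOUR = 60 * 60


def frame_count(hours, fps):
    """Total frames in `hours` of video sampled at `fps` frames per second."""
    return hours * SECONDS_PER_HOUR * fps


def implied_fps(hours, frames):
    """Frame rate needed for `hours` of video to yield `frames` frames."""
    return frames / (hours * SECONDS_PER_HOUR)


def hours_needed(frames, fps):
    """Hours of video needed to yield `frames` frames at `fps`."""
    return frames / (fps * SECONDS_PER_HOUR)


if __name__ == "__main__":
    for fps in (24, 30):
        print(f"{fps} fps: {frame_count(250, fps):,} frames")
    print(f"150M frames in 250 h implies {implied_fps(250, 150e6):.1f} fps")
    print(f"150M frames at 30 fps needs {hours_needed(150e6, 30):,.0f} hours")
```

At 30 fps, 150 million frames would correspond to roughly 1,389 hours of video, and squeezing them into 250 hours would need about 167 fps. So the gap is much too large to be explained by frame rate alone.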

The paper says in another section that

We collected approximately 250 hours of talking head videos from the internet and supplemented this with the HDTF [34] and VFHQ [31] datasets to train our models. As the VFHQ dataset lacks audio, it is only used in the first training stage.

That would explain the difference, but it means that the training was actually based on a lot more than "250 hours of footage".

Update #2 — There are quite a few recent experiments in similar directions, mostly following up on Wav2Lip (K.R. Prajwal, et al., "A lip sync expert is all you need for speech to lip generation in the wild", 2020), whose authors provided an interactive demo and source code.

For example, some recent work at NVidia provides an interesting set of videos comparing their system's outputs to those of several others.

David L said,

February 29, 2024 @ 10:36 am

If I were Leonardo DiCaprio or Eminem I would be calling a lawyer.

Jim said,

February 29, 2024 @ 6:46 pm

I saw an ad in a phone app for an iOS app doing this just yesterday. Some version of the tech appears to be out there already.

Scott JP said,

March 1, 2024 @ 4:40 pm

Singing is one thing, but what about speaking? Can someone now find a picture of me and create a video with me saying something I never said?

Mark Liberman said,

March 1, 2024 @ 5:14 pm

@Scott JP: "Can someone now find a picture of me and create a video with me saying something I never said?"

Yes, by putting together several things.

This program can take a head-shot and an audio file — speaking just like singing — and create a video of the pictured person speaking or singing the recording. It doesn't create the audio, and it doesn't try to imitate a given person's voice.

Of course there are text-to-speech synthesis programs, and there are ways to make such synthesis sound like a particular voice. And with TTS, the task of figuring out what the lips and jaw and face should be doing is actually easier than with only audio.

Anyhow the piece parts exist to do what you ask about, and in fact such "deep fakes" have been around for a while.

What's new about this program — if anything — is the quality of its lip-syncing and its head and face movements.

Jarek Weckwerth said,

March 3, 2024 @ 5:15 pm

@ Mark Liberman: the quality of its lip-syncing and its head and face movements

Yes, in particular the head movements are finally done in an almost natural manner. For a long time in avatar animation, the head would just do some random slo-mo "floating around" without much relation to the rhythm of the speech. Here, the movements are more punctuated and, let's say, abrupt, and generally in sync with the audio. Impressive.