Watermarking text?


Ashley Belanger, "OpenAI, Google will watermark AI-generated content to hinder deepfakes, misinfo", ars technica 7/21/2023:

Seven companies — including OpenAI, Microsoft, Google, Meta, Amazon, Anthropic, and Inflection — have committed to developing tech to clearly watermark AI-generated content. That will help make it safer to share AI-generated text, video, audio, and images without misleading others about the authenticity of that content, the Biden administration hopes.

The link goes to a 7/21 White House document with the title "FACT SHEET: Biden-Harris Administration Secures Voluntary Commitments from Leading Artificial Intelligence Companies to Manage the Risks Posed by AI". One of that document's many bullet points:

- The companies commit to developing robust technical mechanisms to ensure that users know when content is AI generated, such as a watermarking system. This action enables creativity with AI to flourish but reduces the dangers of fraud and deception.

Belanger's ars technica article notes that

It's currently unclear how the watermark will work, but it will likely be embedded in the content so that users can trace its origins to the AI tools used to generate it.

There's actually a fair amount of stuff Out There about how textual watermarking might work, e.g. Keith Collins, "How ChatGPT Could Embed a ‘Watermark’ in the Text It Generates", NYT 2/17/2023:

Identifying generated text, experts say, is becoming increasingly difficult as software like ChatGPT continues to advance and turns out text that is more convincingly human. OpenAI is now experimenting with a technology that would insert special words into the text that ChatGPT generates, making it easier to detect later. The technique is known as watermarking.

The watermarking method that OpenAI is exploring is similar to one described in a recent paper by researchers at the University of Maryland, said Jan Leike, the head of alignment at OpenAI.

The "recent paper" link goes to John Kirchenbauer et al., "A Watermark for Large Language Models", to which arxiv.org gives a publication date (6/6/2023) nearly four months after the NYT article that cites it. That's of course due to the magic of arxiv.org, which successively updated the .pdf link from the v1 version of 1/24 to the v2 version of 1/27 and finally to the v3 version of 6/6. Here's the abstract:

Potential harms of large language models can be mitigated by watermarking model output, i.e., embedding signals into generated text that are invisible to humans but algorithmically detectable from a short span of tokens. We propose a watermarking framework for proprietary language models. The watermark can be embedded with negligible impact on text quality, and can be detected using an efficient open-source algorithm without access to the language model API or parameters. The watermark works by selecting a randomized set of “green” tokens before a word is generated, and then softly promoting use of green tokens during sampling. We propose a statistical test for detecting the watermark with interpretable p-values, and derive an information-theoretic framework for analyzing the sensitivity of the watermark. We test the watermark using a multi-billion parameter model from the Open Pretrained Transformer (OPT) family, and discuss robustness and security.
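To make the abstract's "green token" idea concrete, here is a toy sketch of how I read it: hash the previous token to seed a random partition of the vocabulary, add a bonus δ to the logits of the "green" part, and sample. Everything specific here is my own assumption for illustration, not the authors' code: the SHA-256 seeding, the flat-logit toy "model", and the function names `green_list`, `sample_watermarked`, `green_fraction` and `generate`.

```python
import hashlib
import math
import random


def green_list(prev_token_id, vocab_size, gamma=0.25):
    """Return a pseudo-random 'green' subset of token ids, seeded by the previous token."""
    # Assumption: SHA-256 of the previous token id stands in for the paper's hash function.
    digest = hashlib.sha256(str(prev_token_id).encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    ids = list(range(vocab_size))
    rng.shuffle(ids)
    return set(ids[: int(gamma * vocab_size)])


def sample_watermarked(logits, prev_token_id, gamma=0.25, delta=2.0, rng=random):
    """Add delta to green-token logits, then sample from the resulting softmax."""
    green = green_list(prev_token_id, len(logits), gamma)
    boosted = [x + delta if i in green else x for i, x in enumerate(logits)]
    peak = max(boosted)  # subtract the max for numerical stability
    weights = [math.exp(x - peak) for x in boosted]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def green_fraction(tokens, vocab_size, gamma=0.25):
    """Fraction of tokens (after the first) that are green given their predecessor."""
    hits = sum(
        tokens[i] in green_list(tokens[i - 1], vocab_size, gamma)
        for i in range(1, len(tokens))
    )
    return hits / (len(tokens) - 1)


def generate(n_tokens, vocab_size=1000, seed=0, **kwargs):
    """Toy 'model' with flat logits, so any green bias comes from the watermark alone."""
    rng = random.Random(seed)
    tokens = [0]
    flat_logits = [0.0] * vocab_size
    for _ in range(n_tokens):
        tokens.append(sample_watermarked(flat_logits, tokens[-1], rng=rng, **kwargs))
    return tokens


if __name__ == "__main__":
    marked = generate(500, seed=1)
    unmarked = generate(500, seed=1, delta=0.0)
    print("green share with watermark:   ", round(green_fraction(marked, 1000), 2))
    print("green share without watermark:", round(green_fraction(unmarked, 1000), 2))
```

With flat logits, γ = 0.25 and δ = 2, the green share should come out near 0.71 with the watermark and near 0.25 without it. Note that the detector only needs the hash rule and the token sequence, not the model, which is the point the abstract makes about detection "without access to the language model API or parameters".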

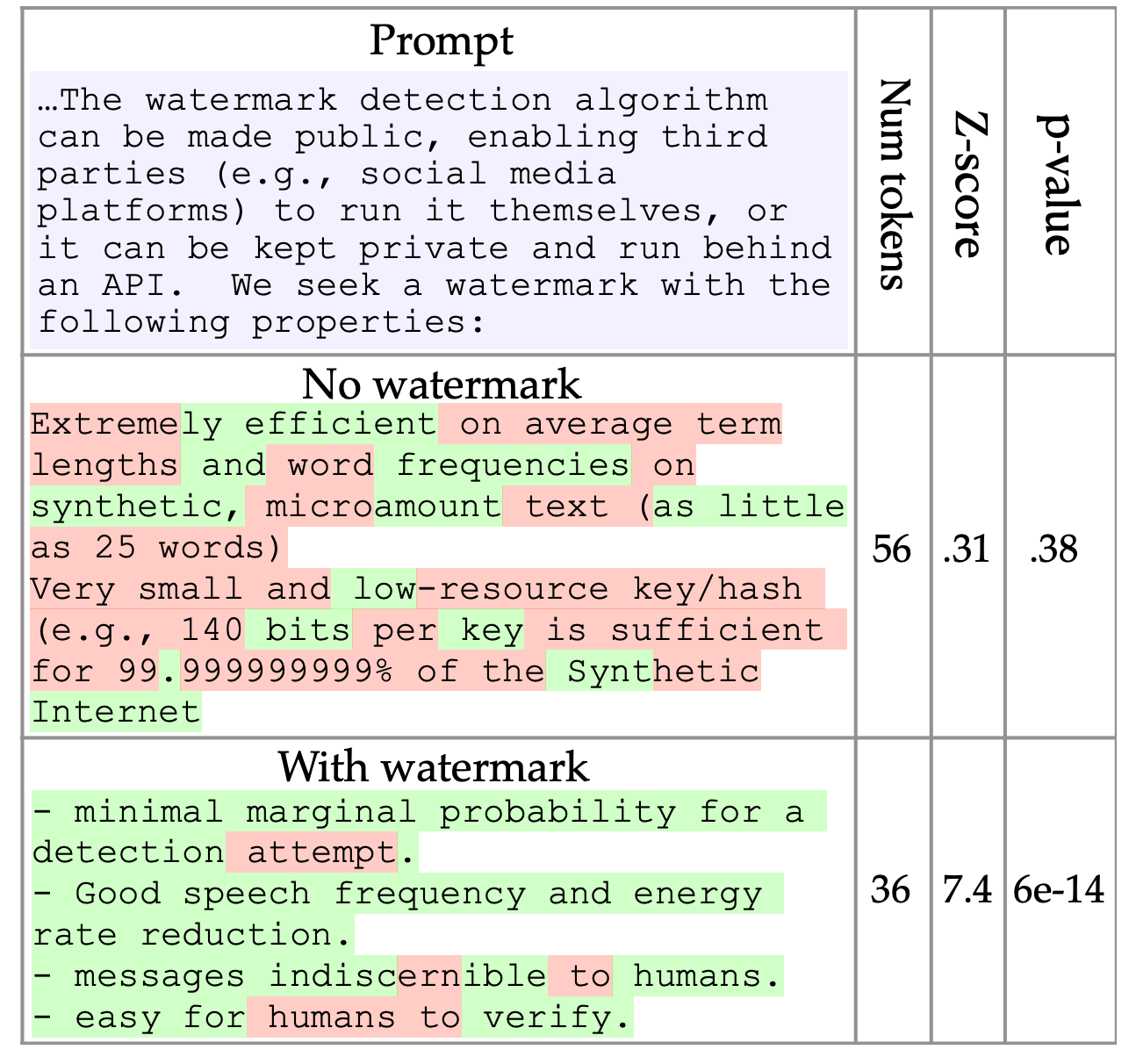

Here's their illustrative example:

And its caption:

Figure 1. Outputs of a language model, both with and without the application of a watermark. The watermarked text, if written by a human, is expected to contain 9 “green” tokens, yet it contains 28. The probability of this happening by random chance is ≈ 6×10⁻¹⁴, leaving us extremely certain that this text is machine generated. Words are marked with their respective colors. The model is OPT-6.7B using multinomial sampling. Watermark parameters are γ, δ = (0.25, 2).
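The caption's arithmetic can be roughly reproduced with a one-proportion z-test, which is the kind of test the paper describes. This is a sketch under stated assumptions: the token count of 36 is my inference from "9 expected green tokens" at γ = 0.25, and the function names are mine.

```python
import math


def watermark_z_score(green_count, total_tokens, gamma):
    """z-score of the observed green count under the 'no watermark' null hypothesis."""
    expected = gamma * total_tokens
    std_dev = math.sqrt(total_tokens * gamma * (1.0 - gamma))
    return (green_count - expected) / std_dev


def one_sided_p_value(z):
    """Upper-tail probability of a standard normal variable exceeding z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# Assumption: 36 tokens, inferred from 9 expected green tokens / gamma = 0.25.
total_tokens = 36
z = watermark_z_score(28, total_tokens, 0.25)
p = one_sided_p_value(z)
print(f"z = {z:.2f}, p = {p:.1e}")
```

Under these assumptions the z-score is a little above 7 and the p-value is on the order of 10⁻¹³, the same ballpark as the caption's figure. The exact number depends on the true token count and on rounding, so I wouldn't read anything into the small difference.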

Read the paper if you want details. They list the obvious attacks:

Three types of attacks are possible. Text insertion attacks add additional tokens after generation that may be in the red list and may alter the red list computation of downstream tokens. Text deletion removes tokens from the generated text, potentially removing tokens in the green list and modifying downstream red lists. This attack increases the monetary costs of generation, as the attacker is “wasting” tokens, and may reduce text quality due to effectively decreased LM context width. Text substitution swaps one token with another, potentially introducing one red list token, and possibly causing downstream red listing. This attack can be automated through dictionary or LM substitution, but may reduce the quality of the generated text.

They assert, with some empirical support, that "the watermark is computationally simple to verify without access to the underlying model, false positive detections are statistically improbable, and the watermark degrades gracefully under attack."
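For a rough sense of what "degrades gracefully" means in numbers, here is a back-of-the-envelope sketch of how many green tokens an attacker would have to turn red to push the Figure 1 example below a detection threshold. The assumptions are loud ones: the threshold z = 4 is chosen for illustration, the 36-token length is inferred from the caption, each edit is assumed to flip exactly one token from green to red, and downstream effects on the next token's green list are ignored.

```python
import math


def min_green_to_detect(total_tokens, gamma, z_threshold):
    """Smallest green count whose z-score strictly exceeds z_threshold."""
    cutoff = gamma * total_tokens + z_threshold * math.sqrt(
        total_tokens * gamma * (1.0 - gamma)
    )
    return math.floor(cutoff) + 1


def flips_needed(green_count, total_tokens, gamma=0.25, z_threshold=4.0):
    """Green-to-red changes needed to stop exceeding the threshold (0 if already below)."""
    highest_undetected = min_green_to_detect(total_tokens, gamma, z_threshold) - 1
    return max(0, green_count - highest_undetected)


# Figure 1 example: 28 green tokens out of an assumed 36.
print(min_green_to_detect(36, 0.25, 4.0))  # green tokens needed to trigger detection
print(flips_needed(28, 36))                # edits needed to escape detection
```

On these assumptions, detection needs at least 20 green tokens out of 36, so the attacker has to neutralize 9 of the 28, a quarter of the whole passage. That is real work for a short text, but it is the kind of work a paraphrasing tool does by design.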

I'm not entirely convinced that these approaches are safe against substitution and paraphrase attacks. But the most obvious issue is that there will be lots of LLM systems out there in the wild, developed and extended by people and companies whose goal is precisely to provide undetectable automatic ghostwriting services.

This post says nothing about watermarking techniques for image, audio and video data. There are well known watermarking techniques for those applications, but again, there are going to be available systems without any such safeguards.

[And for why watermarks have anything to do with water, see the Wikipedia page.]

Update — Another straw in the wind… OpenAI's 1/31/2023 product announcement "New AI classifier for indicating AI-written text: We’re launching a classifier trained to distinguish between AI-written and human-written text" has this note added below the headline:

As of July 20, 2023, the AI classifier is no longer available due to its low rate of accuracy. We are working to incorporate feedback and are currently researching more effective provenance techniques for text, and have made a commitment to develop and deploy mechanisms that enable users to understand if audio or visual content is AI-generated.

On the other hand, as of July 26, 2023, there's no information about concrete steps towards "mechanisms that enable users to understand if audio or visual content is AI-generated".

For another perspective, see Lora Kelly's 7/24/2023 Atlantic article, "AI Companies Are Trying to Have It Both Ways: In agreeing to guardrails, industry leaders are nodding to the possible risks of their creations without pausing their aggressive competition":

In recent months, as AI tools have achieved widespread usage and interest, OpenAI and its competitors have been doing an interesting dance: They are boosting their technology while also warning, many times in apocalyptic terms, of its potential harms. […]

On Friday, leaders from seven major AI companies—OpenAI, Amazon, Anthropic, Google, Inflection, Meta, and Microsoft—met with Joe Biden and agreed to a set of voluntary safeguards. […]

“For AI firms, this is a dream scenario, where they can ease regulatory pressure by pretending this fixes the problem, while ultimately continuing business as usual,” Albert Fox Cahn, the executive director of the Surveillance Technology Oversight Project, told me in an email. He added that other companies whose products pose safety risks, such as car manufacturers and nuclear-power plants, don’t get to self-regulate.

It remains unclear what the dangers really are, and what kind of safeguards would work. A blanket prohibition on "deep learning" experimentation, aside from being a terrible idea in general, would just drive the development underground, overseas, or into secret labs motivated by apocalyptic hopes.

So I agree with Kelly's conclusion that the announced "voluntary safeguards" are basically just PR cosmetics.

And as a side note, there should be more discussion about the value of "apocalyptic" warnings as advertising.

Update — for more on problems with digital watermarking in general, see Vittoria Elliott, "Big AI Won’t Stop Election Deepfakes With Watermarks", Wired 7/27/2023.

AntC said,

July 25, 2023 @ 10:20 pm

So the onus is not only on the AI tool to place the watermark, but also on anybody presenting the output to retain the mark(?) So easily evaded by copy-pasting the text?

There seems to be an epidemic of lazy AI-powered journalism in my field. (I'm not saying it's restricted to there, but that's where I know enough about the topic to smell a rat.) Typical article title 'XXX vs. YYY: what's the difference?'/which is better?/the difference is complexity. For example

SQL vs. CSS: …. Those two are text-based 'programming languages' kinda, but in completely separate domains. Anybody who'd even mention them in the same breath doesn't know either. The article even concedes "their overall purpose isn’t at all aligned." But don't let that stop it coming up with bland wordsalad. One corker: "Neither one of these … is what you could call difficult to learn." I've been programming in SQL for 40 years. I beg to differ: it has many dark corners I keep well clear of.

The article wobbles about between describing the two; (failing to) distinguishing them; and careers advice for those considering learning them — but fails to state that since they cover separate domains, you might well get a job that needs both.

Of course there's no proof such articles are AI-generated. Probably some human has given them a quick once-over whilst copy-pasting from the watermarked AI source.

Yuval said,

July 26, 2023 @ 1:14 am

The Maryland paper was quickly rebutted/complemented (choose your perspective) by this work from Stanford.

AntC said,

July 26, 2023 @ 1:28 am

So the onus is not only on the AI tool to place the watermark, but also on anybody presenting the output to retain the mark(?) …

If I'm following along the Kirchenbauer et al. paper, the trick needs the AI tool-providers to agree a set of 'skunked' words/phrases/token-sequences that they'll insert at random in their output, with greater likelihood of appearance than in human-authored text. I guess the words have to be relatively content-free, otherwise they'll inject some unlikely semantics into the text. But 'and'?, 'humans'? OK it's bad style to sprinkle too many 'and's — except if you're writing the coda of the greatest stream-of-consciousness novel. What if you're summarising anthropological research?

… So easily evaded by copy-pasting the text?

Ah, OK not that easy. But every schoolkid knows (so I'm told ;-) that if you're cribbing somebody else's work, you flip a large sample of the words/phrases into synonyms. Also shuffle around some sentence structure. I guess this is easier in English than languages with smaller vocabularies or more constrained sentence-order. As the paper says:

Will the required effort be enough to deter 'bad actor' AI companies from promulgating fake news "without misleading others"? Russian chatbots seem to have unlimited budget.

"Reduce quality" of what is already dreadful AI output? I suspect the people who are already taken in by this sort of thing will find this is the sort of thing they are taken in by.

That 'With watermark' sample seems barely acceptable English to me. I'm likely to reject it as non-human-generated. 'messages indiscernable to humans' sounds unidiomatic (and very definitely discernable) to this human. So what has this fancy manipulation achieved?

Gregory Kusnick said,

July 26, 2023 @ 2:05 am

Exactly. So instead of relying on dubious schemes for labeling untrustworthy content, it seems much more effective to focus on labeling trustworthy content with unforgeable evidence of provenance, using the same sort of cryptographic tools we use to verify the authenticity of websites we visit. This puts the incentives where they belong: the producers of reliable content will want us to know it's legit, whereas the producers of unreliable content will generally not want us to know it's fake.

Jerry Packard said,

July 26, 2023 @ 5:19 am

Marvelous Mark (myl), thanks for posting.

Ross Presser said,

July 26, 2023 @ 8:41 am

@Gregory Kusnick:

So instead of relying on dubious schemes for labeling untrustworthy content, it seems much more effective to focus on labeling trustworthy content with unforgeable evidence of provenance, using the same sort of cryptographic tools we use to verify the authenticity of websites we visit. This puts the incentives where they belong: the producers of reliable content will want us to know it's legit, whereas the producers of unreliable content will generally not want us to know it's fake.

This sounds like "if it doesn't have a verifiable digital signature, showing who wrote it, it's not trustworthy." How is the software that is signing the content going to know whether you wrote it yourself or retyped it from AI output? That's the most trivial attack I can think of — I'm sure there are going to be many more sophisticated ones.

Aardvark Cheeselog said,

July 26, 2023 @ 9:56 am

I am not an actual AI expert, though I have watched that part of computing with interest for decades. I am not an actual cryptographer, though I have more experience than most with the implementation of cryptographic systems. I'm not a mathematician, though I tried to learn some of their core concepts.

I'm leading up to saying that I have intuitions about this idea that text can be watermarked, and my intuition is that it's a nonstarter. It's too easy to edit text, and if the targets have access to the detection tools they'll be able to take their LLM-generated (I refuse to call it "AI-generated") text and tweak it until it passes muster. This will still be so much easier than composing the text with their minds that it will be viewed as a win by cheaters.

Gregory Kusnick said,

July 26, 2023 @ 10:31 am

Ross: The software doesn't need to know whether the content is authentic. What matters is that I know that if it's digitally signed by (say) the New York Times, then I can be confident that the Times vouches for its authenticity.

Of course if I don't trust the Times to be honest with me, I'm still free to treat their content with as much skepticism as I think is warranted — just as we did in the pre-AI era.

Bill Benzon said,

July 27, 2023 @ 9:28 pm

"And as a side note, there should be more discussion about the value of "apocalyptic" warnings as advertising."

I strongly agree. If you want people to pay attention, that's a way to attract it.

Susanac said,

July 30, 2023 @ 8:57 am

Meta's involvement is surprising, given that:

A) they're distributing the code of their LLM

B) if you have the source code, it's trivial to remove the part of the code that does watermarking

Ok, so I can just about imagine something along the lines of Congress saying that, since industry has agreed to this, they're going to pass legislation to make it illegal to not do watermarking, and this will be very very bad news for Meta.