Copyright-safe AI Training

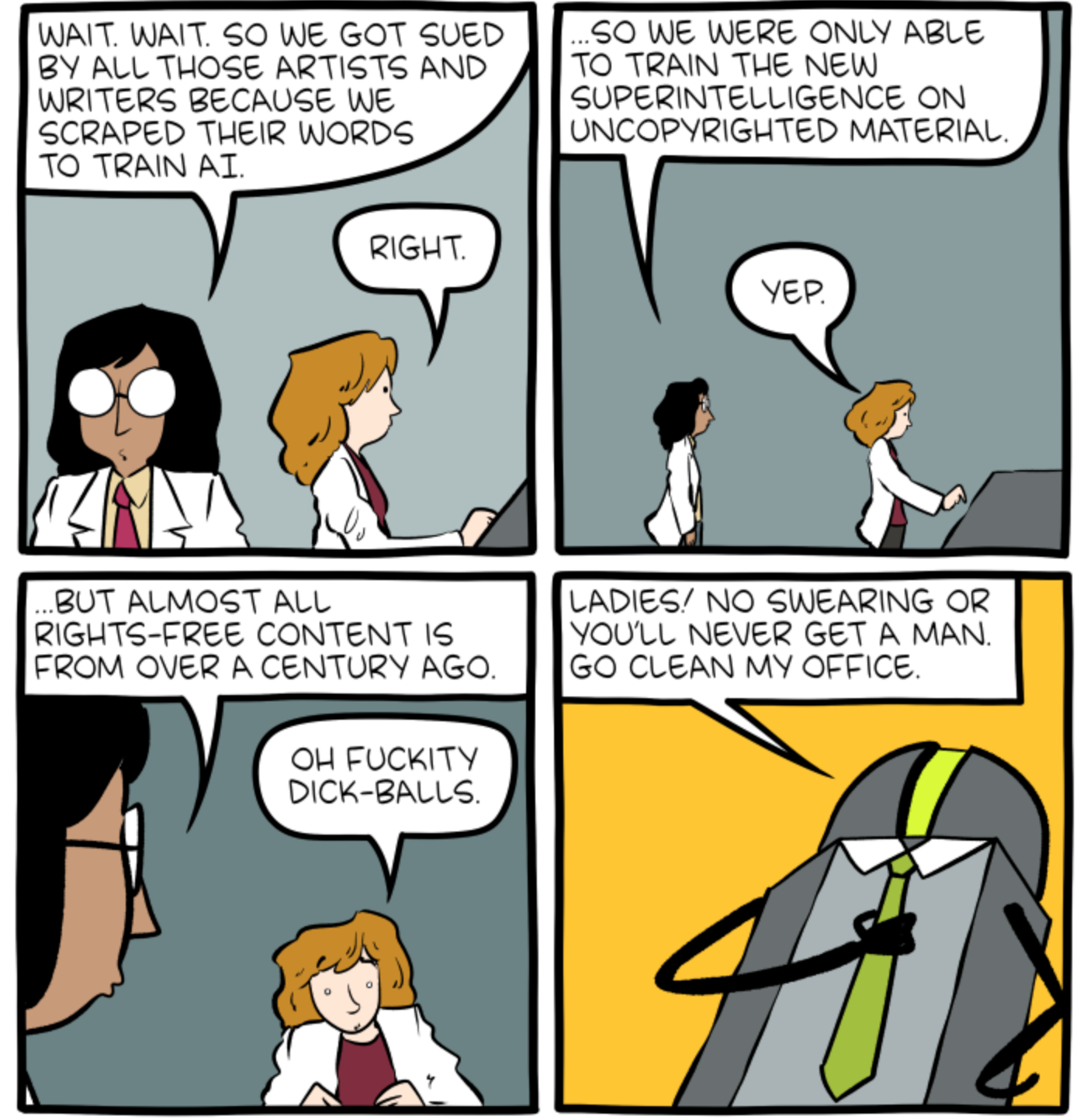

Today's SMBC starts like this:

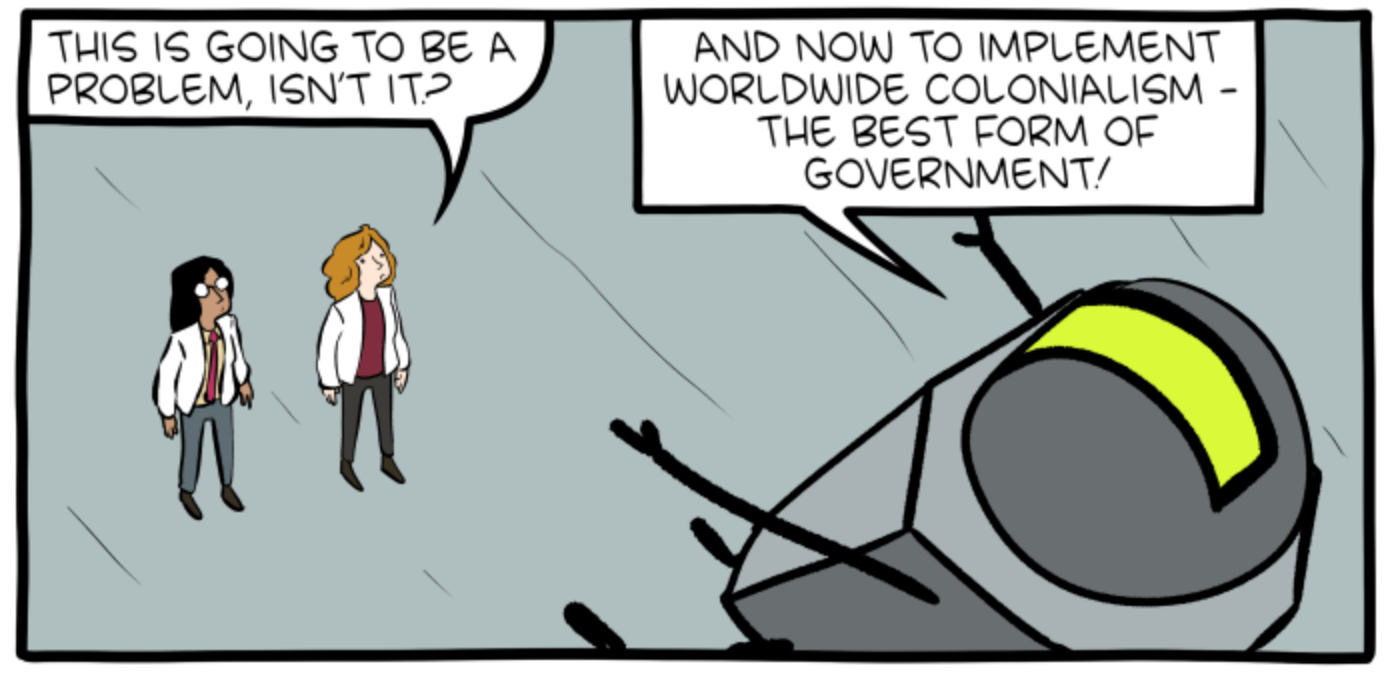

The aftercomic:

Some legal and social background:

Pamela Samuelson, "Generative AI meets copyright", Science 7/12/2023

Dan Milmo, "Sarah Silverman sues OpenAI and Meta claiming AI training infringed copyright", The Guardian 7/10/2023

Sheera Frenkel and Stuart Thompson, "‘Not for Machines to Harvest’: Data Revolts Break Out Against A.I.", The New York Times 7/15/2023

Current U.S. Copyright protection for text now applies to works published since January 1, 1928. The situation in the rest of the world is complicated.

It's worth noting that Wikipedia, along with some other recent work used by current Stochastic Parrots, is available under a "share-alike" license that allows free use:

You are free to:

- Share — copy and redistribute the material in any medium or format

- Adapt — remix, transform, and build upon the material for any purpose, even commercially.

but also requires that:

If you remix, transform, or build upon the material, you must distribute your contributions under the same license as the original.

It's unclear, at least to me, whether a Large Language Model trained on a given body of text is thereby a "derived work" in the legal sense.

Note however that the "terms of use" for most digital content, like these for scribd, (attempt to) impose contractual restrictions that go well beyond copyright.

Chris Barts said,

July 15, 2023 @ 12:39 pm

Nobody knows where copyright law will stand on this, which means people are being very loud about how their side is obviously and unambiguously correct and doubting them makes you both unintelligent and morally suspect. It's the classic debate tactic of loudly denying there is a debate.

Jerry Packard said,

July 15, 2023 @ 2:47 pm

Great post.

Raul said,

July 15, 2023 @ 4:57 pm

About Wikipedia, I'd say, mostly no. The CC licenses have been developed to discourage unreferenced use in single derivative works, not developing whole systems by data mining and machine learning. An AI is not a derivative work itself, and while it may create some occasionally, most fuss is not about it copying&pasting large recognizable bits in its own creations, so that line of attack would probably not apply for anyone, least of all for Wikipedia. There are some jurisdictions which have some interesting database rights for that purpose but to my knowledge, not the U.S., where the legal system has been mostly beneficial for data mining startups etc. There was an interesting analysis in Techdirt about the current attack vector which will probably be somewhat suboptimal, largely due to one of the main lawyers being an expert in class action, not copyright.

Garrett Wollman said,

July 15, 2023 @ 10:04 pm

I've pointed out before that, if you take the point of view of the "AI maximalists", what they are doing is conceptually no different from teenage me going to the public library and taking out as many books as they will let me, every week for four school years. Or, to use another example, a generative image model is in some sense indistinguishable from an art student who carefully studies many artists and genres over the course of their education, to the extent that they can convincingly paint on demand in those styles. The argument ultimately boils down to "brains are qualitatively different" on one side, and "no they're just slower" on the side of the maximalists. (Not sure if either Hinton or Le Cun would cop to the "maximalist" position I'm sketching but both have said things that make me think they are on that side.)

Seth said,

July 15, 2023 @ 11:21 pm

Disclaimer: I'm not a lawyer, but I have read a large amount about copyright law.

My nonlawyer but educated opinion: This is a slaw-dunk in favor of the AI side under the "Google Books" case reasoning.

https://law.justia.com/cases/federal/appellate-courts/ca2/13-4829/13-4829-2015-10-16.html

"Plaintiffs, authors of published books under copyright, filed suit against Google for copyright infringement. Google, acting without permission of rights holders, has made digital copies of tens of millions of books, including plaintiffs', through its Library Project and its Google books project. The district court concluded that Google's actions constituted fair use under 17 U.S.C. 107."

If anything, the AI applications are even stronger in terms of being "transformative" under the fair use aspect of copyright law.

As I see it, this lawsuit is being pursued purely as a matter of trying to make the cost of a settlement less than the huge expense of going through the courts to win on the law.

@ Chris Barts – You're correct that nobody can ever know for certain where a legal case will come out. But there's extensive prior case law which seems to me strongly in favor of the AI side, and that's not easily countered by a general idea that it's wrong for big companies to profit like this (a viewpoint for which I have great moral sympathy, but that sadly rarely works well in court).

J.W. Brewer said,

July 16, 2023 @ 1:27 pm

The idea that as long as you're being "transformative" enough you are by definition engaging in fair use and thus cannot be liable for infringement is basically the idea that lost resoundingly in the U.S. Supreme Court earlier this year in Andy Warhol Fdn. for the Visual Arts v. Goldsmith. That doesn't necessarily mean the prior Google Books outcome (not binding precedent outside the three states in the relevant circuit) is inconsistent with the ruling against the Warhol interests, only that you need to be careful of reading it to stand for some broad abstract principle that's unmoored from its actual facts.

My own sense is that left to their own devices some of this AI software will (if exposed to a substantial volume of copyrighted material) yield at least some output that is infringing and also some output (possibly/probably considerably more) that is non-infringing. I don't know how to estimate the likely ratio, and know even less how you could tweak the software to minimize the possibility of generation of infringing output — if you think that avoiding exposure to copyrighted input is too blunt or costly a safeguard.

Jim White said,

July 16, 2023 @ 4:02 pm

As a couple comments above say, the copyright situation for AI models in the US is well-established thanks to 20+ years of litigation. Copyrights afforded to content creators in exchange for publishing their works do *not* allow them to restrict how others process it, they only restrict what they can publish themselves. "Terms of Service" notices on or linked in public (i.e. not behind a login/paywall) web pages *do not* restrict the rights of readers to copy and interpret/process the published content. IOW the Silverman et al claim to the effect "we didn't give permission" has zero chance of winning. And in case anyone is unclear, copyright only applies to the precise expression and not in any way the idea(s) of the work.

Litigation has established the precise number of words that may be republished verbatim from public web pages although I'm forgetting the number. It is around 150 words and is what determines the length of the "snippets" published on Google (and any other US web search engine) search results under Fair Use.

The only interesting new legal decisions will come down on exactly how the rules of Fair Use apply to automatically generated paraphrases and other derived works. This has of course been done many times for such work by humans but the facts are not obviously the same nor in such volume so the resulting legal interpretation from litigation is worth keeping an eye out for.

Rachael said,

July 17, 2023 @ 3:39 am

I'm intrigued by Seth's "slaw-dunk". It's presumably not a typo, as W and M are at diagonally opposite corners of the keyboard, and similarly presumably not a mishearing or eggcorn.

This isn't meant as criticism or mockery of Seth, but as a linguist's fascination with the different ways nonstandard forms arise.

Did you learn the term by misreading someone's handwriting?

Bob Ladd said,

July 17, 2023 @ 6:22 am

@Rachael – I wondered the same thing but decided it probably was a typo anyway. Ever since I learned how to type properly (sometime in the 1960s) I have had an inexplicable tendency to occasionally swap O and A, which are about as far apart on the standard English QWERTY keyboard as M and W.

Seth said,

July 17, 2023 @ 2:37 pm

@ Rachel – No offense taken, and I'm as intrigued as you are. It was indeed a typo, and until you pointed it out, I had no idea I'd done it! This is more into the neurological part of linguistics. As far as I can reconstruct, it wasn't a typo of the standard sort of intending to strike one key, but getting a nearby key. Rather, it seems more a cognitive construction error, where I wanted "m", but somehow my brain generated the conceptually adjacent shape of "w", and I typed that. And I didn't notice it when looking at the text on the screen, because of the similar shape. I do have a problem with proofreading in that, since I know what I intended, I don't necessarily see that I didn't type correctly. Spell-check is a godsend for me. But since "slaw" is also a word, it didn't get flagged.

KeithB said,

July 18, 2023 @ 12:37 pm

But is the AI doing anything differently than I do when I read a copyrighted work and learn from it?