Detecting LLM-created essays?


As I observed in "Alexa down, ChatGPT up?" (12/8/2022), there's reason to fear that LLMs ("Large Language Models") like ChatGPT will force major changes in writing education, by offering a cheap and easy way to generate essay assignments. A small sample of the extensive published discussion:

Stephen Marche, "The College Essay is Dead", The Atlantic 12/6/2022

Daniel Lametti, "A.I. Could Be Great for College Essays", Slate 12/7/2022

Daniel Herman, "ChatGPT will end High School English", The Atlantic 12/9/2022

Beth McMurtrie, "AI and the Future of Undergraduate Writing: Teaching experts are concerned, but not for the reasons you think", The Chronicle of Higher Education 12/13/2022

Of course, various other forms of cheating have been common for hundreds of years, starting with simple plagiarism and ghost-written submissions. The internet has made it easier to find texts to copy or ghostwriters to hire — but modern technology has also brought us plagiarism-detection systems, which catch at least the simplest cases. Will we see effective LLM-detection software?

There are already (purported) examples — thus Sun Kim, "How to Detect OpenAI's ChatGPT Output", Medium 12/11/2022:

The output (e.g., essays) provided by ChatGPT is so good, if I was a student, I would be using ChatGPT to complete most of my school assignment with minor revisions.

[…]

The good news is that there is a tool from Hugging Face called GPT-2 Output Detector Demo (https://huggingface.co/openai-detector) that was developed in conjunction with OpenAI (see details here => https://huggingface.co/roberta-base-openai-detector).

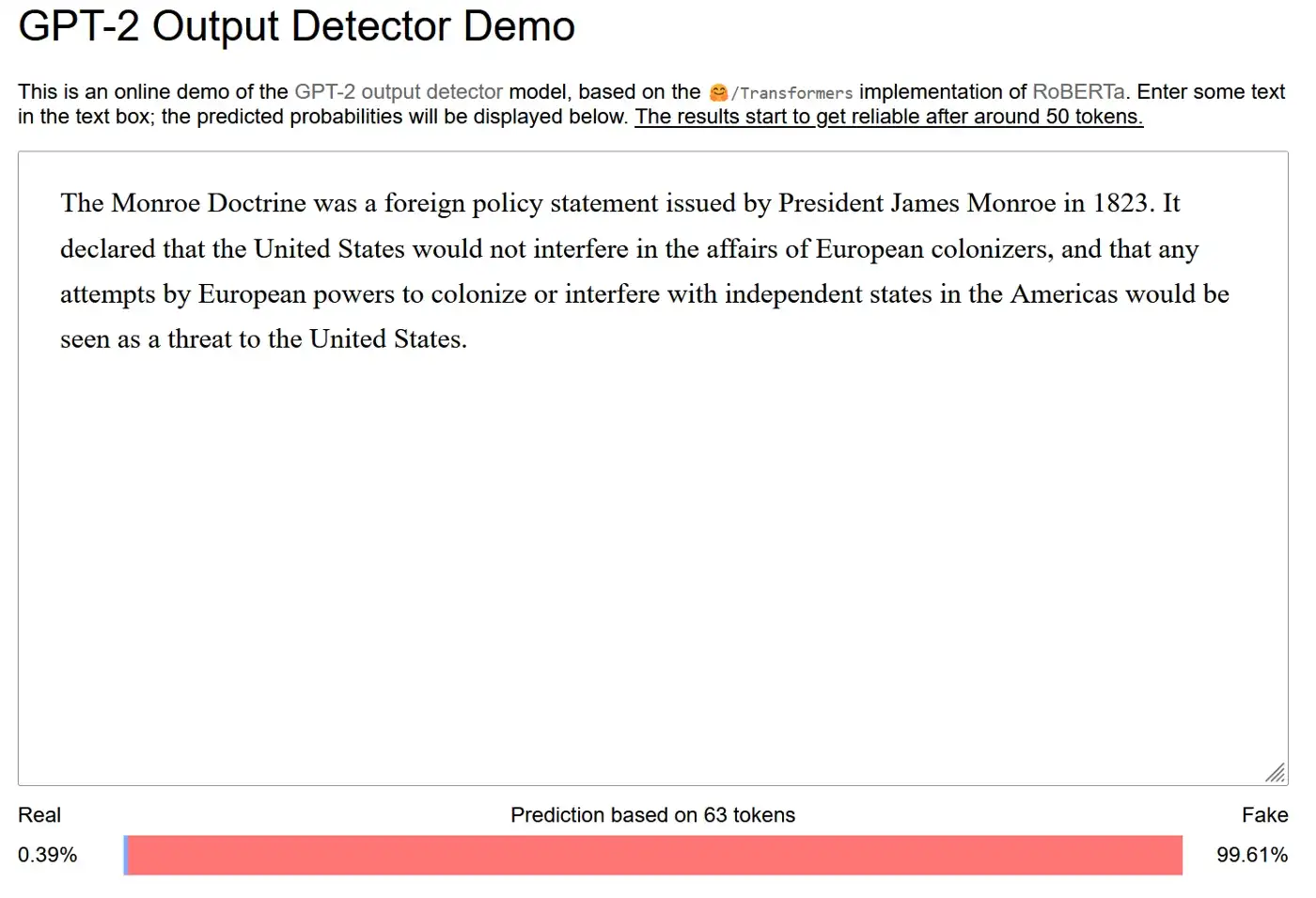

Kim tests that detector on a (short) ChatGPT-generated "essay", with impressive results:

But one example doesn't tell us much — and the "GPT-2" part of the title should give us pause, since ChatGPT is based on a later and bigger system, GPT-3.5.

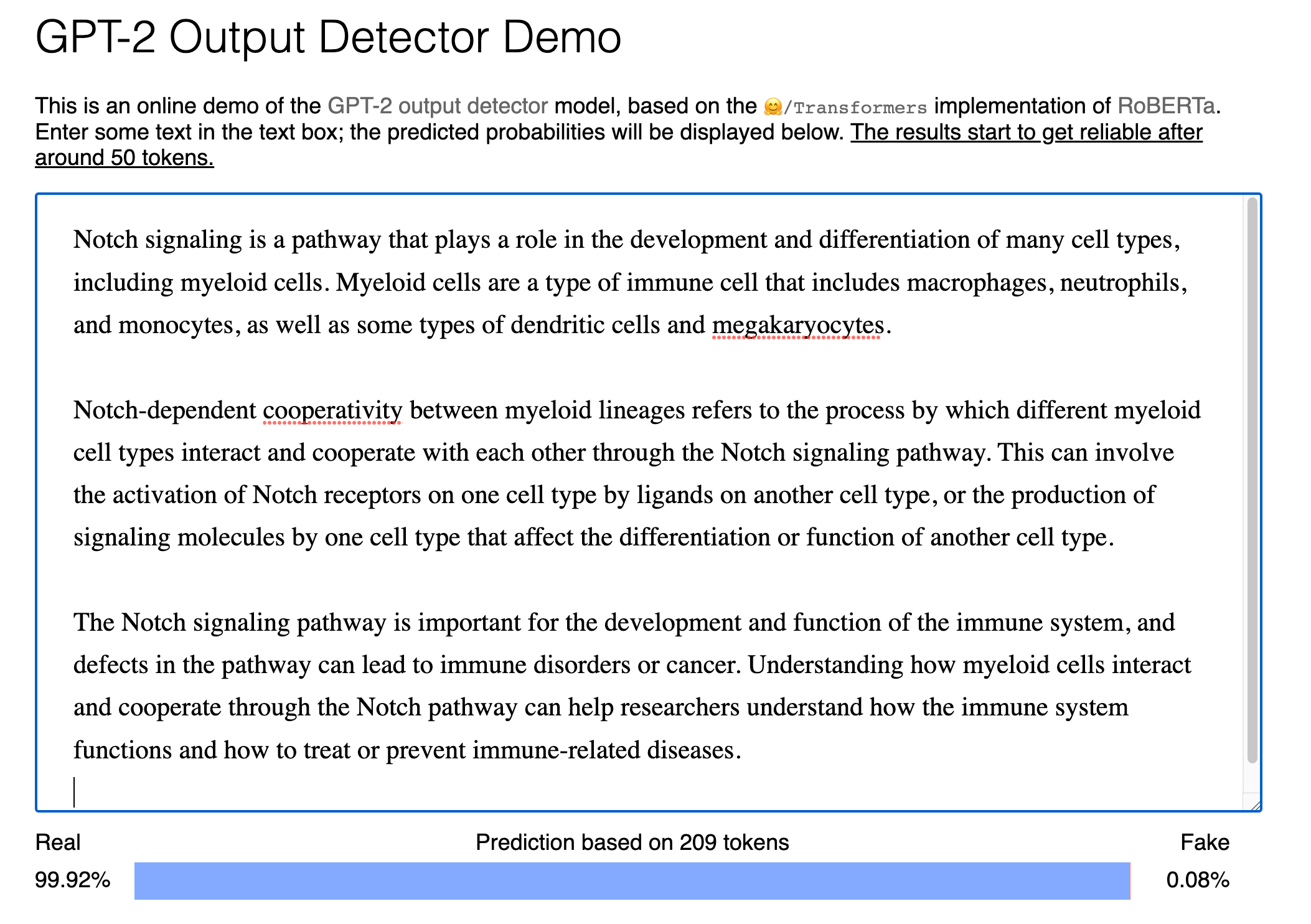

Here's one more test case, where the system fails badly. A few days ago, I came across a paper with the title "Notch-dependent cooperativity between myeloid lineages promotes Langerhans cell histiocytosis pathology", and so I asked ChatGPT

What is "Notch-dependent cooperativity between myeloid lineages"?

…and got the answer:

Notch signaling is a pathway that plays a role in the development and differentiation of many cell types, including myeloid cells. Myeloid cells are a type of immune cell that includes macrophages, neutrophils, and monocytes, as well as some types of dendritic cells and megakaryocytes.

Notch-dependent cooperativity between myeloid lineages refers to the process by which different myeloid cell types interact and cooperate with each other through the Notch signaling pathway. This can involve the activation of Notch receptors on one cell type by ligands on another cell type, or the production of signaling molecules by one cell type that affect the differentiation or function of another cell type.

The Notch signaling pathway is important for the development and function of the immune system, and defects in the pathway can lead to immune disorders or cancer. Understanding how myeloid cells interact and cooperate through the Notch pathway can help researchers understand how the immune system functions and how to treat or prevent immune-related diseases.

Result from the detector? Not good:

Perhaps we'll see another version, trained with the latest LLM input, that works better — though at best that would lead to a complex creation/detection arms race.

Some other ideas can be found in Melissa Heikkilä, "How to spot AI-generated text", MIT Technology Review 12/19/2022, which focuses not on AI ghost-writing for students, but rather on the potential for broader misinformation pollution:

In an already polarized, politically fraught online world, these AI tools could further distort the information we consume. If they are rolled out into the real world in real products, the consequences could be devastating.

We’re in desperate need of ways to differentiate between human- and AI-written text in order to counter potential misuses of the technology, says Irene Solaiman, policy director at AI startup Hugging Face, who used to be an AI researcher at OpenAI and studied AI output detection for the release of GPT-3’s predecessor GPT-2.

[…]

There are various ways researchers have tried to detect AI-generated text. One common method is to use software to analyze different features of the text—for example, how fluently it reads, how frequently certain words appear, or whether there are patterns in punctuation or sentence length.

“If you have enough text, a really easy cue is the word ‘the’ occurs too many times,” says Daphne Ippolito, a senior research scientist at Google Brain, the company’s research unit for deep learning.

Because large language models work by predicting the next word in a sentence, they are more likely to use common words like “the,” “it,” or “is” instead of wonky, rare words. This is exactly the kind of text that automated detector systems are good at picking up, Ippolito and a team of researchers at Google found in research they published in 2019.

But Ippolito’s study also showed something interesting: the human participants tended to think this kind of “clean” text looked better and contained fewer mistakes, and thus that it must have been written by a person.

“A typo in the text is actually a really good indicator that it was human written,” she adds.

Those seem like good ideas. But if the simple "the"-frequency cue ever worked reliably, it doesn't now.

For example, the ChatGPT output from Ben Jacobs, "That AI Chatbot Wrote a Pretty Decent New York Article" (New York Magazine 12/5/2022), does have 20 instances of "the" in 261 words, or 7.7%, which is towards the high side of the frequencies in English text. But Ben Jacobs's most recent real New York Magazine article ("GOP Leadership Fight in Congress Shows a Party of Warlords", 11/17/2022) has 22 instances of "the" in 279 words, or 7.9%.

And ChatGPT's answer to my question about "Notch-dependent cooperativity between myeloid lineages" has 12 instances of "the" in 164 words, or 7.3%. But Wikipedia's section on Notch signaling pathway mechanisms has 24 instances of "the" in 280 words, or 8.6%.
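For anyone who wants to repeat this kind of count on other texts, here is a minimal sketch in Python. It assumes that words are whitespace-separated tokens with surrounding ASCII punctuation stripped, compared case-insensitively; the function name `the_frequency` is made up for illustration, and a different way of delimiting words will shift the totals slightly.

```python
import string


def the_frequency(text: str) -> tuple[int, int, float]:
    """Count "the" in text; return (hits, total_words, percent)."""
    # Assumption: words are whitespace-separated tokens with surrounding
    # ASCII punctuation stripped, compared case-insensitively.
    words = [w.strip(string.punctuation).lower() for w in text.split()]
    words = [w for w in words if w]  # drop tokens that were only punctuation
    if not words:
        raise ValueError("text contains no words")
    hits = words.count("the")
    return hits, len(words), 100.0 * hits / len(words)


# One sentence from the ChatGPT answer quoted earlier in the post.
sample = (
    "The Notch signaling pathway is important for the development and "
    "function of the immune system, and defects in the pathway can lead "
    "to immune disorders or cancer."
)
hits, total, percent = the_frequency(sample)
print(f"{hits} of {total} words ({percent:.1f}%)")
```

A single sentence is far too short to say anything about authorship, of course; the point is only to make the counting procedure explicit.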

Of course the 2019 Google paper tests more serious detection methods than simple "the" frequency, and proposes a variety of more sophisticated directions of research, so we should stay tuned and hope for some open-access methods to test.
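To make the simpler cues concrete, the surface features mentioned in the Heikkilä article (word frequency, punctuation patterns, sentence length) could be computed roughly as follows. This is only an illustrative sketch, not the method of the 2019 Google paper: the function name `surface_features`, the three-word list, and the crude sentence splitter are all assumptions made for the example.

```python
import re
import string

# Assumption: just the three example words named in the quoted article.
FUNCTION_WORDS = {"the", "it", "is"}


def surface_features(text: str) -> dict[str, float]:
    """Compute a few crude stylistic features of a non-empty text."""
    # Crude sentence split: break on whitespace that follows ., ! or ?
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = [w.strip(string.punctuation).lower() for w in text.split()]
    words = [w for w in words if w]
    if not words:
        raise ValueError("text contains no words")
    punctuation_marks = sum(1 for ch in text if ch in string.punctuation)
    return {
        "mean_sentence_length": len(words) / len(sentences),
        "function_word_share": sum(w in FUNCTION_WORDS for w in words) / len(words),
        "punctuation_per_word": punctuation_marks / len(words),
    }


features = surface_features("It is the end. The cat sat!")
for name, value in features.items():
    print(f"{name}: {value:.3f}")
```

Numbers like these would at most be inputs to a trained classifier; on their own they are no more reliable than the simple frequency comparison above.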

It'll be interesting to learn how students actually use LLMs in their work — and how writing courses adapt to the situation.

With respect to the issue of disinformation, it seems to me that LLMs are far from the biggest problem. And the publications of most public figures are already ghostwritten anyhow, so there's no (additional) ethical issue there.

For some earlier discussions of authorship and plagiarism, see e.g.

"In defense of Kaavya Viswanathan", 4/25/2006

"Unwritten rules and uncreated consciences", 5/4/2006

"Text Laundering", 1/23/2007

"Plagiarism and copyright", 6/14/2007

"Citation Plagiarism?", 6/15/2007

"Citation Plagiarism once Again", 4/23/2008

"The fine line between phrasal allusion and plagiarism", 6/4/2008

"Plagiarism and restrictions on delegated agency", 10/1/2008

"The writer I hired was a plagiarist!", 7/13/2010

"Is 'plagiarism' in a judicial decision wrong?", 4/14/2011

"Visualization of Plagiarism", 5/7/2011

"John McIntyre on varieties of plagiarism", 3/30/2013

"Rand Paul's (staffers') plagiarism", 11/7/2013

"SOTU plagiarism?", 1/30/2014

"'Plagiarism' vs. 'ghostwriting' again", 2/14/2014

"Intersecting hypocrisies", 7/20/2016

"The extent of Melania's plagiarism", 7/20/2016

"Tortured phrases: Degrading the flag to clamor proportion", 3/22/2022

And there's a much longer list of posts on apparently authoritative human-created disinformation — more on that later.

Carl said,

December 20, 2022 @ 11:41 am

ISTM the good news is that it would be pretty easy to generate training data to train an AI to detect AI generated text. Just get known good stories and then generate a bunch of known fakes and let the detector use those as its training data. It’s definitely feasible, but who knows if it will actually work.

Aardvark Cheeselog said,

December 20, 2022 @ 12:50 pm

> Will we see effective LLM-detection software?

My instinct is "no, that would be an AI-complete problem right there."

Colin Danby said,

December 20, 2022 @ 2:32 pm

I made a ChatGPT account and tried out my writing prompts for next quarter and I'm really not worried.

The example from the Stephen Marche _Atlantic_ article is asking students to write "an essay on learning styles." This is a bad assignment. Most students will respond with platitudinous generalities and wikipedia rehashes, with or without AI help.

ChatGPT will not generate plausible work for anything specific or challenging because the very nature of the AI is that it produces lowest-common-denominator conventional wisdom. Pabulum. To flip that over, as a quick way to get tuned in to the conventional wisdom on a topic that's new to you it might be useful, and when I tried out some of my assignments which bridge the ideas of two writers, it sometimes came up with an interesting connection, though it couldn't do much with it.

There is another property which is a reflection of the AI's design. It is rather good at "write this in the style of that" tasks because it knows how to string words together in different styles. Genre it gets! But if you ask, say, how would Socrates question Paulo Freire, it has no idea. It will have Socrates politely ask Freire for his ideas and kind of leave it at that.

I would also humbly suggest that making assignments more interesting is a better path than an arms race between plagiarism-generators and plagiarism-detectors.

An exception to this argument might be some kinds of composition instruction where the word-stringing is what you are teaching. That connects to the question of computer-assisted writing which is getting more common, no? A lot of my students routinely use things like "grammarly," and you may have experienced "predictive text" in email or messaging apps. I won't use it but I'm old; it may become part of how most people write.

Mai Kuha said,

December 20, 2022 @ 3:28 pm

I'm just desperately hoping that all this also means that one day soon Hollywood will find it cheaper to film 90 minutes of good dialogue than gunfights, explosions, and car chases.

Mai Kuha said,

December 20, 2022 @ 3:46 pm

I enjoyed this screenplay a *lot* until it concluded suddenly…

me: Write a screenplay about a young woman with a PhD in linguistics who tries to document a dying language in South America.

ChatGPT:

INT. LINGUISTICS LAB – DAY

We see a young woman, ANNA, sitting at her desk, surrounded by books and papers. She is typing furiously on her laptop.

ANNA: (to herself) I just need to finish this grant proposal. Then I can finally go to South America and document the Yucatec Maya language.

Anna's advisor, PROFESSOR WILLIAMS, enters the room.

PROFESSOR WILLIAMS: Anna, have you finished that grant proposal yet? The deadline is tomorrow.

ANNA: (frustrated) Almost, Professor Williams. I just need to finish this section on the linguistic significance of Yucatec Maya.

PROFESSOR WILLIAMS: (nodding) I know how important this project is to you, Anna. The Yucatec Maya language is dying out, and documenting it will be a valuable contribution to the field. But don't forget to focus on the grant committee's priorities as well. They want to see concrete plans for fieldwork and data analysis.

ANNA: (determined) I understand, Professor Williams. I'll make sure to include all of that in the proposal.

PROFESSOR WILLIAMS: (smiling) I have no doubt you will. Good luck with the grant.

Anna turns back to her laptop and continues typing.

INT. SOUTH AMERICAN JUNGLE – DAY

Anna is trudging through the dense jungle, a backpack slung over her shoulder. She is accompanied by a local guide, JUAN.

ANNA: (panting) How much further, Juan?

JUAN: (pointing) Not far, just over this hill.

Anna and Juan crest the hill and come upon a small village nestled in the jungle. Anna's eyes light up as she sees a group of people speaking in the Yucatec Maya language.

ANNA: (excited) This is it, Juan! This is the village where the Yucatec Maya language is still spoken.

Juan nods and leads Anna to a small hut.

INT. HUT – DAY

Anna is seated at a table with a group of Yucatec Maya elders. She is taking notes and recording their speech on her laptop.

ELDER 1: (speaking in Yucatec Maya) The Yucatec Maya language is a beautiful and ancient language. It is the language of our ancestors, and it must not be forgotten.

Anna nods and smiles.

ANNA: (to the elders) Thank you for sharing your language with me. I will do everything I can to document it and preserve it for future generations.

The elders nod and smile back at Anna.

INT. LINGUISTICS LAB – DAY

Anna is back at her desk, surrounded by books and papers. She is typing on her laptop and has a look of determination on her face.

ANNA: (to herself) I've collected so much data on the Yucatec Maya language. Now it's time to analyze it and write up my findings.

Anna works late into the night, pouring over her notes and data.

INT. UNIVERSITY AUDITORIUM – DAY

Anna is standing on stage, giving a presentation to a packed auditorium.

ANNA: (to the audience) The Yucatec Maya language is a fascinating and complex language, with a rich history and cultural significance. By documenting it, we can ensure that it is not

Rick Rubenstein said,

December 20, 2022 @ 3:49 pm

@Colin Danby: Unfortunately, I think "We just need all teachers to be good at their jobs" is an even more improbable ask than "We need to detect and prevent AI-generated student essays."

Frans said,

December 20, 2022 @ 3:59 pm

@Mai Type "continue".

Gregory Kusnick said,

December 20, 2022 @ 4:13 pm

I now find myself wondering whether ChatGPT doesn't know the difference between "poring" and "pouring", or does know the difference but thinks screenwriters don't.

Mai Kuha said,

December 20, 2022 @ 4:50 pm

@Frans thanks! Actually, the screenplay is getting better. I'm impressed that ChatGPT can take my suggestions and revise accordingly. I said what if the informant tells the linguists a creation myth? and ChatGPT made up a deep-sounding creation myth about a jaguar. Then I suggested that while leaving the jungle the linguists would, in a mystical turn of events, run into that very jaguar, and ChatGPT wove that in. I start to see the potential in this as a tool.

Chester Draws said,

December 20, 2022 @ 5:03 pm

> I would also humbly suggest that making assignments more interesting is a better path than an arms race between plagiarism-generators and plagiarism-detectors.

Even better, they could expect their students to do more than merely regurgitate material.

I did my science degree nearly 40 years ago, so this is not a whinge about modern teaching. I had a few spare courses, so I did some humanities papers.

My first essays got very poor grades. This surprised me, as I was an A student. Then I discovered that I was actually trying to analyse the questions and write essays that gave my thoughts on the matter. Once I stopped doing that and merely regurgitated the professor's lectures I went back to being an A student.

That's why I don't think AI bots will take over the internet. They merely write, as you say, lowest common denominator material, and it is boring to read and adds nothing of value that one good text won't have. If they could "read" books or "watch" movies, then sure they would find a market, but they can only take what others have written.

Unique content is the key to successful websites and AI won't generate that. I don't, for example, think Language Log is in any danger of being usurped by a bot.

However, college essays are a different beast, unless in the last few decades they have started to require individuals to take less common but defensible positions.

Rob P. said,

December 20, 2022 @ 5:17 pm

@Mai Kuha – Mistaking a Mayan language for one that might be spoken in South America seems like exactly the kind of dopey mistake a human would make.

Philip Anderson said,

December 20, 2022 @ 5:25 pm

@Gregory Kusnick

Or was it a deliberate typo inserted to fool AI-detecting software into thinking the author was human?

Brian said,

December 21, 2022 @ 6:36 am

@Mai Kuha – Lt. Commander Data, the AI character from Star Trek TNG, could never use contractions. I note the ChatGPT screenplay has a notable lack of contractions.

"It is the language of our ancestors, and it must not be forgotten." ==> "It's the language of our ancestors, and it mustn't be forgotten."

"I will do everything I can to document it and preserve it for future generations." ==> "I'll do everything I can…"

bks said,

December 21, 2022 @ 8:34 am

Mathematica has been better than most college undergraduates at solving math homework problems since c. 1990. Sitting an exam with paper and pen is still the acid test.

cameron said,

December 21, 2022 @ 12:04 pm

as bks said, the math analogy works. if you don't want the students copy-pasting from online chatbots, just make them fill out blue books in person.

of course, then you have to grade hand-written texts . . . much suffering all around

Mai Kuha said,

December 21, 2022 @ 3:21 pm

@Brian ChatGPT seems to have some sense of register variation. I asked for a domestic scene, and the dialogue among family members is full of contractions!

That thing with Data always drove me nuts. Data handles conversational implicatures and subordinate clauses and whatnot in nanoseconds, but contractions are too complex??