Zoom time


I'm involved with several projects that analyze recordings from e-interviews conducted using systems like Zoom, Bluejeans, and WebEx. Some of our analysis techniques rely on timing information, and so it's natural to wonder how much distortion might be introduced by those systems' encoding, transmission, and decoding processes.

Why might timing in particular be distorted? Because any internet-based audio or video streaming system encodes the signal at the source into a series of fairly small packets, sends them individually by diverse routes to the destination, and then assembles them again at the end.

If the transmission is one-way, then the system can introduce a delay that's long enough to ensure that all the diversely-routed packets get to the destination in time to be reassembled in the proper order — maybe a couple of seconds of buffering. But for a conversational system, that kind of latency disrupts communication, and so the buffering delays used by broadcasters and streaming services are not possible. As a result, there may be missing packets at decoding time, and the system has to deal with that by skipping, repeating, or interpolating (the signal derived from) packets, or by just freezing up for a while.
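To make the tradeoff concrete, here is a toy sketch. It does not describe what Zoom or any other system actually does. It assumes that each packet must be played a fixed `buffer_ms` after it was sent, so a packet is "late" whenever its network delay exceeds that budget. The function name `late_packets` and the delay values are invented for illustration.

```python
def late_packets(arrival_delays_ms, buffer_ms):
    """Return indices of packets that miss their playout deadline.

    Assumption: packet i is due for playback buffer_ms after it was sent,
    so it is late whenever its network delay exceeds buffer_ms.
    """
    return [i for i, d in enumerate(arrival_delays_ms) if d > buffer_ms]


# Hypothetical one-way network delays (ms) for ten consecutive packets.
delays = [40, 45, 38, 120, 42, 41, 300, 39, 44, 95]

# A conversational budget, a larger one, and a broadcast-style budget.
for buffer_ms in (60, 150, 2000):
    late = late_packets(delays, buffer_ms)
    print(f"buffer {buffer_ms:>4} ms: {len(late)} late packet(s) at {late}")
```

With these made-up numbers, a two-second buffer loses nothing, while the short buffer that a conversation needs leaves several packets to be skipped, repeated, or interpolated.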

It's not clear (at least to me) how much of this happens when, or how to monitor it. (Though it's easy to see that the video signal in such conversations is often coarsely sampled or even "frozen", and obvious audio glitches sometimes occur as well.) But the results of a simple test suggest that more subtle time distortion is sometimes a problem for the audio channel as well.

The simple test is to use a metronomic "click track", playing it on one end and recording it at the other end (or in the middle). Yesterday, Chris and Caitlin Cieri tried a version of this test, using a ten-minute signal from the Wikipedia "click track" article. This signal is suitably regular, as this sample indicates:

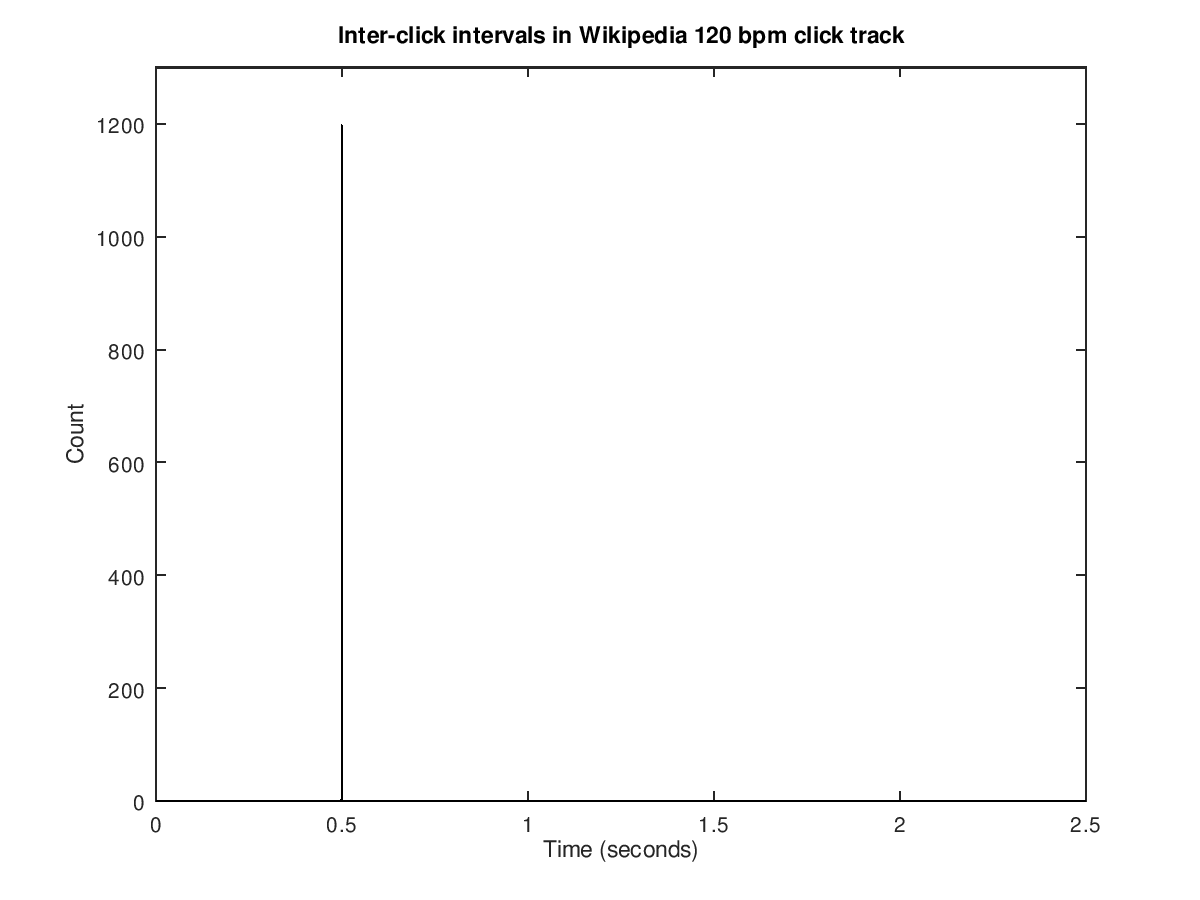

When I apply a simple click-detection program to this signal, the resulting histogram of inter-click intervals is suitably exact. The click track is at metronome marking 120, equivalent to 120 clicks per minute, or two per second. And all 1200 clicks occur exactly half a second apart:

Caitlin and Chris recorded the "conversation" (in this case entirely one-sided) via Zoom, using Zoom's cloud recording capability (which in effect records in the middle, so to speak, on a server that's mediating among the participants). The results are sometimes rather erratic, as these samples indicate:

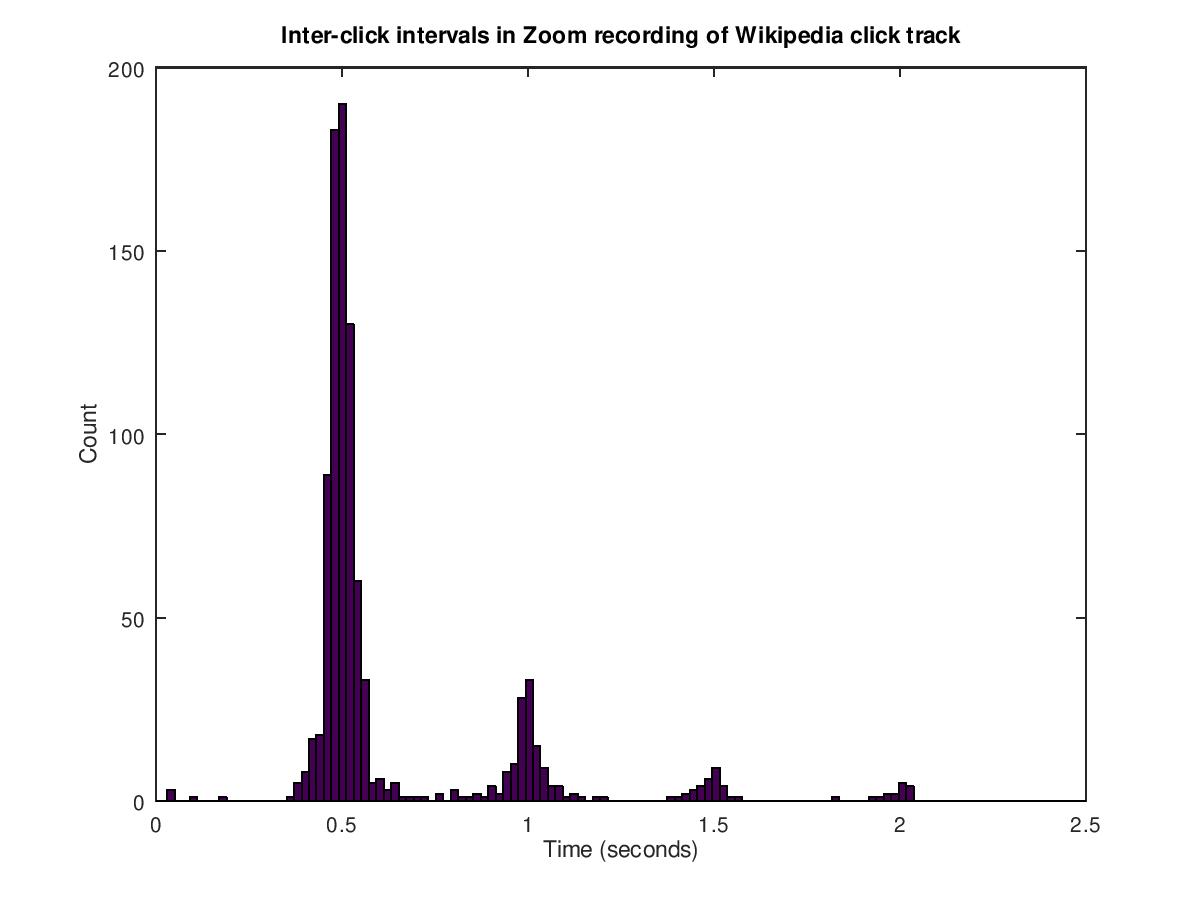

And the histogram of inter-click intervals shows it:

This is just one sample, using one system (Zoom 5.0.2), one network configuration and traffic condition at one time, and one recording method, for a one-way audio stream of a particular kind. And it's possible that Zoom's encoding and decoding system is doing something special with (what it reckons to be) silence, prolonging or curtailing silences in order to even out transmission latencies. So we'll try some additional tests, and let you know what we find.

(And you can try it yourself — someone on one end of an e-conversation can play the Wikipedia click track, and someone on the other end can record it. I'm happy to share my click-interval code, or you can write your own…)
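For anyone who wants to write their own, here is a minimal sketch of one way to measure inter-click intervals. It is not the program used for the histograms above. It assumes clicks are much louder than the background, so a plain amplitude threshold with a short refractory period is enough to find them. The names `detect_clicks`, `threshold`, and `refractory_s` are illustrative, and the input is a synthetic click track rather than a real recording (loading a WAV file, for example with Python's standard `wave` module, is left out).

```python
from collections import Counter


def detect_clicks(samples, rate, threshold=0.5, refractory_s=0.1):
    """Return click onset times in seconds, found by amplitude thresholding.

    After each detection, samples are ignored for refractory_s seconds so
    that one click is not counted several times.
    """
    onsets = []
    hold = int(refractory_s * rate)
    next_allowed = 0
    for i, x in enumerate(samples):
        if i >= next_allowed and abs(x) >= threshold:
            onsets.append(i / rate)
            next_allowed = i + hold
    return onsets


def inter_click_intervals(onsets):
    """Differences between successive onset times."""
    return [b - a for a, b in zip(onsets, onsets[1:])]


# Synthetic test signal: 10 s at 8 kHz with a 5 ms click every 0.5 s.
rate = 8000
samples = [0.0] * (rate * 10)
for k in range(20):
    start = k * rate // 2
    for j in range(40):
        samples[start + j] = 1.0 if j % 2 == 0 else -1.0

onsets = detect_clicks(samples, rate)
intervals = inter_click_intervals(onsets)

# Histogram with 10 ms bins, keyed by integer bin index.
bin_s = 0.01
counts = Counter(round(iv / bin_s) for iv in intervals)
for b in sorted(counts):
    print(f"{b * bin_s:.2f} s: {counts[b]}")
```

A real recording will need a threshold tuned to its level, and probably some smoothing of the signal before thresholding.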

Aside from our concern about timing cues in interview analysis, audio time distortions of this kind may be responsible for some of what people experience as "Zoom fatigue".

Robot Therapist said,

May 15, 2020 @ 5:54 am

"it's possible that Zoom's encoding and decoding system is doing something special with (what it reckons to be) silence, prolonging or curtailing silences in order to even out transmission latencies."

I'm interested in what Zoom does. It seems to try quite hard, but not always successfully, to work out who is the "speaker", but doesn't entirely mute sounds from others, those little "yeah"s and "hmm"s that people drop in. There can sometimes be some quite funny effects.

Mark Metcalf said,

May 15, 2020 @ 6:28 am

Recent article in the WSJ about the challenges faced by virtual choruses and virtual orchestras using Zoom to facilitate their endeavors.

A Symphony of Complication and Tenacity

Online classical-music performances are increasing, but piecing together an orchestra digitally has many difficulties.

https://www.wsj.com/articles/a-symphony-of-complication-and-tenacity-11587812402 (possibly behind a paywall)

Gregory Kusnick said,

May 15, 2020 @ 10:30 am

Presumably the smaller peaks at 1, 1.5, and 2 seconds count instances of dropped packets (respectively one, two, and three consecutive clicks lost).

[(myl) Yes, exactly.]
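The arithmetic behind that reading can be sketched as follows, assuming the nominal click period of 0.5 seconds and assuming that a long interval reflects only lost clicks, with no stretching of the silence. The helper name `clicks_lost` is hypothetical.

```python
def clicks_lost(interval_s, period_s=0.5):
    """Estimate how many consecutive clicks are missing inside one interval.

    An interval of n periods between two detected clicks implies that
    n - 1 clicks in between were not detected.
    """
    return max(0, round(interval_s / period_s) - 1)


for interval in (0.5, 1.0, 1.5, 2.0):
    print(f"{interval:.1f} s -> {clicks_lost(interval)} click(s) lost")
```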

Tobias said,

May 15, 2020 @ 4:27 pm

At least WebRTC-based systems (e.g. Bluejeans, but not Zoom) use NetEQ to smoothly piece things together.

https://webrtc.googlesource.com/src/+/refs/heads/master/modules/audio_coding/neteq/

> To conceal the effects of packet loss, WebRTC’s NetEQ component uses signal processing methods, which analyze the speech and produce a smooth continuation that works very well for small losses (20ms or less), but does not sound good when the number of missing packets leads to gaps of 60ms or more. In those latter cases the speech becomes robotic and repetitive, a characteristic sound that is unfortunately familiar to many internet voice callers.

https://ai.googleblog.com/2020/04/improving-audio-quality-in-duo-with.html?m=1

Interesting to listen to the click track examples. The experience I have with video conferences is rather less jarring. Guess that the system is not really optimized for clicks.

john burke said,

May 15, 2020 @ 5:02 pm

An app called JamKazam claims to have solved the latency problem; there are a few impressive clips on the Web site. I haven't tried it, and every knowledgeable person I've talked to says the latency problem is a matter of physics and can't be "solved". I don't know. I would really like to be able to play chamber music in real time over the Net; Groupmuse (q.v.) are working around this by actually getting musicians together IRL to record and then distributing the videos. But it's not real-time ensemble playing, and it doesn't follow strict social distancing. I can't read the WSJ article but would be very interested to know how other musicians are dealing with this.

Philip Taylor said,

May 16, 2020 @ 3:56 am

I am sure that you did not mean this as I initially interpreted it, John, but when you say "I would really like to be able to play chamber music in real time over the Net", I am sure that you realise that this is a physical impossibility: there will always be a delay inherent in the transmission mechanism, and thus that which you eventually hear will never be in "real time" but rather delayed by some finite amount that will depend in part on the number of hops through which the packets must pass on their journey from recording studio to you.

john burke said,

May 16, 2020 @ 10:58 am

@Philip Taylor: I meant it in a kind of aspirational way, I guess. You and many others who understand the electronics of this far better than I do are unanimous in saying what I have in mind is physically impossible. On the other hand, the JamKazam people, if no one else, insist they can overcome this constraint, and a combination of ignorance and hope makes me willing to give the app a try. Their Web site does include some videos of people apparently doing what I have in mind, and the app is free; I realize you and everyone else I've consulted believe it can't be done, but I'm willing to put in a few hours to see if somehow they've suspended the laws of nature.

Philip Taylor said,

May 16, 2020 @ 11:06 am

Well, I look forward to reading your review, John, but I am afraid that I remain sceptical — "real-time music via the Internet" and "free energy" both carry the same taint of snake oil for me …

Gregory Kusnick said,

May 16, 2020 @ 4:01 pm

Depends on what you mean by "real time". By my figuring, large orchestras must tolerate edge-to-edge latencies on the order of 50-100 ms just due to the propagation of sound in air (~3 ms/m). So that's the standard JamKazam has to meet to make live online collaboration feasible. My uninformed guess is that for legato chamber music or trippy acid rock it probably works OK; tight staccato funk might be a different story.
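As a rough check on the ~3 ms/m figure, here is a small sketch. It assumes a speed of sound of 343 m/s (dry air at about 20 °C), and the function names are illustrative.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at about 20 degrees C


def acoustic_delay_ms(distance_m):
    """Time in milliseconds for sound to travel distance_m through air."""
    return distance_m / SPEED_OF_SOUND_M_PER_S * 1000.0


def distance_for_delay_m(delay_ms):
    """Distance in metres that sound covers in delay_ms milliseconds."""
    return delay_ms / 1000.0 * SPEED_OF_SOUND_M_PER_S


print(f"1 m of air: {acoustic_delay_ms(1.0):.2f} ms")
for delay in (50, 100):
    print(f"{delay} ms of delay: {distance_for_delay_m(delay):.1f} m of air")
```

Under that assumption, one metre costs a little under 3 ms, and delays of 50 to 100 ms correspond to roughly 17 to 34 metres between players.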