Syllables

From a physical point of view, syllables reflect the fact that speaking involves oscillatory opening and closing of the vocal tract at a frequency of about 5 Hz, with associated modulation of acoustic amplitude. From an abstract cognitive point of view, each language organizes phonological features into a sort of grammar of syllabic structures, with categories like onsets, nuclei and codas. And it's striking how directly and simply the physical oscillation is related to the units of the abstract syllabic grammar — there's no similarly direct and simple physical interpretation of phonological features and segments.

This direct and simple relationship has a psychological counterpart. Syllables seem to play a central role in child language acquisition, with words following a gradual development from very simple syllable patterns, through closer and closer approximations to adult phonological and phonetic norms. And as Lila Gleitman and Paul Rozin observed in 1973 ("Teaching reading by use of a syllabary", Reading Research Quarterly), "It is suggested on the basis of research in speech perception that syllables are more natural units than phonemes, because they are easily pronounceable in isolation and easy to recognize and to blend."

In 1975, Paul Mermelstein published an algorithm for "Automatic segmentation of speech into syllabic units", based on "assessment of the significance of a loudness minimum to be a potential syllabic boundary from the difference between the convex hull of the loudness function and the loudness function itself." Over the years, I've found that even simpler methods, based on selecting peaks in a smoothed amplitude contour, also work quite well (see e.g. Margaret Fleck and Mark Liberman, "Test of an automatic syllable peak detector", JASA 1982; and slides on Dinka tone alignment from EFL 2015).

In this post, I'll present a simple language-independent syllable detector, and show that it works pretty well. It's not a perfect algorithm or even an especially good one. The point is rather that "syllables" are close enough to being amplitude peaks that the results of a simple-minded, language-independent algorithm are surprisingly good, so that maybe self-supervised adaptation of a more sophisticated algorithm could lead in interesting directions.

Code (in GNU Octave) is here. The method is to

- calculate the amplitude spectrum in a 16 msec window 100 times a second;

- at each time step, subtract the sum of the frequencies above 3 kHz from the sum of the frequencies below 3 kHz;

- set negative values to zero;

- smooth the resulting time function by convolving with a 70-msec Hamming window;

- select all peaks whose value is greater than 4% of the maximum peak value.
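As a rough illustration of the five steps above, here is a minimal Python sketch. It is not the linked Octave program: the function names, the rectangular analysis window, the naive DFT (used only to keep the example dependency-free), and the tie-breaking rule for peaks are all assumptions made for this example.

```python
import cmath
import math


def band_difference(frame, sample_rate, pivot_hz=3000.0):
    """Summed amplitude below the pivot minus summed amplitude above it, floored at 0."""
    n = len(frame)
    low = high = 0.0
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        # Naive DFT coefficient (assumption: rectangular window, no FFT library).
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(frame))
        if k * sample_rate / n < pivot_hz:
            low += abs(coeff)
        else:
            high += abs(coeff)
    return max(low - high, 0.0)  # negative values are set to zero


def raw_contour(samples, sample_rate, window_s=0.016, frame_rate=100):
    """One band-difference value per 10 ms step, each from a 16 ms window."""
    width = int(round(window_s * sample_rate))
    hop = sample_rate // frame_rate
    return [band_difference(samples[i:i + width], sample_rate)
            for i in range(0, len(samples) - width + 1, hop)]


def hamming_smooth(values, width):
    """Convolve with a unit-sum Hamming window of `width` frames (same length out)."""
    win = [0.54 - 0.46 * math.cos(2 * math.pi * i / (width - 1))
           for i in range(width)]
    total = sum(win)
    half = width // 2
    out = []
    for i in range(len(values)):
        acc = 0.0
        for j, w in enumerate(win):
            idx = i + j - half
            if 0 <= idx < len(values):
                acc += w * values[idx]
        out.append(acc / total)
    return out


def pick_peaks(contour, frame_rate=100, rel_threshold=0.04):
    """Return (time_s, relative_value) for local maxima above 4% of the top peak."""
    peaks = [i for i in range(1, len(contour) - 1)
             if contour[i - 1] < contour[i] >= contour[i + 1]]
    if not peaks:
        return []
    top = max(contour[i] for i in peaks)
    return [(i / frame_rate, contour[i] / top)
            for i in peaks if contour[i] > rel_threshold * top]


def detect_syllable_peaks(samples, sample_rate, smooth_s=0.07):
    """Chain the steps: band difference, 70 ms Hamming smoothing, peak picking."""
    frame_rate = 100
    contour = raw_contour(samples, sample_rate, frame_rate=frame_rate)
    width = int(round(smooth_s * frame_rate))
    return pick_peaks(hamming_smooth(contour, width), frame_rate)
```

Calling `detect_syllable_peaks(samples, 16000)` on a list of audio samples would return time/relative-value pairs in the same form as the peak listings later in this post, though the exact values will differ from the Octave program's output.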

I set the three free parameters of the algorithm (the 3 kHz pivot frequency, the smoothing time constant, and the peak threshold) based on common sense phonetics and experimentation with a half-dozen English sentences. The resulting algorithm was then applied without any tuning to a set of 6000 Chinese sentences (120 from each of 50 speakers), as described in Jiahong Yuan et al., "Chinese TIMIT: A TIMIT-like corpus of standard Chinese", O-COCOSDA 2017. (This dataset is soon to be published by the LDC.)

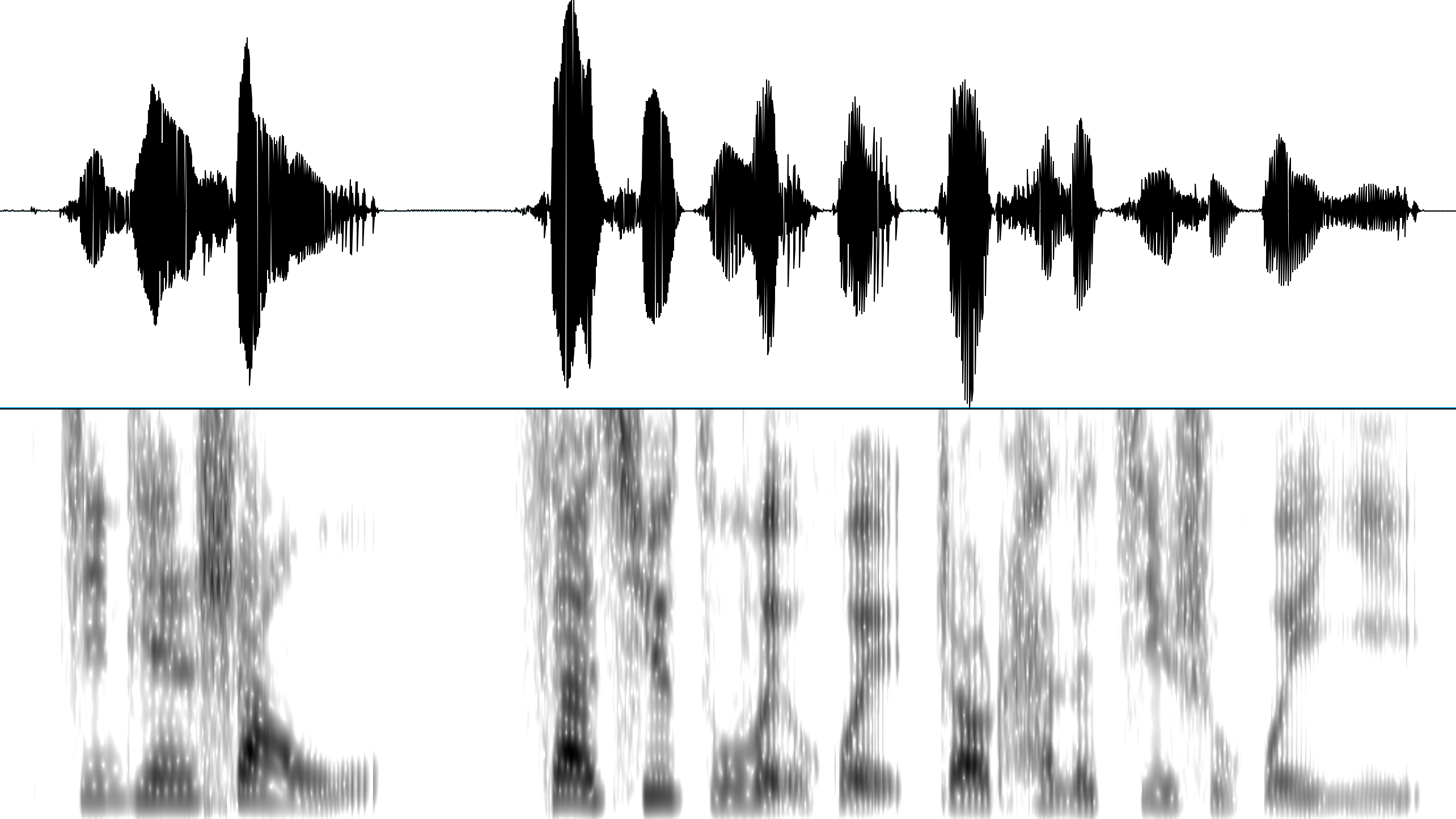

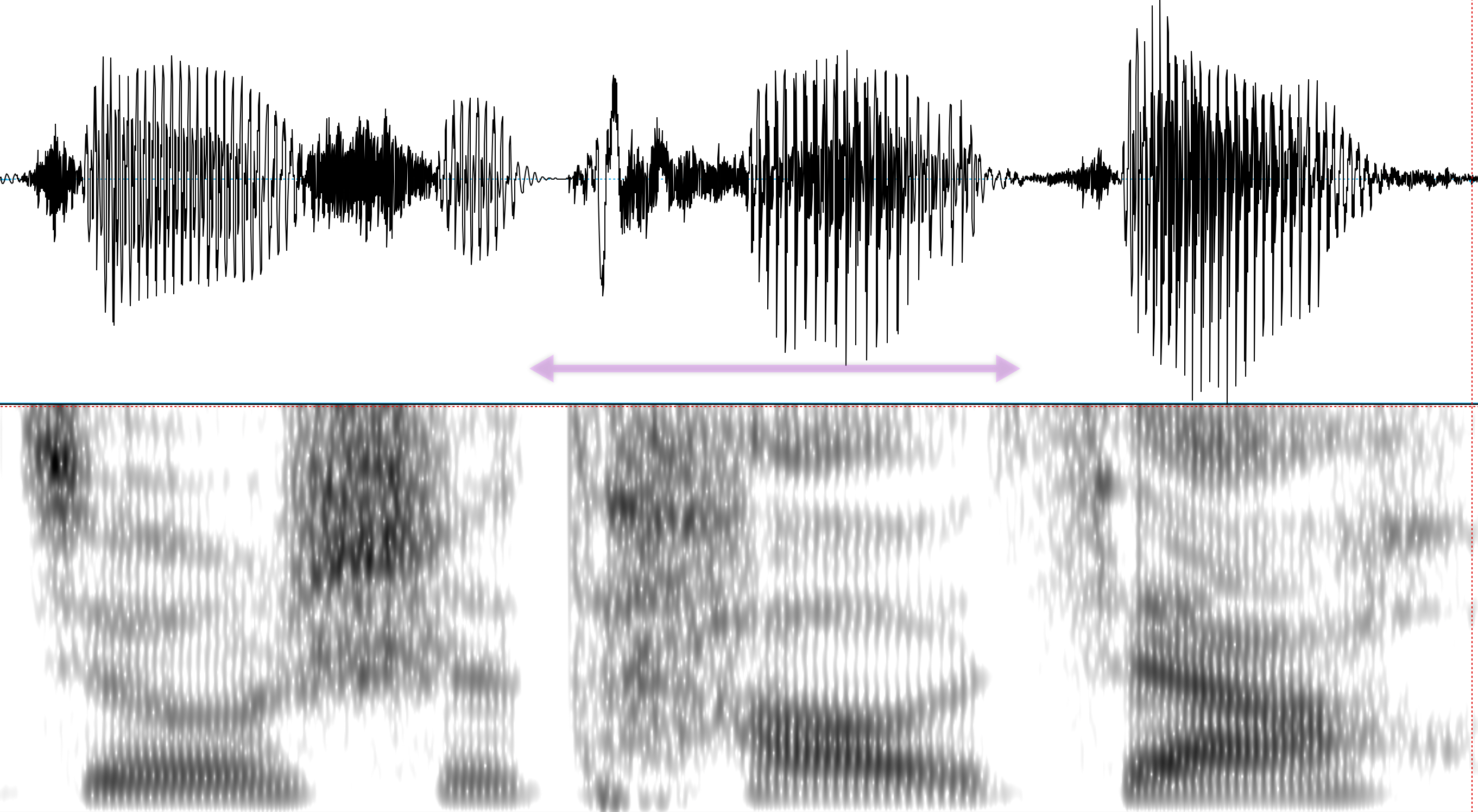

Here's the audio, waveform and spectrogram for a randomly-selected example:

Here's the forced alignment of a phone-level transcription, parsed into syllables, with each syllable preceded by its estimated starting and ending times in seconds:

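| Start (s) | End (s) | Syllable |
|---|---|---|
| 0.2325 | 0.3925 | j in |
| 0.3925 | 0.5725 | t ian |
| 0.5725 | 0.8425 | sh ang |
| 0.8425 | 1.0225 | u |
| 1.3425 | 1.5525 | s ai |
| 1.5525 | 1.7325 | q v |
| 1.7325 | 1.8925 | z u |
| 1.8925 | 2.0225 | ui |
| 2.0225 | 2.3025 | h ui |
| 2.3025 | 2.4925 | zh ao |
| 2.4925 | 2.6625 | k ai |
| 2.6625 | 2.7425 | l e |
| 2.7425 | 2.9325 | j i |
| 2.9325 | 3.0725 | sh u |
| 3.0725 | 3.2425 | h ui |
| 3.2425 | 3.5425 | i |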

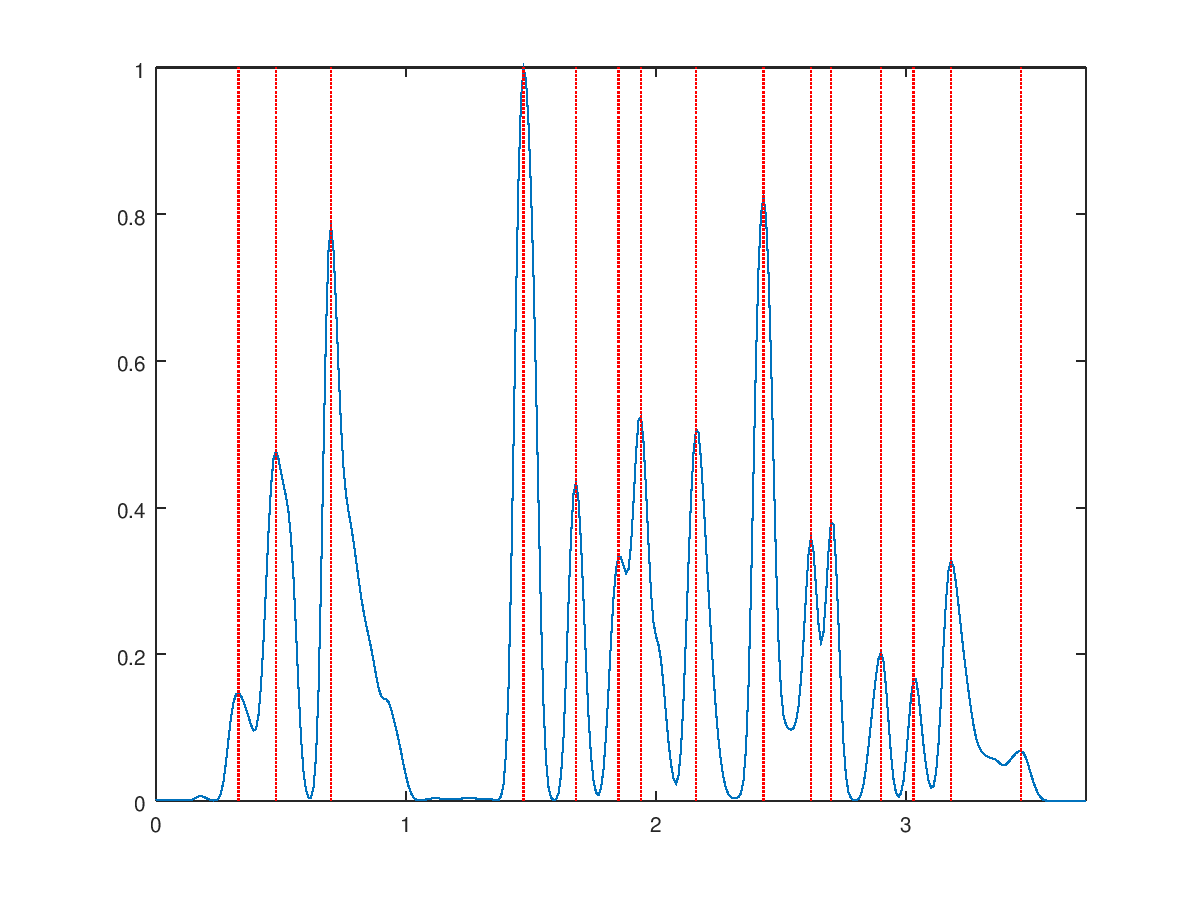

Here's a plot showing the smoothed amplitude contour for the same recording, with the peaks indicated with vertical red lines:

Here are the times and relative values of amplitude peaks, as output by the program given above:

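| Time (s) | Relative amplitude |
|---|---|
| 0.330 | 0.147 |
| 0.480 | 0.476 |
| 0.700 | 0.784 |
| 1.470 | 1.000 |
| 1.680 | 0.434 |
| 1.850 | 0.333 |
| 1.940 | 0.524 |
| 2.160 | 0.507 |
| 2.430 | 0.824 |
| 2.620 | 0.359 |
| 2.700 | 0.380 |
| 2.900 | 0.202 |
| 3.030 | 0.166 |
| 3.180 | 0.328 |
| 3.460 | 0.068 |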

Lining those peaks up with the lexical-phonological syllables, we see that there is one miss and no false alarms:

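| Start (s) | End (s) | Syllable | Peak time (s) |
|---|---|---|---|
| 0.2325 | 0.3925 | j in | 0.330 |
| 0.3925 | 0.5725 | t ian | 0.480 |
| 0.5725 | 0.8425 | sh ang | 0.700 |
| 0.8425 | 1.0225 | u | MISSING |
| 1.3425 | 1.5525 | s ai | 1.470 |
| 1.5525 | 1.7325 | q v | 1.680 |
| 1.7325 | 1.8925 | z u | 1.850 |
| 1.8925 | 2.0225 | ui | 1.940 |
| 2.0225 | 2.3025 | h ui | 2.160 |
| 2.3025 | 2.4925 | zh ao | 2.430 |
| 2.4925 | 2.6625 | k ai | 2.620 |
| 2.6625 | 2.7425 | l e | 2.700 |
| 2.7425 | 2.9325 | j i | 2.900 |
| 2.9325 | 3.0725 | sh u | 3.030 |
| 3.0725 | 3.2425 | h ui | 3.180 |
| 3.2425 | 3.5425 | i | 3.460 |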
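The alignment itself is simple enough to sketch in a few lines of Python. The scoring rule below is an assumption for illustration, since the post does not spell out the exact procedure: a syllable interval with no peak counts as a miss, and any peak beyond the first in an interval (or outside every interval) counts as a false alarm. The function name `score_peaks` is hypothetical; the interval and peak values are copied from the listings above.

```python
# Forced-alignment syllable intervals (start, end, label) from this example.
SYLLABLES = [
    (0.2325, 0.3925, "j in"), (0.3925, 0.5725, "t ian"),
    (0.5725, 0.8425, "sh ang"), (0.8425, 1.0225, "u"),
    (1.3425, 1.5525, "s ai"), (1.5525, 1.7325, "q v"),
    (1.7325, 1.8925, "z u"), (1.8925, 2.0225, "ui"),
    (2.0225, 2.3025, "h ui"), (2.3025, 2.4925, "zh ao"),
    (2.4925, 2.6625, "k ai"), (2.6625, 2.7425, "l e"),
    (2.7425, 2.9325, "j i"), (2.9325, 3.0725, "sh u"),
    (3.0725, 3.2425, "h ui"), (3.2425, 3.5425, "i"),
]

# Detected peak times in seconds from this example.
PEAKS = [0.330, 0.480, 0.700, 1.470, 1.680, 1.850, 1.940, 2.160,
         2.430, 2.620, 2.700, 2.900, 3.030, 3.180, 3.460]


def score_peaks(syllables, peak_times):
    """Return (misses, false_alarms) under the assumed scoring rule."""
    counts = [0] * len(syllables)
    stray = 0
    for t in peak_times:
        for i, (start, end, _label) in enumerate(syllables):
            if start <= t < end:
                counts[i] += 1
                break
        else:
            stray += 1  # peak falls outside every syllable interval
    misses = sum(1 for c in counts if c == 0)
    false_alarms = stray + sum(c - 1 for c in counts if c > 1)
    return misses, false_alarms


print(score_peaks(SYLLABLES, PEAKS))  # (1, 0)
```

Under this rule the example gives one miss and no false alarms, matching the count stated above.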

The miss is due to failure to catch the zero-onset syllable /u/:

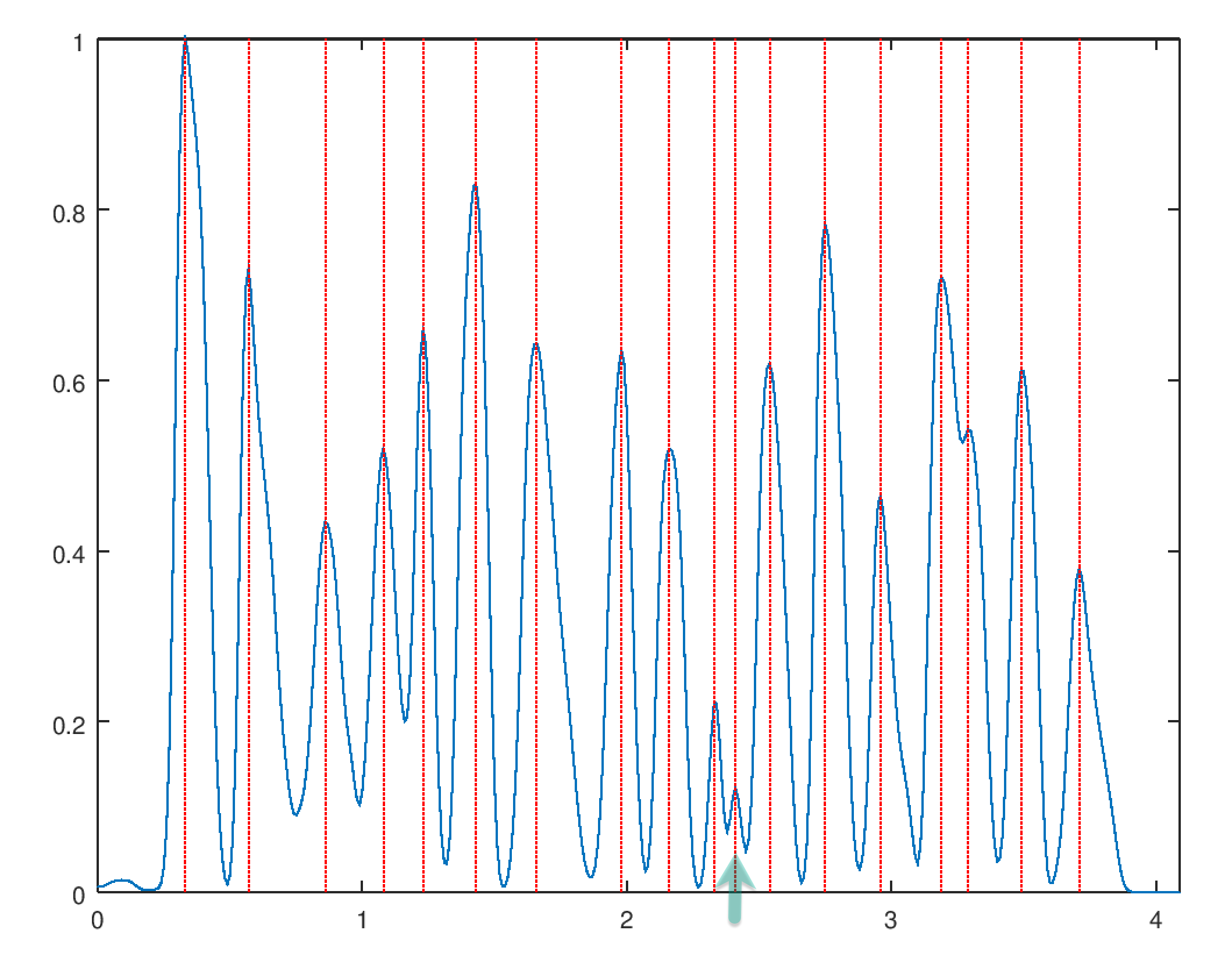

That disyllabic sequence /sh ang u/ might plausibly be a single syllable in some other language:

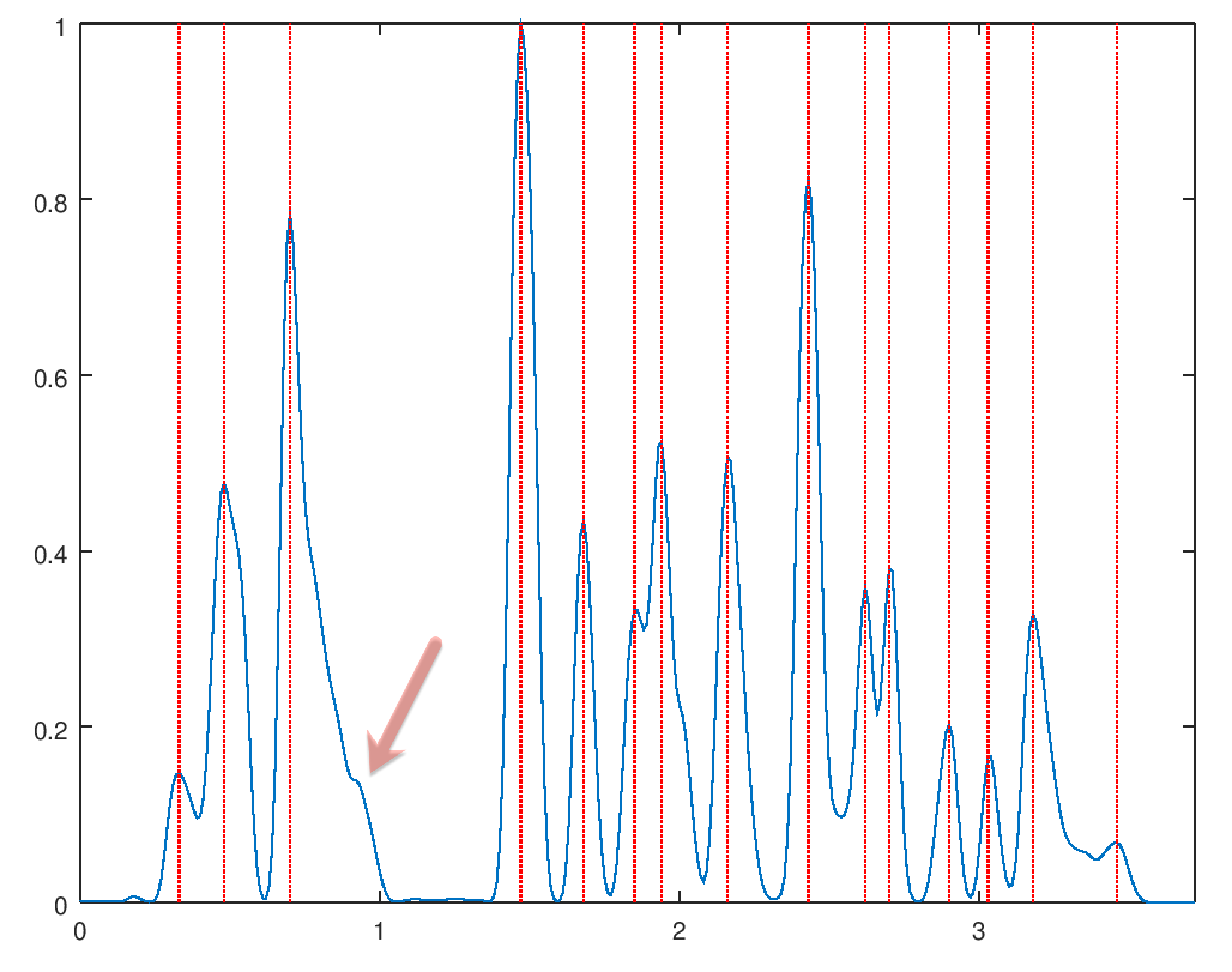

Here's another randomly-selected sentence from the same collection:

In this case, there are no misses but one false alarm — two peaks in the syllable /t ao/:

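| Start (s) | End (s) | Syllable | Peak time(s) (s) |
|---|---|---|---|
| 0.2125 | 0.4525 | g ou | 0.330 |
| 0.4525 | 0.7325 | f ang | 0.570 |
| 0.7325 | 0.9725 | t an | 0.860 |
| 0.9725 | 1.1725 | p an | 1.080 |
| 1.1725 | 1.2925 | d e | 1.230 |
| 1.2925 | 1.4925 | g uo | 1.430 |
| 1.4925 | 1.8825 | ch eng | 1.660 |
| 1.8825 | 2.0525 | ie | 1.980 |
| 2.0525 | 2.2225 | j iu | 2.160 |
| 2.2225 | 2.3725 | sh iii | 2.330 |
| 2.3725 | 2.6325 | t ao | 2.410, 2.540 |
| 2.6325 | 2.8425 | j ia | 2.750 |
| 2.8425 | 3.0825 | h uan | 2.960 |
| 3.0825 | 3.2625 | j ia | 3.190 |
| 3.2625 | 3.3825 | d e | 3.290 |
| 3.3825 | 3.5725 | g uo | 3.490 |
| 3.5725 | 3.8725 | ch eng | 3.710 |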

Looking at the waveform and spectrogram for the /t ao/ syllable in context, it seems that there may have been some microphone breath capture:

Anyhow, across the whole set of 6000 sentences and 88,724 "true" syllables, we get

| Measure | Value |
|---|---|
| N syllables | 88724 |
| N misses | 6706 |
| N false alarms | 8262 |
| Precision | 0.915 |
| Recall | 0.924 |
| F1 | 0.920 |

…where "precision" is the proportion of detected syllables that are valid; "recall" is the proportion of valid syllables that are detected; and F1 is the harmonic mean of precision and recall, i.e. 2*precision*recall/(precision+recall).
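To make the arithmetic concrete, here is a small Python sketch of the recall and F1 calculations. Recall is computed from the syllable and miss counts reported above. Precision is taken as the reported 0.915, because the post does not spell out exactly how that figure was derived from the counts. The function names are hypothetical.

```python
def recall(n_syllables, n_misses):
    """Proportion of valid syllables that were detected."""
    return (n_syllables - n_misses) / n_syllables


def f1(precision, recall_value):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall_value / (precision + recall_value)


r = recall(88724, 6706)
print(f"{r:.3f}")             # 0.924
print(f"{f1(0.915, r):.3f}")  # 0.920
```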

Again, it would be easy to tune the algorithm to do better, and a more sophisticated modern deep-learning approach would doubtless do much better still. But the point is that the basic correspondence between phonological syllables and amplitude peaks in decent-quality speech is surprisingly good, and using this correspondence to focus the attention of (machine or human) learners is probably a good idea.

Update — See also "English syllable detection", 2/26/2020.

Chris Button said,

February 24, 2020 @ 6:48 am

I wonder how it would fare with Tashlhiyt Berber

[(myl) Point me at a Berber corpus (with syllable-level transcriptions) and I'll give it a try :-)…

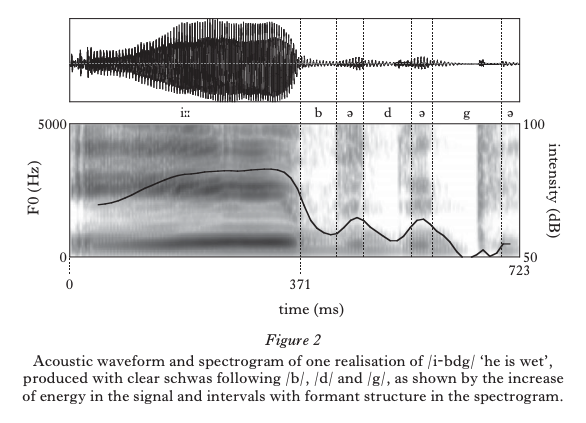

Meanwhile, what I get from Rachid Ridouane's work on Berber is that the long strings of consonants mostly emerge as individual phonetic syllables with epenthetic schwas, with variation due to both prosodic and information-structural considerations, e.g. (from Questions de phonologie berbère à la lumière de la phonétique expérimentale):

or from "A story of two schwas: a production study from Tashlhiyt":

Of course it's true that the syllabic phonology-phonetics correspondence is more (or less) transparent in some languages than in others. English is certainly less transparent than Putonghua, because of cases like "owl" or "hour" where opinions differ about the syllable count, and because of unstressed syllables that may be phonetically elided or merged with their neighbors, etc. ]

Chris Button said,

February 24, 2020 @ 7:11 am

I also wonder how much more advanced linguistics would be as a discipline had it been dominated in its formative years by linguists whose first languages were written in scripts that prominently maintain the notion of the syllable…

[(myl) This point has often been made, implicitly or explicitly, by Japanese linguists among others — see e.g. Osamu Fujimura, "Syllable as a unit of speech recognition", IEEE Transactions on Acoustics, Speech, and Signal Processing 1975:

Basic problems involved in automatic recognition of continuous speech are discussed with reference to the recently developed template matching technique using dynamic programming. Irregularities in phonetic manifestations of phonemes are discussed and it is argued that the syllable, phonologically redefined, will serve as the effective minimal unit in the time domain. English syllable structures are discussed from this point of view using the notions of “syllable features” and “vowel affinity.”

]

Idran said,

February 24, 2020 @ 9:04 am

Wait, there actually is a physical connection with syllables? I thought that they were purely a construct. What about English words that people disagree on syllable count for, like "fire"? That can't just be accent or dialect, because I've had that mini-argument with people that share both with me.

[(myl) The physical/cognitive association remains strong in such cases, in the sense that both signal-processing algorithms (like the linked program) and human judgments (as you suggest) are variable and inconsistent. In other words, when human perception is clear, the physical correspondence is generally also clear; and when not, generally not. ]

Victor Mair said,

February 24, 2020 @ 9:24 am

Since many languages (e.g., English and even Mandarin and other Sinitic topolects as they are spoken in daily life, as I have demonstrated numerous times on Language Log) cannot readily be written syllabically without distorting what is actually said, I don't think it would be wise or realistic to wish / imagine that linguistics in its formative years would have been dominated by the notion of the syllable. As it actually developed, linguistic science offers the possibility of syllabic analysis when that is called for, but it also allows for sound stream analysis when that is suitable.

See, for example:

Drew V. McDermott, Mind and Mechanism (Cambridge, MA: M.I.T. Press, 2001), p. 75.

Bruce J. MacLennan, "Continuous Computation and the Emergence of the Discrete", in Karl Pribram, ed., Origins: Brain and Self Organization (Hillsdale, NJ: Lawrence Erlbaum Associates, 1994), p. 139, here focusing on the division into words rather than into syllables, but the same principle of truncation applies.

[(myl) But of course it's even more true that speech can't be written segmentally without serious uncertainty and distortion — see e.g. "On beyond the (International Phonetic) Alphabet". The correspondence between signals and symbols is always complex at best. The point here is that looking at speech through syllabic spectacles brings signals and symbols into a surprisingly close relationship, many times simpler and more direct than the alternatives. This doesn't mean that writing systems should be syllabaries — that's part of an entirely different set of questions.]

Sally Thomason said,

February 24, 2020 @ 9:41 am

I wonder how your program would work on Montana Salish words like sxwcst'sqa (a monosyllable; should have hacheks over the c & 2nd s; and the xw is a labialized velar fricative, not a sequence of x + w, so it's only a 7-consonant onset cluster, not an 8-consonant cluster). There's audio on line, for instance here: http://www.salishaudio.org/SalishLC/SalishLC.html

— if you click on the Place Names button, you'll find some mostly monosyllabic place names, often with fairly elaborate consonant clusters.

The transcriptions usually tell you how many vowels are in the word.

[(myl) Thanks! I've got to go teach now, followed by other appointments of various kinds, so I might not be able to get to this before tomorrow, but I'll take a look.]

KevinM said,

February 24, 2020 @ 9:57 am

So Shakespeare was onto something with the "syllable of recorded time."

Bob Ladd said,

February 24, 2020 @ 10:51 am

While everyone is giving you their favourite "but what will your algorithm do with X?" example, let me add the case of Vowel-Vowel sequences across word boundaries, as commonly encountered in e.g. Italian and Greek. Ordinary sentences like Mario ha ordinato una pizza [marioaordinatounapitsa] 'Mario ordered a pizza' have sequences like [oao] and [ou] which are often said to involve 'elision' but generally preserve at least some of the spectral and temporal cues to the presence of sequential vowels. I suspect that these would fall under your rubric of "when human perception is not clear, the physical correspondence won't be clear either", but it would still be interesting to see how your algorithm deals with such cases.

Jonathan said,

February 24, 2020 @ 11:55 am

Your description of the problem sounds a lot like ones that Hidden Markov Chain Models are good for. I've never used them myself, but I was wondering if they've found any application here.

Barbara Phillips Long said,

February 24, 2020 @ 1:33 pm

Is “vocal track” a typo (or faulty autocorrect) for “vocal tract”?

[(myl) Yes. Fixed now — thanks!]

david said,

February 24, 2020 @ 2:06 pm

Was Pāṇini a linguist and is Sanskrit syllabic?

Chris Button said,

February 25, 2020 @ 12:24 am

@ Idran

Might I recommend J. C. Wells' 1990 paper "Syllabification and Allophony". His approach runs counter to the received orthodoxy so, despite being correct in my opinion, it unsurprisingly hasn't been adopted by most. To quote Wells:

@ Victor Mair

To Prof. Liberman's point, I wasn't referring to the pros and cons of different writing systems.

Regarding linguistic theory in general, I actually think it provides many ways to avoid having to deal with the syllable. The shortcomings of the approach really come into focus in the comparative historical sphere because the further back one goes the harder it becomes to avoid the syllable. I can really do no better than quote Søren Egerod's comments on Old Chinese in his 1970 paper "Distinctive Features and Phonological Reconstructions":

Unfortunately, only Edwin Pulleyblank made significant strides in this manner, but once again it wasn't widely accepted because it flew in the face of the received orthodoxy (presumably that's an unfortunate reality of much of academia). And now we have to suffer overly prescriptive Old Chinese reconstructions based on a confusion of surface phonetics with underlying phonology that do the opposite of Egerod's recommendation.

Idran said,

February 25, 2020 @ 8:14 am

@Chris: Thanks for the rec, I'll check it out!

Jonathan Smith said,

February 25, 2020 @ 9:23 am

A different kind of test case for such systems might be the continuum in Japanese between prosodic systems considered to be based exclusively on the "syllable" (Kagoshima, where if I understand correctly, [tai], [kan], and the like require unitary treatment) and systems considered to be based exclusively on the mora (Osaka, where prosody points consistently to independent [ta], [i], [ka], [n], etc.)

See e.g. Kubozono 2004, Tone and syllable in Kagoshima Japanese

@Chris Button

Regarding the syllable as a fundamental unit at the cognitive and/or articulatory level doesn't mean favoring some particular treatment of vowels vis-a-vis consonants in descriptions or reconstructions… right? Egerod does not seem to be saying that "[emptying] the vowels of all features which can possibly be placed outside them" is a great analytical result.

@Victor Mair

I think your LL illustrations are about challenges for traditional character representation, but the examples in question remain perfectly amenable to syllable segmentation IMO… er 兒 of written Mandarin is the most obvious but far from the only illustration.

And in the recording surely xiao4qu1 校區 not sai4qu1 賽區? Or are my ears failing?

Chris Button said,

February 25, 2020 @ 12:29 pm

@ Jonathan Smith

Right. What he's saying is the following:

…i.e., let's please not be too prescriptive (shame hardly anyone listened).

Surely we can apply a moraic analysis to English too. Take Idran's comment about "fire" above, which could theoretically be treated as one syllable with two moras, could it not?

Jonathan Smith said,

February 26, 2020 @ 3:22 am

@ Chris Button

My thought was would e.g. chi-i-sa-i, se-n-se-i be split properly by reference to amplitude? These units are syllables for practical purposes in (much of) Japanese, a situation not comparable to (and highly counterintuitive to speakers of) English, among many others…

Re: abstract representations of "features", there is the native tradition for representing medieval Chinese syllables, and Branner's transcription system thereof, and even Baxter 1992 is very abstract. But Pulleyblank complained bitterly about such systems, seeing them as evasive and dodging "commitments"… so I was not sure why you said that he made "significant strides" in the direction described by Egerod.

Chris Button said,

February 26, 2020 @ 3:45 pm

@ Jonathan Smith

Well Baxter's Middle Chinese "notation" is just that and can't be used as a good testing ground for Old Chinese forms. As for Old Chinese, here's a quote from Pulleyblank's review of Baxter (1992) regarding the "front vowel hypothesis":

Whether you assign that palatal feature to the onset, vowel or coda or to the syllable as a whole is a matter of conjecture. In the case of velar codas, we can see from the rhyming that the palatal feature was strongly attracted to the coda. But even then, was it /jk/, and to what degree did that surface more like an off-glide from the preceding vowel than as a palato-velar, or was it indeed fully co-articulated as /kʲ/, but then to what degree did it surface as /c/? And if it was /c/, was it post-palatal due to its velar /k/ origin or had it already shifted to a more alveolar location on account of its merger as /c/ with the /t/ coda after /ə/ in palatalized environments? The reality would have been different for different speakers at different time depths. Old Chinese was a living language after all, not a bunch of immutable symbols on a page.

Well the "n", which can't be analyzed as a long vowel or a diphthong, can be quite distinct in singing for timing purposes. Take a listen to the word "kanjite" (感じて) at the 1:44-1:46 time in this song for example in which the "n" of "kan" 感 is quite clearly a distinct unit.

https://www.youtube.com/watch?v=tTNybXzqb-g

I'm a little out of my depth, but at least with what is essentially a sonorant coda, I suppose it comes down to the ability to approximate a syllable when afforded the requisite length. For example, I'd imagine it's the sonority of the final lateral in "feel" that explains why it sometimes sounds like it consists of two syllables while "feed" and "feet" never do (the "l" coda basically has its own in-built schwa, which for me is the syllable)

Oded Ghitza & David Poeppel said,

February 26, 2020 @ 5:28 pm

A proposal: the theta-syllable as a unit of speech information defined by cortical function

Highlighting the relevance of syllables in the ongoing debate on phonemes or syllables, Mark Liberman described a low-complexity, powerful algorithm to detect vocalic nuclei. The accuracy is demonstrated by showing that the extracted markers are situated within the reference starting and ending times of syllables parsed from a phone-level transcription.

The question arises whether these markers can be used to develop a language-independent syllable detector. At the heart of a reliable syllable detector is a definition for an acoustic correlate of the syllable. Even though the syllable is a construct that is central to our understanding of speech, syllables are not readily observable in the speech signal, and even competent English speakers can have difficulty syllabifying a given utterance. A corollary to this observation is that a consistent acoustic correlate to the syllable is hard (if not impossible) to define: vocalic nuclei alone are insufficient for defining the syllable boundaries (even though they provide audible cues that correspond to syllable “centers”). However, the robust presence of vocalic nuclei is crucial in considering a new unit of speech information, an alternative but related to the classic syllable.

Oscillation-based models of speech perception postulate a cortical computation principle by which the speech decoding process is performed on acoustic chunks defined by a time-varying window structure synchronized with the input and generated by a segmentation process (e.g., Ghitza, 2011; Giraud and Poeppel, 2012). At the pre-lexical level, the segmentation process is realized by a flexible theta oscillator locked to the input syllabic rhythm, wherein the theta cycles constitute the syllabic windows. A theta cycle is set by an evolving phase-locking process (e.g., a VCO in a PLL circuit), during which the code is generated. The acoustic cues to which the theta oscillator is locked are still under debate (acoustic edges? vocalic nuclei?). If we postulate vocalic nuclei, a unit of speech information defined by cortical function emerges (Ghitza, 2013).

Definition: The theta-syllable is a theta-cycle long speech segment located between two successive vocalic nuclei.

According to oscillation-based models, cortical theta is in sync with the stimulus syllabic rhythm. Thus, the theta-syllable – the speech chunk marked by the theta cycle – is the corresponding VΣV segment, where Σ stands for a consonant cluster. Given the prominence of vocalic nuclei in adverse acoustic conditions, the theta-syllable is also robustly defined.

Ghitza O (2013) The theta-syllable: a unit of speech information defined by cortical function. Front. Psychology, 4:138. doi:10.3389/fpsyg.2013.00138

Ghitza O (2011) Linking speech perception and neurophysiology: speech decoding guided by cascaded oscillators locked to the input rhythm. Front. Psychology 2:130. doi:10.3389/fpsyg.2011.00130

Giraud AL, Poeppel D (2012) Cortical oscillations and speech processing: emerging computational principles and operations. Nat. Neurosci. 15:511–517. doi:10.1038/nn.3063

Jonathan Smith said,

February 27, 2020 @ 4:25 am

@ Chris Button WRT (most) Japanese, I don't think there is any doubt about the fundamental rhythmic units at issue, but to what extent phonetic correlates of these units resemble those of other languages could be of interest (and I'm sure well-studied). That this native rhythm (generally) prevails despite the importation of vast quantities of Chinese and now European "heavy syllable"-like units (with consonant codas/diphthongs/etc. in their original forms) is kind of remarkable, IMO.

Re: early Chinese, Pulleyblank, for good or ill, strongly favored phonetic detail over what he saw as hand-wavy abstraction, so I would say the caution re: feature arrangement above is yours, not in general his…

Chris Button said,

February 27, 2020 @ 12:29 pm

@ Jonathan Smith

Yes, on reflection, I think you are probably correct that I'm imputing to him something that I lean more toward than he did. Having said that, his methodology is certainly more amenable to that way of thinking than others. To quote Pulleyblank again from the same review in terms of Baxter's approach to rhyming:

But, yes, the above does not talk about arrangement of features but rather just their existence.

Chris Button said,

February 27, 2020 @ 12:32 pm

…and Egerod is talking about both "manifestation and placement within the syllable" (italics mine)

Chris Button said,

February 27, 2020 @ 5:30 pm

A comparison of syllable structure in Proto-Indo-European (PIE) and Old Chinese (OC) is quite interesting for its distinctions in relation to their (quasi-)vowelessness in which "ə" (represented in PIE as "e") represents the default "syllable":

– In Proto-Indo-European /j/ and /w/ pattern as the other sonorants (e.g. /l/, /n/, /m/, etc.) in being able to occur as the syllabic nucleus (although only /j/ and /w/ afford us the transcriptional flexibility of /i/ and /u/ and hence the perception of high vowels in PIE).

– In Old Chinese, only the glide category of sonorants could occur as the nucleus such that a syllable of the type CjC was possible (in which C = consonant, and schwa is inherent in the glide) but something like CnC was not.

The above of course means that I am now going to need to retract my earlier response of "right" to this earlier comment:

…and also partially take back my comment saying "Yes, on reflection, I think you are probably correct" :)

The reason is that the premise of schwa as the syllable, which makes Egerod's comment of an "extreme feature analysis" possible, is contingent on what is essentially a state of vowelessness. And hence Pulleyblank's favoring of phonetic detail in OC (his approach to Middle Chinese is different) seems to me to come down more to a desire to identify the features that would have colored the resonance of a syllable than any desire to rigidly fix them at a particular point in the syllable.

Chris Button said,

February 28, 2020 @ 12:56 am

Separately, regarding moras, an analysis of Kuki Chin languages could be really interesting.

Many of them (and all the northern ones I studied) have long/tense vowels before short/lax sonorant codas and short/lax vowels before long/tense sonorant codas.

The source of the distinction is the same as the Type A/B syllable distinction in Old Chinese as pointed out by others. But any attempts to find a direct one-to-one comparison have not been successful for a variety of prosodic, morphological and diachronic phonological reasons (a little too much to discuss here). In a response to a review of his Handbook of Proto Tibeto Burman, Jim Matisoff made a general observation about the folly of trying to find perfect one-to-one comparisons in all cases along the parameter of vowel length in this manner, but his comments don't seem to have been heeded.