How many possible English tweets are there?

And how long would it take to read them all out loud?

Randall Munroe answers these questions today at xkcd's what if? page — the answer involves Claude Shannon, a rock 100 miles wide and 100 miles high, and a very long-lived bird (or perhaps a reliable species of birds). You should definitely read the whole thing.

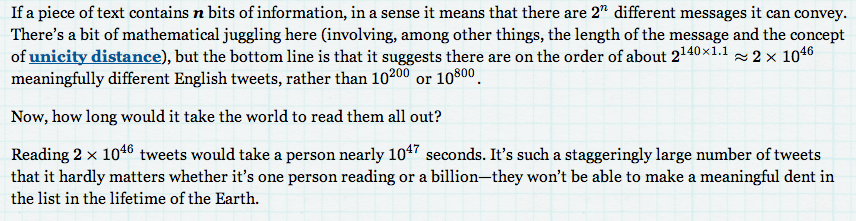

Randall calculates the number of tweets based on Claude Shannon's estimate of 1.1 bits per letter in English text, giving him an estimate of 2^(140×1.1) ≈ 2×10^46 distinct tweets.
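Munroe's count is Shannon's per-character figure multiplied out over 140 characters and then exponentiated. A minimal Python sketch of that step, assuming the 1.1 bits/character estimate and a fixed 140-character length (the constant names are illustrative):

```python
BITS_PER_CHAR = 1.1   # Shannon's estimate for English, as used by Munroe
TWEET_LENGTH = 140    # maximum characters per tweet

bits_per_tweet = TWEET_LENGTH * BITS_PER_CHAR   # about 154 bits
n_tweets = 2 ** bits_per_tweet                   # 2^154, roughly 2.3e46

print(f"{bits_per_tweet:.0f} bits per tweet -> about {n_tweets:.1e} tweets")
```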

Let's check the rest of his arithmetic, just to be sure.

100 miles is 16,093,440 centimeters, and a cube of rock 16,093,440 centimeters on a side is about 4.17e+21 cubic centimeters. We're told that

[E]very thousand years, the bird arrives and scrapes off a few invisible specks of dust from the top of the hundred-mile mountain with its beak. When the mountain is worn flat to the ground, that’s the first day of eternity. The mountain reappears and the cycle starts again for another eternal day. 365 eternal days—each one 10^32 years long—makes an eternal year. […] Reading all the tweets takes you ten thousand eternal years.

So it takes 10^32/1000 = 10^29 bird-visits to carry off 4.17×10^21 cc of rock, or 4.17×10^21/10^29 = 4.17e-08 cc per bird-visit. That's about 40 billionths of a cc of rock per visit; since the density of rock is about 2.5 grams/cc, the bird has to remove and carry off about 100 nanograms of rock per visit.
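The volume and per-visit figures can be rechecked in a few lines of Python. This sketch treats the mountain as a 100-mile cube, as the arithmetic above does, and uses the same rough 2.5 g/cc rock density; the constant names are illustrative.

```python
CM_PER_MILE = 160_934.4          # 1 mile = 1.609344 km exactly
ETERNAL_DAY_YEARS = 1e32         # years in one eternal day
YEARS_PER_VISIT = 1_000          # the bird comes once a millennium
ROCK_DENSITY_G_PER_CC = 2.5      # rough density of rock

side_cm = 100 * CM_PER_MILE                    # 16,093,440 cm
volume_cc = side_cm ** 3                       # mountain treated as a cube: ~4.17e21 cc
visits = ETERNAL_DAY_YEARS / YEARS_PER_VISIT   # 1e29 visits per eternal day
cc_per_visit = volume_cc / visits              # ~4.17e-8 cc
ng_per_visit = cc_per_visit * ROCK_DENSITY_G_PER_CC * 1e9   # grams -> nanograms

print(f"{volume_cc:.3g} cc, {cc_per_visit:.3g} cc/visit, {ng_per_visit:.0f} ng/visit")
```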

That counts as "a few invisible specks of dust from the top of the hundred-mile mountain", or close enough for poetry.

The rest of the calculation seems to come up a bit short, if I haven't made a mistake in arithmetic, since ten thousand eternal years is 10000*365*10^32 = 3.65e+38 regular years, which in turn is about 3.65e+38*365*24*60*60 ≈ 1.15e+46 seconds, rather than the 10^47 that Randall calculated that we actually need. But at this point, a factor of ten hardly matters.
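The eternal-year conversion can be checked the same way. This sketch assumes 365-day years with no leap days, as the calculation above does, and takes 10^47 seconds as Randall's target; the names are made up for illustration.

```python
ETERNAL_YEARS = 10_000
ETERNAL_DAYS_PER_YEAR = 365
YEARS_PER_ETERNAL_DAY = 1e32
SECONDS_PER_YEAR = 365 * 24 * 60 * 60    # 31,536,000; leap days ignored
RANDALL_SECONDS = 1e47                    # the figure Randall says is needed

years = ETERNAL_YEARS * ETERNAL_DAYS_PER_YEAR * YEARS_PER_ETERNAL_DAY   # 3.65e38
seconds = years * SECONDS_PER_YEAR                                     # ~1.15e46
shortfall = RANDALL_SECONDS / seconds                                  # a bit under 10

print(f"{years:.3g} years = {seconds:.3g} s; short by a factor of {shortfall:.1f}")
```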

MikeM said,

February 26, 2013 @ 1:20 pm

So a gazillion monkeys typing a gazillion tweets per second might, in a gazillion millennia, produce one meaningful tweet — or not.

Neil Coffey said,

February 26, 2013 @ 1:40 pm

The information content per character in a *tweet* may be a little higher than in plain English text, of course, because in a tweet it is the norm that people abbreviate and use what in plain English might actually be "ungrammatical" utterances.

D.O. said,

February 26, 2013 @ 3:08 pm

Well. Mr. Munroe's post is obviously something in between a joke and an attempt to educate people in the basics of communication theory, but the premise of the central part of the post is probably wrong. For the purpose of calculating the number of tweets, information theory as employed by Mr. Munroe is not very useful. It matters a lot from a probability point of view whether the utterance is "OMG, the volcano is erupting" or "OMG, the volcano is erudite", but it would take about the same time to read. So, given an eternity of eternities or thereabouts to read them all, it does not actually matter how much entropy there is between these two twits.

To view it from another angle: in a paper by Mr. Shannon referenced by Mr. Munroe (and copied on the LL site. Hmm?), the number of English words is estimated at about 9000, which might be more or less reasonable for the purpose of calculating entropy, but extremely stupid if one wants to estimate how voluminous English dictionaries should be.

[(myl) Someone who has a large inventory of tweets — which would be all of us if the Library of Congress ever gets off its butt and fulfills the agreement in the deed of gift under which Twitter donated its complete archive for research use! — could easily estimate the per-character entropy of the actual phenomenon…

The estimated entropy will indeed probably be higher than Shannon's estimate. So maybe it'll be 100k or 1M Eternal Years rather than 10k. Either way, I'm not holding my breath.]
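For readers who want to try the kind of estimate myl describes, here is a crude sketch of one way to do it: a plug-in estimate of the entropy of the next character given the previous `order` characters, computed from raw counts. It is a stand-in, not Shannon's method (he used human prediction over long contexts). A low-order model on a large corpus tends to overstate the true entropy rate, while plug-in counts on a small corpus tend to understate it. The function name and the `\x02` start padding are hypothetical choices.

```python
import math
from collections import Counter

def conditional_char_entropy(texts, order=1):
    """Plug-in estimate of H(next char | previous `order` chars), in bits/char."""
    context_counts = Counter()   # how often each context is followed by a character
    joint_counts = Counter()     # how often each (context, next char) pair occurs
    for text in texts:
        padded = "\x02" * order + text   # hypothetical start-of-text padding
        for i in range(order, len(padded)):
            context = padded[i - order:i]
            context_counts[context] += 1
            joint_counts[(context, padded[i])] += 1
    total = sum(joint_counts.values())
    entropy = 0.0
    for (context, _), n in joint_counts.items():
        p_joint = n / total                   # p(context, char)
        p_cond = n / context_counts[context]  # p(char | context)
        entropy -= p_joint * math.log2(p_cond)
    return entropy

# Toy sample built from phrases in this thread; a real estimate needs a large corpus.
sample = ["OMG, the volcano is erupting", "OMG, the volcano is erudite",
          "I am reading a book", "I am reading a boolean-algebra textbook"]
for k in range(3):
    print(k, round(conditional_char_entropy(sample, order=k), 3))
```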

J.W. Brewer said,

February 26, 2013 @ 3:42 pm

Natural language (English included) is an analog phenomenon. It doesn't come in strings of 1's and 0's (and to the extent one can now turn a recording of speech into a string of 1's and 0's, the problem of translating that string into the right sequence of phonemes and then that sequence into the right sequence of written English words with conventional orthography and spacing is not a trivial one). For various purposes it may be useful to substitute in a crude schematic model of natural language that does involve strings of 1's and 0's because such a model is easier to manipulate mathematically than a more accurate description of the underlying reality would be. But don't ask me to take on faith that the results of your manipulation of the model will tell me something I should take as an accurate prediction about the empirically-existing world of natural language rather than just telling me something about your model and its limitations.

[(myl) But the question was about digital text-strings, not about utterances, so no digital transformation is required.]

Rubrick said,

February 26, 2013 @ 5:20 pm

@myl: Either way, I'm not holding my breath.

Weird, I was sure you were going to say "I'm not holding my bread."

D.O. said,

February 26, 2013 @ 5:21 pm

But my idea is that per-character entropy actually doesn't matter in answering a question like that…

J.W. Brewer said,

February 26, 2013 @ 5:42 pm

My intuition is the same as D.O.'s, although I expect it may be too untutored an intuition to be reliable. What you need to know is what percentage of some arbitrarily large number of 140-character strings (with a blank space eligible to be a character) will by chance turn out to be meaningful (syntactically and semantically well-formed, with some allowance for the informality of syntax and orthography common in tweeting) strings of words in a given natural language (say English, but you could pose the same question for any language whose speakers tweet using the same character set). Maybe there's a way to back into that via Shannon's quasi-constant of bits-per-letter, but since estimates of that constant (his own and others') have apparently ranged from around 0.6 to around 1.5 (with Munroe's 1.1 being an approximate midpoint of that range), I'm skeptical, unless this is a sort of "Fermi question" like the ones I did back in high school Science Olympiad, where the idea is just to come up with a plausible/defensible order-of-magnitude guesstimate in situations where it's not practicable to gather/analyze actual data.

I remain skeptical that it is even coherent to talk about natural language as containing "information" that can be quantified in "bits." Obviously, a written utterance digitally coded in a particular way (ASCII or some competitor) can be made to correspond to a particular quantifiable sequence of 1's and 0's (and I take it Shannon's work allows one to theorize about how short that sequence can be made without risking errors/ambiguities when it's decoded at the other end), but so what? Knowing the characteristics of the map as a distinct physical object doesn't necessarily tell you all that much about the territory it represents.

Bob Moore said,

February 26, 2013 @ 6:18 pm

2^(140*1.1) would be a lower bound on the number of possible English tweets, but it would only be a reasonable estimate of the number if all possible tweets were equally likely. If the probability distribution over possible tweets is highly skewed (as it surely is) the number of possible tweets would have to be much greater than this for the entropy of "twenglish" to be as high as 1.1 bits per character.

Rube said,

February 26, 2013 @ 7:13 pm

I wish Borges were alive to write a 140 character story about this.

KWillets said,

February 26, 2013 @ 8:01 pm

@Bob you bring up a good point, but I think it's the other way around. 1.1 bits/char is an estimate for the highly skewed, Zipfian-distributed corpus of common speech, where all possible tweets are *not* equally likely. When the skew is removed it's a higher number IMO.

KWillets said,

February 26, 2013 @ 8:27 pm

Just to give an example, in common speech the completion for "I am reading a boo_" would almost certainly be "k", i.e. it has a perplexity close to 1. In a flat-distributed corpus, the final word is as likely to be "boot" or "boor", etc., as it is to be "book", so the perplexity goes way up.
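KWillets's contrast can be made concrete with perplexity, which is 2 raised to the entropy in bits. The probabilities below are invented for illustration, not measured: a hypothetical next-letter distribution after "I am reading a boo" in ordinary text versus a flat one over the same four letters.

```python
import math

def perplexity(probs):
    """2 ** entropy (in bits) of a distribution given as {outcome: probability}."""
    entropy = -sum(p * math.log2(p) for p in probs.values() if p > 0)
    return 2 ** entropy

# Invented numbers: next letter after "I am reading a boo" (book, boot, boor, boos).
common_speech = {"k": 0.97, "t": 0.01, "r": 0.01, "s": 0.01}
flat = {letter: 0.25 for letter in "ktrs"}

print(round(perplexity(common_speech), 2))  # close to 1
print(perplexity(flat))                     # 4.0: four equally likely letters
```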

Peter Nelson said,

February 26, 2013 @ 9:16 pm

@J.W.Brewer

It is basically a Fermi question. I think the estimation is really "How many, out of all the possible 140 character strings, are syntactically valid English sentences." He doesn't really specify if they need to be semantically valid. He also points out that there is an (extreme) upper bound on this if you simply take the number of valid characters to the power of 140.

It certainly does make sense to talk about the number of bits a message encodes, whether that message is in a natural language or not. The formalism is completely about picking out items from some finite set. That's it. If I have N possible items, I can specify one uniquely with log_2(N) bits. If the items occur with varying frequency, I can come up with a more efficient coding system (= fewer bits needed on average), where I give shorter codes to more frequent items and longer codes to less frequent ones. Tada, entropy. We've already established that the number of 140 character tweets is finite, so the set of English ones must be too. We don't know the probabilities, but we don't really need to. The existence of the bits/character of English tweets has already been established. Randall is just offering a convenient way to estimate the # of English tweets from some experimental measurements of English bits/character.
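Peter Nelson's "shorter codes for more frequent items" is what a Huffman code does. A minimal sketch using Python's `heapq`, with made-up frequencies chosen so the numbers come out round; `huffman_code_lengths` is a hypothetical helper name.

```python
import heapq
import math
from itertools import count

def huffman_code_lengths(freqs):
    """Return {symbol: code length in bits} for a Huffman code over non-empty `freqs`."""
    tiebreak = count()   # keeps heap entries comparable without comparing dicts
    heap = [(f, next(tiebreak), {sym: 0}) for sym, f in freqs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:
        return {sym: 1 for sym in freqs}
    while len(heap) > 1:
        f1, _, a = heapq.heappop(heap)   # two least frequent subtrees
        f2, _, b = heapq.heappop(heap)
        merged = {sym: depth + 1 for sym, depth in {**a, **b}.items()}
        heapq.heappush(heap, (f1 + f2, next(tiebreak), merged))
    return heap[0][2]

freqs = {"a": 8, "b": 4, "c": 2, "d": 1, "e": 1}   # made-up counts
lengths = huffman_code_lengths(freqs)
total = sum(freqs.values())
avg_bits = sum(freqs[s] * lengths[s] for s in freqs) / total
entropy = -sum(f / total * math.log2(f / total) for f in freqs.values())
fixed_bits = math.ceil(math.log2(len(freqs)))   # fixed-length code for 5 symbols
print(lengths, avg_bits, entropy, fixed_bits)
```

With these frequencies the average code length is 1.875 bits, equal to the entropy, versus 3 bits for a fixed-length code over five symbols. Real frequencies rarely land on powers of two, so a Huffman code's average usually sits a little above the entropy.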

@KWillets I believe you are right.

Bob Moore said,

February 26, 2013 @ 9:57 pm

@KWillets, I know that the 1.1 bits per character comes from estimating the real, skewed distribution. The problem is that when you use that number, as Munroe does, to estimate a number of messages, what you get is the number of *equiprobable* 140 character messages that would yield an entropy of 1.1 bpc. For a fixed number of messages of a fixed length, the more skewed the distribution, the lower the entropy. Thus, 2^(140*1.1) 140 character messages, with a skewed probability distribution, would have a lower entropy than 1.1 bpc. To get the entropy up to 1.1 bpc, you either need to flatten the distribution or increase the number of messages.
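Bob Moore's point, that a skewed distribution over a fixed number of messages has lower entropy than a flat one, can be checked numerically. This sketch uses a Zipf distribution purely as a hypothetical stand-in for "skewed"; nothing in the thread says tweets are exactly Zipfian, and the helper names are illustrative.

```python
import math

def entropy_bits(probs):
    """Shannon entropy, in bits, of a list of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def zipf(n, s=1.0):
    """Hypothetical skewed distribution: p(k) proportional to 1 / k**s, k = 1..n."""
    weights = [1 / k ** s for k in range(1, n + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def messages_needed(target_bits, s=1.0):
    """First power of two n (doubling from 1) at which Zipf(n, s) reaches target_bits."""
    n = 1
    while entropy_bits(zipf(n, s)) < target_bits:
        n *= 2
    return n

N = 1024
print(entropy_bits([1 / N] * N))   # 10.0: uniform over 1024 messages
print(entropy_bits(zipf(N)))       # noticeably less than 10 for the same 1024
print(messages_needed(10))         # far more than 1024 messages to reach 10 bits
```

For a skewed distribution, then, 2 raised to the entropy understates the number of possible messages, which is the direction Bob Moore describes.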

maidhc said,

February 27, 2013 @ 1:14 am

I saw an art installation a few months ago which involved printing out tweets on ticker tape and hanging them up on a sort of clothesline. I guess the idea was if you knew you were using the steampunk version, would you send the same sort of messages? Or if you went around to someone's house and there were your tweets from last week hanging up in the living room?

If you look at how people sent information when the number of bits was limited, such as wartime telegrams, there was a lot of coding used for stock sentences. The telegraphers had books with codes assigned to the most common things people wanted to say in telegrams.

In the extreme version, suppose you have 60 characters available; then each of the 60^140 possible tweets could be treated as a binary number used as an index into a vast encyclopedia containing 60^140 entries, each of which was a sentence, nay paragraph, nay complete book.
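maidhc's index idea amounts to reading a padded tweet as a 140-digit base-60 numeral. A sketch, assuming a hypothetical 60-symbol alphabet (maidhc doesn't say which 60 characters); Python's arbitrary-precision integers handle the roughly 827-bit values directly.

```python
import string

# Hypothetical 60-symbol alphabet: 52 letters plus 8 punctuation/space characters.
ALPHABET = string.ascii_lowercase + string.ascii_uppercase + " .,!?'#@"
BASE = len(ALPHABET)   # 60
TWEET_LEN = 140

def tweet_to_index(tweet):
    """Read a tweet (at most 140 chars), space-padded to 140, as a base-60 numeral."""
    index = 0
    for ch in tweet.ljust(TWEET_LEN):
        index = index * BASE + ALPHABET.index(ch)   # ValueError if ch not in ALPHABET
    return index

def index_to_tweet(index):
    """Inverse of tweet_to_index (trailing padding spaces are stripped)."""
    chars = []
    for _ in range(TWEET_LEN):
        index, digit = divmod(index, BASE)
        chars.append(ALPHABET[digit])
    return "".join(reversed(chars)).rstrip()

total = BASE ** TWEET_LEN
print(len(str(total)), total.bit_length())   # 249 decimal digits, 827 bits
tweet = "OMG, the volcano is erupting"
print(index_to_tweet(tweet_to_index(tweet)) == tweet)
```

By comparison, Munroe's estimate assigns an English tweet about 154 bits.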

maidhc said,

February 27, 2013 @ 1:16 am

My <sup> worked in preview, why not when I posted?

Matt said,

February 27, 2013 @ 4:44 am

Randall is just offering a convenient way to estimate the # of English tweets from some experimental measurements of English bits/character.

I agree, but I think it's important to note that this method relies on the implicit assumption that "English tweet" can be defined as "140-character string analogous in all relevant ways to those used by Shannon in his experiments." This is fine, of course — it's the only way to get a number that's more interesting than 2^140, due to the Mxyztplk problem Munroe alludes to — but it leaves plenty of room for argument over the extent to which that assumption is justified.

One interesting thing about this method is that, if I understand it correctly, while it implies the concept of "meaningfully different", it doesn't let us look under the hood and see how that shakes out for individual strings. For example, the question of whether "I like pie" and "I liek pie" are meaningfully different (and therefore counted separately) is itself meaningless, because we aren't really counting strings at all. This does let us cut the million little Gordian knots of minor variation, e.g. we don't have to consciously decide whether to treat "liek" as an accidental typo or a meaningful lolcat typo, but it is also mysterious and somewhat frustrating in that it doesn't let us get a good sense of what there are 2×10^46 of.

MattF said,

February 27, 2013 @ 7:54 am

Here's a better 'bottom up' rather than 'top down' way: feed multiple sets of existing English tweets to a 'travesty generator':

http://en.wikipedia.org/wiki/Travesty_generator

and then do some statistics on the multi-letter correlation structures that the program generates. I predict the result will be a lot smaller than 2^140.
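A travesty generator is essentially a character-level Markov chain: it records which characters follow each run of `order` characters in the input, then samples from those observed followers. A minimal sketch, assuming `\x02`/`\x03` as hypothetical start/end markers and an order of 3 by default; the function names are illustrative.

```python
import random
from collections import defaultdict

def build_model(texts, order=3):
    """Map each `order`-character context to the list of characters seen after it."""
    model = defaultdict(list)
    for text in texts:
        padded = "\x02" * order + text + "\x03"   # hypothetical start/end markers
        for i in range(order, len(padded)):
            model[padded[i - order:i]].append(padded[i])
    return model

def travesty(model, order=3, max_len=140, rng=random):
    """Generate text by repeatedly sampling an observed follower of the current context."""
    context = "\x02" * order
    out = []
    while len(out) < max_len:
        nxt = rng.choice(model[context])
        if nxt == "\x03":   # end of a training text reached
            break
        out.append(nxt)
        context = context[1:] + nxt
    return "".join(out)

rng = random.Random(42)   # fixed seed so runs are repeatable
toy_tweets = ["I am reading a book", "I am reading a boolean-algebra textbook",
              "I am reading a booster seat's assembly instructions"]
model = build_model(toy_tweets, order=3)
for _ in range(3):
    print(travesty(model, order=3, rng=rng))
```

Training it on a real tweet archive and then measuring how predictable its contexts are is the kind of statistics MattF has in mind; the sketch only covers the generation side.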

J.W. Brewer said,

February 27, 2013 @ 12:33 pm

Even beyond decodeable misspellings and the Mxyztplk problem (perhaps in the "science fiction" register of English there is no possible string of characters under some maximum-length limit that is not a possible proper noun – you could come up with a very large number of well-formed sentences using the template "I'm thinking about writing an sf novella in which the main alien race will be called the _____"), there's the distinction between probability and possibility. So, it's highly useful in many contexts to know that a string beginning "I am reading a boo" is overwhelmingly likely to have a k as its next character, but that's not the question here. It's not at all hard to come up with not-implausible example sentences (not even involving the rather metaphorical activity of reading boots or boors) like "I am reading a boolean-algebra textbook" or "I am reading a boob-joke anthology edited by that guy who just hosted the Oscars" or "I am reading a booster seat's assembly instructions." Each of those counts, for purposes of these questions, as having exactly the same weight as "I am reading a book." They are comparatively unlikely in terms of how often they'd pop up in a large corpus of tweets, but they're not wilfully improbable stunts along the lines of "My hovercraft is full of eels." (Is there a technical term for that sort of example sentence? It's sort of pragmatically ill-formed.) Even if you were to weed out the latter sort of thing, or some of the science fiction examples, there will be a large (almost arbitrarily large but still not infinite given the length constraint, and probably still much much smaller than the number of incoherent character strings under the same length constraint that could be generated) number of individually low-probability but perfectly acceptable English tweets.

peter said,

February 27, 2013 @ 1:15 pm

maidhc said (February 27, 2013 @ 1:14 am):

"I saw an art installation a few months ago which involved printing out tweets on ticker tape and hanging them up on a sort of clothesline."

The grounds of Shinto shrines in Japan typically have prayers written (vertically, as is to be expected) on long narrow strips of paper hung vertically from trees.

Maureen said,

February 27, 2013 @ 10:32 pm

But why is the Raven of Cwm Cawlwyd attacking innocent mountains like that?

Douglas Bagnall said,

February 28, 2013 @ 7:41 pm

Contra J.W. Brewer (“Natural language is an analog phenomenon”), I would have thought that, using the engineering framework whence the terms arose, the linguistic content of spoken language is largely digital while its paralinguistic counterpoint is mostly analogue. A digital signal is defined as consisting of discrete symbols, while an analogue one encodes meaning on a continuum. It seems to me that language is digital on two levels — words and phonemes. You can't usefully explore the continuum between “bit” and “bat” to make intermediate meanings. Or to put it another way, if natural language is an analogue phenomenon, what is it an analogy for?

This is notwithstanding the written nature of tweets, as Mark points out. Writing has been purely digital for ever.

J.W. Brewer said,

March 1, 2013 @ 1:20 pm

Douglas Bagnall may be right on the discrete/continuous distinction; I was probably using "analog" in a looser sense meaning "not digital" while using "digital" in a tighter sense meaning "usefully doable via binary code that can be stored/manipulated via currently-existing hardware and software." Obviously a string in base 10 or base 16 or base 39 or what have you can easily be converted to binary. When it comes to the lexicon of a natural language, however (including words, productive morphemes, stock idiomatic phrases with non-compositional meanings etc), it's what? Base 30,000? Base 50,000? A lot more for a highly-inflected language if you count each inflected form of a root separately? That's probably an unanswerable question because the number of different "digits" is not only very very large (although not infinite) it's probably in certain senses indeterminate, which makes it challenging to losslessly convert a "signal" in base-umptyjillion into binary. As I understand it (to oversimplify a much more complex story), computers have not gotten better at simulating fluency in natural language because we've gotten all that much better at realistically modeling human language use in a way that can be implemented "digitally" in the sense I gave above; rather the orders-of-magnitude increase in cheap raw computing power has enabled various brute-force approximations and workarounds as a way of sidestepping the problems that everyone optimistically assumed would easily be solved when research started X decades ago but which proved much less tractable as time proceeded.

Faldone said,

March 5, 2013 @ 6:49 am

For anyone who thinks Randall Munroe takes himself too seriously, here's a quote from his latest what-if:

Linkgebliebenes 13 « kult|prok said,

March 12, 2013 @ 12:30 pm

[…] networking is these days, and so are foreign languages. Good thing we now know how many different English tweets are possible. With an offer like this you have to pounce; I'm going crazy, and dogs moral. […]