Emotion detection


Taylor Telford, "‘Emotion detection’ AI is a $20 billion industry. New research says it can’t do what it claims", WaPo 7/31/2019:

In just a handful of years, the business of emotion detection — using artificial intelligence to identify how people are feeling — has moved beyond the stuff of science fiction to a $20 billion industry. Companies such as IBM and Microsoft tout software that can analyze facial expressions and match them to certain emotions, a would-be superpower that companies could use to tell how customers respond to a new product or how a job candidate is feeling during an interview. But a far-reaching review of emotion research finds that the science underlying these technologies is deeply flawed.

The problem? You can’t reliably judge how someone feels from what their face is doing.

A group of scientists brought together by the Association for Psychological Science spent two years exploring this idea. After reviewing more than 1,000 studies, the five researchers concluded that the relationship between facial expression and emotion is nebulous, convoluted and far from universal.

The cited study is Lisa Feldman Barrett et al., "Emotional Expressions Reconsidered: Challenges to Inferring Emotion From Human Facial Movements", Psychological Science in the Public Interest, July 2019:

It is commonly assumed that a person’s emotional state can be readily inferred from his or her facial movements, typically called emotional expressions or facial expressions. This assumption influences legal judgments, policy decisions, national security protocols, and educational practices; guides the diagnosis and treatment of psychiatric illness, as well as the development of commercial applications; and pervades everyday social interactions as well as research in other scientific fields such as artificial intelligence, neuroscience, and computer vision. In this article, we survey examples of this widespread assumption, which we refer to as the common view, and we then examine the scientific evidence that tests this view, focusing on the six most popular emotion categories used by consumers of emotion research: anger, disgust, fear, happiness, sadness, and surprise. The available scientific evidence suggests that people do sometimes smile when happy, frown when sad, scowl when angry, and so on, as proposed by the common view, more than what would be expected by chance. Yet how people communicate anger, disgust, fear, happiness, sadness, and surprise varies substantially across cultures, situations, and even across people within a single situation. Furthermore, similar configurations of facial movements variably express instances of more than one emotion category. In fact, a given configuration of facial movements, such as a scowl, often communicates something other than an emotional state. Scientists agree that facial movements convey a range of information and are important for social communication, emotional or otherwise. But our review suggests an urgent need for research that examines how people actually move their faces to express emotions and other social information in the variety of contexts that make up everyday life, as well as careful study of the mechanisms by which people perceive instances of emotion in one another. 
We make specific research recommendations that will yield a more valid picture of how people move their faces to express emotions and how they infer emotional meaning from facial movements in situations of everyday life. This research is crucial to provide consumers of emotion research with the translational information they require.

Oddly, neither the WaPo story nor the APS review mentions the RBF meme. And the cited research deals with the analysis of facial expressions, but there's also a great deal of work on "emotion detection", "sentiment analysis", "opinion mining" and so on from text and speech inputs; this is relevant in analyzing things like online reviews and customer service phone calls. One reason that this is a business ($20 billion or otherwise) is discussed in Sharon Terlep's 8/3/2019 WSJ article, "Everyone Hates Customer Service. This is Why." (Subtitle: "Technology lets companies see how badly they can treat consumers, right up until the moment they bolt".)

And although the subtitle of the WaPo "Emotion Detection" article is "Artificial intelligence advanced by such companies as IBM and Microsoft is still no match for humans", in fact the biggest problem in this general area is that humans are not very good at the task either — at least in the sense that inter-annotator agreement is depressingly low.

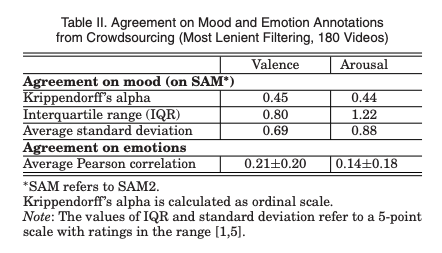

For example, from Christina Katsimerou et al., "Crowdsourcing empathetic intelligence: the case of the annotation of EMMA database for emotion and mood recognition", ACM Transactions on Intelligent Systems and Technology 2016, we get these results for inter-annotator agreement in labeling valence and arousal dimensions for a set of videos:

This page on Krippendorff's alpha notes that

Values range from 0 to 1, where 0 is perfect disagreement and 1 is perfect agreement. Krippendorff suggests: “[I]t is customary to require α ≥ .800. Where tentative conclusions are still acceptable, α ≥ .667 is the lowest conceivable limit."
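For readers who want to see what the number measures: alpha compares the disagreement observed among annotators on the same item (D_o) with the disagreement expected if ratings were paired at random across all items (D_e), as alpha = 1 - D_o/D_e. So 1 means perfect agreement, 0 means chance-level agreement, and negative values are possible when disagreement is systematic. Below is a minimal sketch for interval-scaled ratings such as valence or arousal scores. It assumes one list of ratings per clip, with None for a missing annotator; the function name and the ratings are hypothetical, and this is not the EMMA authors' code.

```python
from itertools import permutations
from typing import List, Optional, Sequence


def krippendorff_alpha_interval(ratings: Sequence[Sequence[Optional[float]]]) -> float:
    """Krippendorff's alpha with the interval (squared-difference) metric.

    ratings[u] lists every annotator's value for unit u; None marks a missing rating.
    (Hypothetical helper, written for illustration.)
    """
    # Only units rated at least twice contribute "pairable" values.
    units: List[List[float]] = [[v for v in unit if v is not None] for unit in ratings]
    units = [u for u in units if len(u) >= 2]
    values = [v for u in units for v in u]
    n = len(values)
    if n < 2:
        raise ValueError("need at least two pairable values")
    # Observed disagreement: squared differences within each unit, weighted by 1/(m_u - 1).
    d_o = sum(
        sum((a - b) ** 2 for a, b in permutations(u, 2)) / (len(u) - 1)
        for u in units
    ) / n
    # Expected disagreement: squared differences between all pairable values, ignoring units.
    d_e = sum((a - b) ** 2 for a, b in permutations(values, 2)) / (n * (n - 1))
    if d_e == 0:
        raise ValueError("all values are identical; alpha is undefined")
    return 1.0 - d_o / d_e


# Hypothetical valence ratings: one inner list per clip, one entry per annotator.
print(round(krippendorff_alpha_interval([[1, 2], [3, 3]]), 3))           # → 0.727
print(round(krippendorff_alpha_interval([[1, 2, None], [3, 3, 3]]), 3))  # → 0.75
print(round(krippendorff_alpha_interval([[1, 2], [2, 1]]), 3))           # → -0.5
```

The last case shows why "0 to 1" is a simplification. The two annotators reverse each other on every clip, so they agree less than chance would predict, and alpha drops below zero.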

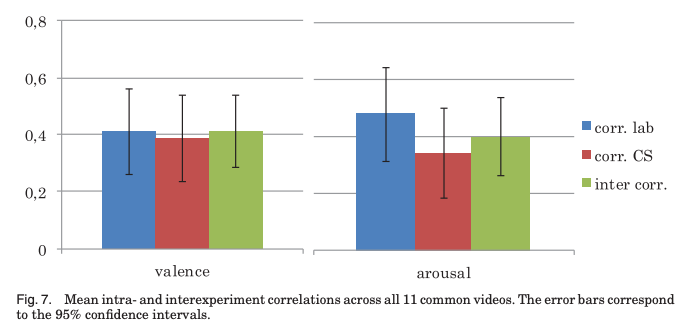

Correlations are higher after filtering to remove more unreliable results, and a bit higher still among carefully monitored-annotators in a more controlled lab environment — but still not great:

The authors observe that

The interannotator agreement, quantified through Krippendorff’s alpha and interquartile range, reveals acceptable agreement […], but is far from perfect. This may be partly due to noisy annotations that the filtering did not detect, and partly due to the inherent subjectivity of affective perception.
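The interquartile range mentioned here is the spread between the 25th and 75th percentiles of the ratings a clip receives. A small IQR means the annotators clustered tightly. The following is a minimal sketch. It assumes the IQR is computed per clip over all annotators' scores, although the paper's exact procedure may differ. The ratings and the helper name `rating_iqr` are hypothetical.

```python
from statistics import quantiles
from typing import Dict, List


def rating_iqr(ratings: List[float]) -> float:
    """Interquartile range of one clip's ratings; smaller means annotators agree more."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    # method="inclusive" treats the ratings as the whole population (linear interpolation).
    q1, _median, q3 = quantiles(ratings, n=4, method="inclusive")
    return q3 - q1


# Hypothetical valence ratings (1 = very negative, 5 = very positive) for three clips.
clips: Dict[str, List[float]] = {
    "clip_a": [4, 4, 5, 4, 4],  # near-consensus
    "clip_b": [1, 2, 3, 4, 5],  # annotators spread across the whole scale
    "clip_c": [2, 2, 3, 3, 3],
}
for name, r in clips.items():
    print(name, rating_iqr(r))  # clip_a 0.0, clip_b 2.0, clip_c 1.0
```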

Jerry Packard said,

August 4, 2019 @ 11:49 am

As the blurb notes, this is like 'sentiment analysis', which is performed during 'big data' analysis to evaluate texts and audio materials based on their emotional content, also relying upon things like the 'likes' etc. that accompany the data to perform their evaluation. Brain-wave analysis also does a pretty good job at detecting differences in emotion (and veracity) in participants.

Orbeiter said,

August 4, 2019 @ 4:53 pm

>The interannotator agreement […] is far from perfect. This may be partly due to noisy annotations that the filtering did not detect, and partly due to the inherent subjectivity of affective perception.

Expanding on this, one issue with posed facial images such as these (from the 2019 study):

https://bit.ly/33b10XL

is that one might annotate them according to:

1. What facial expression do they resemble in a real context?

2. What facial expression would a talented actor be performing?

3. What expression has the model probably been asked to perform?

My answers would be the same for images #1 and #4, but e.g. in the case of #5 I would answer:

1. Could be expressing mock sadness about a video of a cute bird being caught by a cat, or preparing to disagree about someone's slightly unfair or rude criticism of something. Could also be expressing a type of empathetic disappointment in someone. The physical location of other people or objects relative to the person pulling that face would provide more indication.

2. As above.

3. I would infer sadness, but that would be partly based on a guess about the type of expression (basic and emotional) the researchers would have included in this type of study.

In the case of #4:

1. Exaggerated mock surprise.

2. Either mock surprise, genuine but stylised surprise in a crowd shot of an action film, or fear/awe.

3. Surprise

I think it's difficult to bridge the gap between this methodology and inferences about how good humans (or indeed AI programs) are at inferring emotions in real contexts. The context of an interaction often supplies extra information that narrows down the plausible range of emotions, and in many cases a single label does not entirely describe someone's emotional state or what they are trying to communicate. Also, the emotions that someone's facial expression *rules out* may be more important than the specific emotion(s) they are experiencing. How much interannotator disagreement is merely about e.g. whether someone looks specifically scared, angry, consternated or offended (when an expression might in reality represent some mixture of those)? An AI program might be very useful even if it only assigns positive or negative valence to a person's reaction to something.
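The suggestion that coarse valence labels may be useful even when fine-grained labels disagree can be illustrated with a chance-corrected agreement statistic. The sketch below uses Fleiss' kappa, which is a swapped-in choice not mentioned in the post or the cited studies, on a small set of invented labels. The label-to-valence mapping and the helper names are hypothetical. A chance-corrected measure matters here because collapsing to fewer categories raises raw percent agreement on its own.

```python
from collections import Counter
from typing import Dict, List, Sequence


def fleiss_kappa(items: Sequence[Sequence[str]]) -> float:
    """Fleiss' kappa for items that each received the same number of categorical labels."""
    if not items:
        raise ValueError("need at least one item")
    n = len(items[0])  # labels per item
    if n < 2 or any(len(labels) != n for labels in items):
        raise ValueError("every item needs the same number (>= 2) of labels")
    n_items = len(items)
    # Per-item agreement: fraction of annotator pairs that chose the same label.
    p_bar = sum(
        (sum(c * c for c in Counter(labels).values()) - n) / (n * (n - 1))
        for labels in items
    ) / n_items
    # Chance agreement from the overall proportion of each label.
    totals = Counter(label for labels in items for label in labels)
    p_e = sum((c / (n_items * n)) ** 2 for c in totals.values())
    if p_e == 1.0:
        raise ValueError("only one label used; kappa is undefined")
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical mapping from fine-grained emotion labels to valence.
VALENCE: Dict[str, str] = {
    "scared": "negative", "angry": "negative", "offended": "negative",
    "consternated": "negative", "happy": "positive", "amused": "positive",
}


def to_valence(items: Sequence[Sequence[str]]) -> List[List[str]]:
    return [[VALENCE[label] for label in labels] for labels in items]


# Hypothetical labels from three annotators for four faces.
labels = [
    ["scared", "angry", "offended"],
    ["happy", "happy", "amused"],
    ["angry", "offended", "angry"],
    ["amused", "happy", "happy"],
]
print(round(fleiss_kappa(labels), 3))              # → 0.018
print(round(fleiss_kappa(to_valence(labels)), 3))  # → 1.0
```

The data are contrived so that annotators disagree only within a valence class. Real annotations would not collapse so neatly, but the mechanism is the same.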

Orbeiter said,

August 4, 2019 @ 4:57 pm

Correction: in the case of #6.

Brett said,

August 4, 2019 @ 6:53 pm

I was more struck by the fact that there is apparently a reporter out there named "Taylor Telford." Is this a coincidence, or was she named after the war crimes prosecutor Telford Taylor?

Benjamin E Orsatti said,

August 5, 2019 @ 6:52 am

"The problem? You can't reliably judge how someone feels from what their face is doing." A cogent conclusion, brought to us through the graces of the U.S. Department of Understanding Humanity ("DUH").

However, if there's money to be made in developing an application whereby pointing one's EyePhone in another's direction will instantly deliver access to the latter's inmost thoughts, it may just be a matter of time…

Thus, a plea to all you computational linguists out there, working towards the ultimate goal of "helping" computers to "understand" people: Just because you _can_ doesn't mean you _should_.

The Other Mark P said,

August 5, 2019 @ 2:50 pm

That polygraphs are rubbish, even in highly controlled circumstances and after decades of trying, makes me think these people are wasting their money.

Trogluddite said,

August 7, 2019 @ 7:29 pm

Like many other autistic people, I have endured "facial expression" array-selection tests multiple times. They're discussed quite often in the corners of the internet where autistic people gather, and my impression is that Orbeiter's question number 3 (essentially: "what is the expected answer?"), along with a healthy dose of the "ruling out" also mentioned, would be very familiar to many of them, as indeed they are to me.

So long as one is perceptually capable of discriminating the facial configurations, no emotional cognition whatsoever is required in order to learn the canonical answers. It quickly becomes little more than a shape-to-word mapping test, and one made all the easier if one has experienced similar pictures of such exaggerated gurning used as "therapeutic" tools, as they often are. As the "passing" of such tests is likely to please one's therapists, one's loved ones, and possibly oneself, there is plenty of incentive to simply commit the mapping to memory.

The kind of "affective perception" AI spoken about earlier is already being trialed as an assistive technology for autistic people, though it is far too early to say how effective it might be. My personal feeling is that it is unlikely to pick up on the very subtleties that are most perplexing to many autistic people, but that it might be more useful for helping with difficulties in directing one's attention.

As mentioned in the cited study, therapy aimed at improving autistic people's reading of faces often works well under controlled conditions, but fails in real-world interactions. A common reason for this is that autistic people's perception is often overwhelmed by distracting sensory input – the problem is often not so much one of recognition, but that one's brain is simply not analysing the right stream of incoming data at the right moment. A device which simply indicates that any expression worthy of note is on a person's face might be as useful as one which tries to determine what the expression means (and possibly without having to force the technology to run before it can walk).

OTOH, it could mean a future where every electronic device is as judgemental about my "atypical" affect as most humans are. I'd rather we didn't do that.